Jiaxing Li

CharacterShot: Controllable and Consistent 4D Character Animation

Aug 10, 2025

Abstract: In this paper, we propose CharacterShot, a controllable and consistent 4D character animation framework that enables any individual designer to create dynamic 3D characters (i.e., 4D character animation) from a single reference character image and a 2D pose sequence. We begin by pretraining a powerful 2D character animation model based on a cutting-edge DiT-based image-to-video model, which accepts any 2D pose sequence as a control signal. We then lift the animation model from 2D to 3D by introducing a dual-attention module together with a camera prior to generate multi-view videos with spatial-temporal and spatial-view consistency. Finally, we employ a novel neighbor-constrained 4D Gaussian splatting optimization on these multi-view videos, resulting in continuous and stable 4D character representations. Moreover, to improve character-centric performance, we construct a large-scale dataset, Character4D, containing 13,115 unique characters with diverse appearances and motions, rendered from multiple viewpoints. Extensive experiments on our newly constructed benchmark, CharacterBench, demonstrate that our approach outperforms current state-of-the-art methods. Code, models, and datasets will be publicly available at https://github.com/Jeoyal/CharacterShot.

Instance-Prototype Affinity Learning for Non-Exemplar Continual Graph Learning

May 15, 2025

Abstract: Graph Neural Networks (GNNs) suffer from catastrophic forgetting, which undermines their capacity to preserve previously acquired knowledge while assimilating novel information. Rehearsal-based techniques, which revisit historical examples, are a principal strategy for alleviating this phenomenon. However, memory explosion and privacy infringements impose significant constraints on their utility. Non-Exemplar methods circumvent these issues through Prototype Replay (PR), yet feature drift presents new challenges. In this paper, our empirical findings reveal that Prototype Contrastive Learning (PCL) exhibits less pronounced drift than conventional PR. Drawing upon PCL, we propose Instance-Prototype Affinity Learning (IPAL), a novel paradigm for Non-Exemplar Continual Graph Learning (NECGL). Exploiting graph structural information, we formulate Topology-Integrated Gaussian Prototypes (TIGP), guiding feature distributions towards high-impact nodes to augment the model's capacity for assimilating new knowledge. Instance-Prototype Affinity Distillation (IPAD) safeguards task memory by regularizing discontinuities in class relationships. Moreover, we embed a Decision Boundary Perception (DBP) mechanism within PCL, fostering greater inter-class discriminability. Evaluations on four node classification benchmark datasets demonstrate that our method outperforms existing state-of-the-art methods, achieving a better trade-off between plasticity and stability.

Lightweight Contrastive Distilled Hashing for Online Cross-modal Retrieval

Feb 28, 2025

Abstract: Deep online cross-modal hashing has recently gained much attention from researchers owing to its promising applications, which offer low storage requirements, fast retrieval, and cross-modality adaptability. However, there still exist some technical hurdles that hinder its application, e.g., 1) how to extract the coexistent semantic relevance of cross-modal data, 2) how to achieve competitive performance when handling real-time data streams, and 3) how to transfer the knowledge learned from offline to online training in a lightweight manner. To address these problems, this paper proposes lightweight contrastive distilled hashing (LCDH) for cross-modal retrieval, which bridges offline and online cross-modal hashing through similarity matrix approximation in a knowledge distillation framework. Specifically, in the teacher network, LCDH first extracts the cross-modal features with contrastive language-image pre-training (CLIP); after feature fusion, these are fed into an attention module for representation enhancement. Then, the output of the attention module is fed into an FC layer to obtain hash codes for aligning the sizes of the similarity matrices for online and offline training. In the student network, LCDH extracts the visual and textual features with lightweight models, and the features are then fed into an FC layer to generate binary codes. Finally, by approximating the similarity matrices, the performance of online hashing in the lightweight student network can be enhanced by the supervision of coexistent semantic relevance distilled from the teacher network. Experimental results on three widely used datasets demonstrate that LCDH outperforms some state-of-the-art methods.
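
The similarity-matrix approximation mentioned in this abstract can be illustrated with a small sketch. It is not the LCDH implementation: the loss form (mean squared difference), the scaling by code length, and the names `similarity_matrix` and `distillation_loss` are assumptions made for illustration. The point it shows is that a pairwise similarity matrix is n-by-n regardless of code length, so teacher and student codes of different lengths can still be compared.

```python
def similarity_matrix(codes):
    """Pairwise similarity of +/-1 hash codes, scaled to [-1, 1] by code length."""
    k = len(codes[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / k for cj in codes] for ci in codes]


def distillation_loss(teacher_codes, student_codes):
    """Mean squared difference between teacher and student similarity matrices.

    Assumed loss form for illustration; the abstract does not specify it.
    """
    s_t = similarity_matrix(teacher_codes)
    s_s = similarity_matrix(student_codes)
    n = len(s_t)
    return sum((s_t[i][j] - s_s[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)


# Hypothetical toy data: three items, 8-bit teacher codes, 4-bit student codes.
teacher = [
    [1, 1, -1, -1, 1, 1, -1, -1],
    [1, 1, -1, -1, 1, 1, -1, -1],
    [-1, -1, 1, 1, -1, -1, 1, 1],
]
student_good = [[1, -1, 1, -1], [1, -1, 1, -1], [-1, 1, -1, 1]]  # same pairwise structure
student_bad = [[1, -1, 1, -1], [-1, 1, -1, 1], [1, -1, 1, -1]]   # different pairwise structure

loss_good = distillation_loss(teacher, student_good)
loss_bad = distillation_loss(teacher, student_bad)
print(loss_good, loss_bad)
```

A student whose codes preserve the teacher's pairwise relationships gets zero loss even though its codes are half as long.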

Efficient Stochastic Polar Decoder With Correlated Stochastic Computing

Jan 29, 2025

Abstract: Polar codes have gained significant attention in channel coding for their ability to approach the capacity of binary input discrete memoryless channels (B-DMCs), thanks to their reliability and efficiency in transmission. However, existing decoders often struggle to balance hardware area and performance. Stochastic computing offers a way to simplify circuits, and previous work has implemented decoding using this approach. A common issue with these methods is performance degradation caused by the introduction of correlation. This paper presents an Efficient Correlated Stochastic Polar Decoder (ECS-PD) that fundamentally addresses the issue of the "hold-state", preventing it from increasing as correlation computation progresses. We propose two optimization strategies aimed at reducing iteration latency, increasing throughput, and simplifying the circuit to improve hardware efficiency. The optimization can reduce the number of iterations by 25.2% at $E_b/N_0$ = 3 dB. Compared to other efficient designs, the proposed ECS-PD achieves higher throughput and is 2.7 times more hardware-efficient than the min-sum decoder.
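
As background for why correlation matters in stochastic computing, the following sketch shows a generic textbook effect, not the ECS-PD circuit: an AND gate multiplies two probabilities when their bitstreams are independent, but computes their minimum when both streams are generated from the same random source. The function names, stream length, and probabilities are assumptions chosen for illustration.

```python
import random


def to_stream(p, uniforms):
    """Encode probability p as a bitstream: bit i is 1 when uniforms[i] < p."""
    return [1 if u < p else 0 for u in uniforms]


def stream_value(bits):
    """Decode a bitstream as the fraction of ones."""
    return sum(bits) / len(bits)


def and_streams(x, y):
    """Bitwise AND of two equal-length bitstreams (a single AND gate in hardware)."""
    return [a & b for a, b in zip(x, y)]


rng = random.Random(0)
n = 20000
u1 = [rng.random() for _ in range(n)]
u2 = [rng.random() for _ in range(n)]
a, b = 0.6, 0.5

# Independent random sources: the AND gate approximates the product a * b.
independent = stream_value(and_streams(to_stream(a, u1), to_stream(b, u2)))

# Shared random source (maximal positive correlation): the AND gate yields min(a, b).
correlated = stream_value(and_streams(to_stream(a, u1), to_stream(b, u1)))

print(independent, correlated)
```

The same gate therefore implements different functions depending on how the input streams are correlated, which is why correlation has to be managed deliberately in a stochastic decoder.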

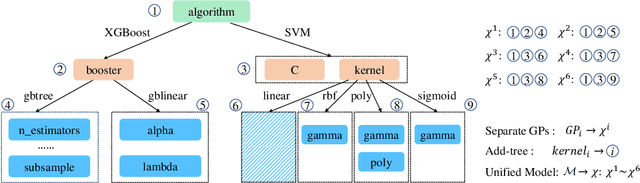

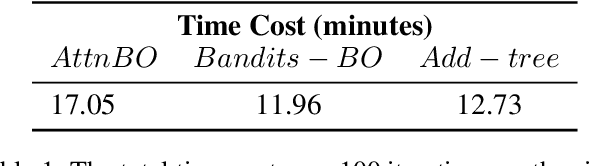

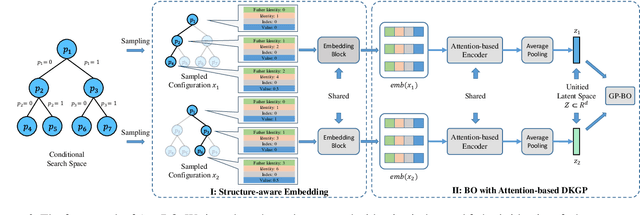

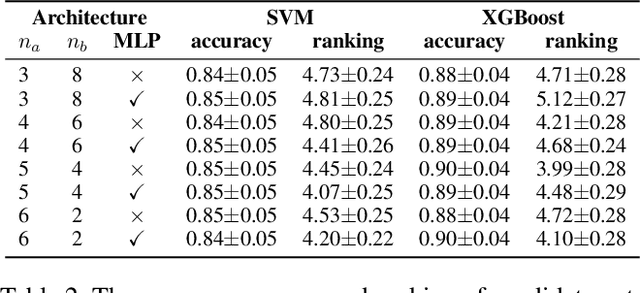

Modeling All Response Surfaces in One for Conditional Search Spaces

Jan 08, 2025

Abstract: Bayesian Optimization (BO) is a sample-efficient black-box optimizer commonly used in search spaces where hyperparameters are independent. However, in many practical AutoML scenarios, there are dependencies among hyperparameters, forming a conditional search space that can be partitioned into structurally distinct subspaces. The structure and dimensionality of hyperparameter configurations vary across these subspaces, challenging the application of BO. Some previous BO works have proposed building multiple Gaussian Process (GP) models, one per subspace. However, these approaches tend to be inefficient, as they require a substantial number of observations to guarantee each GP's performance and cannot capture relationships between hyperparameters across different subspaces. To address these issues, this paper proposes a novel approach that models the response surfaces of all subspaces in one, capturing the relationships between hyperparameters via a self-attention mechanism. Concretely, we design a structure-aware hyperparameter embedding to preserve the structural information. Then, we introduce an attention-based deep feature extractor capable of projecting configurations with different structures from various subspaces into a unified feature space, where the response surfaces can be formulated using a single standard Gaussian Process. The empirical results on a simulation function, various real-world tasks, and the HPO-B benchmark demonstrate that our proposed approach improves the efficacy and efficiency of BO within conditional search spaces.
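
To make the idea of a unified feature space concrete, here is a minimal sketch of how self-attention followed by pooling maps configurations with different numbers of hyperparameters to vectors of the same size. It is an illustration under stated assumptions, not the paper's extractor: the token embeddings are made-up numbers, the attention uses identity projections with a single head, and mean pooling is an assumed choice.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_pool(tokens):
    """Single-head self-attention with identity projections, then mean pooling.

    tokens: list of d-dimensional embeddings, one per active hyperparameter.
    Returns one d-dimensional vector regardless of how many tokens there are.
    """
    d = len(tokens[0])
    attended = []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        attended.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return [sum(row[j] for row in attended) / len(attended) for j in range(d)]


# Hypothetical embeddings: one subspace with 2 hyperparameters, another with 3.
config_a = [[0.2, 1.0, 0.0], [0.7, 0.0, 1.0]]
config_b = [[0.1, 1.0, 0.0], [0.5, 0.0, 1.0], [0.9, 1.0, 1.0]]

feat_a = self_attention_pool(config_a)
feat_b = self_attention_pool(config_b)
print(feat_a, feat_b)
```

Both outputs have the same dimensionality, so a single downstream surrogate model could in principle consume them; the pooled result also does not depend on the order in which the hyperparameter tokens are listed.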

Global Estimation of Building-Integrated Facade and Rooftop Photovoltaic Potential by Integrating 3D Building Footprint and Spatio-Temporal Datasets

Dec 02, 2024

Abstract: This research tackles the challenges of estimating Building-Integrated Photovoltaics (BIPV) potential across various temporal and spatial scales, accounting for different geographical climates and urban morphology. We introduce a holistic methodology for evaluating BIPV potential, integrating 3D building footprint models with diverse meteorological data sources to account for dynamic shadow effects. The approach enables the assessment of PV potential on facades and rooftops at different levels: individual buildings, urban blocks, and cities globally. Through an analysis of 120 typical cities, we highlight the importance of 3D building forms, cityscape morphology, and geographic positioning in measuring BIPV potential at various levels. In particular, our simulation study reveals that among cities with optimal facade PV performance, the average ratio of facade PV potential to rooftop PV potential is approximately 68.2%. Additionally, approximately 17.5% of the analyzed samples demonstrate even higher facade PV potentials compared to rooftop installations. This finding underscores the strategic value of incorporating facade PV applications into urban sustainable energy systems.

Autonomous Drifting Based on Maximal Safety Probability Learning

Sep 05, 2024

Abstract: This paper proposes a novel learning-based framework for autonomous driving based on the concept of maximal safety probability. Efficient learning requires rewards that are informative of desirable/undesirable states, but such rewards are challenging to design manually due to the difficulty of differentiating better states among many safe states. On the other hand, learning policies that maximize safety probability does not require laborious reward shaping but is numerically challenging because the algorithms must optimize policies based on binary rewards sparse in time. Here, we show that physics-informed reinforcement learning can efficiently learn this form of maximally safe policy. Unlike existing drift control methods, our approach does not require a specific reference trajectory or complex reward shaping, and can learn safe behaviors only from sparse binary rewards. This is enabled by the use of the physics loss that plays an analogous role to reward shaping. The effectiveness of the proposed approach is demonstrated through lane keeping in a normal cornering scenario and safe drifting in a high-speed racing scenario.

Topology-Aware Dynamic Reweighting for Distribution Shifts on Graph

Jun 03, 2024

Abstract: Graph Neural Networks (GNNs) are widely used for node classification tasks but often fail to generalize when training and test nodes come from different distributions, limiting their practicality. To overcome this, recent approaches adopt invariant learning techniques from the out-of-distribution (OOD) generalization field, which seek to establish stable prediction methods across environments. However, the applicability of these invariant assumptions to graph data remains unverified, and such methods often lack solid theoretical support. In this work, we introduce the Topology-Aware Dynamic Reweighting (TAR) framework, which dynamically adjusts sample weights through gradient flow in the geometric Wasserstein space during training. Instead of relying on strict invariance assumptions, we prove that our method is able to provide distributional robustness, thereby enhancing the out-of-distribution generalization performance on graph data. By leveraging the inherent graph structure, TAR effectively addresses distribution shifts. Our framework's superiority is demonstrated through standard testing on four graph OOD datasets and three class-imbalanced node classification datasets, exhibiting marked improvements over existing methods.

SCALM: Towards Semantic Caching for Automated Chat Services with Large Language Models

May 24, 2024

Abstract: Large Language Models (LLMs) have become increasingly popular, transforming a wide range of applications across various domains. However, the real-world effectiveness of their query cache systems has not been thoroughly investigated. In this work, for the first time, we analyze real-world human-to-LLM interaction data, identifying key challenges in existing caching solutions for LLM-based chat services. Our findings reveal that current caching methods fail to leverage semantic connections, leading to inefficient cache performance and extra token costs. To address these issues, we propose SCALM, a new cache architecture that emphasizes semantic analysis and identifies significant cache entries and patterns. We also detail the implementations of the corresponding cache storage and eviction strategies. Our evaluations show that SCALM increases cache hit ratios and reduces operational costs for LLMChat services. Compared with other state-of-the-art solutions in GPTCache, SCALM shows, on average, a relative increase of 63% in cache hit ratio and a relative improvement of 77% in token savings.
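
The general idea of a semantic cache, as opposed to an exact-match cache, can be sketched as follows. This is a generic illustration and not SCALM's architecture: the bag-of-words `embed` function is a toy stand-in for a real sentence encoder, and the `SemanticCache` class, its linear scan, and the 0.7 threshold are all assumptions. Storage and eviction strategies, which the abstract says the paper details, are omitted.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words embedding (a stand-in for a real sentence encoder)."""
    return Counter(text.lower().split())


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[t] * v[t] for t in u)
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Returns a cached response when a stored query is similar enough to the new one."""

    def __init__(self, threshold=0.7):
        self.threshold = threshold  # hypothetical similarity cutoff
        self.entries = []           # list of (embedding, response) pairs

    def put(self, query, response):
        self.entries.append((embed(query), response))

    def get(self, query):
        q = embed(query)
        best_score, best_response = 0.0, None
        for emb, response in self.entries:
            score = cosine(q, emb)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.7)
cache.put("how do i reset my password", "Use the account settings page.")
hit = cache.get("how do i reset my password please")  # near-duplicate wording
miss = cache.get("what is the weather today")         # unrelated query
print(hit, miss)
```

An exact-match cache would miss the reworded query; matching on similarity is what lets a rephrased question reuse a stored answer and avoid a fresh model call.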

Exposing AI-generated Videos: A Benchmark Dataset and a Local-and-Global Temporal Defect Based Detection Method

May 07, 2024

Abstract: Generative models have made significant advances in creating realistic videos, which raises security concerns. However, this emerging risk has not been adequately addressed due to the absence of a benchmark dataset for AI-generated videos. In this paper, we first construct a video dataset using advanced diffusion-based video generation algorithms with various semantic contents. In addition, typical lossy operations applied to video during network transmission are adopted to generate degraded samples. Then, by analyzing local and global temporal defects of current AI-generated videos, we construct a novel detection framework that adaptively learns local motion information and global appearance variation to expose fake videos. Finally, experiments are conducted to evaluate the generalization and robustness of different spatial and temporal domain detection methods; the results can serve as a baseline and demonstrate the research challenges for future studies.
