Jeff W. Lichtman

Department of Molecular and Cellular Biology, Harvard University, Cambridge, MA, Center for Brain Science, Harvard University, Cambridge, MA

ZAPBench: A Benchmark for Whole-Brain Activity Prediction in Zebrafish

Mar 04, 2025

Abstract: Data-driven benchmarks have led to significant progress in key scientific modeling domains including weather and structural biology. Here, we introduce the Zebrafish Activity Prediction Benchmark (ZAPBench) to measure progress on the problem of predicting cellular-resolution neural activity throughout an entire vertebrate brain. The benchmark is based on a novel dataset containing 4D light-sheet microscopy recordings of over 70,000 neurons in a larval zebrafish brain, along with motion-stabilized and voxel-level cell segmentations of these data that facilitate development of a variety of forecasting methods. Initial results from a selection of time series and volumetric video modeling approaches achieve better performance than naive baseline methods, but also show room for further improvement. The specific brain used in the activity recording is also undergoing synaptic-level anatomical mapping, which will enable future integration of detailed structural information into forecasting methods.
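
The abstract does not say which naive baselines or which error metric the benchmark uses. As a minimal sketch of what a naive forecasting baseline can look like, assuming one activity trace per neuron stored as a plain list of floats, the following repeats the last observed value or a recent mean over the forecast horizon and scores the result with mean absolute error. All function names here are hypothetical and are not taken from ZAPBench.

```python
def last_value_forecast(context, horizon):
    """Naive baseline (assumed): repeat the last observed value."""
    return [context[-1]] * horizon


def mean_forecast(context, horizon, window=None):
    """Naive baseline (assumed): repeat the mean of the last `window` values."""
    recent = context if window is None else context[-window:]
    mean = sum(recent) / len(recent)
    return [mean] * horizon


def mean_absolute_error(pred, target):
    """Average absolute difference between forecast and ground truth."""
    if len(pred) != len(target):
        raise ValueError("forecast and target must have the same length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


# Toy trace for one neuron: four observed steps, two steps to predict.
context = [1.0, 2.0, 3.0, 4.0]
target = [5.0, 3.0]
error_last = mean_absolute_error(last_value_forecast(context, 2), target)
error_mean = mean_absolute_error(mean_forecast(context, 2, window=2), target)
```

A learned model is only informative on such a benchmark if its error falls below what these trivial predictors achieve on the same context and horizon.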

FreSeg: Frenet-Frame-based Part Segmentation for 3D Curvilinear Structures

Apr 19, 2024

Abstract: Part segmentation is a crucial task for 3D curvilinear structures like neuron dendrites and blood vessels, enabling the analysis of dendritic spines and aneurysms with scientific and clinical significance. However, their diverse, winding morphology poses a generalization challenge to existing deep learning methods, which leads to labor-intensive manual correction. In this work, we propose FreSeg, a framework for part segmentation tasks on 3D curvilinear structures. With a Frenet-Frame-based point cloud transformation, it enables models to learn more generalizable features and achieve significant performance improvements on tasks involving elongated and curvy geometries. We evaluate FreSeg on two datasets: 1) DenSpineEM, an in-house dataset for dendritic spine segmentation, and 2) IntrA, a public 3D dataset for intracranial aneurysm segmentation. Further, we will release the DenSpineEM dataset, which includes roughly 6,000 spines from 69 dendrites from 3 public electron microscopy (EM) datasets, to foster the development of effective dendritic spine instance extraction methods and, consequently, large-scale connectivity analysis to better understand mammalian brains.

VICE: Visual Identification and Correction of Neural Circuit Errors

May 14, 2021

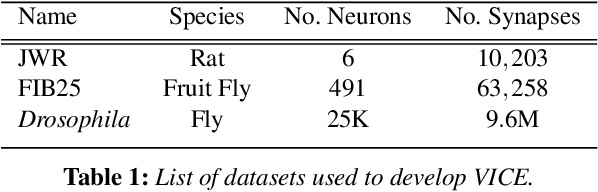

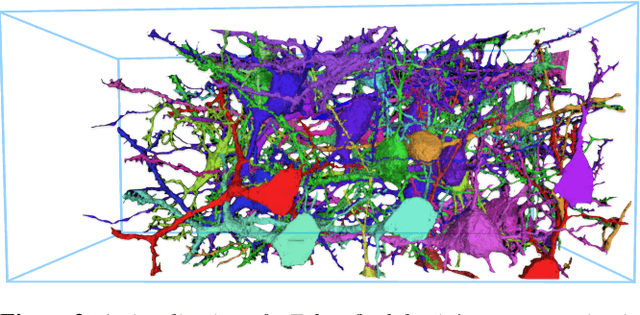

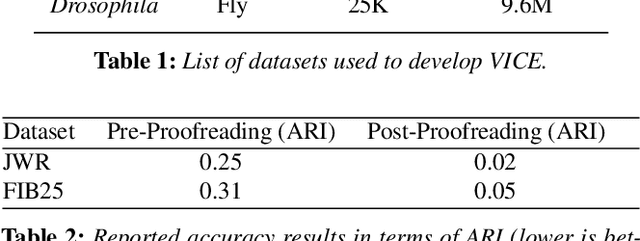

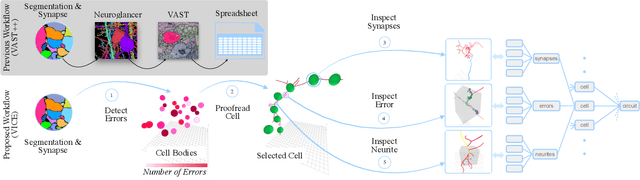

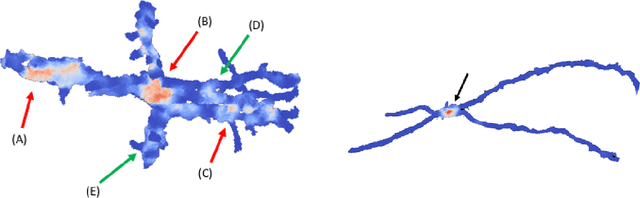

Abstract: A connectivity graph of neurons at the resolution of single synapses provides scientists with a tool for understanding the nervous system in health and disease. Recent advances in automatic image segmentation and synapse prediction in electron microscopy (EM) datasets of the brain have made reconstructions of neurons possible at the nanometer scale. However, automatic segmentation sometimes struggles to segment large neurons correctly, requiring human effort to proofread its output. General proofreading involves inspecting large volumes to correct segmentation errors at the pixel level, a visually intensive and time-consuming process. This paper presents the design and implementation of an analytics framework that streamlines proofreading, focusing on connectivity-related errors. We accomplish this with automated likely-error detection and synapse clustering that drives the proofreading effort with highly interactive 3D visualizations. In particular, our strategy centers on proofreading the local circuit of a single cell to ensure a basic level of completeness. We demonstrate our framework's utility with a user study and report quantitative and subjective feedback from our users. Overall, users find the framework more efficient for proofreading, understanding evolving graphs, and sharing error correction strategies.

Learning Guided Electron Microscopy with Active Acquisition

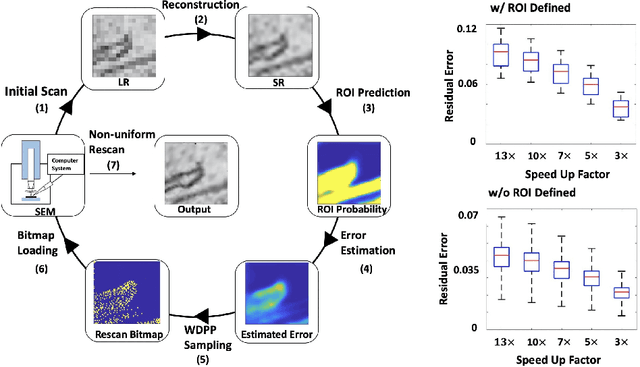

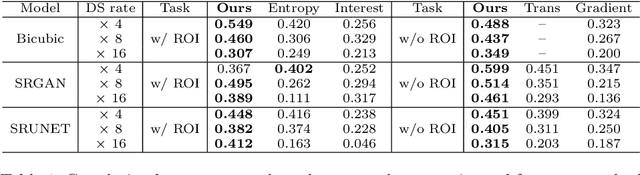

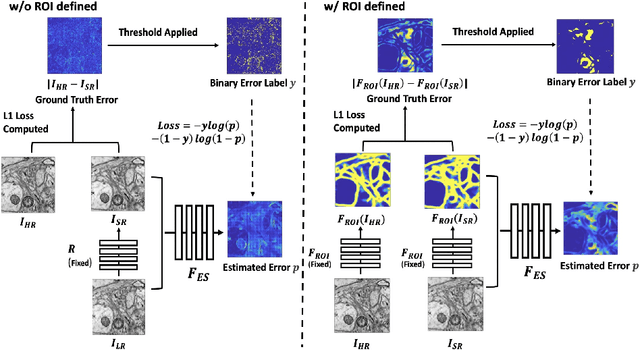

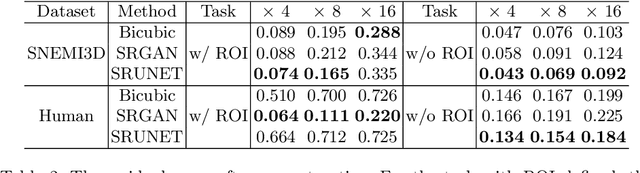

Jan 07, 2021

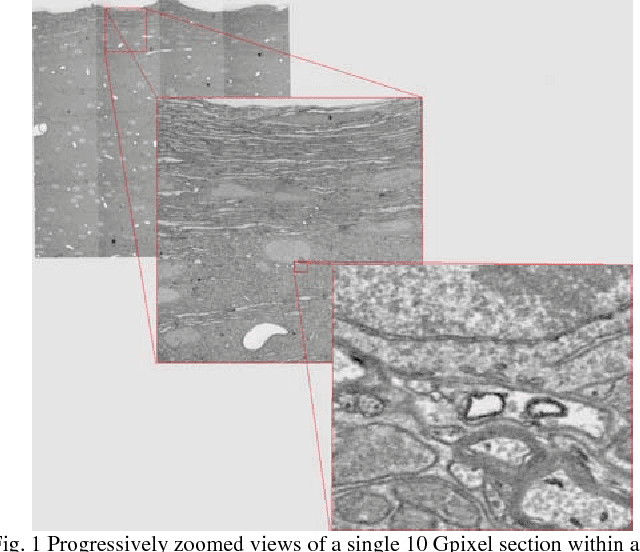

Abstract: Single-beam scanning electron microscopes (SEM) are widely used to acquire massive data sets for biomedical study, material analysis, and fabrication inspection. Datasets are typically acquired with uniform acquisition: applying the electron beam with the same power and duration to all image pixels, even if there is great variety in the pixels' importance for eventual use. Many SEMs are now able to move the beam to any pixel in the field of view without delay, enabling them, in principle, to invest their time budget more effectively with non-uniform imaging. In this paper, we show how to use deep learning to accelerate and optimize single-beam SEM acquisition of images. Our algorithm rapidly collects an information-lossy image (e.g. low resolution) and then applies a novel learning method to identify a small subset of pixels to be collected at higher resolution based on a trade-off between the saliency and spatial diversity. We demonstrate the efficacy of this novel technique for active acquisition by speeding up the task of collecting connectomic datasets for neurobiology by up to an order of magnitude.

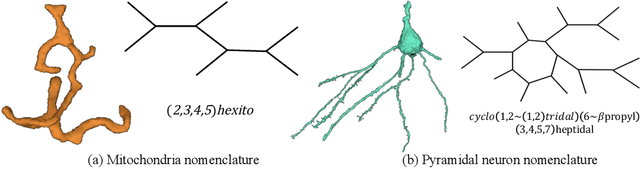

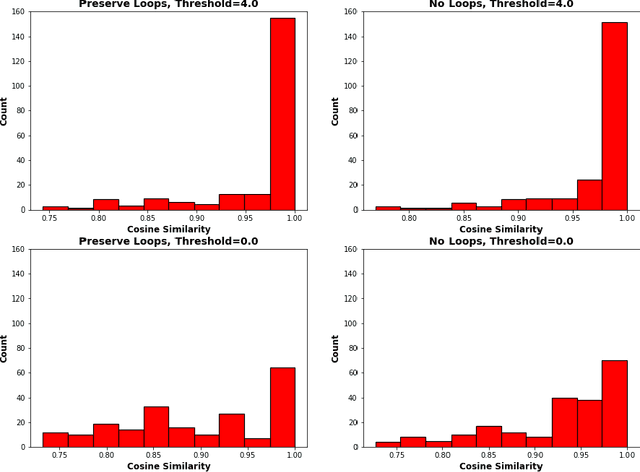

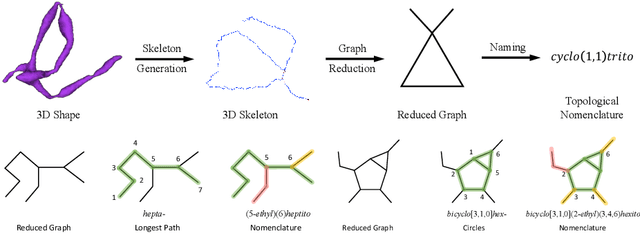

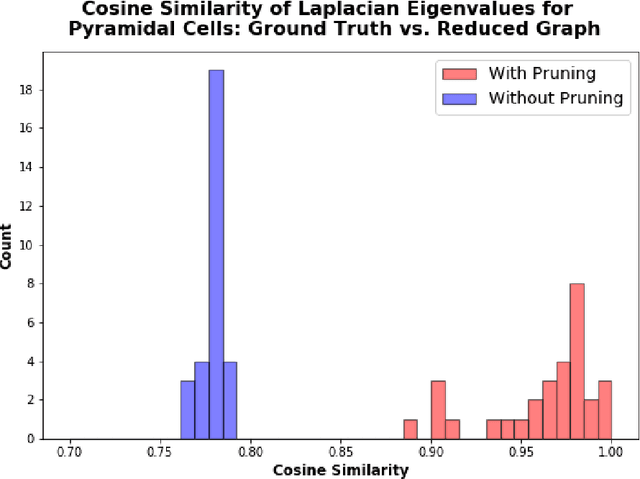

A Topological Nomenclature for 3D Shape Analysis in Connectomics

Sep 27, 2019

Abstract: An essential task in nano-scale connectomics is the morphology analysis of neurons and organelles like mitochondria to shed light on their biological properties. However, these biological objects often have tangled parts or complex branching patterns, which makes it hard to abstract, categorize, and manipulate their morphology. Here we propose a topological nomenclature to name these objects like chemical compounds for neuroscience analysis. To this end, we convert the volumetric representation into the topology-preserving reduced graph, develop nomenclature rules for pyramidal neurons and mitochondria from the reduced graph, and learn the feature embedding for shape manipulation. In ablation studies, we show that the proposed reduced graph extraction method yields graphs that accord better with the perception of experts. On 3D shape retrieval and decomposition tasks, we show that the encoded topological nomenclature features achieve better results than state-of-the-art shape descriptors. To advance neuroscience, we will release a 3D mesh dataset of mitochondria and pyramidal neurons reconstructed from a 100 µm cube electron microscopy (EM) volume. Code is publicly available at https://github.com/donglaiw/ibexHelper.

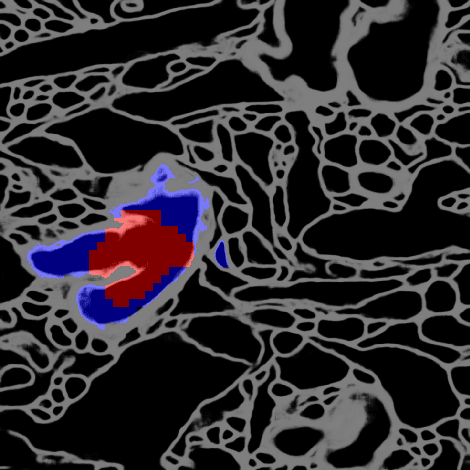

Detecting Synapse Location and Connectivity by Signed Proximity Estimation and Pruning with Deep Nets

Oct 25, 2018

Abstract: Synaptic connectivity detection is a critical task for neural reconstruction from Electron Microscopy (EM) data. Most of the existing algorithms for synapse detection do not identify the cleft location and direction of connectivity simultaneously. The few methods that compute direction along with contact location have only been demonstrated to work on either dyadic (most common in vertebrate brain) or polyadic (found in fruit fly brain) synapses, but not on both types. In this paper, we present an algorithm to automatically predict the location as well as the direction of both dyadic and polyadic synapses. The proposed algorithm first generates candidate synaptic connections from voxelwise predictions of signed proximity generated by a 3D U-net. A second 3D CNN then prunes the set of candidates to produce the final detection of cleft and connectivity orientation. Experimental results demonstrate that the proposed method outperforms existing methods for determining synapses in both rodent and fruit fly brain.

Anisotropic EM Segmentation by 3D Affinity Learning and Agglomeration

Aug 03, 2018

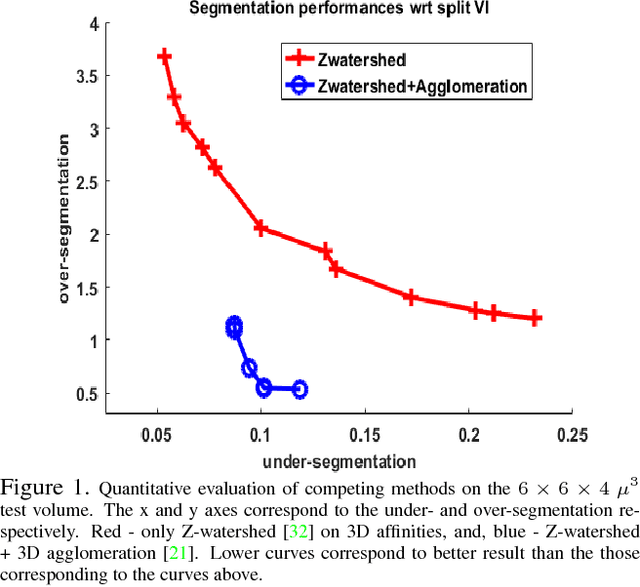

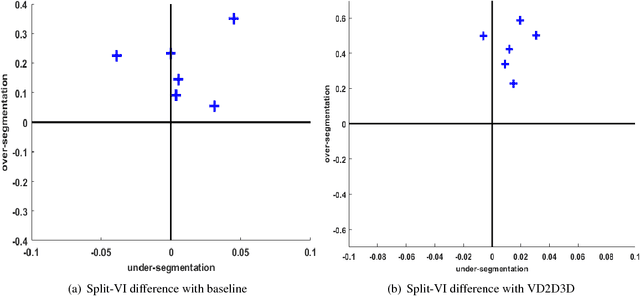

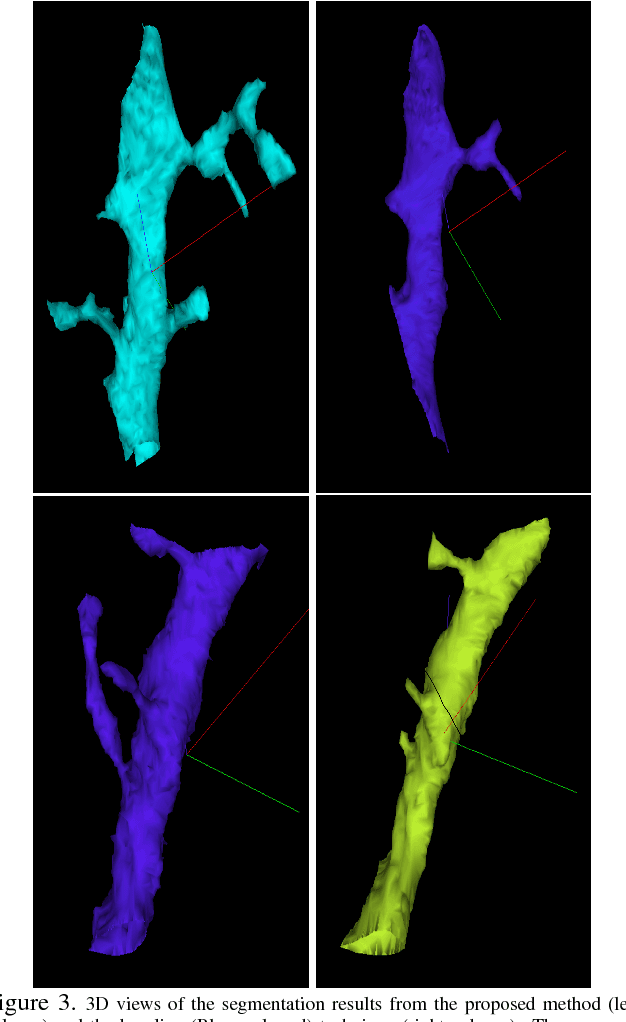

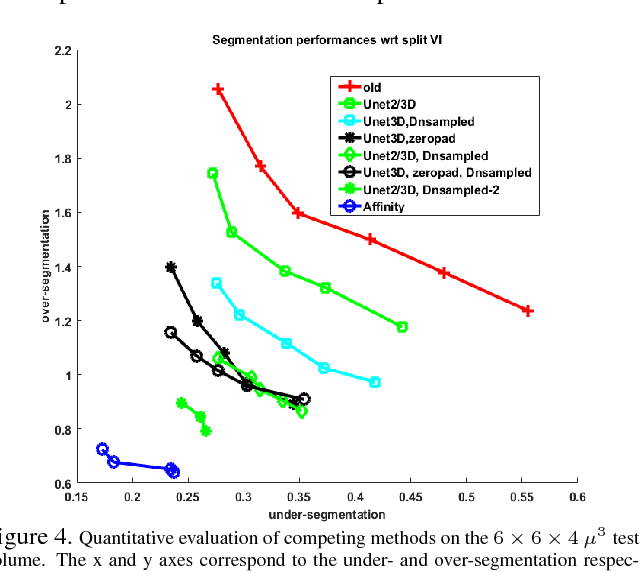

Abstract: The field of connectomics has recently produced neuron wiring diagrams from relatively large brain regions from multiple animals. Most of these neural reconstructions were computed from isotropic (e.g., FIBSEM) or near-isotropic (e.g., SBEM) data. In spite of the remarkable progress on algorithms in recent years, automatic dense reconstruction from anisotropic data remains a challenge for the connectomics community. One significant hurdle in the segmentation of anisotropic data is the difficulty in generating a suitable initial over-segmentation. In this study, we present a segmentation method for anisotropic EM data that agglomerates a 3D over-segmentation computed from the 3D affinity prediction. A 3D U-net is trained to predict 3D affinities by the MALIS approach. Experiments on multiple datasets demonstrate the strength and robustness of the proposed method for anisotropic EM segmentation.

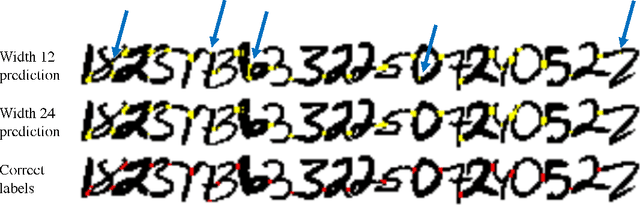

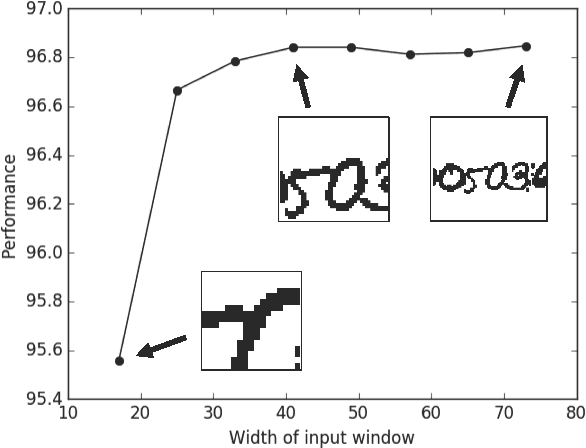

Morphological Error Detection in 3D Segmentations

May 30, 2017

Abstract: Deep learning algorithms for connectomics rely upon localized classification, rather than overall morphology. This leads to a high incidence of erroneously merged objects. Humans, by contrast, can easily detect such errors by acquiring intuition for the correct morphology of objects. Biological neurons have complicated and variable shapes, which are challenging to learn, and merge errors take a multitude of different forms. We present an algorithm, MergeNet, that shows 3D ConvNets can, in fact, detect merge errors from high-level neuronal morphology. MergeNet follows unsupervised training and operates across datasets. We demonstrate the performance of MergeNet both on a variety of connectomics data and on a dataset created from merged MNIST images.

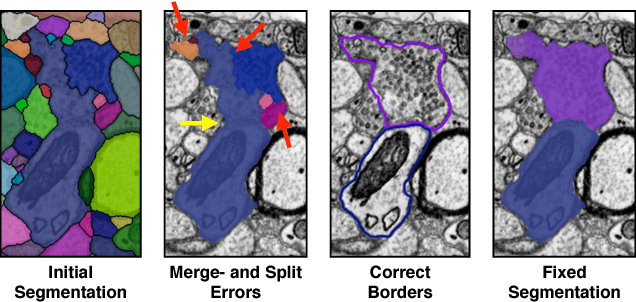

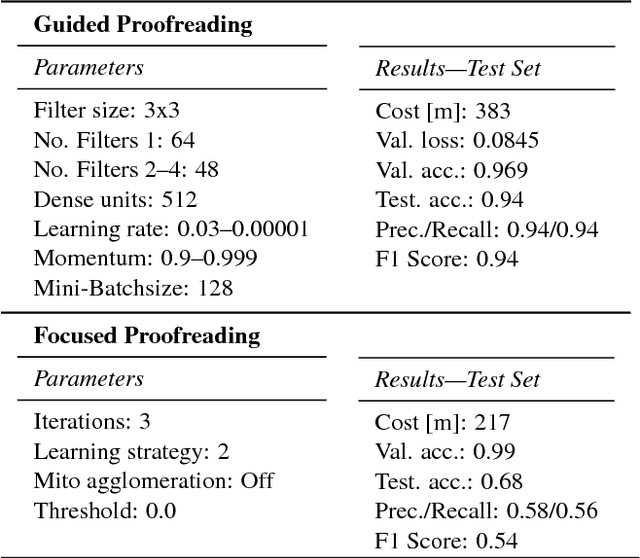

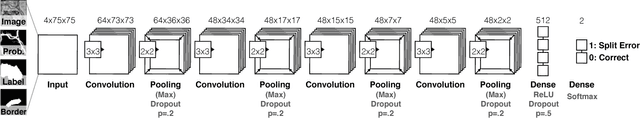

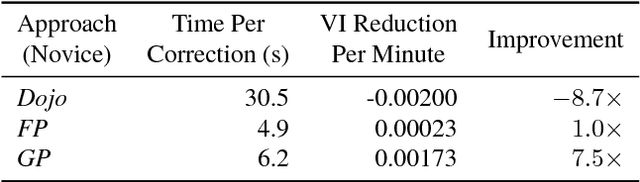

Guided Proofreading of Automatic Segmentations for Connectomics

Apr 04, 2017

Abstract: Automatic cell image segmentation methods in connectomics produce merge and split errors, which require correction through proofreading. Previous research has identified the visual search for these errors as the bottleneck in interactive proofreading. To aid error correction, we develop two classifiers that automatically recommend candidate merges and splits to the user. These classifiers use a convolutional neural network (CNN) that has been trained with errors in automatic segmentations against expert-labeled ground truth. Our classifiers detect potentially erroneous regions by considering a large context region around a segmentation boundary. Corrections can then be performed by a user with yes/no decisions, which reduces variation of information 7.5x faster than previous proofreading methods. We also present a fully automatic mode that uses a probability threshold to make merge/split decisions. Extensive experiments using the automatic approach and comparing performance of novice and expert users demonstrate that our method performs favorably against state-of-the-art proofreading methods on different connectomics datasets.
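
Variation of information (VI), the metric named in this abstract, compares two segmentations of the same voxels as VI(A, B) = H(A|B) + H(B|A). The sketch below is a minimal pure-Python version that assumes each segmentation is given as a flat sequence of integer labels; the function name and input layout are choices made for this example, not the paper's evaluation code.

```python
import math
from collections import Counter


def variation_of_information(seg_a, seg_b):
    """VI(A, B) = H(A|B) + H(B|A), in nats, for two equal-length label sequences."""
    if len(seg_a) != len(seg_b):
        raise ValueError("segmentations must label the same voxels")
    n = len(seg_a)
    count_a = Counter(seg_a)               # voxels per label in A
    count_b = Counter(seg_b)               # voxels per label in B
    count_ab = Counter(zip(seg_a, seg_b))  # voxels per (label in A, label in B) pair
    vi = 0.0
    for (a, b), n_ab in count_ab.items():
        p_ab = n_ab / n
        # p(a,b)/p(a) = n_ab / count_a[a], and likewise for b.
        vi -= p_ab * (math.log(n_ab / count_a[a]) + math.log(n_ab / count_b[b]))
    return vi


ground_truth = [1, 1, 2, 2]
merged = [7, 7, 7, 7]  # the two true objects were merged into one segment
merge_error_vi = variation_of_information(ground_truth, merged)
```

VI is zero when the two segmentations agree up to a relabeling, and the merge in the toy example costs ln 2 nats because the merged segment hides which of two equally sized objects a voxel belongs to. Each accepted correction in a proofreading session should move this value toward zero.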

Registering large volume serial-section electron microscopy image sets for neural circuit reconstruction using FFT signal whitening

Dec 14, 2016

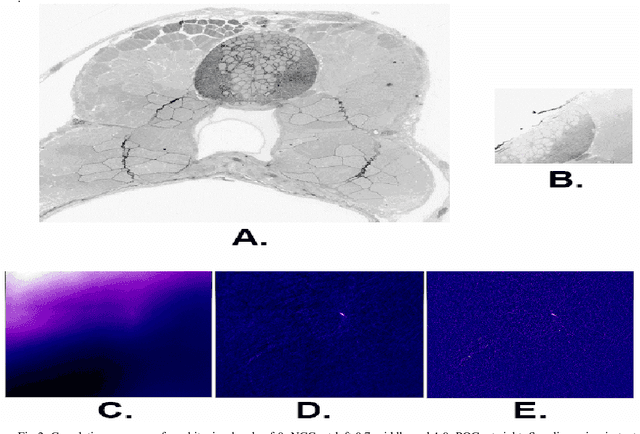

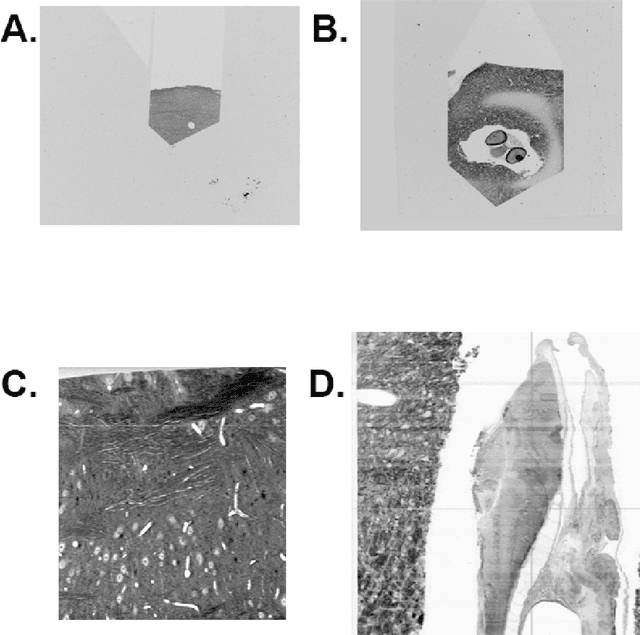

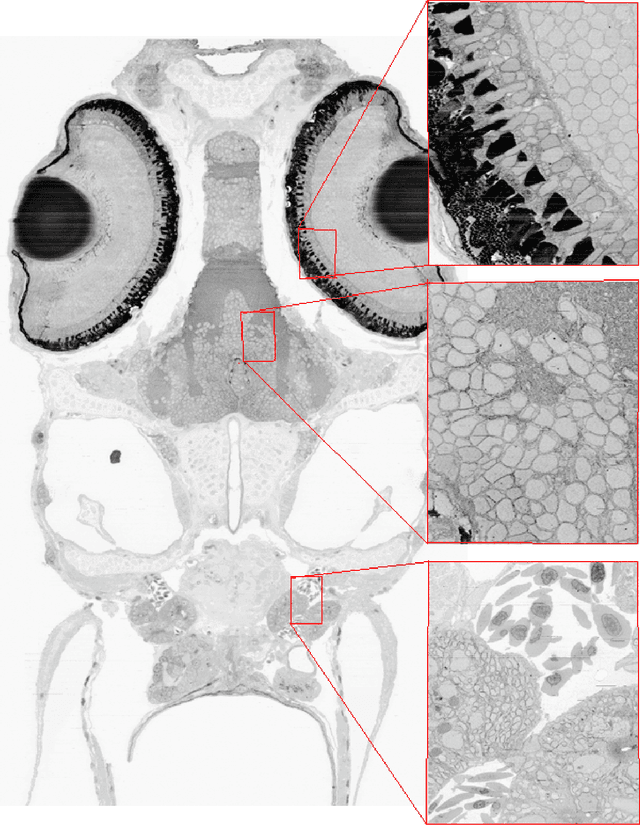

Abstract: The detailed reconstruction of neural anatomy for connectomics studies requires a combination of resolution and large three-dimensional data capture provided by serial section electron microscopy (ssEM). The convergence of high throughput ssEM imaging and improved tissue preparation methods now allows ssEM capture of complete specimen volumes up to cubic millimeter scale. The resulting multi-terabyte image sets span thousands of serial sections and must be precisely registered into coherent volumetric forms in which neural circuits can be traced and segmented. This paper introduces a Signal Whitening Fourier Transform Image Registration approach (SWiFT-IR) under development at the Pittsburgh Supercomputing Center and its use to align mouse and zebrafish brain datasets acquired using the wafer mapper ssEM imaging technology recently developed at Harvard University. Unlike other methods now used for ssEM registration, SWiFT-IR modifies its spatial frequency response during image matching to maximize a signal-to-noise measure used as its primary indicator of alignment quality. This alignment signal is more robust to rapid variations in biological content and unavoidable data distortions than either phase-only or standard Pearson correlation, thus allowing more precise alignment and statistical confidence. These improvements in turn enable an iterative registration procedure based on projections through multiple sections rather than more typical adjacent-pair matching methods. This projection approach, when coupled with known anatomical constraints and iteratively applied in a multi-resolution pyramid fashion, drives the alignment into a smooth form that properly represents complex and widely varying anatomical content such as the full cross-section zebrafish data.
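
The abstract contrasts SWiFT-IR with phase-only and standard correlation but does not give its formula. As a rough illustration of the design space, the NumPy sketch below estimates a translation from an FFT cross-correlation whose spectrum is divided by its magnitude raised to an exponent: an exponent of 0 leaves the plain (unnormalized) cross-correlation and 1 gives phase-only correlation. The `whitening` parameter and the function name are assumptions for this example, and the code does not reproduce SWiFT-IR's adaptive frequency response or its signal-to-noise measure.

```python
import numpy as np


def whitened_correlation_shift(reference, moving, whitening=1.0, eps=1e-12):
    """Estimate the circular (row, col) shift that maps `reference` onto `moving`.

    whitening=0.0 -> plain cross-correlation; whitening=1.0 -> phase correlation.
    Intermediate values partially flatten the spectrum (an assumed knob).
    """
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moving)
    cross = f_mov * np.conj(f_ref)
    # Partially whiten the cross-power spectrum.
    cross = cross / (np.abs(cross) + eps) ** whitening
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Indices past the midpoint correspond to negative shifts (FFT wrap-around).
    return tuple(int(p) if p <= s // 2 else int(p) - s
                 for p, s in zip(peak, corr.shape))


rng = np.random.default_rng(0)
section = rng.random((64, 64))
shifted = np.roll(section, shift=(5, -3), axis=(0, 1))
estimated = whitened_correlation_shift(section, shifted, whitening=1.0)
```

On this synthetic, noise-free pair every exponent recovers the same shift. The choice matters on real sections, where content changes between slices and whitening trades peak sharpness against noise amplification.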
