Won-Dong Jang

Object Propagation via Inter-Frame Attentions for Temporally Stable Video Instance Segmentation

Nov 15, 2021

Abstract: Video instance segmentation aims to detect, segment, and track objects in a video. Current approaches extend image-level segmentation algorithms to the temporal domain. However, this results in temporally inconsistent masks. In this work, we identify the mask quality due to temporal stability as a performance bottleneck. Motivated by this, we propose a video instance segmentation method that alleviates the problem due to missing detections. Since this cannot be solved simply using spatial information, we leverage temporal context using inter-frame attentions. This allows our network to refocus on missing objects using box predictions from the neighbouring frame, thereby overcoming missing detections. Our method significantly outperforms previous state-of-the-art algorithms using the Mask R-CNN backbone, by achieving 35.1% mAP on the YouTube-VIS benchmark. Additionally, our method is completely online and requires no future frames. Our code is publicly available at https://github.com/anirudh-chakravarthy/ObjProp.

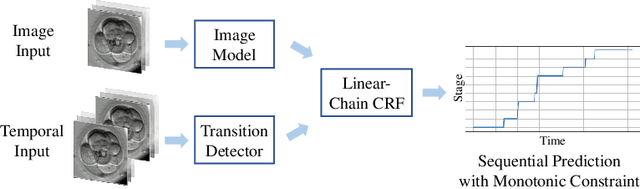

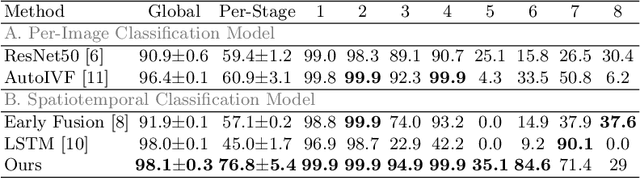

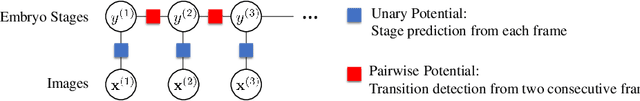

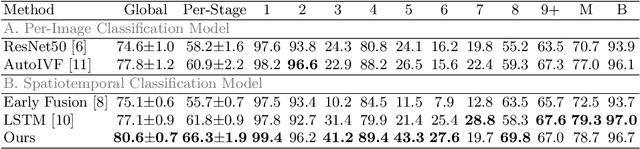

Developmental Stage Classification of Embryos Using Two-Stream Neural Network with Linear-Chain Conditional Random Field

Jul 13, 2021

Abstract: The developmental process of embryos follows a monotonic order. An embryo can progressively cleave from one cell to multiple cells and finally transform to morula and blastocyst. For time-lapse videos of embryos, most existing developmental stage classification methods conduct per-frame predictions using an image frame at each time step. However, classification using only images suffers from overlapping between cells and imbalance between stages. Temporal information can be valuable in addressing this problem by capturing movements between neighboring frames. In this work, we propose a two-stream model for developmental stage classification. Unlike previous methods, our two-stream model accepts both temporal and image information. We develop a linear-chain conditional random field (CRF) on top of neural network features extracted from the temporal and image streams to make use of both modalities. The linear-chain CRF formulation enables tractable training of global sequential models over multiple frames while also making it possible to inject monotonic development order constraints into the learning process explicitly. We demonstrate our algorithm on two time-lapse embryo video datasets: i) mouse and ii) human embryo datasets. Our method achieves 98.1% and 80.6% for mouse and human embryo stage classification, respectively. Our approach will enable more profound clinical and biological studies and suggests a new direction for developmental stage classification by utilizing temporal information.
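The monotonic-order constraint mentioned in this abstract can be illustrated with a small decoding sketch. This is not the authors' implementation: it assumes hypothetical per-frame stage scores (treated as additive log-scores) and a hard rule that the stage index never decreases, and it leaves out the learned pairwise potentials and the training procedure of a full linear-chain CRF. The function name `monotonic_decode` is made up for illustration.

```python
from typing import List


def monotonic_decode(scores: List[List[float]]) -> List[int]:
    """Return the highest-scoring stage sequence that never decreases.

    scores[t][k] is an assumed additive score for stage k at frame t.
    """
    if not scores:
        return []
    num_stages = len(scores[0])
    best = list(scores[0])  # best[k]: best total score of a path ending in stage k
    back = []               # back[t][k]: previous stage on that best path
    for frame in scores[1:]:
        new_best, pointers = [], []
        run_max, run_arg = float("-inf"), 0
        for k in range(num_stages):
            # Only predecessors j <= k are allowed, so track a running maximum.
            if best[k] > run_max:
                run_max, run_arg = best[k], k
            new_best.append(run_max + frame[k])
            pointers.append(run_arg)
        best = new_best
        back.append(pointers)
    # Backtrack from the best final stage.
    stage = max(range(num_stages), key=lambda k: best[k])
    path = [stage]
    for pointers in reversed(back):
        stage = pointers[stage]
        path.append(stage)
    path.reverse()
    return path


# Independent per-frame argmax would give [0, 1, 0, 2], which regresses at frame 3.
frame_scores = [
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.6, 0.3, 0.1],
    [0.1, 0.2, 0.7],
]
decoded = monotonic_decode(frame_scores)
print(decoded)
```

In this toy input the third frame alone favors stage 0, but the constrained decoding keeps it at stage 1 because a return to an earlier stage is not permitted.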

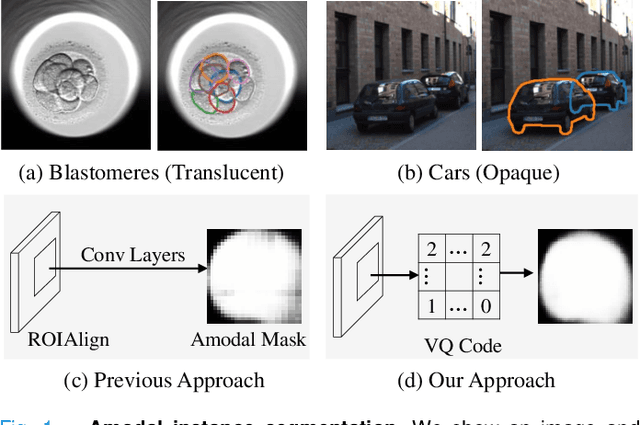

Learning Vector Quantized Shape Code for Amodal Blastomere Instance Segmentation

Dec 02, 2020

Abstract: Blastomere instance segmentation is important for analyzing embryos' abnormality. To measure the accurate shapes and sizes of blastomeres, their amodal segmentation is necessary. Amodal instance segmentation aims to recover the complete silhouette of an object even when the object is not fully visible. For each detected object, previous methods directly regress the target mask from input features. However, images of an object under different amounts of occlusion should have the same amodal mask output, which makes it harder to train the regression model. To alleviate the problem, we propose to classify input features into intermediate shape codes and recover complete object shapes from them. First, we pre-train the Vector Quantized Variational Autoencoder (VQ-VAE) model to learn these discrete shape codes from ground truth amodal masks. Then, we incorporate the VQ-VAE model into the amodal instance segmentation pipeline with an additional refinement module. We also detect an occlusion map to integrate occlusion information with a backbone feature. As such, our network faithfully detects bounding boxes of amodal objects. On an internal embryo cell image benchmark, the proposed method outperforms previous state-of-the-art methods. To show generalizability, we show segmentation results on the public KINS natural image benchmark. To examine the learned shape codes and model design choices, we perform ablation studies on a synthetic dataset of simple overlaid shapes. Our method would enable accurate measurement of blastomeres in in vitro fertilization (IVF) clinics, which potentially can increase IVF success rate.
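The idea of mapping continuous features to discrete shape codes can be sketched with the generic nearest-neighbor quantization step used in VQ-VAE models. This is an illustration under stated assumptions, not the paper's pipeline: the codebook values, the two-dimensional features, and the function name `quantize` are hypothetical, and the paper describes classifying features into codes rather than the plain nearest-code lookup shown here.

```python
from typing import List, Sequence, Tuple


def quantize(
    feature: Sequence[float], codebook: Sequence[Sequence[float]]
) -> Tuple[int, List[float]]:
    """Return the index and vector of the codebook entry closest to `feature`."""

    def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
        # Squared Euclidean distance between two equal-length vectors.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    index = min(range(len(codebook)), key=lambda i: sq_dist(feature, codebook[i]))
    return index, list(codebook[index])


# Hypothetical 3-entry codebook of 2-D shape codes.
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]

# Two slightly different features, standing in for the same object
# seen under different amounts of occlusion.
code_a, _ = quantize([0.9, 0.8], codebook)
code_b, _ = quantize([0.8, 1.1], codebook)
print(code_a, code_b)
```

Both features land on the same discrete code, which is the property the abstract relies on: differently occluded views of one object can share a single target from which the complete shape is decoded.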

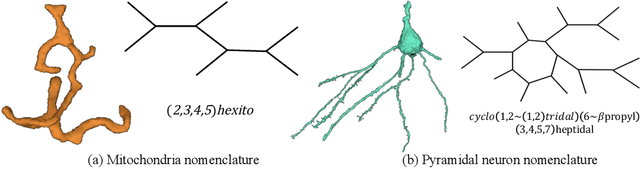

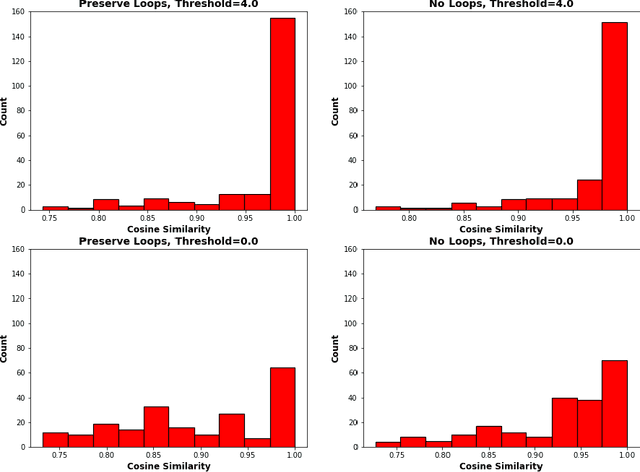

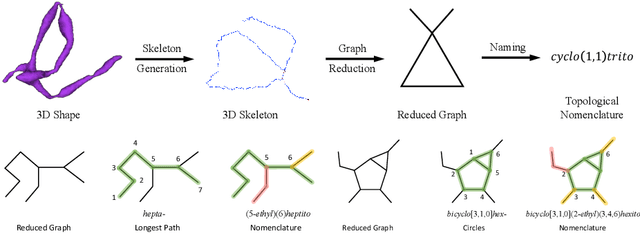

A Topological Nomenclature for 3D Shape Analysis in Connectomics

Sep 27, 2019

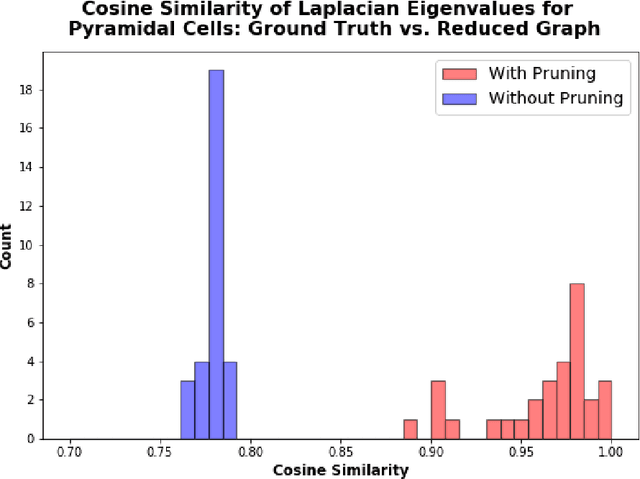

Abstract: An essential task in nano-scale connectomics is the morphology analysis of neurons and organelles like mitochondria to shed light on their biological properties. However, these biological objects often have tangled parts or complex branching patterns, which makes it hard to abstract, categorize, and manipulate their morphology. Here we propose a topological nomenclature to name these objects like chemical compounds for neuroscience analysis. To this end, we convert the volumetric representation into the topology-preserving reduced graph, develop nomenclature rules for pyramidal neurons and mitochondria from the reduced graph, and learn the feature embedding for shape manipulation. In ablation studies, we show that the proposed reduced graph extraction method yields graphs better in accord with the perception of experts. On 3D shape retrieval and decomposition tasks, we show that the encoded topological nomenclature features achieve better results than state-of-the-art shape descriptors. To advance neuroscience, we will release a 3D mesh dataset of mitochondria and pyramidal neurons reconstructed from a 100 μm cube electron microscopy (EM) volume. Code is publicly available at https://github.com/donglaiw/ibexHelper.
