Haoran Yang

Shandong University of Science and Technology

RP-CATE: Recurrent Perceptron-based Channel Attention Transformer Encoder for Industrial Hybrid Modeling

Dec 22, 2025

Abstract:Nowadays, industrial hybrid modeling, which integrates both mechanistic modeling and machine learning-based modeling techniques, has attracted increasing interest from scholars due to its high accuracy, low computational cost, and satisfactory interpretability. Nevertheless, the existing industrial hybrid modeling methods still face two main limitations. First, current research has mainly focused on applying a single machine learning method to one specific task, failing to develop a comprehensive machine learning architecture suitable for modeling tasks, which limits their ability to effectively represent complex industrial scenarios. Second, industrial datasets often contain underlying associations (e.g., monotonicity or periodicity) that are not adequately exploited by current research, which can degrade a model's predictive performance. To address these limitations, this paper proposes the Recurrent Perceptron-based Channel Attention Transformer Encoder (RP-CATE), with three distinctive characteristics: 1: We developed a novel architecture by replacing the self-attention mechanism with channel attention and incorporating our proposed Recurrent Perceptron (RP) Module into the Transformer, achieving enhanced effectiveness for industrial modeling tasks compared to the original Transformer. 2: We proposed a new data type called Pseudo-Image Data (PID) tailored for channel attention requirements and developed a cyclic sliding window method for generating PID. 3: We introduced the concept of Pseudo-Sequential Data (PSD) and a method for converting industrial datasets into PSD, which enables the RP Module to capture the underlying associations within industrial datasets more effectively. An experiment on hybrid modeling in chemical engineering was conducted using RP-CATE, and the experimental results demonstrate that RP-CATE achieves the best performance among the compared baseline models.
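The abstract does not spell out how the cyclic sliding window works, so the following is only a minimal sketch of one plausible reading: each sample starts a window of consecutive rows, and windows that run past the last sample wrap around to the first. The function name `cyclic_sliding_windows` and the output layout are assumptions for illustration, not the paper's definition of PID.

```python
import numpy as np

def cyclic_sliding_windows(data: np.ndarray, window: int) -> np.ndarray:
    """Stack wrap-around windows of `window` consecutive rows.

    data: array of shape (n_samples, n_features).
    Returns an array of shape (n_samples, window, n_features).
    """
    n = data.shape[0]
    # Row indices for each window; the modulo wraps past the last sample.
    idx = (np.arange(n)[:, None] + np.arange(window)[None, :]) % n
    return data[idx]

# Hypothetical toy dataset: 5 samples with 2 features each.
samples = np.arange(10, dtype=float).reshape(5, 2)
windows = cyclic_sliding_windows(samples, window=3)
```

Because of the wrap-around, every sample yields a full-size window, so the output keeps one window per input row instead of losing the last `window - 1` rows.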

Lightweight Multi-task CNN for ECG Diagnosis with GRU-Diffusion

Nov 18, 2025

Abstract:With the increasing demand for real-time Electrocardiogram (ECG) classification on edge devices, existing models face challenges of high computational cost and limited accuracy on imbalanced datasets. This paper presents Multi-task DFNet, a lightweight multi-task framework for ECG classification across the MIT-BIH Arrhythmia Database and the PTB Diagnostic ECG Database, enabling efficient task collaboration by dynamically sharing knowledge across tasks, such as arrhythmia detection, myocardial infarction (MI) classification, and other cardiovascular abnormalities. The proposed method integrates GRU-augmented Diffusion, where the GRU is embedded within the diffusion model to better capture temporal dependencies and generate high-quality synthetic signals for imbalanced classes. The experimental results show that Multi-task DFNet achieves 99.72% and 99.89% accuracy on the MIT-BIH dataset and PTB dataset, respectively, with significantly fewer parameters compared to traditional models, making it suitable for deployment on wearable ECG monitors. This work offers a compact and efficient solution for multi-task ECG diagnosis, with promising potential for edge healthcare applications on resource-constrained devices.

EmbodiedOneVision: Interleaved Vision-Text-Action Pretraining for General Robot Control

Aug 28, 2025

Abstract:The human ability to seamlessly perform multimodal reasoning and physical interaction in the open world is a core goal for general-purpose embodied intelligent systems. Recent vision-language-action (VLA) models, which are co-trained on large-scale robot and visual-text data, have demonstrated notable progress in general robot control. However, they still fail to achieve human-level flexibility in interleaved reasoning and interaction. In this work, we introduce EO-Robotics, which consists of the EO-1 model and the EO-Data1.5M dataset. EO-1 is a unified embodied foundation model that achieves superior performance in multimodal embodied reasoning and robot control through interleaved vision-text-action pre-training. The development of EO-1 is based on two key pillars: (i) a unified architecture that processes multimodal inputs indiscriminately (image, text, video, and action), and (ii) a massive, high-quality multimodal embodied reasoning dataset, EO-Data1.5M, which contains over 1.5 million samples with emphasis on interleaved vision-text-action comprehension. EO-1 is trained through synergies between auto-regressive decoding and flow matching denoising on EO-Data1.5M, enabling seamless robot action generation and multimodal embodied reasoning. Extensive experiments demonstrate the effectiveness of interleaved vision-text-action learning for open-world understanding and generalization, validated through a variety of long-horizon, dexterous manipulation tasks across multiple embodiments. This paper details the architecture of EO-1, the data construction strategy of EO-Data1.5M, and the training methodology, offering valuable insights for developing advanced embodied foundation models.

TR-PTS: Task-Relevant Parameter and Token Selection for Efficient Tuning

Jul 30, 2025

Abstract:Large pre-trained models achieve remarkable performance in vision tasks but are impractical for fine-tuning due to high computational and storage costs. Parameter-Efficient Fine-Tuning (PEFT) methods mitigate this issue by updating only a subset of parameters; however, most existing approaches are task-agnostic, failing to fully exploit task-specific adaptations, which leads to suboptimal efficiency and performance. To address this limitation, we propose Task-Relevant Parameter and Token Selection (TR-PTS), a task-driven framework that enhances both computational efficiency and accuracy. Specifically, we introduce Task-Relevant Parameter Selection, which utilizes the Fisher Information Matrix (FIM) to identify and fine-tune only the most informative parameters in a layer-wise manner, while keeping the remaining parameters frozen. Simultaneously, Task-Relevant Token Selection dynamically preserves the most informative tokens and merges redundant ones, reducing computational overhead. By jointly optimizing parameters and tokens, TR-PTS enables the model to concentrate on task-discriminative information. We evaluate TR-PTS on benchmarks including FGVC and VTAB-1k, where it achieves state-of-the-art performance, surpassing full fine-tuning by 3.40% and 10.35%, respectively. The code is available at https://github.com/synbol/TR-PTS.
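As a rough illustration of Fisher-based parameter selection, the sketch below scores each weight in one layer by the empirical diagonal Fisher information (the mean squared per-sample gradient) and marks the top-k weights as trainable. The helper names, the per-layer budget `k`, and the toy gradients are assumptions for illustration; the actual TR-PTS selection rule is in the linked repository and may differ.

```python
import numpy as np

def diagonal_fisher(per_sample_grads: np.ndarray) -> np.ndarray:
    """Empirical diagonal Fisher: mean of squared per-sample gradients."""
    return np.mean(per_sample_grads ** 2, axis=0)

def select_top_k_mask(fisher: np.ndarray, k: int) -> np.ndarray:
    """Boolean mask marking the k entries with the largest Fisher score."""
    flat = fisher.ravel()
    top = np.argsort(flat)[flat.size - k:]  # indices of the k largest scores
    mask = np.zeros(flat.size, dtype=bool)
    mask[top] = True
    return mask.reshape(fisher.shape)

# Hypothetical per-sample gradients for one layer: 4 samples, 2x3 weights.
grads = np.ones((4, 2, 3))
grads[:, 0, 1] = 3.0  # this weight has the largest gradients
grads[:, 1, 2] = 2.0  # this one the second largest

fisher = diagonal_fisher(grads)
trainable = select_top_k_mask(fisher, k=2)  # True = fine-tune, False = frozen
```

Applying the mask separately to each layer gives the layer-wise behaviour the abstract describes: weights where `trainable` is `False` stay frozen during fine-tuning.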

Focus on Local: Finding Reliable Discriminative Regions for Visual Place Recognition

Apr 14, 2025

Abstract:Visual Place Recognition (VPR) aims to predict the location of a query image by referencing a database of geotagged images. In the VPR task, a few discriminative local regions in an image often have an outsized effect, while mundane background regions contribute nothing or even cause perceptual aliasing because they overlap easily across places. However, existing methods lack precise modeling and full exploitation of these discriminative regions. In this paper, we propose the Focus on Local (FoL) approach to improve the performance of image retrieval and re-ranking in VPR simultaneously by mining and exploiting reliable discriminative local regions in images and introducing pseudo-correlation supervision. First, we design two losses, Extraction-Aggregation Spatial Alignment Loss (SAL) and Foreground-Background Contrast Enhancement Loss (CEL), to explicitly model reliable discriminative local regions and use them to guide the generation of global representations and efficient re-ranking. Second, we introduce a weakly-supervised local feature training strategy based on pseudo-correspondences obtained from aggregating global features to alleviate the lack of ground-truth local correspondences for the VPR task. Third, we propose an efficient and precise re-ranking pipeline guided by discriminative regions. Finally, experimental results show that FoL achieves state-of-the-art results on multiple VPR benchmarks in both the image retrieval and re-ranking stages and also significantly outperforms existing two-stage VPR methods in terms of computational efficiency. Code and models are available at https://github.com/chenshunpeng/FoL

AHSG: Adversarial Attacks on High-level Semantics in Graph Neural Networks

Dec 10, 2024

Abstract:Graph Neural Networks (GNNs) have garnered significant interest among researchers due to their impressive performance in graph learning tasks. However, like other deep neural networks, GNNs are also vulnerable to adversarial attacks. In existing adversarial attack methods for GNNs, the metric between the attacked graph and the original graph is usually the attack budget or a measure of global graph properties. However, we have found that it is possible to generate attack graphs that disrupt the primary semantics even within these constraints. To address this problem, we propose Adversarial Attacks on High-level Semantics in Graph Neural Networks (AHSG), a graph structure attack model that ensures the retention of primary semantics. The latent representations of each node can extract rich semantic information by applying convolutional operations on graph data. These representations contain both task-relevant primary semantic information and task-irrelevant secondary semantic information. The latent representations of same-class nodes with the same primary semantics can fulfill the objective of modifying secondary semantics while preserving the primary semantics. Finally, the latent representations with attack effects are mapped to an attack graph using the Projected Gradient Descent (PGD) algorithm. By attacking graph deep learning models equipped with advanced defense strategies, we validate that AHSG has superior attack effectiveness compared to other attack methods. Additionally, we employ Contextual Stochastic Block Models (CSBMs) as a proxy for the primary semantics to detect the attacked graph, confirming that AHSG barely disrupts the original primary semantics of the graph.

Fast Track to Winning Tickets: Repowering One-Shot Pruning for Graph Neural Networks

Dec 10, 2024

Abstract:Graph Neural Networks (GNNs) demonstrate superior performance in various graph learning tasks, yet their wider real-world application is hindered by the computational overhead when applied to large-scale graphs. To address the issue, the Graph Lottery Hypothesis (GLT) has been proposed, advocating the identification of subgraphs and subnetworks, i.e., winning tickets, without compromising performance. The effectiveness of current GLT methods largely stems from the use of iterative magnitude pruning (IMP), which offers higher stability and better performance than one-shot pruning. However, identifying GLTs is highly computationally expensive, due to the iterative pruning and retraining required by IMP. In this paper, we reevaluate the correlation between one-shot pruning and IMP: while one-shot tickets are suboptimal compared to IMP, they offer a fast track to tickets with a stronger performance. We introduce a one-shot pruning and denoising framework to validate the efficacy of the fast track. Compared to current IMP-based GLT methods, our framework achieves a double-win situation of graph lottery tickets with higher sparsity and faster speeds. Through extensive experiments across 4 backbones and 6 datasets, our method demonstrates a 1.32% to 45.62% improvement in weight sparsity and a 7.49% to 22.71% increase in graph sparsity, along with a 1.7× to 44× speedup over IMP-based methods and 95.3% to 98.6% MAC savings.
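To make the contrast with IMP concrete, here is a minimal sketch of plain one-shot magnitude pruning on a weight matrix: the target sparsity is reached in a single step by dropping the smallest-magnitude entries, whereas IMP would repeat smaller prune-and-retrain rounds. The function name and the toy weights are hypothetical, and this sketch covers only the generic one-shot step, not the denoising stage or the graph (edge) pruning of the proposed framework.

```python
import numpy as np

def one_shot_magnitude_mask(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Keep-mask that drops the `sparsity` fraction of smallest-|w| entries."""
    flat = np.abs(weights).ravel()
    n_prune = int(round(sparsity * flat.size))
    order = np.argsort(flat)            # ascending magnitude
    mask = np.ones(flat.size, dtype=bool)
    mask[order[:n_prune]] = False       # prune the smallest magnitudes
    return mask.reshape(weights.shape)

# Hypothetical trained weights for a single layer.
weights = np.array([[0.5, -0.1, 2.0],
                    [-0.05, 1.5, 0.3]])
mask = one_shot_magnitude_mask(weights, sparsity=0.5)
pruned = weights * mask  # surviving weights; pruned entries become zero
```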

Error estimates between SGD with momentum and underdamped Langevin diffusion

Oct 22, 2024

Abstract:Stochastic gradient descent with momentum is a popular variant of stochastic gradient descent, which has recently been reported to have a close relationship with the underdamped Langevin diffusion. In this paper, we establish a quantitative error estimate between them in the 1-Wasserstein and total variation distances.
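For orientation, the objects named in this abstract can be written in their common textbook forms. The notation below (step size $\eta$, momentum coefficient $\beta$, friction $\gamma$, objective $f$, data sample $\xi_k$, and unit temperature) is an assumption for illustration; the paper's own scaling and parametrization may differ.

```latex
% SGD with momentum (heavy-ball form), with stochastic gradient \nabla f(x_k;\xi_k):
\begin{aligned}
v_{k+1} &= \beta\, v_k - \eta\, \nabla f(x_k;\xi_k), \\
x_{k+1} &= x_k + v_{k+1}.
\end{aligned}

% Underdamped Langevin diffusion with friction \gamma > 0 and Brownian motion B_t:
\begin{aligned}
\mathrm{d}X_t &= V_t\, \mathrm{d}t, \\
\mathrm{d}V_t &= -\gamma V_t\, \mathrm{d}t - \nabla f(X_t)\, \mathrm{d}t
                 + \sqrt{2\gamma}\, \mathrm{d}B_t.
\end{aligned}

% Distances between probability measures \mu and \nu:
W_1(\mu,\nu) = \inf_{\pi \in \Pi(\mu,\nu)} \int \lvert x - y \rvert \, \mathrm{d}\pi(x,y),
\qquad
\lVert \mu - \nu \rVert_{\mathrm{TV}} = \sup_{A} \lvert \mu(A) - \nu(A) \rvert .
```

Here $\Pi(\mu,\nu)$ denotes the set of couplings of $\mu$ and $\nu$, and the supremum runs over measurable sets $A$.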

LATEX-GCL: Large Language Models (LLMs)-Based Data Augmentation for Text-Attributed Graph Contrastive Learning

Sep 02, 2024

Abstract:Graph Contrastive Learning (GCL) is a potent paradigm for self-supervised graph learning that has attracted attention across various application scenarios. However, GCL for learning on Text-Attributed Graphs (TAGs) has yet to be explored, because conventional augmentation techniques like feature embedding masking cannot directly process textual attributes on TAGs. A naive strategy for applying GCL to TAGs is to encode the textual attributes into feature embeddings via a language model and then feed the embeddings into the following GCL module for processing. Such a strategy faces three key challenges: I) failure to avoid information loss, II) semantic loss during the text encoding phase, and III) implicit augmentation constraints that lead to uncontrollable and incomprehensible results. In this paper, we propose a novel GCL framework named LATEX-GCL, which uses Large Language Models (LLMs) to produce textual augmentations and leverages their powerful natural language processing (NLP) abilities to address the three aforementioned limitations, paving the way for applying GCL to TAG tasks. Extensive experiments on four high-quality TAG datasets illustrate the superiority of the proposed LATEX-GCL method. The source code and datasets are released to ease reproducibility and can be accessed via this link: https://anonymous.4open.science/r/LATEX-GCL-0712.

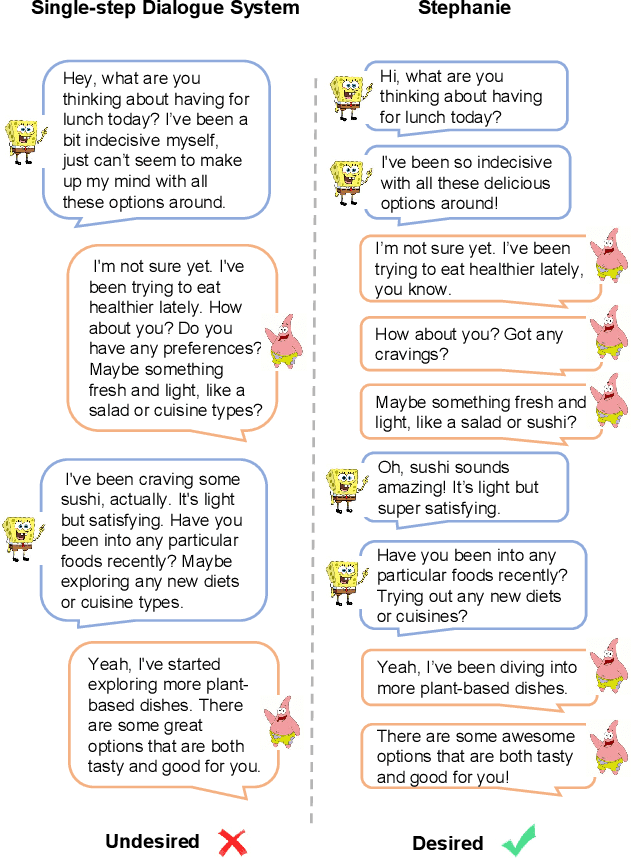

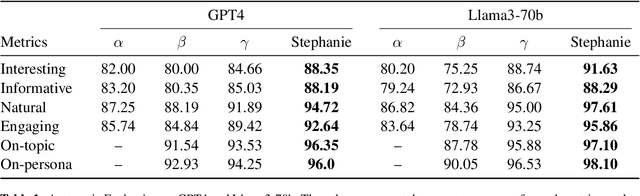

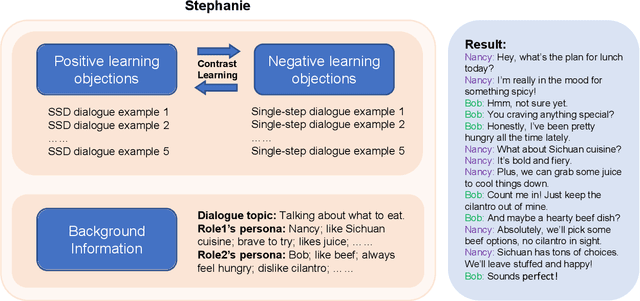

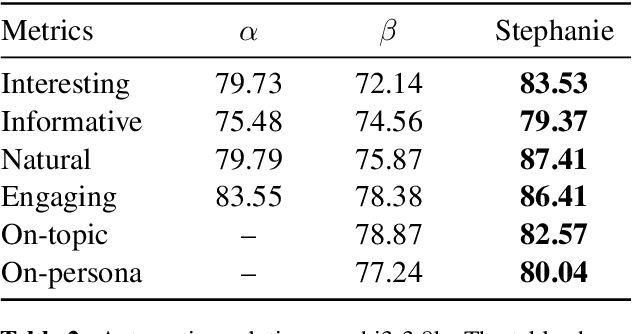

Stephanie: Step-by-Step Dialogues for Mimicking Human Interactions in Social Conversations

Jul 04, 2024

Abstract:In the rapidly evolving field of natural language processing, dialogue systems primarily employ a single-step dialogue paradigm. Although this paradigm is efficient, it lacks the depth and fluidity of human interactions and does not appear natural. We introduce a novel Step-by-Step Dialogue Paradigm (Stephanie), designed to mimic the ongoing dynamic nature of human conversations. By employing a dual learning strategy and a further-split post-editing method, we generated and utilized a high-quality step-by-step dialogue dataset to fine-tune existing large language models, enabling them to perform step-by-step dialogues. We thoroughly present Stephanie. Tailored automatic and human evaluations are conducted to assess its effectiveness compared to the traditional single-step dialogue paradigm. We will release code, Stephanie datasets, and Stephanie LLMs to facilitate future chatbot development.