Zhiyang Liu

USS-Nav: Unified Spatio-Semantic Scene Graph for Lightweight UAV Zero-Shot Object Navigation

Feb 03, 2026

Abstract:Zero-Shot Object Navigation in unknown environments poses significant challenges for Unmanned Aerial Vehicles (UAVs) due to the conflict between high-level semantic reasoning requirements and limited onboard computational resources. To address this, we present USS-Nav, a lightweight framework that incrementally constructs a Unified Spatio-Semantic scene graph and enables efficient Large Language Model (LLM)-augmented Zero-Shot Object Navigation in unknown environments. Specifically, we introduce an incremental Spatial Connectivity Graph generation method utilizing polyhedral expansion to capture global geometric topology, which is dynamically partitioned into semantic regions via graph clustering. Concurrently, open-vocabulary object semantics are instantiated and anchored to this topology to form a hierarchical environmental representation. Leveraging this hierarchical structure, we present a coarse-to-fine exploration strategy: an LLM grounded in the scene graph's semantics determines global target regions, while a local planner optimizes frontier coverage based on information gain. Experimental results demonstrate that our framework outperforms state-of-the-art methods in terms of computational efficiency and real-time update frequency (15 Hz) on a resource-constrained platform. Furthermore, ablation studies confirm the effectiveness of our framework, showing substantial improvements in Success weighted by Path Length (SPL). The source code will be made publicly available to foster further research.
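
For readers unfamiliar with the SPL metric mentioned in the abstract above, the following is a minimal sketch of its commonly used definition: the mean over episodes of the success indicator times the ratio of the shortest-path length to the larger of the shortest-path length and the path actually travelled. The function name `spl` and its argument names are illustrative assumptions and are not taken from the USS-Nav code.

```python
from typing import Sequence


def spl(
    successes: Sequence[bool],
    shortest_lengths: Sequence[float],
    path_lengths: Sequence[float],
) -> float:
    """Success weighted by Path Length, averaged over episodes.

    Illustrative helper (not from the paper's code). Each episode
    contributes S_i * l_i / max(p_i, l_i), where S_i is the success flag,
    l_i the shortest-path length and p_i the length actually travelled.
    """
    if not (len(successes) == len(shortest_lengths) == len(path_lengths)):
        raise ValueError("all inputs must have the same number of episodes")
    if len(successes) == 0:
        raise ValueError("at least one episode is required")

    total = 0.0
    for success, shortest, travelled in zip(
        successes, shortest_lengths, path_lengths
    ):
        denominator = max(travelled, shortest)
        # Failed episodes (and degenerate zero-length ones) contribute 0.
        if success and denominator > 0:
            total += shortest / denominator
    return total / len(successes)


if __name__ == "__main__":
    # Three episodes: optimal success, success with a 2x detour, failure.
    print(spl([True, True, False], [10.0, 10.0, 5.0], [10.0, 20.0, 5.0]))
```

Because a failed episode contributes zero regardless of path length, SPL rewards both reaching the target and doing so efficiently.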

SeLIP: Similarity Enhanced Contrastive Language Image Pretraining for Multi-modal Head MRI

Mar 25, 2025

Abstract:Although deep learning (DL) methods have shown tremendous potential in many medical image analysis tasks, the practical application of medical DL models is limited by the lack of sufficient manually annotated data samples. Noting that clinical radiology examinations are accompanied by radiology reports that describe the images, we propose to develop a foundation model for multi-modal head MRI by using contrastive learning on the images and the corresponding radiology findings. In particular, a contrastive learning framework is proposed, in which a mixed syntax and semantic similarity matching metric is integrated to reduce the need for the extremely large datasets required by conventional contrastive learning frameworks. Our proposed similarity enhanced contrastive language image pretraining (SeLIP) is able to effectively extract more useful features. Experiments revealed that SeLIP performs well in many downstream tasks, including image-text retrieval, classification, and image segmentation, which highlights the importance of considering the similarities among texts describing different images when developing medical image foundation models.
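
The abstract does not spell out how the text similarity enters the contrastive objective. One plausible reading, sketched below purely as an assumption, is to soften the usual one-hot image-to-text targets with a row-normalised text-to-text similarity matrix, so that reports describing similar findings are not treated as hard negatives. The function names, the blending weight `alpha`, and the use of a single image-to-text direction are all hypothetical and should not be read as the SeLIP formulation.

```python
import math
from typing import List, Sequence


def softmax(row: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one row of logits."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def soft_target_contrastive_loss(
    logits: Sequence[Sequence[float]],
    text_similarity: Sequence[Sequence[float]],
    alpha: float = 0.5,
) -> float:
    """Image-to-text cross-entropy with similarity-softened targets.

    Hypothetical sketch: logits[i][j] scores image i against text j, and
    text_similarity[i][j] >= 0 scores text i against text j. The target
    for image i blends the one-hot label with the normalised similarity
    row; alpha = 0 recovers the standard one-hot contrastive loss.
    """
    n = len(logits)
    loss = 0.0
    for i in range(n):
        probs = softmax(logits[i])
        sim_total = sum(text_similarity[i])
        if sim_total <= 0:
            raise ValueError("each similarity row must have a positive sum")
        for j in range(n):
            one_hot = 1.0 if i == j else 0.0
            target = (1.0 - alpha) * one_hot + alpha * text_similarity[i][j] / sim_total
            loss -= target * math.log(probs[j])
    return loss / n


if __name__ == "__main__":
    logits = [[4.0, 1.0], [0.5, 3.0]]
    similarity = [[1.0, 0.2], [0.2, 1.0]]
    print(soft_target_contrastive_loss(logits, similarity, alpha=0.3))
```

A symmetric version would add the same term computed over the transposed logits for the text-to-image direction.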

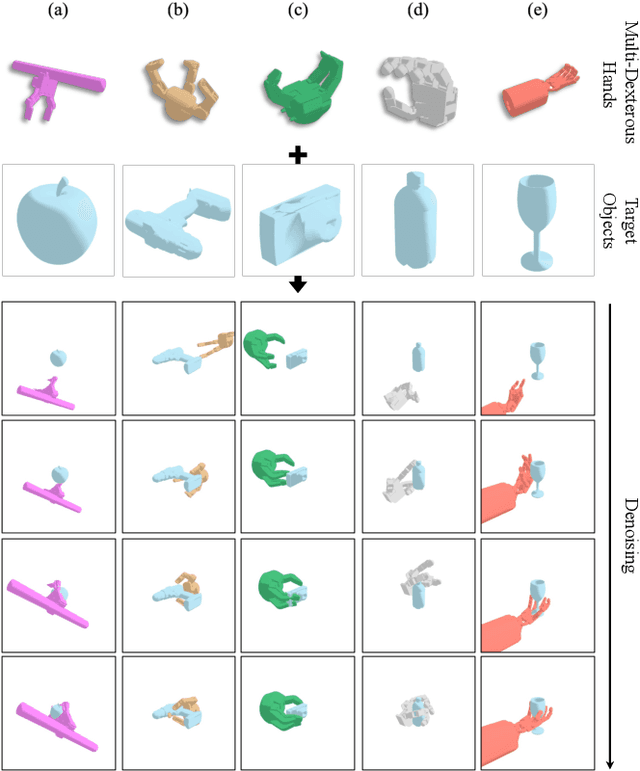

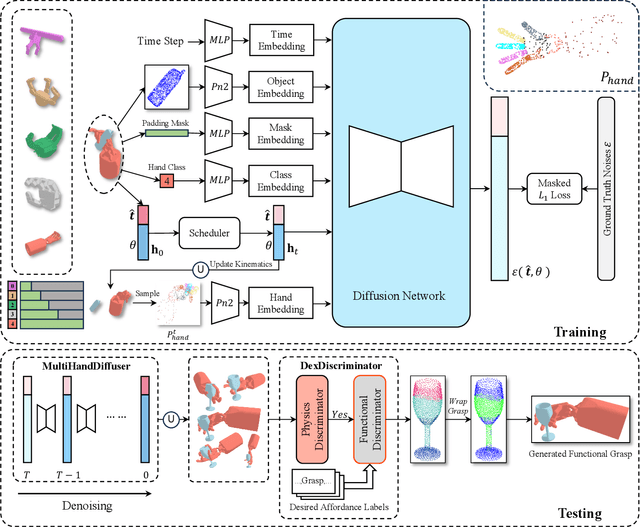

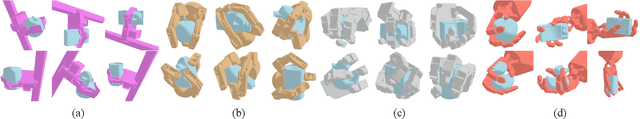

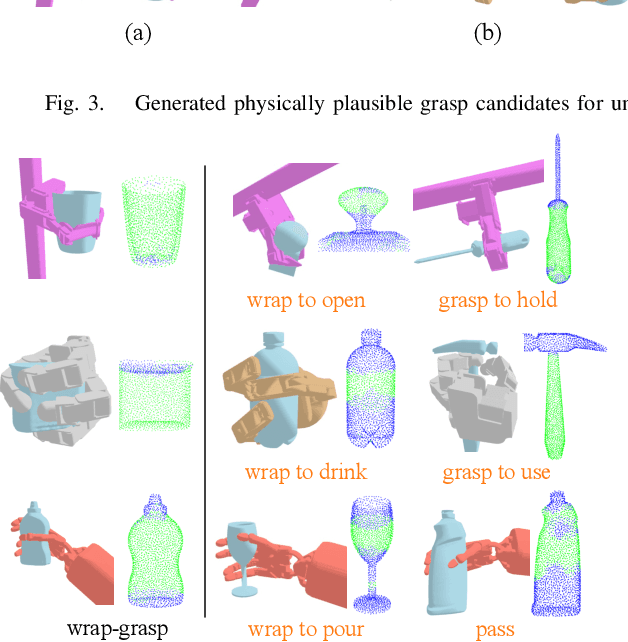

DexGrasp-Diffusion: Diffusion-based Unified Functional Grasp Synthesis Pipeline for Multi-Dexterous Robotic Hands

Jul 13, 2024

Abstract:The versatility and adaptability of human grasping catalyze advancing dexterous robotic manipulation. While significant strides have been made in dexterous grasp generation, current research endeavors pivot towards optimizing object manipulation while ensuring functional integrity, emphasizing the synthesis of functional grasps following desired affordance instructions. This paper addresses the challenge of synthesizing functional grasps tailored to diverse dexterous robotic hands by proposing DexGrasp-Diffusion, an end-to-end modularized diffusion-based pipeline. DexGrasp-Diffusion integrates MultiHandDiffuser, a novel unified data-driven diffusion model for multi-dexterous hands grasp estimation, with DexDiscriminator, which employs a Physics Discriminator and a Functional Discriminator with open-vocabulary setting to filter physically plausible functional grasps based on object affordances. The experimental evaluation conducted on the MultiDex dataset provides substantiating evidence supporting the superior performance of MultiHandDiffuser over the baseline model in terms of success rate, grasp diversity, and collision depth. Moreover, we demonstrate the capacity of DexGrasp-Diffusion to reliably generate functional grasps for household objects aligned with specific affordance instructions.

You Only Scan Once: A Dynamic Scene Reconstruction Pipeline for 6-DoF Robotic Grasping of Novel Objects

Apr 04, 2024

Abstract:In the realm of robotic grasping, achieving accurate and reliable interactions with the environment is a pivotal challenge. Traditional grasp planning methods that use partial point clouds derived from depth images often suffer from reduced scene understanding due to occlusion, ultimately impeding their grasping accuracy. Furthermore, scene reconstruction methods have primarily relied upon static techniques, which are susceptible to environment changes during the manipulation process, limiting their efficacy in real-time grasping tasks. To address these limitations, this paper introduces a novel two-stage pipeline for dynamic scene reconstruction. In the first stage, our approach takes scene scanning as input to register each target object with mesh reconstruction and novel object pose tracking. In the second stage, pose tracking is still performed to provide object poses in real-time, enabling our approach to transform the reconstructed object point clouds back into the scene. Unlike conventional methodologies, which rely on static scene snapshots, our method continuously captures the evolving scene geometry, resulting in a comprehensive and up-to-date point cloud representation. By circumventing the constraints posed by occlusion, our method enhances the overall grasp planning process and empowers state-of-the-art 6-DoF robotic grasping algorithms to exhibit markedly improved accuracy.

DR-Pose: A Two-stage Deformation-and-Registration Pipeline for Category-level 6D Object Pose Estimation

Sep 05, 2023

Abstract:Category-level object pose estimation involves estimating the 6D pose and the 3D metric size of objects from predetermined categories. While recent approaches take categorical shape prior information as reference to improve pose estimation accuracy, the single-stage network design and training manner lead to sub-optimal performance since there are two distinct tasks in the pipeline. In this paper, the advantage of a two-stage pipeline over a single-stage design is discussed. To this end, we propose a two-stage deformation-and-registration pipeline called DR-Pose, which consists of a completion-aided deformation stage and a scaled registration stage. The first stage uses a point cloud completion method to generate unseen parts of the target object, guiding subsequent deformation on the shape prior. In the second stage, a novel registration network is designed to extract pose-sensitive features and predict the representation of the object's partial point cloud in canonical space based on the deformation results from the first stage. DR-Pose produces superior results to the state-of-the-art shape prior-based methods on both CAMERA25 and REAL275 benchmarks. Codes are available at https://github.com/Zray26/DR-Pose.git.

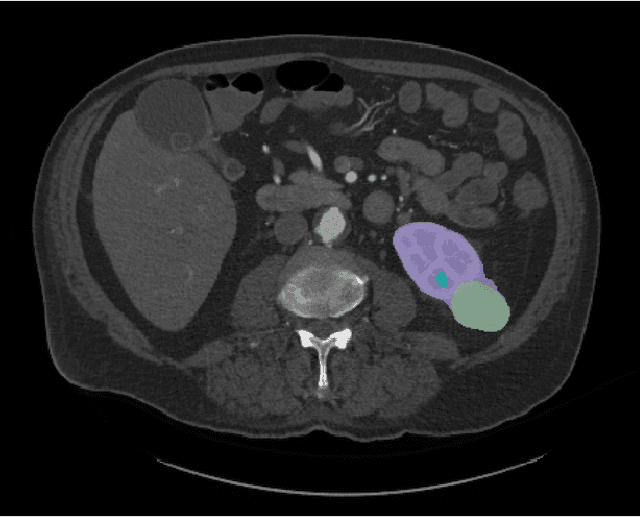

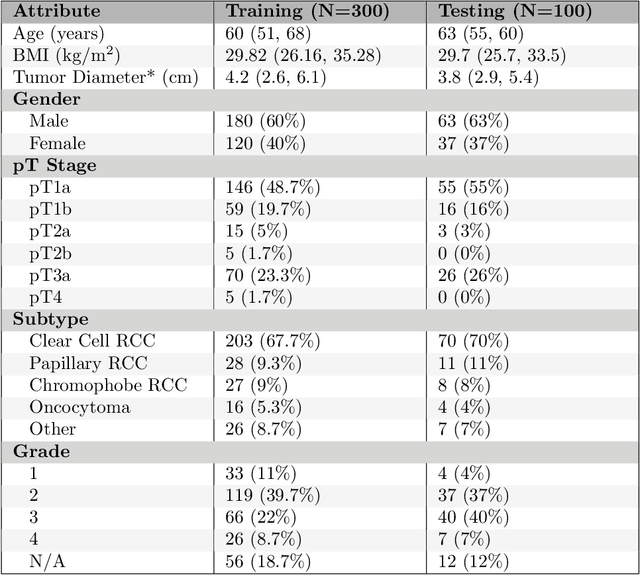

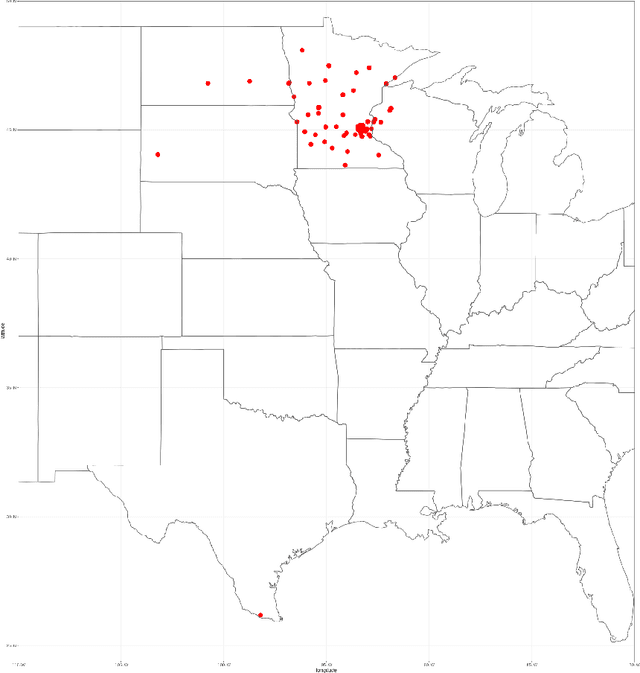

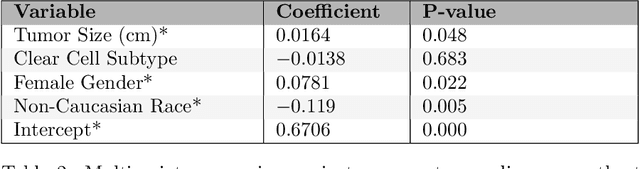

The KiTS21 Challenge: Automatic segmentation of kidneys, renal tumors, and renal cysts in corticomedullary-phase CT

Jul 05, 2023

Abstract:This paper presents the challenge report for the 2021 Kidney and Kidney Tumor Segmentation Challenge (KiTS21) held in conjunction with the 2021 international conference on Medical Image Computing and Computer Assisted Interventions (MICCAI). KiTS21 is a sequel to its first edition in 2019, and it features a variety of innovations in how the challenge was designed, in addition to a larger dataset. A novel annotation method was used to collect three separate annotations for each region of interest, and these annotations were performed in a fully transparent setting using a web-based annotation tool. Further, the KiTS21 test set was collected from an outside institution, challenging participants to develop methods that generalize well to new populations. Nonetheless, the top-performing teams achieved a significant improvement over the state of the art set in 2019, and this performance is shown to inch ever closer to human-level performance. An in-depth meta-analysis is presented describing which methods were used and how they fared on the leaderboard, as well as the characteristics of which cases generally saw good performance, and which did not. Overall, KiTS21 facilitated a significant advancement in the state of the art in kidney tumor segmentation, and provides useful insights that are applicable to the field of semantic segmentation as a whole.

Learning-Free Grasping of Unknown Objects Using Hidden Superquadrics

May 24, 2023

Abstract:Robotic grasping is an essential and fundamental task and has been studied extensively over the past several decades. Traditional work analyzes physical models of the objects and computes force-closure grasps. Such methods require prior knowledge of the complete 3D model of an object, which can be hard to obtain. Recently, with significant progress in machine learning, data-driven methods have dominated the area. Although impressive improvements have been achieved, those methods require a vast amount of training data and suffer from limited generalizability. In this paper, we propose a novel two-stage approach to predicting and synthesizing grasping poses directly from the point cloud of an object without database knowledge or learning. Firstly, multiple superquadrics are recovered at different positions within the object, representing the local geometric features of the object surface. Subsequently, our algorithm exploits the tri-symmetry feature of superquadrics and synthesizes a list of antipodal grasps from each recovered superquadric. An evaluation model is designed to assess and quantify the quality of each grasp candidate. The grasp candidate with the highest score is then selected as the final grasping pose. We conduct experiments on isolated and packed scenes to corroborate the effectiveness of our method. The results indicate that our method demonstrates competitive performance compared with the state-of-the-art without the need for either a full model or prior training.
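
As background for the superquadric representation used above, the sketch below evaluates the standard superquadric inside-outside function in the shape's canonical frame and, as a loudly hypothetical illustration of how three-plane symmetry can yield antipodal candidates, lists the axis-aligned contact pairs that fit within a gripper opening. The helper names and the `max_opening` parameter are assumptions; the paper's actual grasp synthesis and scoring are not reproduced here.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def inside_outside(
    point: Sequence[float],
    scales: Sequence[float],
    exponents: Sequence[float],
) -> float:
    """Standard superquadric inside-outside function F in the canonical frame.

    F < 1 means the point is inside, F == 1 on the surface, F > 1 outside.
    scales = (a1, a2, a3) are the semi-axis lengths and exponents = (e1, e2)
    are the shape parameters (both must be positive).
    """
    x, y, z = point
    a1, a2, a3 = scales
    e1, e2 = exponents
    xy_term = (abs(x / a1) ** (2.0 / e2) + abs(y / a2) ** (2.0 / e2)) ** (e2 / e1)
    return xy_term + abs(z / a3) ** (2.0 / e1)


def axis_aligned_antipodal_pairs(
    scales: Sequence[float], max_opening: float
) -> List[Tuple[Point, Point]]:
    """Hypothetical helper: contact pairs along each principal axis.

    A superquadric is symmetric about its three coordinate planes, so the
    points (+a_i, -a_i) on axis i are opposite surface points. A pair is
    kept only if its width 2 * a_i fits within the gripper opening.
    """
    pairs: List[Tuple[Point, Point]] = []
    for axis, half_width in enumerate(scales):
        if 2.0 * half_width <= max_opening:
            first = [0.0, 0.0, 0.0]
            second = [0.0, 0.0, 0.0]
            first[axis] = half_width
            second[axis] = -half_width
            pairs.append((tuple(first), tuple(second)))
    return pairs


if __name__ == "__main__":
    scales = (0.02, 0.05, 0.10)  # semi-axes in metres (illustrative values)
    for contact_a, contact_b in axis_aligned_antipodal_pairs(scales, max_opening=0.08):
        print(contact_a, contact_b, inside_outside(contact_a, scales, (1.0, 1.0)))
```

With exponents (1, 1) the shape is an ellipsoid; smaller exponents give box-like shapes, which is why a handful of superquadrics can approximate local object geometry.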

GET-DIPP: Graph-Embedded Transformer for Differentiable Integrated Prediction and Planning

Nov 11, 2022

Abstract:Accurately predicting interactive road agents' future trajectories and planning a socially compliant and human-like trajectory accordingly are important for autonomous vehicles. In this paper, we propose a planning-centric prediction neural network, which takes surrounding agents' historical states and map context information as input, and outputs the joint multi-modal prediction trajectories for surrounding agents, as well as a sequence of control commands for the ego vehicle by imitation learning. An agent-agent interaction module along the time axis is proposed in our network architecture to better comprehend the relationship among all the other intelligent agents on the road. To incorporate the map's topological information, a Dynamic Graph Convolutional Neural Network (DGCNN) is employed to process the road network topology. Besides, the whole architecture can serve as a backbone for the Differentiable Integrated motion Prediction with Planning (DIPP) method by providing accurate prediction results and initial planning commands. Experiments are conducted on real-world datasets to demonstrate the improvements made by our proposed method in both planning and prediction accuracy compared to the previous state-of-the-art methods.

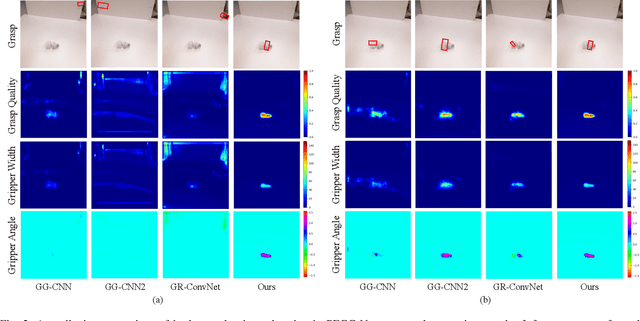

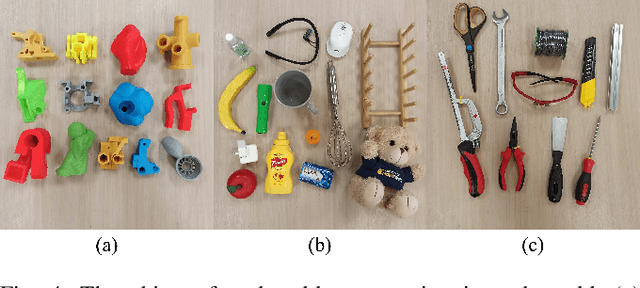

PEGG-Net: Background Agnostic Pixel-Wise Efficient Grasp Generation Under Closed-Loop Conditions

Mar 30, 2022

Abstract:Performing closed-loop grasping at close proximity to an object requires a large field of view. However, such images will inevitably bring large amounts of unnecessary background information, especially when the camera is far away from the target object at the initial stage, resulting in performance degradation of the grasping network. To address this problem, we design a novel PEGG-Net, a real-time, pixel-wise, robotic grasp generation network. The proposed lightweight network is inherently able to learn to remove background noise that can reduce grasping accuracy. Our proposed PEGG-Net achieves improved state-of-the-art performance on both Cornell dataset (98.9%) and Jacquard dataset (93.8%). In the real-world tests, PEGG-Net can support closed-loop grasping at up to 50Hz using an image size of 480x480 in dynamic environments. The trained model also generalizes to previously unseen objects with complex geometrical shapes, household objects and workshop tools and achieved an overall grasp success rate of 91.2% in our real-world grasping experiments.

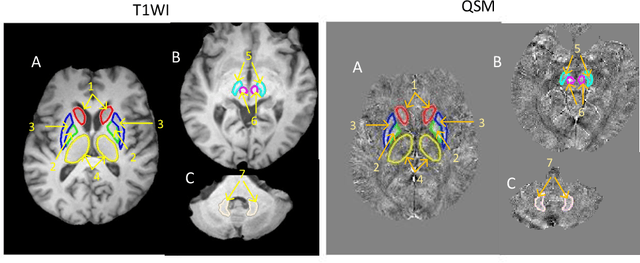

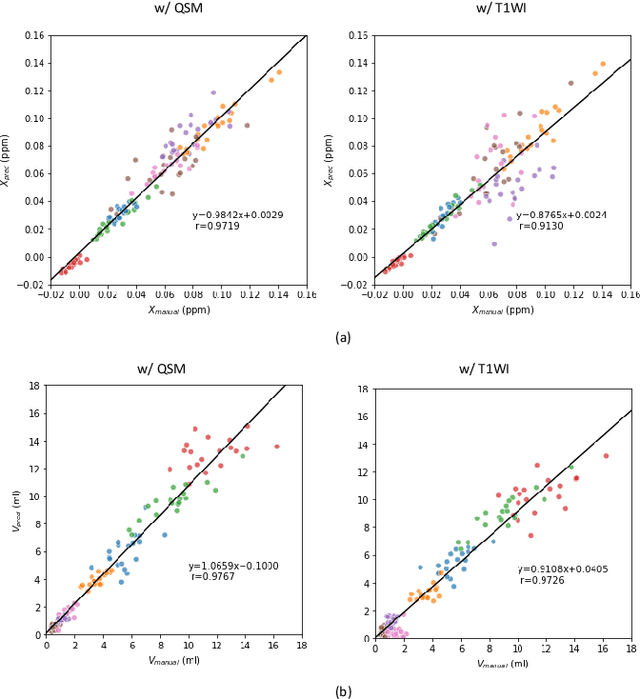

Automated Segmentation of Brain Gray Matter Nuclei on Quantitative Susceptibility Mapping Using Deep Convolutional Neural Network

Aug 03, 2020

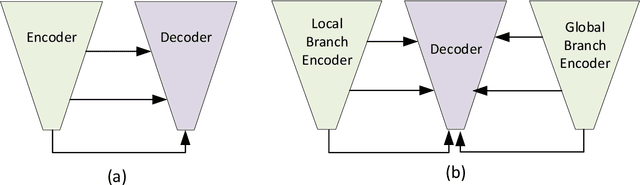

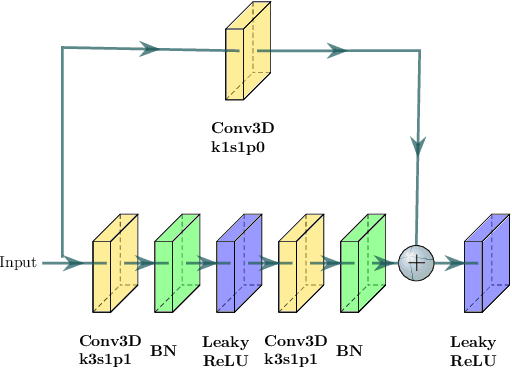

Abstract:Abnormal iron accumulation in the brain subcortical nuclei, which can be measured through the magnetic susceptibility obtained from quantitative susceptibility mapping (QSM), has been reported to be correlated with various neurodegenerative diseases. To quantitatively measure the magnetic susceptibility, the nuclei should be accurately segmented, which is a tedious task for clinicians. In this paper, we proposed a double-branch residual-structured U-Net (DB-ResUNet) based on a 3D convolutional neural network (CNN) to automatically segment such brain gray matter nuclei. To achieve a better trade-off between segmentation accuracy and memory efficiency, the proposed DB-ResUNet fed image patches with high resolution and patches with low resolution but a larger field of view into the local and global branches, respectively. Experimental results revealed that by jointly using QSM and T$_\text{1}$ weighted imaging (T$_\text{1}$WI) as inputs, the proposed method was able to achieve better segmentation accuracy than its single-branch counterpart, as well as the conventional atlas-based method and the classical 3D-UNet structure. The susceptibility values and the volumes were also measured, which indicated that the measurements from the proposed DB-ResUNet are highly correlated with values from the manually annotated regions of interest.
