Keng Peng Tee

RMP-YOLO: A Robust Motion Predictor for Partially Observable Scenarios even if You Only Look Once

Sep 18, 2024

Abstract: We introduce RMP-YOLO, a unified framework designed to provide robust motion predictions even with incomplete input data. Our key insight stems from the observation that complete and reliable historical trajectory data plays a pivotal role in accurate motion prediction. We therefore propose a new paradigm that reconstructs intact historical trajectories before feeding them into the prediction modules. Our approach introduces a novel scene tokenization module to enhance the extraction and fusion of spatial and temporal features. Our proposed recovery module then reconstructs agents' incomplete historical trajectories by leveraging local map topology and interactions with nearby agents, and the reconstructed, clean historical data is passed to the downstream prediction modules. Our framework effectively handles missing data of varying lengths and remains robust against observation noise while maintaining high prediction accuracy. Furthermore, the recovery module is compatible with existing prediction models, allowing seamless integration. Extensive experiments validate the effectiveness of our approach, and deployment in real-world autonomous vehicles confirms its practical utility. In the 2024 Waymo Motion Prediction Competition, RMP-YOLO achieves state-of-the-art performance, securing third place.
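
To make the "recover history first, then predict" idea concrete, here is a minimal sketch of the simplest possible recovery step: filling missing timesteps of one agent's (x, y) history by linear interpolation. This is a naive stand-in, not the RMP-YOLO recovery module; the function name `fill_missing_history` and the use of `None` to mark missing frames are assumptions for illustration.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def fill_missing_history(history: List[Optional[Point]]) -> List[Point]:
    """Fill missing (None) timesteps in one agent's observed (x, y) history.

    Hypothetical helper: interior gaps are linearly interpolated between the
    nearest observed neighbours; leading or trailing gaps copy the nearest
    observation. No map or neighbour information is used.
    """
    observed = [i for i, p in enumerate(history) if p is not None]
    if not observed:
        raise ValueError("history contains no observed points")
    filled: List[Point] = []
    for i, p in enumerate(history):
        if p is not None:
            filled.append(p)
            continue
        prev = max((j for j in observed if j < i), default=None)
        nxt = min((j for j in observed if j > i), default=None)
        if prev is None:          # leading gap: hold the first observation
            filled.append(history[nxt])
        elif nxt is None:         # trailing gap: hold the last observation
            filled.append(history[prev])
        else:                     # interior gap: straight-line interpolation
            t = (i - prev) / (nxt - prev)
            (x0, y0), (x1, y1) = history[prev], history[nxt]
            filled.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return filled


print(fill_missing_history([(0.0, 0.0), None, None, (3.0, 3.0)]))
```

Interpolation ignores road geometry and other agents, which is exactly the information the abstract says the learned recovery module exploits; the sketch only shows where such a module sits in the pipeline.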

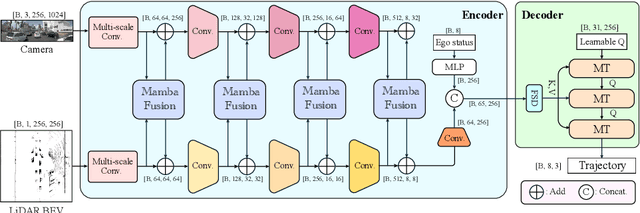

DRAMA: An Efficient End-to-end Motion Planner for Autonomous Driving with Mamba

Aug 07, 2024

Abstract: Motion planning, which generates safe and feasible trajectories in highly dynamic and complex environments, is a challenging task and a core capability for autonomous vehicles. In this paper, we propose DRAMA, the first Mamba-based end-to-end motion planner for autonomous vehicles. DRAMA fuses camera images and LiDAR Bird's Eye View (BEV) images in feature space, together with ego status information, to generate a series of future ego trajectories. Unlike traditional transformer-based methods, whose attention complexity grows quadratically with sequence length, DRAMA achieves a less computationally intensive attention complexity, demonstrating potential for handling increasingly complex scenarios. Leveraging our Mamba fusion module, DRAMA efficiently and effectively fuses camera and LiDAR features. In addition, we introduce a Mamba-Transformer decoder that enhances overall planning performance. This module is universally adaptable to any Transformer-based model, especially for tasks with long input sequences. We further introduce a novel feature state dropout that improves the planner's robustness without increasing training or inference time. Extensive experimental results show that DRAMA achieves higher accuracy on the NAVSIM dataset than the baseline Transfuser, with fewer parameters and lower computational cost.
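
The complexity contrast above comes from replacing pairwise attention with a recurrent state-space scan. The toy below is a single-channel selective scan in the general spirit of Mamba, assuming a scalar state, a fixed negative `A`, and an input-dependent step size `delta`; all parameter names and values are illustrative assumptions, and this is not DRAMA's fusion module or decoder.

```python
import math
from typing import List


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z)); always positive."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs: List[float], A: float = -1.0, B: float = 1.0,
                   C: float = 1.0, w_delta: float = 0.5) -> List[float]:
    """Toy single-channel selective state-space scan (illustrative only).

    Cost is O(L) in the sequence length L: one constant-cost state update
    per token, versus the L * L pairwise scores of full self-attention.
    """
    h = 0.0
    ys: List[float] = []
    for x in xs:
        delta = softplus(w_delta * x)   # input-dependent step size (the "selective" part)
        a_bar = math.exp(delta * A)     # zero-order-hold decay, in (0, 1) when A < 0
        b_bar = delta * B               # simplified (Euler) input scaling
        h = a_bar * h + b_bar * x       # recurrent state update
        ys.append(C * h)
    return ys


print(selective_scan([1.0, 0.5, -0.2, 0.0]))
```

In full Mamba layers `B` and `C` are also input-dependent and the state is a vector per channel; the sketch keeps only the step-size selectivity so the linear-time recurrence stays visible.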

ControlMTR: Control-Guided Motion Transformer with Scene-Compliant Intention Points for Feasible Motion Prediction

Apr 17, 2024

Abstract: The ability to accurately predict feasible multimodal future trajectories of surrounding traffic participants is crucial for behavior planning in autonomous vehicles. The Motion Transformer (MTR), a state-of-the-art motion prediction method, alleviated mode collapse and training instability and improved overall prediction performance by replacing conventional dense future endpoints with a small set of fixed prior motion intention points. However, these fixed prior intention points make MTR's multimodal prediction distribution over-scattered and infeasible in many scenarios. In this paper, we propose the ControlMTR framework to tackle these issues by generating scene-compliant intention points and additionally predicting driving control commands, which are then converted into trajectories by a simple kinematic model with soft constraints. These control-generated trajectories guide the directly predicted trajectories through an auxiliary loss function. Together with our proposed scene-compliant intention points, they effectively restrict the prediction distribution to within the road boundaries and suppress infeasible off-road predictions while enhancing prediction performance. Without resorting to additional model ensemble techniques, our method surpasses the baseline MTR model across all performance metrics, achieving a 5.22% improvement in SoftmAP and a 4.15% reduction in MissRate. Our approach also reduces MTR's cross-boundary rate by 41.85%, effectively confining the prediction distribution to the drivable area.
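
Converting predicted control commands into a trajectory can be illustrated with a standard kinematic bicycle model integrated by explicit Euler steps. The abstract does not specify ControlMTR's kinematic model, so the bicycle model, the `dt`, `wheelbase`, and `max_steer` values, and the hard steering clip (standing in for the paper's soft constraints) are all assumptions in this sketch.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class State:
    x: float    # position [m]
    y: float    # position [m]
    yaw: float  # heading [rad]
    v: float    # speed [m/s]


def rollout_bicycle(state: State, controls: List[Tuple[float, float]],
                    dt: float = 0.1, wheelbase: float = 2.8,
                    max_steer: float = 0.6) -> List[Tuple[float, float]]:
    """Integrate (acceleration, steering angle) commands into (x, y) waypoints.

    Assumed kinematic bicycle model (rear-axle reference), explicit Euler.
    The steering clip is a hard stand-in for a soft feasibility constraint.
    """
    x, y, yaw, v = state.x, state.y, state.yaw, state.v
    traj: List[Tuple[float, float]] = []
    for accel, steer in controls:
        steer = max(-max_steer, min(max_steer, steer))
        x += v * math.cos(yaw) * dt
        y += v * math.sin(yaw) * dt
        yaw += v / wheelbase * math.tan(steer) * dt
        v = max(0.0, v + accel * dt)  # no reversing in this sketch
        traj.append((x, y))
    return traj


# 1 s of gentle left steering at 10 m/s
print(rollout_bicycle(State(0.0, 0.0, 0.0, 10.0), [(0.0, 0.2)] * 10)[-1])
```

Because every waypoint comes from integrating bounded commands, the rolled-out path cannot make physically impossible jumps, which is the intuition behind using such trajectories to guide the directly regressed ones.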

GET-DIPP: Graph-Embedded Transformer for Differentiable Integrated Prediction and Planning

Nov 11, 2022

Abstract: Accurately predicting interactive road agents' future trajectories, and planning a socially compliant and human-like trajectory accordingly, are important for autonomous vehicles. In this paper, we propose a planning-centric prediction neural network that takes surrounding agents' historical states and map context information as input and outputs joint multimodal prediction trajectories for surrounding agents, as well as a sequence of control commands for the ego vehicle learned by imitation learning. Our architecture includes an agent-agent interaction module along the time axis to better capture the relationships among all other intelligent agents on the road. To incorporate the map's topological information, a Dynamic Graph Convolutional Neural Network (DGCNN) is employed to process the road network topology. In addition, the whole architecture can serve as a backbone for the Differentiable Integrated motion Prediction with Planning (DIPP) method by providing accurate prediction results and initial planning commands. Experiments on real-world datasets demonstrate that our method improves both planning and prediction accuracy compared to previous state-of-the-art methods.
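
The DGCNN mentioned above is built from EdgeConv layers: build a k-nearest-neighbour graph from the current features, compute a message per edge, and max-pool the messages at each node. The sketch below shows one such layer on plain Python lists, assuming a single linear map `weights` followed by ReLU as the edge function; the helper names and the way map points are encoded are illustrative assumptions, not GET-DIPP's actual configuration.

```python
import math
from typing import List, Sequence

Vec = List[float]


def knn(feats: Sequence[Vec], i: int, k: int) -> List[int]:
    """Indices of the k nearest neighbours of node i (excluding i), ties broken by index."""
    dists = sorted((math.dist(feats[i], f), j) for j, f in enumerate(feats) if j != i)
    return [j for _, j in dists[:k]]


def edge_conv(feats: Sequence[Vec], k: int, weights: Sequence[Vec]) -> List[Vec]:
    """One EdgeConv-style layer (illustrative sketch).

    The k-NN graph is rebuilt from the current features (the "dynamic" part);
    each edge (i, j) gets the message ReLU(W @ [f_i, f_j - f_i]) and node i
    takes the channel-wise max over its incoming messages. Requires k >= 1.
    """
    out: List[Vec] = []
    for i in range(len(feats)):
        messages = []
        for j in knn(feats, i, k):
            edge = list(feats[i]) + [fj - fi for fi, fj in zip(feats[i], feats[j])]
            messages.append([max(0.0, sum(w * e for w, e in zip(row, edge))) for row in weights])
        out.append([max(channel) for channel in zip(*messages)])
    return out


# three 1-D map points; the single output channel reads the (f_j - f_i) term
print(edge_conv([[0.0], [1.0], [3.0]], k=1, weights=[[0.0, 1.0]]))
```

Stacking such layers lets each lane point aggregate information from progressively larger neighbourhoods, and recomputing the neighbours in feature space allows connections between points that are topologically related even if they are not spatially adjacent.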

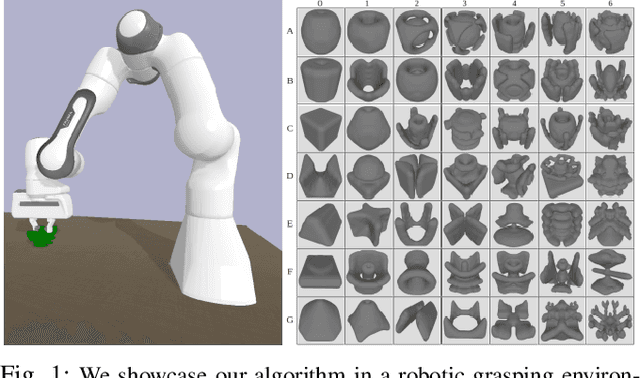

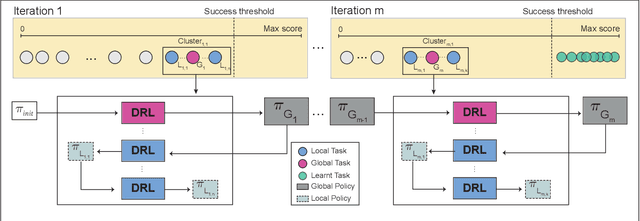

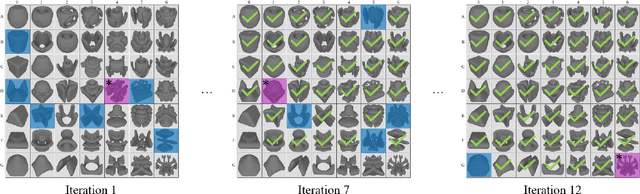

GloCAL: Glocalized Curriculum-Aided Learning of Multiple Tasks with Application to Robotic Grasping

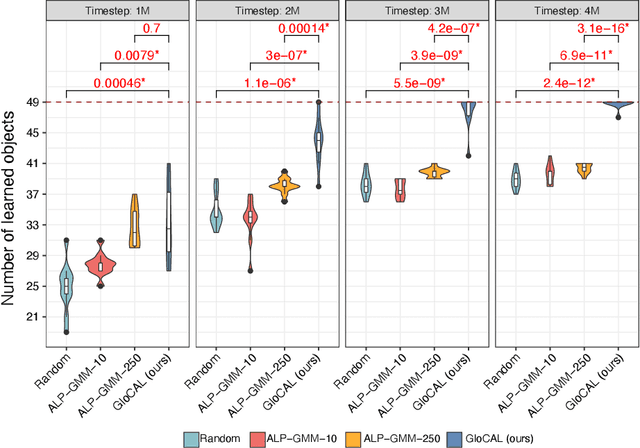

Apr 14, 2022

Abstract: Applying deep reinforcement learning to robotics is challenging because of the need for large amounts of data and for ensuring safety during learning. Curriculum learning has shown good performance in terms of sample-efficient deep learning. In this paper, we propose an algorithm (named GloCAL) that creates a curriculum for an agent to learn multiple discrete tasks by clustering tasks according to their evaluation scores. From the highest-performing cluster, a global task representative of the cluster is identified for learning a global policy that transfers to subsequently formed new clusters, while the remaining tasks in the cluster are learned as local policies. We compare the efficacy and efficiency of GloCAL with other approaches in the domain of grasp learning for 49 objects of varied object complexity and grasp difficulty from the EGAD! dataset. The results show that GloCAL learns to grasp 100% of the objects, whereas other approaches achieve at most 86% despite being given 1.5 times as much training time.
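
The clustering step above can be sketched as follows: group tasks by their evaluation scores, take the cluster with the highest mean score, choose one task as the global representative, and treat the rest as local tasks. The abstract does not say which clustering algorithm or selection rule GloCAL uses, so the 1-D k-means, the deterministic initialisation, the choice of `k`, and the "closest to the cluster mean" representative rule are all assumptions.

```python
from statistics import mean
from typing import Dict, List, Tuple


def kmeans_1d(scores: Dict[str, float], k: int, iters: int = 20) -> List[List[str]]:
    """Plain 1-D k-means over task evaluation scores (deterministic init)."""
    names = sorted(scores, key=lambda n: scores[n])
    # initialise centres at evenly spaced positions in the sorted score list
    centres = [scores[names[round(i * (len(names) - 1) / max(k - 1, 1))]] for i in range(k)]
    clusters: List[List[str]] = []
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for n in names:
            nearest = min(range(k), key=lambda c: abs(scores[n] - centres[c]))
            clusters[nearest].append(n)
        centres = [mean(scores[n] for n in cl) if cl else centres[c]
                   for c, cl in enumerate(clusters)]
    return [cl for cl in clusters if cl]


def select_global_task(scores: Dict[str, float], k: int = 2) -> Tuple[str, List[str]]:
    """Hypothetical rule: representative = task closest to the best cluster's mean."""
    clusters = kmeans_1d(scores, k)
    best = max(clusters, key=lambda cl: mean(scores[n] for n in cl))
    centre = mean(scores[n] for n in best)
    representative = min(best, key=lambda n: abs(scores[n] - centre))
    local_tasks = [n for n in best if n != representative]
    return representative, local_tasks


# made-up grasp success rates for five objects
print(select_global_task({"a": 0.9, "b": 0.85, "c": 0.8, "d": 0.1, "e": 0.2}))
```

In a curriculum loop the scores would be re-evaluated after each training phase, so the clusters, and hence the next global task, change as the agent improves.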

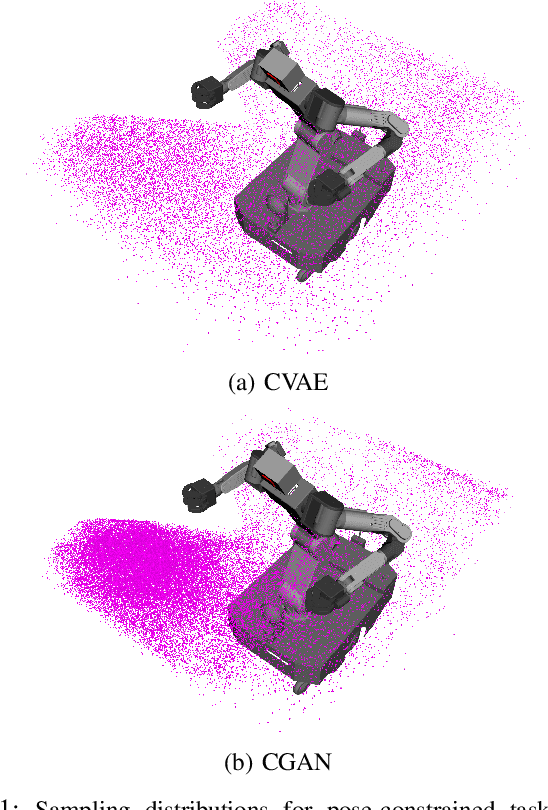

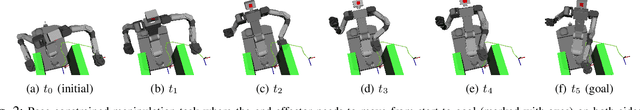

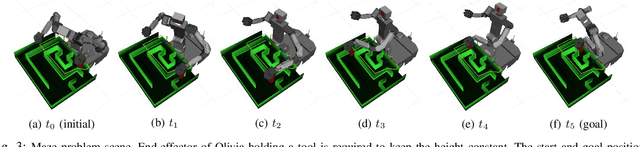

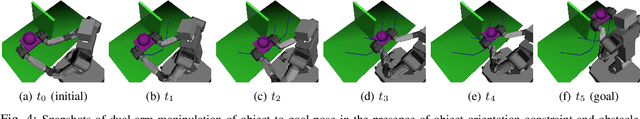

Approximating Constraint Manifolds Using Generative Models for Sampling-Based Constrained Motion Planning

Apr 14, 2022

Abstract: Sampling-based motion planning under task constraints is challenging because the null-measure constraint manifold in the configuration space makes rejection sampling extremely inefficient, if not impossible. This paper presents a learning-based sampling strategy for constrained motion planning problems. We investigate the use of two well-known deep generative models, the Conditional Variational Autoencoder (CVAE) and the Conditional Generative Adversarial Net (CGAN), to generate constraint-satisfying sample configurations. Instead of using precomputed graphs, we approximate the constraint manifold with generative models conditioned on constraint parameters. This approach allows constraint-satisfying samples to be drawn efficiently online without modifying existing sampling-based motion planning algorithms. We evaluate the efficiency of the two generative models in terms of their sampling accuracy and the coverage of their sampling distributions. Simulations and experiments are also conducted for different constraint tasks on two robotic platforms.
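
Why a null-measure constraint manifold defeats rejection sampling can be seen in a toy 2-D "configuration space": sample uniformly in the square [-1.5, 1.5]^2 and accept only points within distance `eps` of the unit circle (a 1-D manifold). The acceptance probability is the annulus area over the square area, 4πε/9, which goes to zero as the tolerance shrinks and is exactly zero for an equality constraint. The square, the circle, and the sample counts are illustrative assumptions; this is not the paper's CVAE/CGAN sampler.

```python
import math
import random


def rejection_acceptance_rate(eps: float, n: int = 50_000,
                              half_width: float = 1.5, seed: int = 0) -> float:
    """Fraction of uniform samples in the square within eps of the unit circle."""
    rng = random.Random(seed)
    accepted = 0
    for _ in range(n):
        x = rng.uniform(-half_width, half_width)
        y = rng.uniform(-half_width, half_width)
        if abs(math.hypot(x, y) - 1.0) < eps:  # tolerance-band "constraint check"
            accepted += 1
    return accepted / n


def expected_rate(eps: float, half_width: float = 1.5) -> float:
    # annulus area pi*((1+eps)^2 - (1-eps)^2) = 4*pi*eps, over square area;
    # valid while the annulus fits inside the square (eps <= half_width - 1)
    return 4.0 * math.pi * eps / (2.0 * half_width) ** 2


for eps in (0.1, 0.01, 0.001):
    print(eps, rejection_acceptance_rate(eps), expected_rate(eps))
```

A generative model that maps constraint parameters directly to near-manifold samples sidesteps this collapse, since its cost per useful sample does not depend on how thin the acceptance band is.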

KOVIS: Keypoint-based Visual Servoing with Zero-Shot Sim-to-Real Transfer for Robotics Manipulation

Jul 28, 2020

Abstract: We present KOVIS, a novel learning-based, calibration-free visual servoing method for fine robotic manipulation tasks with an eye-in-hand stereo camera system. We train the deep neural network only in a simulated environment, and the trained model can be used directly for real-world visual servoing tasks. KOVIS consists of two networks. The first, a keypoint network, learns a keypoint representation from the image using an autoencoder. The second, a visual servoing network, learns the motion based on keypoints extracted from the camera image. The two networks are trained end-to-end in the simulated environment by self-supervised learning, without manual data labeling. After training with data augmentation, domain randomization, and adversarial examples, we achieve zero-shot sim-to-real transfer to real-world robotic manipulation tasks. We demonstrate the effectiveness of the proposed method in both simulated environments and real-world experiments with different robotic manipulation tasks, including grasping, peg-in-hole insertion with 4mm clearance, and M13 screw insertion. The demo video is available at http://youtu.be/gfBJBR2tDzA
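
A common way for a keypoint network to turn a per-keypoint heatmap into coordinates while staying differentiable is a spatial soft-argmax: softmax over all pixels, then the expected pixel position. The abstract does not state that KOVIS uses this exact operator, so treat the function below, its `temperature` parameter, and the [-1, 1] coordinate normalisation as assumptions for illustration.

```python
import math
from typing import List, Tuple


def spatial_soft_argmax(heatmap: List[List[float]],
                        temperature: float = 1.0) -> Tuple[float, float]:
    """Differentiable keypoint location from one heatmap (illustrative sketch).

    Softmax over all pixels, then the expected (u, v) position, with
    u (columns) and v (rows) normalised to [-1, 1]. Needs at least 2x2 pixels.
    """
    h, w = len(heatmap), len(heatmap[0])
    if h < 2 or w < 2:
        raise ValueError("heatmap must be at least 2x2")
    m = max(max(row) for row in heatmap)  # subtract the max for numerical stability
    weights = [[math.exp((val - m) / temperature) for val in row] for row in heatmap]
    total = sum(sum(row) for row in weights)
    u = v = 0.0
    for r, row in enumerate(weights):
        for c, wgt in enumerate(row):
            u += wgt * (2.0 * c / (w - 1) - 1.0)
            v += wgt * (2.0 * r / (h - 1) - 1.0)
    return u / total, v / total


# a sharp peak in the top-right pixel lands near (u, v) = (1, -1)
print(spatial_soft_argmax([[0.0, 0.0, 100.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]))
```

Because the output is a smooth function of the heatmap values, gradients from a downstream servoing loss can flow back into the keypoint network, which is what makes end-to-end training of two stacked networks practical.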
