Approximating Constraint Manifolds Using Generative Models for Sampling-Based Constrained Motion Planning


Apr 14, 2022

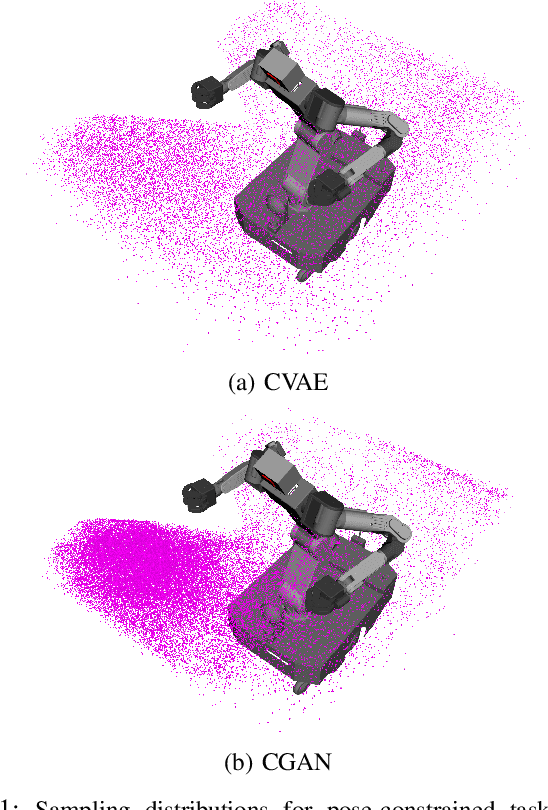

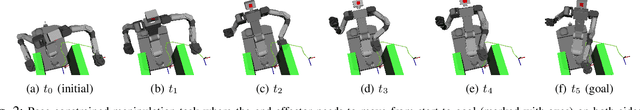

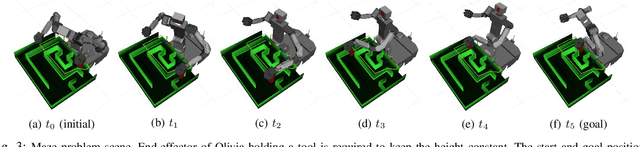

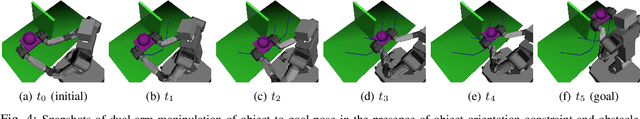

Sampling-based motion planning under task constraints is challenging because the null-measure constraint manifold in the configuration space makes rejection sampling extremely inefficient, if not impossible. This paper presents a learning-based sampling strategy for constrained motion planning problems. We investigate the use of two well-known deep generative models, the Conditional Variational Autoencoder (CVAE) and the Conditional Generative Adversarial Net (CGAN), to generate constraint-satisfying sample configurations. Instead of precomputed graphs, we use generative models conditioned on constraint parameters for approximating the constraint manifold. This approach allows for the efficient drawing of constraint-satisfying samples online without any need for modification of available sampling-based motion planning algorithms. We evaluate the efficiency of these two generative models in terms of their sampling accuracy and coverage of sampling distribution. Simulations and experiments are also conducted for different constraint tasks on two robotic platforms.
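The contrast between rejection sampling and conditioned generative sampling can be illustrated with a toy sketch. It is not the paper's implementation: the constraint manifold here is assumed to be a circle of radius `c` in a 2-D configuration space, and `toy_generator` is a hypothetical closed-form stand-in for a trained CVAE decoder or CGAN generator that maps a latent sample `z` and a constraint parameter `c` to a configuration. The `accuracy` and `coverage` helpers are likewise illustrative proxies for the two evaluation criteria named in the abstract.

```python
import numpy as np

def constraint_error(q, c):
    """Distance of each configuration in q (shape (N, 2)) from the toy manifold ||q|| = c."""
    return np.abs(np.linalg.norm(q, axis=1) - c)

def rejection_sample(n, c, tol, rng):
    """Draw n uniform configurations in [-2, 2]^2 and keep those within tol of the manifold."""
    q = rng.uniform(-2.0, 2.0, size=(n, 2))
    return q[constraint_error(q, c) <= tol]

def toy_generator(z, c):
    """Hypothetical stand-in for a learned conditional generator g(z, c).

    A trained model would replace this closed form; here a 2-D Gaussian latent
    is projected onto the circle of radius c.
    """
    return c * z / np.linalg.norm(z, axis=1, keepdims=True)

def accuracy(q, c, tol):
    """Fraction of samples that satisfy the constraint within tol."""
    return float(np.mean(constraint_error(q, c) <= tol))

def coverage(q, bins=36):
    """Fraction of angular bins around the circle that contain at least one sample."""
    theta = np.arctan2(q[:, 1], q[:, 0])
    hist, _ = np.histogram(theta, bins=bins, range=(-np.pi, np.pi))
    return float(np.mean(hist > 0))

rng = np.random.default_rng(0)
c, tol, n = 1.0, 1e-6, 10_000

kept = rejection_sample(n, c, tol, rng)
generated = toy_generator(rng.standard_normal((n, 2)), c)

print("rejection sampling kept:", len(kept), "of", n)
print("generator accuracy:", accuracy(generated, c, tol))
print("generator coverage:", coverage(generated))
```

Because the generator only produces configurations, it can be passed to an existing sampling-based planner as a drop-in sampler, which is the property the abstract highlights; a learned model would generally satisfy the constraint only approximately, unlike this idealized stand-in.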
