Yuhang Han

Innovator-VL: A Multimodal Large Language Model for Scientific Discovery

Jan 27, 2026

Abstract:We present Innovator-VL, a scientific multimodal large language model designed to advance understanding and reasoning across diverse scientific domains while maintaining excellent performance on general vision tasks. Contrary to the trend of relying on massive domain-specific pretraining and opaque pipelines, our work demonstrates that principled training design and transparent methodology can yield strong scientific intelligence with substantially reduced data requirements. (i) First, we provide a fully transparent, end-to-end reproducible training pipeline, covering data collection, cleaning, preprocessing, supervised fine-tuning, reinforcement learning, and evaluation, along with detailed optimization recipes. This facilitates systematic extension by the community. (ii) Second, Innovator-VL exhibits remarkable data efficiency, achieving competitive performance on various scientific tasks using fewer than five million curated samples without large-scale pretraining. These results highlight that effective reasoning can be achieved through principled data selection rather than indiscriminate scaling. (iii) Third, Innovator-VL demonstrates strong generalization, achieving competitive performance on general vision, multimodal reasoning, and scientific benchmarks. This indicates that scientific alignment can be integrated into a unified model without compromising general-purpose capabilities. Our practices suggest that efficient, reproducible, and high-performing scientific multimodal models can be built even without large-scale data, providing a practical foundation for future research.

Compression with Global Guidance: Towards Training-free High-Resolution MLLMs Acceleration

Jan 09, 2025

Abstract:Multimodal large language models (MLLMs) have attracted considerable attention due to their exceptional performance in visual content understanding and reasoning. However, their inference efficiency has been a notable concern, as the increasing length of multimodal contexts leads to quadratic complexity. Token compression techniques, which reduce the number of visual tokens, have demonstrated their effectiveness in reducing computational costs. Yet, these approaches have struggled to keep pace with the rapid advancements in MLLMs, especially the AnyRes strategy in the context of high-resolution image understanding. In this paper, we propose a novel token compression method, GlobalCom², tailored for high-resolution MLLMs that receive both the thumbnail and multiple crops. GlobalCom² treats the tokens derived from the thumbnail as the "commander" of the entire token compression process, directing the allocation of retention ratios and the specific compression for each crop. In this way, redundant tokens are eliminated while important local details are adaptively preserved to the highest extent feasible. Empirical results across 10 benchmarks reveal that GlobalCom² achieves an optimal balance between performance and efficiency, and consistently outperforms state-of-the-art token compression methods with LLaVA-NeXT-7B/13B models. Our code is released at https://github.com/xuyang-liu16/GlobalCom2.
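The idea of a global view directing per-crop retention ratios can be illustrated with a minimal sketch. This is not the released GlobalCom² code: the function names, the softmax-based allocation rule, and the assumption that each crop and each token already carries a scalar importance score (for example, derived from thumbnail tokens) are all hypothetical choices made for illustration.

```python
import math

def allocate_retention(crop_scores, base_ratio, temperature=1.0):
    """Split an overall retention ratio across crops (hypothetical rule).

    Crops with higher importance scores receive a larger share. Before
    clipping at 1.0, the per-crop ratios average to base_ratio.
    """
    top = max(crop_scores)
    weights = [math.exp((s - top) / temperature) for s in crop_scores]
    total = sum(weights)
    n = len(crop_scores)
    return [min(1.0, base_ratio * n * w / total) for w in weights]

def compress_crop(token_scores, ratio):
    """Keep the highest-scoring tokens of one crop; return their indices in order."""
    keep = max(1, round(ratio * len(token_scores)))
    ranked = sorted(range(len(token_scores)),
                    key=lambda i: token_scores[i], reverse=True)
    return sorted(ranked[:keep])

if __name__ == "__main__":
    # Assumed inputs: one importance score per crop, one score per token.
    ratios = allocate_retention([2.0, 0.0], base_ratio=0.25)
    kept = compress_crop([0.1, 0.9, 0.5, 0.3], ratio=0.5)
    print(ratios, kept)
```

In this sketch the more important crop keeps a larger fraction of its tokens while the overall budget stays near `base_ratio`; how the scores are actually computed is left open.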

Rethinking Token Reduction in MLLMs: Towards a Unified Paradigm for Training-Free Acceleration

Nov 26, 2024

Abstract:To accelerate the inference of heavy Multimodal Large Language Models (MLLMs), this study rethinks the current landscape of training-free token reduction research. Unfortunately, we find that the critical components of existing methods are tightly intertwined, with their interconnections and effects remaining unclear for comparison, transfer, and expansion. Therefore, we propose a unified "filter-correlate-compress" paradigm that decomposes token reduction into three distinct stages within a pipeline, maintaining consistent design objectives and elements while allowing for unique implementations. We additionally demystify the popular works and subsume them into our paradigm to showcase its universality. Finally, we offer a suite of methods grounded in the paradigm, striking a balance between speed and accuracy throughout different phases of the inference. Experimental results across 10 benchmarks indicate that our methods can achieve up to an 82.4% reduction in FLOPs with a minimal impact on performance, simultaneously surpassing state-of-the-art training-free methods. Our project page is at https://ficoco-accelerate.github.io/.
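A three-stage filter-correlate-compress pipeline might be sketched as follows. This is an illustrative assumption rather than the authors' implementation: the scoring input, the cosine-similarity matching, and the averaging merge are stand-ins chosen for simplicity, and every function name is hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def filter_tokens(scores, num_drop):
    """Stage 1 (filter): pick the num_drop lowest-scoring tokens to discard."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    return set(order[:num_drop])

def correlate(tokens, dropped):
    """Stage 2 (correlate): map each discarded token to its most similar kept token."""
    kept = [i for i in range(len(tokens)) if i not in dropped]
    return {d: max(kept, key=lambda k: cosine(tokens[d], tokens[k]))
            for d in sorted(dropped)}

def compress(tokens, dropped, targets):
    """Stage 3 (compress): average each kept token with the tokens mapped to it."""
    groups = {i: [tokens[i]] for i in range(len(tokens)) if i not in dropped}
    for d, k in targets.items():
        groups[k].append(tokens[d])
    return [[sum(col) / len(g) for col in zip(*g)]
            for _, g in sorted(groups.items())]

def reduce_tokens(tokens, scores, num_drop):
    """Run the three stages; num_drop must be smaller than len(tokens)."""
    dropped = filter_tokens(scores, num_drop)
    targets = correlate(tokens, dropped)
    return compress(tokens, dropped, targets)

if __name__ == "__main__":
    toks = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
    print(reduce_tokens(toks, scores=[0.9, 0.8, 0.1], num_drop=1))
```

Keeping the stages as separate functions mirrors the decomposition described in the abstract: any one stage can be swapped for a different rule without touching the other two.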

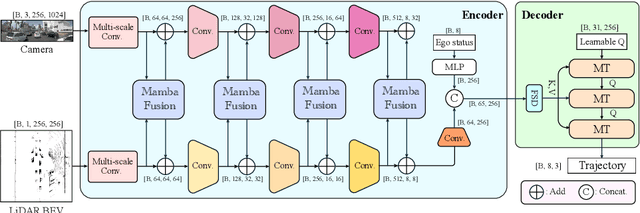

DRAMA: An Efficient End-to-end Motion Planner for Autonomous Driving with Mamba

Aug 07, 2024

Abstract:Motion planning is the challenging task of generating safe and feasible trajectories in highly dynamic and complex environments, forming a core capability for autonomous vehicles. In this paper, we propose DRAMA, the first Mamba-based end-to-end motion planner for autonomous vehicles. DRAMA fuses camera and LiDAR Bird's Eye View images in the feature space, together with ego status information, to generate a series of future ego trajectories. Unlike traditional transformer-based methods, whose attention complexity is quadratic in sequence length, DRAMA achieves a less computationally intensive attention complexity, demonstrating potential to deal with increasingly complex scenarios. Leveraging our Mamba fusion module, DRAMA efficiently and effectively fuses the features of the camera and LiDAR modalities. In addition, we introduce a Mamba-Transformer decoder that enhances the overall planning performance. This module is universally adaptable to any Transformer-based model, especially for tasks with long sequence inputs. We further introduce a novel feature state dropout which improves the planner's robustness without increasing training and inference times. Extensive experimental results show that DRAMA achieves higher accuracy on the NAVSIM dataset compared to the baseline Transfuser, with fewer parameters and lower computational costs.

ADM: Accelerated Diffusion Model via Estimated Priors for Robust Motion Prediction under Uncertainties

May 01, 2024

Abstract:Motion prediction is a challenging problem in autonomous driving as it demands the system to comprehend stochastic dynamics and the multi-modal nature of real-world agent interactions. Diffusion models have recently risen to prominence, and have proven particularly effective in pedestrian motion prediction tasks. However, the significant time consumption and sensitivity to noise have limited the real-time predictive capability of diffusion models. In response to these impediments, we propose a novel diffusion-based, acceleratable framework that adeptly predicts future trajectories of agents with enhanced resistance to noise. The core idea of our model is to learn a coarse-grained prior distribution of trajectories, which allows a large number of denoising steps to be skipped. This advancement not only boosts sampling efficiency but also maintains prediction accuracy. Our method meets the rigorous real-time operational standards essential for autonomous vehicles, enabling prompt trajectory generation that is vital for secure and efficient navigation. Through extensive experiments, our method reduces the inference time to 136 ms compared to a standard diffusion model, and achieves significant improvement in multi-agent motion prediction on the Argoverse 1 motion forecasting dataset.

ControlMTR: Control-Guided Motion Transformer with Scene-Compliant Intention Points for Feasible Motion Prediction

Apr 17, 2024

Abstract:The ability to accurately predict feasible multimodal future trajectories of surrounding traffic participants is crucial for behavior planning in autonomous vehicles. The Motion Transformer (MTR), a state-of-the-art motion prediction method, alleviated mode collapse and instability during training and enhanced overall prediction performance by replacing conventional dense future endpoints with a small set of fixed prior motion intention points. However, the fixed prior intention points make the MTR multi-modal prediction distribution over-scattered and infeasible in many scenarios. In this paper, we propose the ControlMTR framework to tackle the aforementioned issues by generating scene-compliant intention points and additionally predicting driving control commands, which are then converted into trajectories by a simple kinematic model with soft constraints. These control-generated trajectories will guide the directly predicted trajectories by an auxiliary loss function. Together with our proposed scene-compliant intention points, they can effectively restrict the prediction distribution within the road boundaries and suppress infeasible off-road predictions while enhancing prediction performance. Remarkably, without resorting to additional model ensemble techniques, our method surpasses the baseline MTR model across all performance metrics, achieving notable improvements of 5.22% in SoftmAP and a 4.15% reduction in MissRate. Our approach notably results in a 41.85% reduction in the cross-boundary rate of the MTR, effectively ensuring that the prediction distribution is confined within the drivable area.
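The step of converting predicted control commands into a trajectory with a simple kinematic model can be sketched as below. The abstract does not specify the control variables or the model, so this sketch assumes (acceleration, yaw rate) commands, a unicycle-style model with forward-Euler integration, and a mean-displacement auxiliary loss; the soft constraints mentioned in the abstract are not modelled, and all names are hypothetical.

```python
import math

def rollout(state, controls, dt=0.1):
    """Integrate (acceleration, yaw_rate) commands into (x, y) waypoints.

    state is (x, y, heading, speed). The model and control parameterisation
    are assumptions made for illustration.
    """
    x, y, heading, speed = state
    waypoints = []
    for accel, yaw_rate in controls:
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        heading += yaw_rate * dt
        # Simplification: the vehicle is not allowed to reverse.
        speed = max(0.0, speed + accel * dt)
        waypoints.append((x, y))
    return waypoints

def auxiliary_loss(predicted, control_generated):
    """Mean Euclidean distance between matching waypoints (assumed loss form)."""
    dists = [math.hypot(px - cx, py - cy)
             for (px, py), (cx, cy) in zip(predicted, control_generated)]
    return sum(dists) / len(dists)

if __name__ == "__main__":
    # Constant speed of 10 m/s, straight ahead, for 1 second.
    guide = rollout((0.0, 0.0, 0.0, 10.0), [(0.0, 0.0)] * 10)
    direct = [(px, py + 0.5) for px, py in guide]  # a laterally offset prediction
    print(guide[-1], auxiliary_loss(direct, guide))
```

Because the rolled-out trajectory follows from the controls through the kinematic model, a loss of this kind would pull the directly predicted waypoints toward motions a vehicle could actually execute.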

DriveSceneGen: Generating Diverse and Realistic Driving Scenarios from Scratch

Sep 26, 2023

Abstract:Realistic and diverse traffic scenarios in large quantities are crucial for the development and validation of autonomous driving systems. However, owing to numerous difficulties in the data collection process and the reliance on intensive annotations, real-world datasets lack sufficient quantity and diversity to support the increasing demand for data. This work introduces DriveSceneGen, a data-driven driving scenario generation method that learns from the real-world driving dataset and generates entire dynamic driving scenarios from scratch. DriveSceneGen is able to generate novel driving scenarios that align with real-world data distributions with high fidelity and diversity. Experimental results on 5k generated scenarios highlight the generation quality, diversity, and scalability compared to real-world datasets. To the best of our knowledge, DriveSceneGen is the first method that generates novel driving scenarios involving both static map elements and dynamic traffic participants from scratch.

CARLA-Loc: Synthetic SLAM Dataset with Full-stack Sensor Setup in Challenging Weather and Dynamic Environments

Sep 16, 2023

Abstract:The robustness of SLAM algorithms in challenging environmental conditions is crucial for autonomous driving, but the impact of these conditions is unknown, given the difficulty of arbitrarily changing the relevant environmental parameters of the same environment in the real world. Therefore, we propose CARLA-Loc, a synthetic dataset of challenging and dynamic environments built on the CARLA simulator. We integrate multiple sensors into the dataset with strict calibration, synchronization, and precise timestamping. The dataset contains 7 maps and 42 sequences with different dynamic levels and weather conditions. Objects in both stereo images and point clouds are well-segmented with their class labels. We evaluate 5 visual-based and 4 LiDAR-based approaches on various sequences and analyze the effect of challenging environmental factors on localization accuracy, showing the applicability of the proposed dataset for validating SLAM algorithms.
