Gregory S. Chirikjian

INGRID: Intelligent Generative Robotic Design Using Large Language Models

Sep 04, 2025

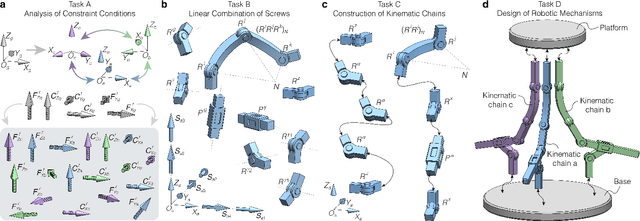

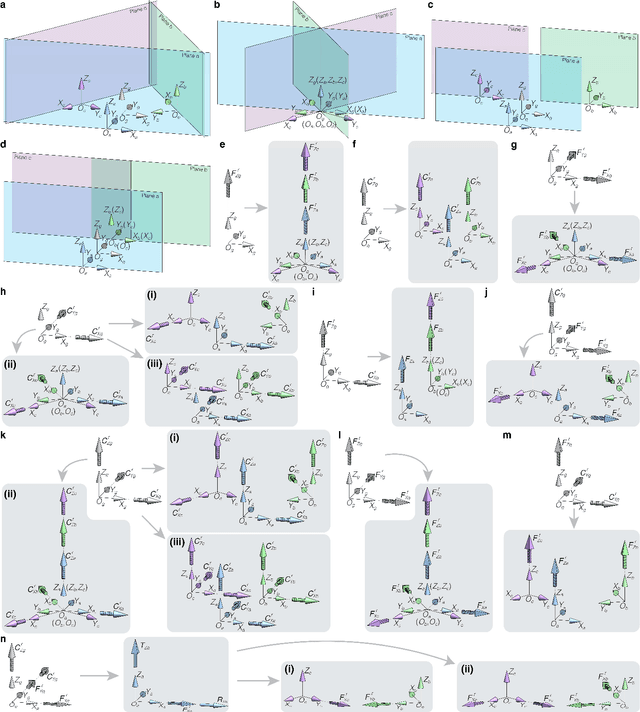

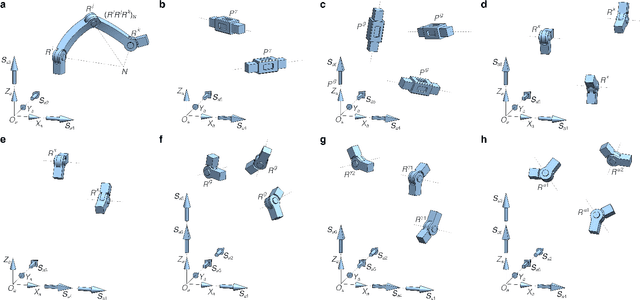

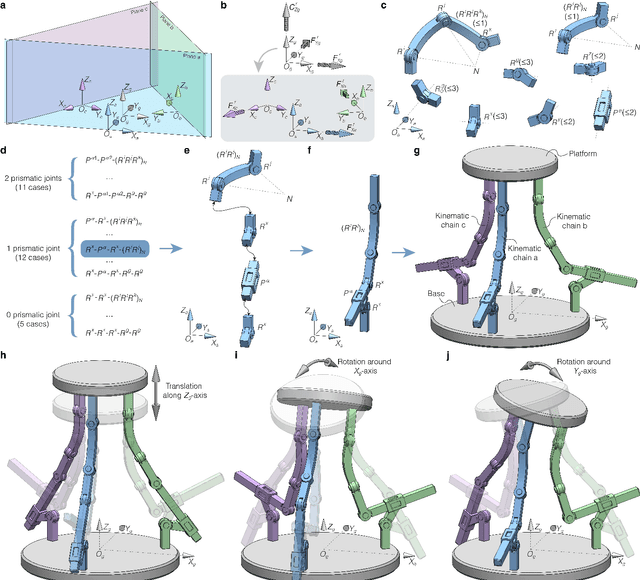

Abstract:The integration of large language models (LLMs) into robotic systems has accelerated progress in embodied artificial intelligence, yet current approaches remain constrained by existing robotic architectures, particularly serial mechanisms. This hardware dependency fundamentally limits the scope of robotic intelligence. Here, we present INGRID (Intelligent Generative Robotic Design), a framework that enables the automated design of parallel robotic mechanisms through deep integration with reciprocal screw theory and kinematic synthesis methods. We decompose the design challenge into four progressive tasks: constraint analysis, kinematic joint generation, chain construction, and complete mechanism design. INGRID demonstrates the ability to generate novel parallel mechanisms with both fixed and variable mobility, discovering kinematic configurations not previously documented in the literature. We validate our approach through three case studies demonstrating how INGRID assists users in designing task-specific parallel robots based on desired mobility requirements. By bridging the gap between mechanism theory and machine learning, INGRID enables researchers without specialized robotics training to create custom parallel mechanisms, thereby decoupling advances in robotic intelligence from hardware constraints. This work establishes a foundation for mechanism intelligence, where AI systems actively design robotic hardware, potentially transforming the development of embodied AI systems.

Reasoning and Learning a Perceptual Metric for Self-Training of Reflective Objects in Bin-Picking with a Low-cost Camera

Mar 26, 2025

Abstract:Bin-picking of metal objects using low-cost RGB-D cameras often suffers from sparse depth information and reflective surface textures, leading to errors and the need for manual labeling. To reduce human intervention, we propose a two-stage framework consisting of a metric learning stage and a self-training stage. Specifically, to automatically process data captured by a low-cost camera (LC), we introduce a Multi-object Pose Reasoning (MoPR) algorithm that optimizes pose hypotheses under depth, collision, and boundary constraints. To further refine pose candidates, we adopt a Symmetry-aware Lie-group based Bayesian Gaussian Mixture Model (SaL-BGMM), integrated with the Expectation-Maximization (EM) algorithm, for symmetry-aware filtering. Additionally, we propose a Weighted Ranking Information Noise Contrastive Estimation (WR-InfoNCE) loss to enable the LC to learn a perceptual metric from reconstructed data, supporting self-training on untrained or even unseen objects. Experimental results show that our approach outperforms several state-of-the-art methods on both the ROBI dataset and our newly introduced Self-ROBI dataset.
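For readers unfamiliar with the contrastive loss family named in this abstract, the following is a minimal sketch of the plain InfoNCE loss for a single query. It is not the paper's WR-InfoNCE: the weighting and ranking terms are not described in the abstract and are omitted here. The function name `info_nce`, the cosine-similarity scoring, and the temperature value are assumptions made for illustration.

```python
import numpy as np

def info_nce(query, positive, negatives, temperature=0.1):
    """Plain InfoNCE loss for one query (illustrative; not the paper's WR-InfoNCE)."""
    q = np.asarray(query, dtype=float)
    q = q / np.linalg.norm(q)
    # Row 0 holds the positive key; the remaining rows hold the negatives.
    keys = np.vstack([np.asarray(positive, dtype=float),
                      np.asarray(negatives, dtype=float)])
    keys = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    # Cosine similarities scaled by the temperature.
    logits = keys @ q / temperature
    logits = logits - logits.max()  # shift for numerical stability
    # Negative log-probability of picking the positive among all keys.
    return -(logits[0] - np.log(np.exp(logits).sum()))

# Hypothetical 2D embeddings: the positive is nearly aligned with the query.
query = np.array([1.0, 0.0])
positive = np.array([0.9, 0.1])
negatives = np.array([[0.0, 1.0], [-1.0, 0.0]])

loss_aligned = info_nce(query, positive, negatives)
# Swapping the positive with a dissimilar key should increase the loss.
loss_swapped = info_nce(query, negatives[0], np.array([positive, negatives[1]]))
```

The loss is small when the positive key is the most similar one and grows when a negative is more similar to the query than the positive.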

Parameter Estimation on Homogeneous Spaces

Oct 31, 2024

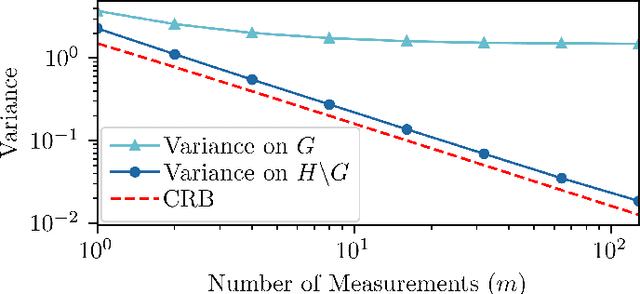

Abstract:The Fisher Information Metric (FIM) and the associated Cramér-Rao Bound (CRB) are fundamental tools in statistical signal processing, which inform the efficient design of experiments and algorithms for estimating the underlying parameters. In this article, we investigate these concepts for the case where the parameters lie on a homogeneous space. Unlike the existing Fisher-Rao theory for general Riemannian manifolds, our focus is to leverage the group-theoretic structure of homogeneous spaces, which is often much easier to work with than their Riemannian structure. The FIM is characterized by identifying the homogeneous space with a coset space, the group-theoretic CRB and its corollaries are presented, and its relationship to the Riemannian CRB is clarified. The application of our theory is illustrated using two examples from engineering: (i) estimation of the pose of a robot and (ii) sensor network localization. In particular, these examples demonstrate that homogeneous spaces provide a natural framework for studying statistical models that are invariant with respect to a group of symmetries.
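As background for the abstract above, the classical Euclidean form of the two objects it names can be written as follows. This is the textbook statement for a parameter $\theta \in \mathbb{R}^n$ and an unbiased estimator $\hat{\theta}$, not the group-theoretic version developed in the paper; the symbols $p(x;\theta)$, $F(\theta)$, and $\hat{\theta}$ are notation assumed here.

```latex
% Fisher information matrix of the model p(x; \theta)
F(\theta) = \mathbb{E}_{x \sim p(\cdot\,;\theta)}
  \left[ \nabla_\theta \log p(x;\theta)\, \nabla_\theta \log p(x;\theta)^{\top} \right]

% Cramer-Rao bound for any unbiased estimator \hat{\theta}(x),
% assuming F(\theta) is invertible
\operatorname{Cov}_\theta\!\left(\hat{\theta}\right) \succeq F(\theta)^{-1}
```

Here $\succeq$ denotes the Loewner order, meaning the difference of the two matrices is positive semidefinite.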

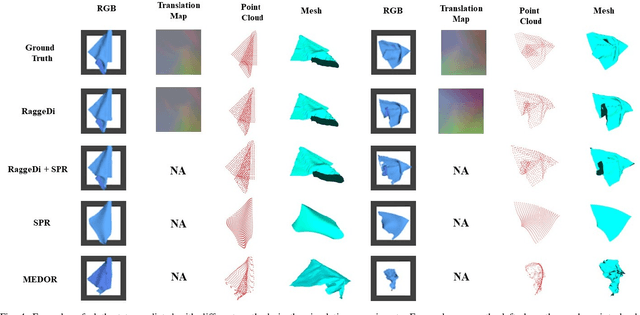

RaggeDi: Diffusion-based State Estimation of Disordered Rags, Sheets, Towels and Blankets

Sep 18, 2024

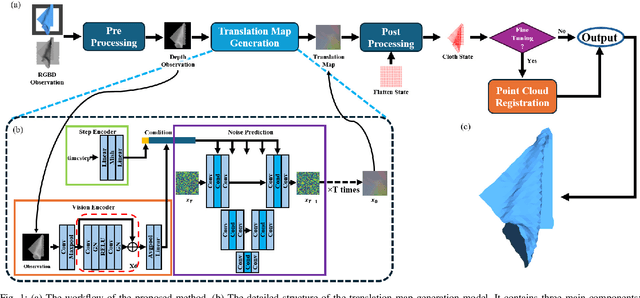

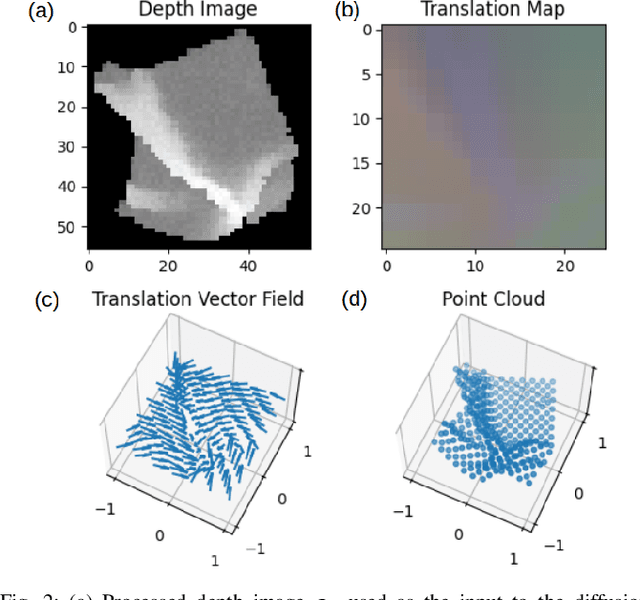

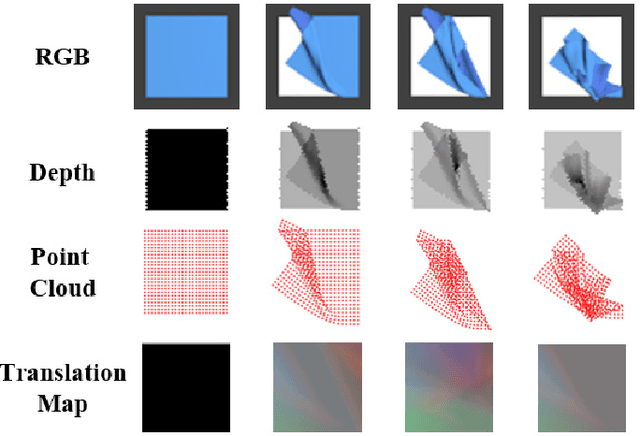

Abstract:Cloth state estimation is an important problem in robotics. It is essential for the robot to know the accurate state to manipulate cloth and execute tasks such as robotic dressing, stitching, and covering/uncovering human beings. However, estimating cloth state accurately remains challenging due to its high flexibility and self-occlusion. This paper proposes a diffusion model-based pipeline that formulates the cloth state estimation as an image generation problem by representing the cloth state as an RGB image that describes the point-wise translation (translation map) between a pre-defined flattened mesh and the deformed mesh in a canonical space. Then we train a conditional diffusion-based image generation model to predict the translation map based on an observation. Experiments are conducted in both simulation and the real world to validate the performance of our method. Results indicate that our method outperforms two recent methods in both accuracy and speed.
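The idea of storing point-wise translations as an RGB image can be illustrated with a small sketch. It assumes the flattened mesh has its vertices on a regular H x W grid, so each vertex maps to one pixel, and that each translation component is bounded by a known `max_disp`. Both assumptions, along with the function names and the 8-bit quantization, are hypothetical choices for illustration; the abstract does not specify the paper's actual encoding.

```python
import numpy as np

def encode_translation_map(flat, deformed, grid_shape, max_disp):
    """Quantize per-vertex translations (deformed - flat) into an H x W x 3 uint8 image."""
    height, width = grid_shape
    disp = np.asarray(deformed, dtype=float) - np.asarray(flat, dtype=float)
    # Map each component from [-max_disp, max_disp] to [0, 1], clipping outliers.
    scaled = np.clip(disp / (2.0 * max_disp) + 0.5, 0.0, 1.0)
    return np.round(scaled * 255.0).astype(np.uint8).reshape(height, width, 3)

def decode_translation_map(image, flat, max_disp):
    """Recover deformed vertex positions from the image and the flat mesh."""
    disp = (image.astype(float) / 255.0 - 0.5) * 2.0 * max_disp
    return np.asarray(flat, dtype=float) + disp.reshape(-1, 3)

# Hypothetical 4 x 5 vertex grid lying flat in the z = 0 plane (units: metres).
height, width = 4, 5
ys, xs = np.meshgrid(np.linspace(0.0, 0.3, height),
                     np.linspace(0.0, 0.4, width), indexing="ij")
flat = np.stack([xs.ravel(), ys.ravel(), np.zeros(height * width)], axis=1)

# Synthetic deformation: random translations of up to 0.1 m per axis.
rng = np.random.default_rng(0)
deformed = flat + rng.uniform(-0.1, 0.1, size=flat.shape)

image = encode_translation_map(flat, deformed, (height, width), max_disp=0.2)
recovered = decode_translation_map(image, flat, max_disp=0.2)
```

With 8-bit channels, the round trip is exact only up to a quantization error of at most `max_disp / 255` per component, which is one reason a real pipeline might choose a different bit depth or normalization.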

Grasping by Hanging: a Learning-Free Grasping Detection Method for Previously Unseen Objects

Aug 13, 2024

Abstract:This paper proposes a novel learning-free three-stage method that predicts grasping poses, enabling robots to pick up and transfer previously unseen objects. Our method first identifies potential structures that can afford the action of hanging by analyzing the hanging mechanics and geometric properties. Then 6D poses are detected for a parallel gripper retrofitted with an extending bar, which when closed forms loops to hook each hangable structure. Finally, an evaluation policy qualifies and ranks grasp candidates for execution attempts. Compared to the traditional physical model-based and deep learning-based methods, our approach is closer to the natural human action of grasping unknown objects, and it also eliminates the need for a vast amount of training data. To evaluate the effectiveness of the proposed method, we conducted experiments with a real robot. Experimental results indicate that the grasping accuracy and stability are significantly higher than the state-of-the-art learning-based method, especially for thin and flat objects.

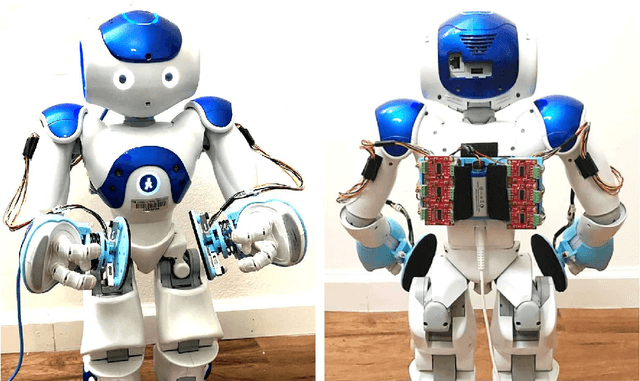

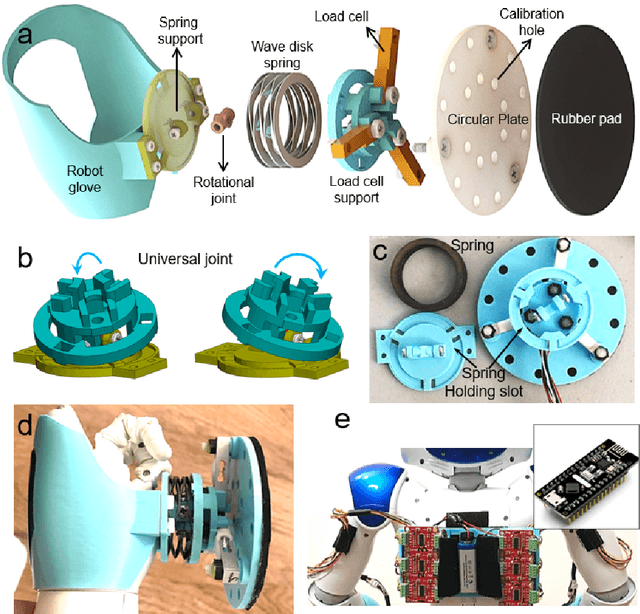

Design, Calibration, and Control of Compliant Force-sensing Gripping Pads for Humanoid Robots

May 31, 2024

Abstract:This paper introduces a pair of low-cost, light-weight and compliant force-sensing gripping pads used for manipulating box-like objects with smaller-sized humanoid robots. These pads measure normal gripping forces and center of pressure (CoP). A calibration method is developed to improve the CoP measurement accuracy. A hybrid force-alignment-position control framework is proposed to regulate the gripping forces and to ensure the surface alignment between the grippers and the object. Limit surface theory is incorporated as a contact friction modeling approach to determine the magnitude of gripping forces for slippage avoidance. The integrated hardware and software system is demonstrated with a NAO humanoid robot. Experiments show the effectiveness of the overall approach.

* 21 pages, 16 figures, Published in ASME Journal of Mechanisms and Robotics
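The center of pressure mentioned in the abstract above is, in its textbook form, the force-weighted average of the contact locations. The sketch below computes it for a pad modeled as a handful of point force sensors. The sensor layout, the function name `center_of_pressure`, and the sample readings are hypothetical; the paper's actual sensor design and calibration method are not reproduced here.

```python
import numpy as np

def center_of_pressure(positions, forces):
    """Return (total normal force, CoP) for point force sensors on a flat pad."""
    positions = np.asarray(positions, dtype=float)  # shape (n, 2): sensor x-y in metres
    forces = np.asarray(forces, dtype=float)        # shape (n,): normal forces in newtons
    total = forces.sum()
    if total <= 0.0:
        raise ValueError("no net normal force; CoP is undefined")
    # Force-weighted average of the sensor locations.
    cop = (forces[:, None] * positions).sum(axis=0) / total
    return total, cop

# Hypothetical pad with four sensors at the corners of a 40 mm x 20 mm rectangle.
sensor_xy = [(-0.02, -0.01), (0.02, -0.01), (0.02, 0.01), (-0.02, 0.01)]
# Heavier load on the two right-hand sensors shifts the CoP toward +x.
total, cop = center_of_pressure(sensor_xy, [1.0, 3.0, 3.0, 1.0])
```

For these readings the total force is 8 N and the CoP lies 10 mm to the right of the pad center, on the x axis.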

RAIL: Robot Affordance Imagination with Large Language Models

Mar 28, 2024

Abstract:This paper introduces an automatic affordance reasoning paradigm tailored to minimal semantic inputs, addressing the critical challenges of classifying and manipulating unseen classes of objects in household settings. Inspired by human cognitive processes, our method integrates generative language models and physics-based simulators to foster analytical thinking and creative imagination of novel affordances. Structured with a tripartite framework consisting of analysis, imagination, and evaluation, our system "analyzes" the requested affordance names into interaction-based definitions, "imagines" the virtual scenarios, and "evaluates" the object affordance. If an object is recognized as possessing the requested affordance, our method also predicts the optimal pose for such functionality, and how a potential user can interact with it. Tuned on only a few synthetic examples across 3 affordance classes, our pipeline achieves a very high success rate on affordance classification and functional pose prediction of 8 classes of novel objects, outperforming learning-based baselines. Validation through real robot manipulating experiments demonstrates the practical applicability of the imagined user interaction, showcasing the system's ability to independently conceptualize unseen affordances and interact with new objects and scenarios in everyday settings.

Uncertainty Propagation on Unimodular Matrix Lie Groups

Dec 06, 2023

Abstract:This paper addresses uncertainty propagation on unimodular matrix Lie groups that have a surjective exponential map. We derive the exact formula for the propagation of mean and covariance in a continuous-time setting from the governing Fokker-Planck equation. Two approximate propagation methods are discussed based on the exact formula. One uses numerical quadrature and another utilizes the expansion of moments. A closed-form second-order propagation formula is derived. We apply the general theory to the joint attitude and angular momentum uncertainty propagation problem and numerical experiments demonstrate two approximation methods. These results show that our new methods have high accuracy while being computationally efficient.

Prepare the Chair for the Bear! Robot Imagination of Sitting Affordance to Reorient Previously Unseen Chairs

Jun 20, 2023

Abstract:In this letter, a paradigm for the classification and manipulation of previously unseen objects is established and demonstrated through a real example of chairs. We present a novel robot manipulation method, guided by the understanding of object stability, perceptibility, and affordance, which allows the robot to prepare previously unseen and randomly oriented chairs for a teddy bear to sit on. Specifically, the robot encounters an unknown object and first reconstructs a complete 3D model from perceptual data via active and autonomous manipulation. By inserting this model into a physical simulator (i.e., the robot's "imagination"), the robot assesses whether the object is a chair and determines how to reorient it properly to be used, i.e., how to reorient it to an upright and accessible pose. If the object is classified as a chair, the robot reorients the object to this pose and seats the teddy bear onto the chair. The teddy bear is a proxy for an elderly person, hospital patient, or child. Experiment results show that our method achieves a high success rate on the real robot task of chair preparation. Also, it outperforms several baseline methods on the task of upright pose prediction for chairs.

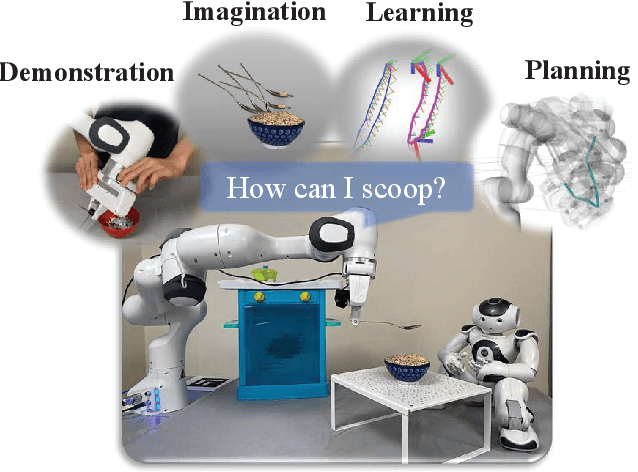

PRIMP: PRobabilistically-Informed Motion Primitives for Efficient Affordance Learning from Demonstration

May 25, 2023

Abstract:This paper proposes a learning-from-demonstration method using probability densities on the workspaces of robot manipulators. The method, named "PRobabilistically-Informed Motion Primitives (PRIMP)", learns the probability distribution of the end effector trajectories in the 6D workspace that includes both positions and orientations. It is able to adapt to new situations such as novel via poses with uncertainty and a change of viewing frame. The method itself is robot-agnostic, in which the learned distribution can be transferred to another robot with the adaptation to its workspace density. The learned trajectory distribution is then used to guide an optimization-based motion planning algorithm to further help the robot avoid novel obstacles that are unseen during the demonstration process. The proposed methods are evaluated by several sets of benchmark experiments. PRIMP runs more than 5 times faster while generalizing trajectories more than twice as close to both the demonstrations and novel desired poses. It is then combined with our robot imagination method that learns object affordances, illustrating the applicability of PRIMP to learn tool use through physical experiments.
