Wanze Li

RaggeDi: Diffusion-based State Estimation of Disordered Rags, Sheets, Towels and Blankets

Sep 18, 2024

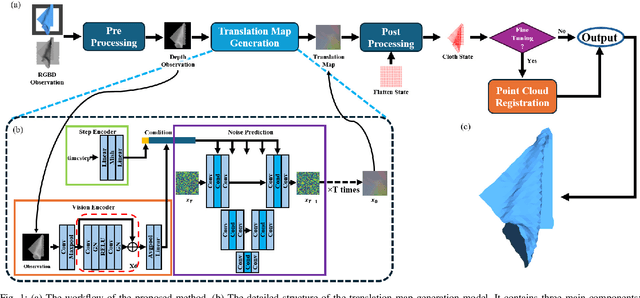

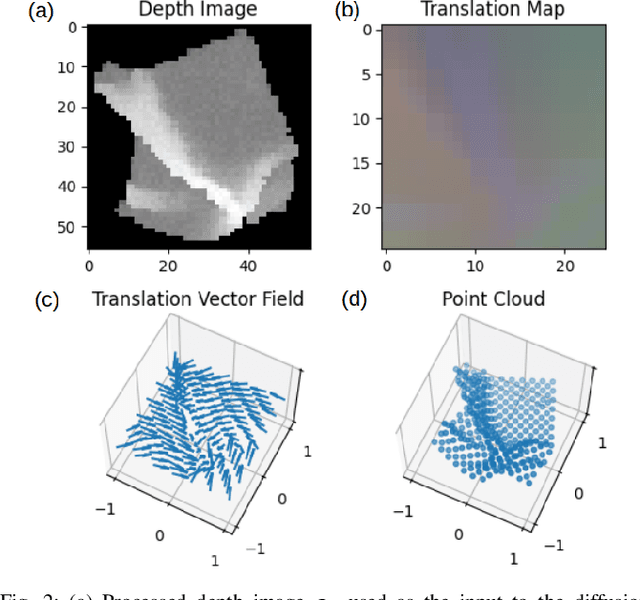

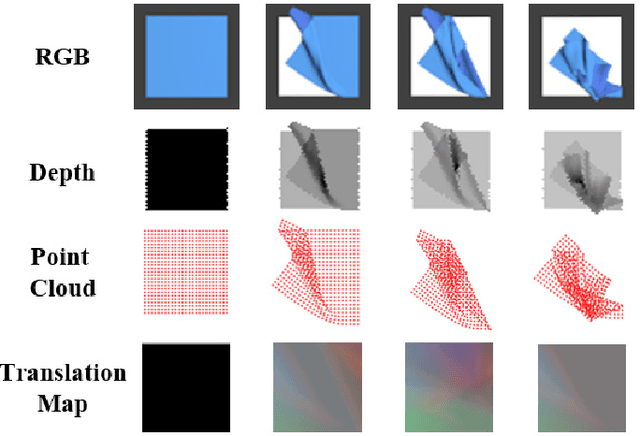

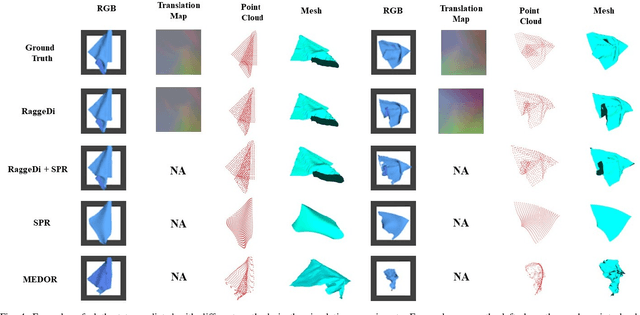

Abstract: Cloth state estimation is an important problem in robotics. A robot must know the accurate state of the cloth to manipulate it and to execute tasks such as robotic dressing, stitching, and covering/uncovering human beings. However, estimating cloth state accurately remains challenging due to the cloth's high flexibility and self-occlusion. This paper proposes a diffusion model-based pipeline that formulates cloth state estimation as an image generation problem by representing the cloth state as an RGB image that describes the point-wise translation (translation map) between a pre-defined flattened mesh and the deformed mesh in a canonical space. We then train a conditional diffusion-based image generation model to predict the translation map from an observation. Experiments are conducted in both simulation and the real world to validate the performance of our method. Results indicate that our method outperforms two recent methods in both accuracy and speed.
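The translation-map representation can be illustrated with a minimal encode/decode sketch. It assumes, for illustration only, that the flattened mesh is a regular H x W vertex grid stored row-major, that the deformed mesh is already expressed in the canonical space, and that every translation component lies within a fixed bound `max_disp` used to scale xyz into the three RGB channels. The function names are hypothetical, the abstract does not state the paper's actual normalization, and the diffusion model that generates the map is not shown.

```python
import numpy as np


def encode_translation_map(flat_verts, deformed_verts, grid_hw, max_disp):
    """Turn per-vertex translations into an H x W x 3 uint8 image.

    Assumes vertices are ordered row-major on an H x W grid of the
    flattened mesh and that translations lie in [-max_disp, max_disp].
    """
    h, w = grid_hw
    translation = (deformed_verts - flat_verts).reshape(h, w, 3)
    # Map each xyz component from [-max_disp, max_disp] to [0, 1].
    unit = (np.clip(translation, -max_disp, max_disp) + max_disp) / (2 * max_disp)
    # Quantize to 8-bit RGB values.
    return np.round(unit * 255).astype(np.uint8)


def decode_translation_map(image, flat_verts, max_disp):
    """Recover deformed vertex positions from an encoded translation map."""
    unit = image.astype(np.float64).reshape(-1, 3) / 255.0
    translation = unit * (2 * max_disp) - max_disp
    return flat_verts + translation


# Usage: a 2 x 2 flat grid with one corner lifted by 0.5 along z.
flat = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0]], dtype=float)
deformed = flat.copy()
deformed[3, 2] = 0.5
image = encode_translation_map(flat, deformed, (2, 2), max_disp=1.0)
recovered = decode_translation_map(image, flat, max_disp=1.0)
```

For translations inside the bound, 8-bit quantization costs at most about `max_disp / 255` per component, so the choice of `max_disp` trades range against precision.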

Grasping by Hanging: a Learning-Free Grasping Detection Method for Previously Unseen Objects

Aug 13, 2024

Abstract: This paper proposes a novel learning-free three-stage method that predicts grasping poses, enabling robots to pick up and transfer previously unseen objects. Our method first identifies potential structures that can afford the action of hanging by analyzing the hanging mechanics and geometric properties. Then 6D poses are detected for a parallel gripper retrofitted with an extending bar, which, when closed, forms loops to hook each hangable structure. Finally, an evaluation policy qualifies and ranks grasp candidates for execution attempts. Compared to traditional physical model-based and deep learning-based methods, our approach is closer to the natural human action of grasping unknown objects. It also eliminates the need for a vast amount of training data. To evaluate the effectiveness of the proposed method, we conducted experiments with a real robot. Experimental results indicate that the grasping accuracy and stability are significantly higher than those of the state-of-the-art learning-based method, especially for thin and flat objects.

Model-Free and Learning-Free Proprioceptive Humanoid Movement Control

Feb 28, 2023

Abstract: This paper presents a novel model-free method for humanoid-robot quasi-static movement control. Traditional model-based methods often require precise robot model parameters. Additionally, existing learning-based frameworks often train the policy in simulation environments, thereby indirectly relying on a model. In contrast, we propose a proprioceptive framework based only on sensory outputs. It does not require prior knowledge of a robot's kinematic model or inertial parameters. Our method consists of three steps: 1. Planning different pairs of center of pressure (CoP) and foot position objectives within a single cycle. 2. Searching around the current configuration by slightly moving the robot's leg joints back and forth while recording the sensor measurements of its CoP and foot positions. 3. Updating the robot motion with an optimization algorithm until all objectives are achieved. We demonstrate our approach on a NAO humanoid robot platform. Experimental results show that it can successfully generate stable robot motions.
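Steps 2 and 3 amount to estimating, purely from measurements, how the CoP and foot positions respond to small joint motions, and then moving toward an objective. The sketch below shows one way to do this under stated assumptions: the "back and forth" search is modeled as a central finite-difference Jacobian, and the unspecified optimization algorithm is replaced by a damped least-squares (Levenberg-Marquardt-style) update. `read_sensors`, `estimate_jacobian`, and `move_to_objective` are hypothetical names, not the authors' code; on a real NAO each `read_sensors` call would mean commanding the leg joints and reading the foot sensors.

```python
import numpy as np


def estimate_jacobian(read_sensors, q, delta=1e-2):
    """Central-difference Jacobian of the sensor readings w.r.t. joint angles.

    read_sensors is a hypothetical callable (joint angles -> stacked CoP and
    foot-position readings). Each joint is moved forward and backward by
    delta while the readings are recorded.
    """
    q = np.asarray(q, dtype=float)
    columns = []
    for i in range(q.size):
        step = np.zeros(q.size)
        step[i] = delta
        y_plus = np.asarray(read_sensors(q + step), dtype=float)
        y_minus = np.asarray(read_sensors(q - step), dtype=float)
        columns.append((y_plus - y_minus) / (2.0 * delta))
    return np.column_stack(columns)


def move_to_objective(read_sensors, q, target, damping=1e-3, tol=1e-4, max_iters=50):
    """Alternate search and update until the readings reach one objective."""
    q = np.asarray(q, dtype=float).copy()
    target = np.asarray(target, dtype=float)
    for _ in range(max_iters):
        error = target - np.asarray(read_sensors(q), dtype=float)
        if np.linalg.norm(error) < tol:
            break
        jac = estimate_jacobian(read_sensors, q)
        # Damped least squares: solve (J^T J + damping * I) dq = J^T error.
        lhs = jac.T @ jac + damping * np.eye(q.size)
        q += np.linalg.solve(lhs, jac.T @ error)
    return q
```

This handles a single objective; covering the planned cycle of CoP and foot-position pairs would mean calling `move_to_objective` once per objective in sequence. No robot model appears anywhere, only sensor readings, which is the property the abstract emphasizes.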
