Chee Meng Chew

Reasoning and Learning a Perceptual Metric for Self-Training of Reflective Objects in Bin-Picking with a Low-cost Camera

Mar 26, 2025

Abstract:Bin-picking of metal objects using low-cost RGB-D cameras often suffers from sparse depth information and reflective surface textures, leading to errors and the need for manual labeling. To reduce human intervention, we propose a two-stage framework consisting of a metric learning stage and a self-training stage. Specifically, to automatically process data captured by a low-cost camera (LC), we introduce a Multi-object Pose Reasoning (MoPR) algorithm that optimizes pose hypotheses under depth, collision, and boundary constraints. To further refine pose candidates, we adopt a Symmetry-aware Lie-group based Bayesian Gaussian Mixture Model (SaL-BGMM), integrated with the Expectation-Maximization (EM) algorithm, for symmetry-aware filtering. Additionally, we propose a Weighted Ranking Information Noise Contrastive Estimation (WR-InfoNCE) loss to enable the LC to learn a perceptual metric from reconstructed data, supporting self-training on untrained or even unseen objects. Experimental results show that our approach outperforms several state-of-the-art methods on both the ROBI dataset and our newly introduced Self-ROBI dataset.
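The abstract does not say how WR-InfoNCE weights or ranks its terms, so the sketch below shows only the standard, unweighted InfoNCE objective that it builds on. It is an illustration under that assumption, not the paper's loss; the function name `info_nce_loss` and the default temperature of 0.1 are hypothetical choices.

```python
import numpy as np

def info_nce_loss(anchors: np.ndarray, positives: np.ndarray,
                  temperature: float = 0.1) -> float:
    """Standard (unweighted) InfoNCE over a batch of paired embeddings.

    Row i of `positives` is the positive for row i of `anchors`; every other
    row of `positives` acts as a negative for that anchor.
    """
    # L2-normalise so that dot products are cosine similarities
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = (a @ p.T) / temperature                 # (N, N) similarity matrix
    # numerically stable row-wise log-softmax
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # the matching pair for anchor i sits on the diagonal
    return float(-np.mean(np.diag(log_probs)))

rng = np.random.default_rng(0)
emb = rng.normal(size=(8, 16))
print(info_nce_loss(emb, emb))        # matched pairs: low loss
print(info_nce_loss(emb, emb[::-1]))  # mismatched pairs: higher loss
```

A weighted-ranking variant would presumably reweight or reorder the terms inside the log-softmax; that part is left out here because the abstract does not describe it.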

Learning Stable Robot Grasping with Transformer-based Tactile Control Policies

Jul 30, 2024

Abstract:Measuring grasp stability is an important skill for dexterous robot manipulation tasks; stability can be inferred from haptic information with a tactile sensor. Control policies have to detect rotational displacement and slippage from tactile feedback, and determine a re-grasp strategy in terms of location and force. The classic stable grasp task only trains control policies to solve for re-grasp location with objects of fixed center of gravity. In this work, we propose a revamped version of the stable grasp task that optimises both re-grasp location and gripping force for objects with an unknown and moving center of gravity. We tackle this task with a model-free, end-to-end Transformer-based reinforcement learning framework. We show that, after training, our approach solves both objectives in simulation and, with zero-shot transfer, in a real-world setup. We also provide a performance analysis of different models to understand the dynamics of optimizing two opposing objectives.

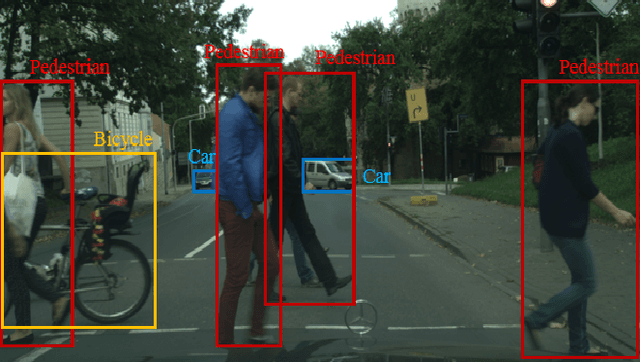

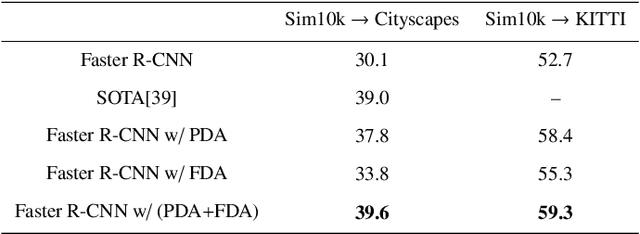

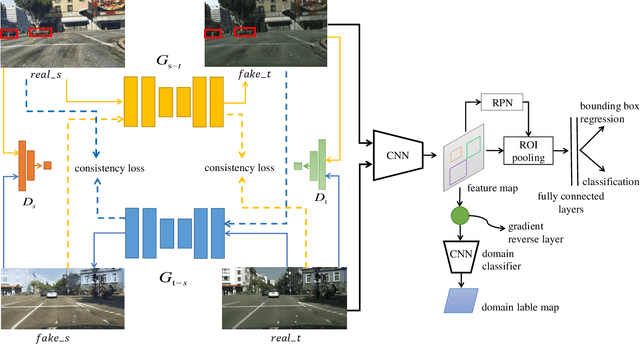

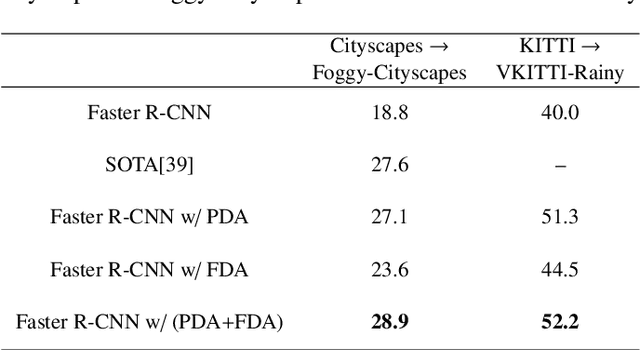

Pixel and Feature Level Based Domain Adaption for Object Detection in Autonomous Driving

Sep 30, 2018

Abstract:Annotating large-scale datasets to train modern convolutional neural networks is prohibitively expensive and time-consuming for many real tasks. One alternative is to train the model on labeled synthetic datasets and apply it to real scenes. However, this straightforward method often fails to generalize well, mainly due to the domain bias between the synthetic and real datasets. Many unsupervised domain adaptation (UDA) methods have been introduced to address this problem, but most of them focus only on the simpler classification task. In this paper, we present a novel UDA model to solve the more complex object detection problem in the context of autonomous driving. Our model integrates both pixel-level and feature-level transformations to fulfill the cross-domain detection task and can be further trained end-to-end to achieve better performance. We employ the generative adversarial network objective and the cycle consistency loss for image translation in the pixel space. To address the potential semantic inconsistency problem, we propose region-proposal-based feature adversarial training to preserve the semantics of our target objects and further minimize the domain shift. Extensive experiments are conducted on several different datasets, and the results demonstrate the robustness and superiority of our method.
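The abstract names the cycle consistency loss without giving its form. A minimal NumPy sketch of the L1 version popularized by CycleGAN is shown below, assuming two translation networks `G` (synthetic to real) and `F` (real to synthetic); both are hypothetical stand-ins here, passed as plain callables rather than trained generators.

```python
from typing import Callable

import numpy as np

Array = np.ndarray

def cycle_consistency_loss(x_src: Array, y_tgt: Array,
                           G: Callable[[Array], Array],
                           F: Callable[[Array], Array]) -> float:
    """L1 cycle-consistency loss (CycleGAN-style).

    G translates source-domain images into the target domain, F translates
    back; a round trip through both should reproduce the input.
    """
    forward_cycle = np.abs(F(G(x_src)) - x_src).mean()   # x -> G(x) -> F(G(x)) should match x
    backward_cycle = np.abs(G(F(y_tgt)) - y_tgt).mean()  # y -> F(y) -> G(F(y)) should match y
    return float(forward_cycle + backward_cycle)

# Usage with toy stand-ins for the translation networks
rng = np.random.default_rng(0)
x = rng.normal(size=(2, 3, 8, 8))   # batch of "synthetic" images (N, C, H, W)
y = rng.normal(size=(2, 3, 8, 8))   # batch of "real" images
print(cycle_consistency_loss(x, y, lambda a: 2 * a, lambda b: b / 2))  # 0.0: F inverts G exactly
```

In a full pixel-level adaptation model this term would be added to the adversarial losses of both generators; it penalizes translations that discard content, which is what keeps the translated images usable for detection.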

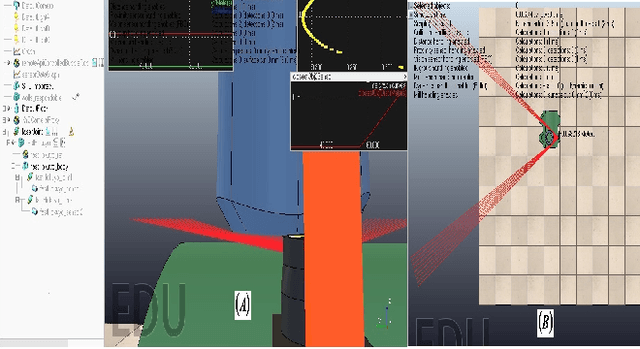

Object Detection and Motion Planning for Automated Welding of Tubular Joints

Sep 27, 2018

Abstract:Automatic welding of tubular TKY joints is an important and challenging task for the marine and offshore industry. In this paper, a framework for tubular joint detection and motion planning is proposed. The pose of the real tubular joint is detected using RGB-D sensors and is then used to obtain a real-to-virtual mapping for positioning the workpiece in a virtual environment. For motion planning, a Bi-directional Transition-based Rapidly-exploring Random Tree (BiTRRT) algorithm is used to generate trajectories for reaching the desired goals. The complete framework is verified experimentally, and the results show that the robot welding torch can move without collision to desired goals close to the tubular joint.

Edge and Corner Detection for Unorganized 3D Point Clouds with Application to Robotic Welding

Sep 27, 2018

Abstract:In this paper, we propose novel edge and corner detection algorithms for unorganized point clouds. Our edge detection method evaluates symmetry in a local neighborhood and uses an adaptive density-based threshold to differentiate 3D edge points. We extend this algorithm to a novel corner detector that clusters curvature vectors and uses their geometrical statistics to classify a point as a corner. We perform a rigorous evaluation of the algorithms on RGB-D semantic segmentation data and 3D washer models from the ShapeNet dataset and report higher precision and recall scores. Finally, we demonstrate how our edge and corner detectors can serve as a novel approach to automatic weld seam detection for robotic welding. We propose to generate weld seams directly from a point cloud, as opposed to using 3D models for offline planning of welding paths. For this application, we compare Harris 3D with our proposed approach on a panel workpiece.
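The abstract describes the edge test only at a high level: local symmetry plus a density-adaptive threshold. A minimal sketch of that idea follows, assuming a centroid-shift criterion: a point is flagged as an edge when the centroid of its `k` nearest neighbors lies more than `lam` times its nearest-neighbor spacing away from it. The criterion and the parameter values are illustrative assumptions, not the paper's published method.

```python
import numpy as np

def detect_edge_points(points: np.ndarray, k: int = 8, lam: float = 0.5) -> np.ndarray:
    """Boolean mask of points whose k-neighbourhood is lopsided (edge candidates)."""
    # brute-force pairwise distances: O(N^2) memory, fine for small clouds
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)                 # a point is not its own neighbour
    nbr_idx = np.argsort(dist, axis=1)[:, :k]      # indices of the k nearest neighbours
    centroids = points[nbr_idx].mean(axis=1)       # (N, 3) neighbourhood centroids
    shift = np.linalg.norm(centroids - points, axis=1)
    spacing = dist.min(axis=1)                     # local density: nearest-neighbour distance
    # symmetric neighbourhoods keep the centroid on the point; edges pull it inward
    return shift > lam * spacing

# Usage: a flat 7 x 7 grid with unit spacing; its 24 border points should be flagged
xs, ys = np.meshgrid(np.arange(7.0), np.arange(7.0))
grid = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(49)])
mask = detect_edge_points(grid)
print(int(mask.sum()))  # 24
```

Scaling the threshold by the nearest-neighbor spacing is what makes it density-adaptive: the same `lam` works for sparse and dense regions of the cloud. For real scans, a k-d tree would replace the O(N^2) distance matrix.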

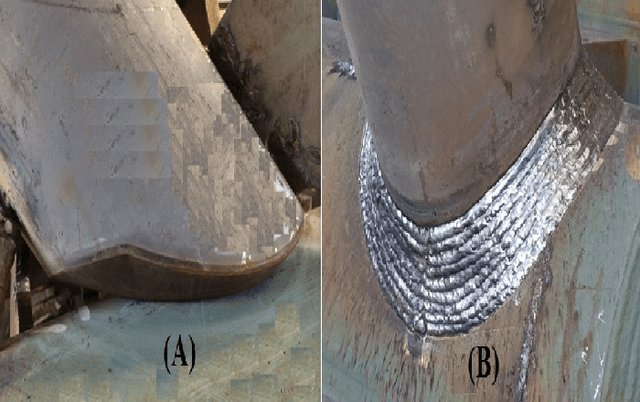

Model-Free 3D Reconstruction of Weld Joint Using Laser Scanning

Jul 08, 2015

Abstract:This article presents a novel use of the information-theoretic concept of entropy for model-free 3D reconstruction of a weld joint in the presence of noise. We show that our formulation attains its global minimum at the upper edge of this joint, a property that significantly simplifies extraction of the joint. Furthermore, we present an approach to compute the volume of the extracted space to facilitate monitoring the progress of the welding task. Moreover, we provide a preliminary analysis of how variation in the noise affects the extraction of this space, in order to assess the impact of the noise on the computation of its area and volume.

Application of Deep Neural Network in Estimation of the Weld Bead Parameters

Jul 08, 2015

Abstract:We present a deep learning approach to estimating the bead parameters in welding tasks. Our model is based on a four-hidden-layer neural network architecture. More specifically, the first three hidden layers of this architecture use the sigmoid function to produce their respective intermediate outputs. The last hidden layer, on the other hand, uses a linear transformation to generate the final output of the architecture. This turns our deep network from a classifier into a non-linear regression model. We compare the performance of our deep network with selected results from the literature and show a considerable reduction in the estimation errors for these values. Furthermore, we show that it scales to estimating the weld bead parameters with the same level of accuracy on a combination of datasets that pertain to different welding techniques. This is a nontrivial result that runs counter to the general belief in this field of research.
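The architecture described above (three sigmoid layers followed by a linear layer that produces the output) can be sketched as a plain NumPy forward pass. The input and output sizes (4 welding process inputs, 3 bead parameters), the hidden width of 16, and the weight initialization are hypothetical; the abstract does not give them, and training is omitted.

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def init_params(layer_sizes, seed=0):
    """One (W, b) pair per layer; small random weights, zero biases."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

def forward(x: np.ndarray, params) -> np.ndarray:
    h = x
    for W, b in params[:-1]:
        h = sigmoid(h @ W + b)   # first three layers: sigmoid activations
    W, b = params[-1]
    return h @ W + b             # last layer: linear, so outputs are unbounded real values

# Hypothetical sizes: 4 process inputs -> 3 sigmoid layers of 16 units -> 3 bead parameters
params = init_params([4, 16, 16, 16, 3])
print(forward(np.ones((5, 4)), params).shape)  # (5, 3)
```

The linear final layer is what turns the network into a regressor: a sigmoid there would squash every estimate into (0, 1), whereas bead dimensions are continuous real values.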

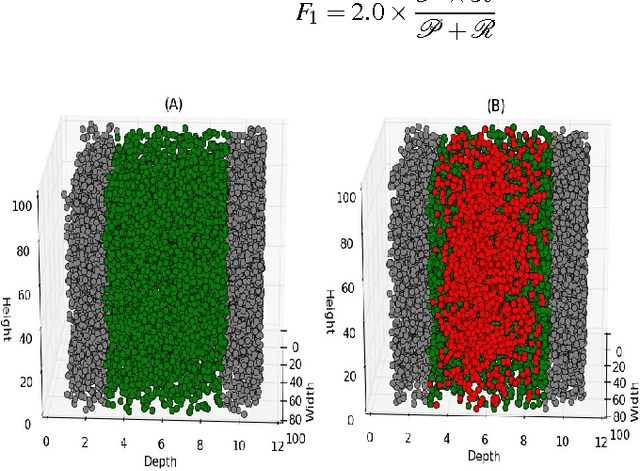

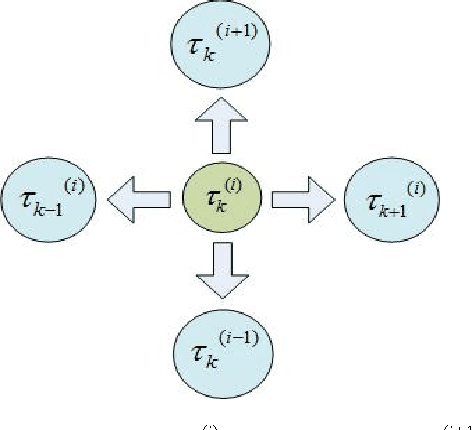

An Adaptive Sampling Approach to 3D Reconstruction of Weld Joint

Jul 08, 2015

Abstract:We present an adaptive sampling approach to 3D reconstruction of the welding joint using the point cloud generated by a laser sensor. We start with a randomized strategy to approximate the surface of the volume of interest through selection of a number of pivotal candidates. Furthermore, we introduce three proposal distributions over the neighborhood of each of these pivots to adaptively sample from their neighbors, refining the original randomized approximation to incrementally reconstruct this welding space. We prevent our algorithm from being trapped in a neighborhood by permanently labeling the visited samples. In addition, we accumulate the accepted candidates along with their selected neighbors in a queue structure so that every selected sample contributes to the evolution of the reconstructed welding space as the algorithm progresses. We compare the performance of our adaptive sampling algorithm with random sampling, with and without replacement, and show a significant reduction in the total number of samples drawn to identify the region of interest; by expanding on neighboring samples, the algorithm extracts the entire region in fewer iterations and a shorter computation time.
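The control flow described above (random pivot search, permanent visited labels, and a queue of accepted samples that expand into their neighbors) can be sketched on a 2D grid of samples. The abstract does not specify its three proposal distributions, so this sketch substitutes plain 4-neighborhood expansion; the grid, the `in_roi` predicate, and `n_pivots` are hypothetical.

```python
import random
from collections import deque
from typing import Callable, Set, Tuple

Cell = Tuple[int, int]

def adaptive_region_extract(width: int, height: int,
                            in_roi: Callable[[Cell], bool],
                            n_pivots: int = 1, seed: int = 0) -> Tuple[Set[Cell], int]:
    """Return (extracted_cells, number_of_cells_evaluated)."""
    rng = random.Random(seed)
    cells = [(x, y) for x in range(width) for y in range(height)]
    rng.shuffle(cells)                 # randomized search order for pivot candidates
    visited: Set[Cell] = set()         # permanent labels: a cell is evaluated at most once
    region: Set[Cell] = set()
    pivots_found = 0
    for pivot in cells:
        if pivots_found >= n_pivots:
            break
        if pivot in visited:
            continue
        visited.add(pivot)
        if not in_roi(pivot):
            continue
        pivots_found += 1
        region.add(pivot)
        queue = deque([pivot])         # accepted samples wait here to expand their neighbours
        while queue:
            x, y = queue.popleft()
            for nb in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                inside = 0 <= nb[0] < width and 0 <= nb[1] < height
                if inside and nb not in visited:
                    visited.add(nb)
                    if in_roi(nb):
                        region.add(nb)
                        queue.append(nb)
    return region, len(visited)

roi = lambda c: 5 <= c[0] < 15 and 8 <= c[1] < 12   # hypothetical 10 x 4 region of interest
region, n_evaluated = adaptive_region_extract(20, 20, roi)
print(len(region))  # 40
```

Once a single pivot lands inside a connected region, the queue recovers the rest of it while evaluating only the region and its immediate border, which is the source of the savings over drawing every sample at random.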
