Yuhu Shan

Distilling Pixel-Wise Feature Similarities for Semantic Segmentation

Oct 31, 2019

Abstract: Among neural network compression techniques, knowledge distillation is an effective one that forces a simpler student network to mimic the output of a larger teacher network. However, most such model distillation methods focus on the image-level classification task. Directly adapting these methods to the task of semantic segmentation brings only marginal improvements. In this paper, we propose a simple yet effective knowledge representation, referred to as pixel-wise feature similarities (PFS), to tackle the challenging distillation problem of semantic segmentation. The developed PFS encodes spatial structural information for each pixel location of the high-level convolutional features, which makes the distillation process easier to guide. Furthermore, a novel weighted pixel-level soft prediction imitation approach is proposed to enable the student network to selectively mimic the teacher network's output according to their pixel-wise knowledge gaps. Extensive experiments are conducted on the challenging datasets of Pascal VOC 2012, ADE20K and Pascal Context. Our approach brings significant performance improvements compared to several strong baselines and achieves new state-of-the-art results.
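
The abstract does not give the exact PFS formulation, so the following is only a minimal sketch of one plausible reading: each pixel of a feature map is described by its cosine similarity to every other pixel, and the student is penalized when its similarity map differs from the teacher's. The function names, the choice of cosine similarity, and the mean-squared-error penalty are all assumptions made for illustration, not the paper's definitions.

```python
import numpy as np


def pairwise_similarity(features, eps=1e-8):
    """Cosine similarity between every pair of pixel locations.

    features: array of shape (C, H, W).
    Returns an (H*W, H*W) matrix. Cosine similarity is an assumption here;
    the abstract does not specify the similarity measure.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w).T  # (H*W, C): one feature vector per pixel
    norms = np.linalg.norm(flat, axis=1, keepdims=True)
    unit = flat / np.maximum(norms, eps)  # guard against all-zero feature vectors
    return unit @ unit.T


def similarity_distillation_loss(student_feat, teacher_feat):
    """Mean squared difference between student and teacher similarity maps.

    The MSE penalty is a hypothetical choice for this sketch.
    """
    s = pairwise_similarity(student_feat)
    t = pairwise_similarity(teacher_feat)
    return float(np.mean((s - t) ** 2))
```

One property of this construction is that the similarity matrix depends only on the spatial size, so the student and teacher feature maps may have different channel counts as long as their height and width match.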

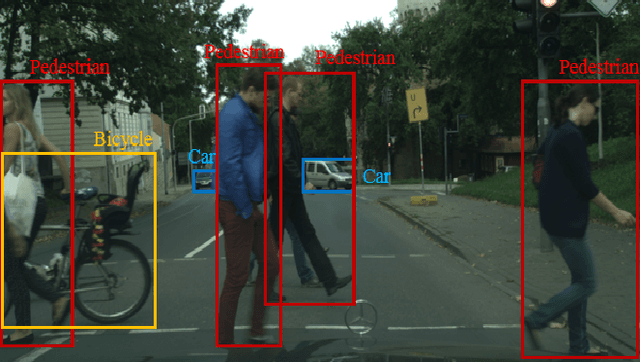

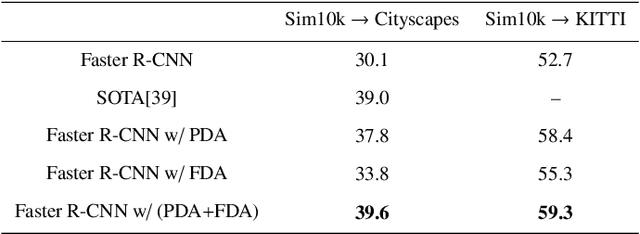

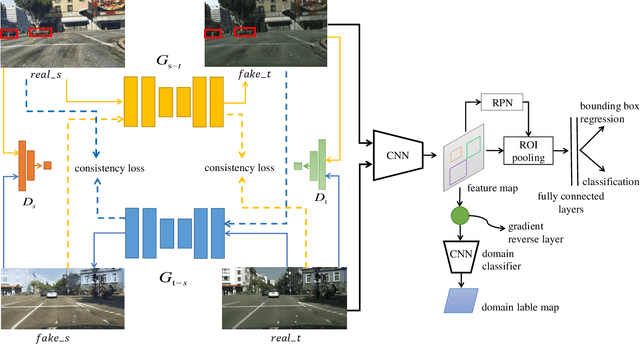

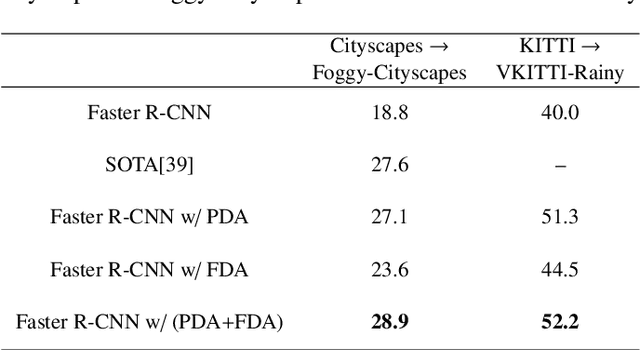

Pixel and Feature Level Based Domain Adaption for Object Detection in Autonomous Driving

Sep 30, 2018

Abstract: Annotating large-scale datasets to train modern convolutional neural networks is prohibitively expensive and time-consuming for many real tasks. One alternative is to train the model on labeled synthetic datasets and apply it to real scenes. However, this straightforward method often fails to generalize well, mainly due to the domain bias between the synthetic and real datasets. Many unsupervised domain adaptation (UDA) methods have been introduced to address this problem, but most of them focus only on the simple classification task. In this paper, we present a novel UDA model to solve the more complex object detection problem in the context of autonomous driving. Our model integrates both pixel-level and feature-level transformations to fulfill the cross-domain detection task and can be further trained end-to-end to pursue better performance. We employ the objectives of the generative adversarial network and the cycle consistency loss for image translation in the pixel space. To address the potential semantic inconsistency problem, we propose region-proposal-based feature adversarial training to preserve the semantics of our target objects as well as further minimize the domain shifts. Extensive experiments are conducted on several different datasets, and the results demonstrate the robustness and superiority of our method.
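
To illustrate the cycle consistency loss mentioned in the abstract, here is a minimal sketch in its commonly used L1 form: an image translated to the other domain and back should match the original. The generator arguments `g_src_to_tgt` and `g_tgt_to_src` are hypothetical callables standing in for the image translation networks, and the unweighted sum of the two directions is an assumption; the abstract does not state the paper's exact formulation.

```python
import numpy as np


def cycle_consistency_loss(x_src, x_tgt, g_src_to_tgt, g_tgt_to_src):
    """L1 cycle consistency over both translation directions.

    x_src, x_tgt: image arrays from the source (synthetic) and target (real) domains.
    g_src_to_tgt, g_tgt_to_src: hypothetical generator callables (array -> array).
    """
    recon_src = g_tgt_to_src(g_src_to_tgt(x_src))  # source -> target -> source
    recon_tgt = g_src_to_tgt(g_tgt_to_src(x_tgt))  # target -> source -> target
    loss_src = np.mean(np.abs(recon_src - x_src))
    loss_tgt = np.mean(np.abs(recon_tgt - x_tgt))
    return float(loss_src + loss_tgt)
```

In a full training setup this term would be added to the adversarial objectives; here it is shown in isolation with plain arrays so the behavior is easy to check with toy generators.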
