Yufan Zhou

DeepPersona: A Generative Engine for Scaling Deep Synthetic Personas

Nov 11, 2025

Abstract: Simulating human profiles by instilling personas into large language models (LLMs) is rapidly transforming research in agentic behavioral simulation, LLM personalization, and human-AI alignment. However, most existing synthetic personas remain shallow and simplistic, capturing minimal attributes and failing to reflect the rich complexity and diversity of real human identities. We introduce DEEPPERSONA, a scalable generative engine for synthesizing narrative-complete synthetic personas through a two-stage, taxonomy-guided method. First, we algorithmically construct the largest-ever human-attribute taxonomy, comprising hundreds of hierarchically organized attributes, by mining thousands of real user-ChatGPT conversations. Second, we progressively sample attributes from this taxonomy, conditionally generating coherent and realistic personas that average hundreds of structured attributes and roughly 1 MB of narrative text, two orders of magnitude deeper than prior work. Intrinsic evaluations confirm significant improvements in attribute diversity (32 percent higher coverage) and profile uniqueness (44 percent greater) compared to state-of-the-art baselines. Extrinsically, our personas enhance GPT-4.1-mini's personalized question answering accuracy by 11.6 percent on average across ten metrics and substantially narrow (by 31.7 percent) the gap between simulated LLM citizens and authentic human responses in social surveys. Our generated national citizens reduced the performance gap on the Big Five personality test by 17 percent relative to LLM-simulated citizens. DEEPPERSONA thus provides a rigorous, scalable, and privacy-free platform for high-fidelity human simulation and personalized AI research.

* 12 pages, 5 figures, accepted at LAW 2025 Workshop (NeurIPS 2025). Project page: https://deeppersona-ai.github.io/

Robust Super-Resolution Compressive Sensing: A Two-timescale Alternating MAP Approach

Aug 09, 2025

Abstract: The problem of super-resolution compressive sensing (SR-CS) is crucial for various wireless sensing and communication applications. Existing methods often suffer from limited resolution capabilities and sensitivity to hyper-parameters, hindering their ability to accurately recover sparse signals when the grid parameters do not lie precisely on a fixed grid and are close to each other. To overcome these limitations, this paper introduces a novel robust super-resolution compressive sensing algorithmic framework using a two-timescale alternating maximum a posteriori (MAP) approach. At the slow timescale, the proposed framework iterates between a sparse signal estimation module and a grid update module. In the sparse signal estimation module, a hyperbolic-tangent prior distribution based variational Bayesian inference (tanh-VBI) algorithm with a strong sparsity promotion capability is adopted to estimate the posterior probability of the sparse vector and accurately identify active grid components carrying primary energy under a dense grid. Subsequently, the grid update module utilizes the BFGS algorithm to refine these low-dimensional active grid components at a faster timescale to achieve super-resolution estimation of the grid parameters with a low computational cost. The proposed scheme is applied to the channel extrapolation problem, and simulation results demonstrate the superiority of the proposed scheme compared to baseline schemes.

Scaling Up Audio-Synchronized Visual Animation: An Efficient Training Paradigm

Aug 05, 2025

Abstract: Recent advances in audio-synchronized visual animation enable control of video content using audio from specific classes. However, existing methods rely heavily on expensive manual curation of high-quality, class-specific training videos, posing challenges to scaling up to diverse audio-video classes in the open world. In this work, we propose an efficient two-stage training paradigm to scale up audio-synchronized visual animation using abundant but noisy videos. In stage one, we automatically curate large-scale videos for pretraining, allowing the model to learn diverse but imperfect audio-video alignments. In stage two, we finetune the model on manually curated high-quality examples, but only at a small scale, significantly reducing the required human effort. We further enhance synchronization by allowing each frame to access rich audio context via multi-feature conditioning and window attention. To efficiently train the model, we leverage a pretrained text-to-video generator and audio encoders, introducing only 1.9% additional trainable parameters to learn audio-conditioning capability without compromising the generator's prior knowledge. For evaluation, we introduce AVSync48, a benchmark with videos from 48 classes, which is 3× more diverse than previous benchmarks. Extensive experiments show that our method significantly reduces reliance on manual curation by over 10×, while generalizing to many open classes.

OmniPart: Part-Aware 3D Generation with Semantic Decoupling and Structural Cohesion

Jul 08, 2025

Abstract: The creation of 3D assets with explicit, editable part structures is crucial for advancing interactive applications, yet most generative methods produce only monolithic shapes, limiting their utility. We introduce OmniPart, a novel framework for part-aware 3D object generation designed to achieve high semantic decoupling among components while maintaining robust structural cohesion. OmniPart uniquely decouples this complex task into two synergistic stages: (1) an autoregressive structure planning module generates a controllable, variable-length sequence of 3D part bounding boxes, critically guided by flexible 2D part masks that allow for intuitive control over part decomposition without requiring direct correspondences or semantic labels; and (2) a spatially-conditioned rectified flow model, efficiently adapted from a pre-trained holistic 3D generator, synthesizes all 3D parts simultaneously and consistently within the planned layout. Our approach supports user-defined part granularity and precise localization, and enables diverse downstream applications. Extensive experiments demonstrate that OmniPart achieves state-of-the-art performance, paving the way for more interpretable, editable, and versatile 3D content.

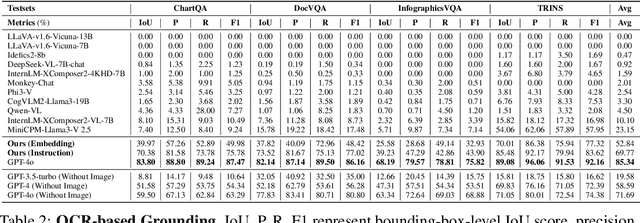

Towards Visual Text Grounding of Multimodal Large Language Model

Apr 07, 2025

Abstract: Despite the evolution of Multimodal Large Language Models (MLLMs), a non-negligible limitation remains in their struggle with visual text grounding, especially in text-rich images of documents. Document images, such as scanned forms and infographics, highlight critical challenges due to their complex layouts and textual content. However, current benchmarks do not fully address these challenges, as they mostly focus on visual grounding on natural images, rather than text-rich document images. Thus, to bridge this gap, we introduce TRIG, a novel task with a newly designed instruction dataset for benchmarking and improving the Text-Rich Image Grounding capabilities of MLLMs in document question-answering. Specifically, we propose an OCR-LLM-human interaction pipeline to create 800 manually annotated question-answer pairs as a benchmark and a large-scale training set of 90k synthetic data based on four diverse datasets. A comprehensive evaluation of various MLLMs on our proposed benchmark exposes substantial limitations in their grounding capability on text-rich images. In addition, we propose two simple and effective TRIG methods based on general instruction tuning and plug-and-play efficient embedding, respectively. Finetuning MLLMs on our synthetic dataset promisingly improves their spatial reasoning and grounding capabilities.

A Survey on Mechanistic Interpretability for Multi-Modal Foundation Models

Feb 22, 2025

Abstract: The rise of foundation models has transformed machine learning research, prompting efforts to uncover their inner workings and develop more efficient and reliable applications for better control. While significant progress has been made in interpreting Large Language Models (LLMs), multimodal foundation models (MMFMs) - such as contrastive vision-language models, generative vision-language models, and text-to-image models - pose unique interpretability challenges beyond unimodal frameworks. Despite initial studies, a substantial gap remains between the interpretability of LLMs and MMFMs. This survey explores two key aspects: (1) the adaptation of LLM interpretability methods to multimodal models and (2) understanding the mechanistic differences between unimodal language models and crossmodal systems. By systematically reviewing current MMFM analysis techniques, we propose a structured taxonomy of interpretability methods, compare insights across unimodal and multimodal architectures, and highlight critical research gaps.

FreeBlend: Advancing Concept Blending with Staged Feedback-Driven Interpolation Diffusion

Feb 08, 2025

Abstract: Concept blending is a promising yet underexplored area in generative models. While recent approaches, such as embedding mixing and latent modification based on structural sketches, have been proposed, they often suffer from incompatible semantic information and discrepancies in shape and appearance. In this work, we introduce FreeBlend, an effective, training-free framework designed to address these challenges. To mitigate cross-modal loss and enhance feature detail, we leverage transferred image embeddings as conditional inputs. The framework employs a stepwise increasing interpolation strategy between latents, progressively adjusting the blending ratio to seamlessly integrate auxiliary features. Additionally, we introduce a feedback-driven mechanism that updates the auxiliary latents in reverse order, facilitating global blending and preventing rigid or unnatural outputs. Extensive experiments demonstrate that our method significantly improves both the semantic coherence and visual quality of blended images, yielding compelling and coherent results.
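The stepwise increasing interpolation mentioned in the FreeBlend abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the linear schedule, the function names `blend_schedule` and `interpolate`, and the use of flat lists of floats in place of latent tensors are all assumptions made for illustration, and the feedback-driven update is not modeled.

```python
def blend_schedule(num_steps, start=0.0, end=1.0):
    """Return one blending ratio per step, rising linearly from start to end.

    Assumption: a linear ramp; the abstract only says the ratio increases stepwise.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    if num_steps == 1:
        return [end]
    return [start + (end - start) * i / (num_steps - 1) for i in range(num_steps)]


def interpolate(main_latent, aux_latent, ratio):
    """Linearly mix two equal-length latents; ratio is the weight of the auxiliary one."""
    if len(main_latent) != len(aux_latent):
        raise ValueError("latents must have the same length")
    return [(1.0 - ratio) * m + ratio * a for m, a in zip(main_latent, aux_latent)]


# Hypothetical usage: the auxiliary features gain weight as the steps progress.
main = [0.0, 2.0]
aux = [4.0, 6.0]
for ratio in blend_schedule(5, start=0.0, end=0.5):
    blended = interpolate(main, aux, ratio)
```

Starting with a small ratio keeps the main latent dominant early on, and raising it gradually lets the auxiliary features enter without an abrupt switch, which is the intuition the abstract describes.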

Scattering Environment Aware Joint Multi-user Channel Estimation and Localization with Spatially Reused Pilots

Jan 04, 2025

Abstract: The increasing number of users leads to an increase in pilot overhead, and the limited pilot resources make it challenging to support all users using orthogonal pilots. By fully capturing the inherent physical characteristics of the multi-user (MU) environment, it is possible to reduce pilot costs and improve the channel estimation performance. In reality, users nearby may share the same scatterer, while users further apart tend to have orthogonal channels. This paper proposes a two-timescale approach for joint MU uplink channel estimation and localization in MIMO-OFDM systems, which fully captures the spatial characteristics of MUs. To accurately represent the structure of the MU channel, the channel is modeled in the 3-D location domain. In the long-timescale phase, the time-space-time multiple signal classification (TST-MUSIC) algorithm initially offers a rough approximation of scatterer positions for each user, which is subsequently refined through the scatterer association algorithm based on the density-based spatial clustering of applications with noise (DBSCAN) algorithm. The BS then utilizes this prior information to apply a graph-coloring-based user grouping algorithm, enabling spatial division multiplexing of pilots and reducing pilot overhead. In the short-timescale phase, a low-complexity scattering environment aware location-domain turbo channel estimation (SEA-LD-TurboCE) algorithm is introduced to merge the overlapping scatterer information from MUs, facilitating high-precision joint MU channel estimation and localization under spatially reused pilots. Simulation results verify the superior channel estimation and localization performance of our proposed scheme over the baselines.
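The graph-coloring-based user grouping step can be sketched as follows. This is only an illustration under stated assumptions, not the paper's algorithm: it assumes that two users conflict (and so must not reuse a pilot) whenever they share at least one scatterer, and it uses a simple greedy, largest-degree-first coloring. The function name `group_users` and the input format (a dict mapping user names to sets of scatterer IDs) are hypothetical.

```python
def group_users(scatterers_by_user):
    """Assign a pilot index to each user so that conflicting users differ.

    scatterers_by_user: dict mapping a user name to a set of scatterer IDs.
    Assumption: users sharing any scatterer conflict and need different pilots.
    """
    users = sorted(scatterers_by_user)

    # Build the conflict graph from shared scatterers.
    conflicts = {u: set() for u in users}
    for i, u in enumerate(users):
        for v in users[i + 1:]:
            if scatterers_by_user[u] & scatterers_by_user[v]:
                conflicts[u].add(v)
                conflicts[v].add(u)

    # Greedy coloring: handle the most constrained users first.
    order = sorted(users, key=lambda u: (-len(conflicts[u]), u))
    pilot_of = {}
    for u in order:
        used = {pilot_of[v] for v in conflicts[u] if v in pilot_of}
        pilot = 0
        while pilot in used:
            pilot += 1
        pilot_of[u] = pilot
    return pilot_of


# Hypothetical example: u1/u2 and u2/u4 share scatterers; u3 is isolated.
pilots = group_users({"u1": {1, 2}, "u2": {2, 3}, "u3": {4}, "u4": {3, 5}})
```

In this toy example four users are served with two pilots, since users without a common scatterer are allowed to share one. Greedy coloring does not guarantee the minimum number of pilots in general; it is used here only because it is short and easy to follow.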

A High-Quality Text-Rich Image Instruction Tuning Dataset via Hybrid Instruction Generation

Dec 20, 2024

Abstract: Large multimodal models still struggle with text-rich images because of inadequate training data. Self-Instruct provides an annotation-free way of generating instruction data, but its quality is poor, as multimodal alignment remains a hurdle even for the largest models. In this work, we propose LLaVAR-2 to enhance multimodal alignment for text-rich images through hybrid instruction generation between human annotators and large language models. Specifically, it involves detailed image captions from human annotators, followed by the use of these annotations in tailored text prompts for GPT-4o to curate a dataset. It also implements several mechanisms to filter out low-quality data, and the resulting dataset comprises 424k high-quality pairs of instructions. Empirical results show that models fine-tuned on this dataset exhibit impressive enhancements over those trained with self-instruct data.

Numerical Pruning for Efficient Autoregressive Models

Dec 17, 2024

Abstract: Transformers have emerged as the leading architecture in deep learning, proving to be versatile and highly effective across diverse domains beyond language and image processing. However, their impressive performance often incurs high computational costs due to their substantial model size. This paper focuses on compressing decoder-only transformer-based autoregressive models through structural weight pruning to improve model efficiency while preserving performance for both language and image generation tasks. Specifically, we propose a training-free pruning method that calculates a numerical score with Newton's method for the Attention and MLP modules, respectively. We further propose a compensation algorithm to recover the performance of the pruned model. To verify the effectiveness of our method, we provide both theoretical support and extensive experiments. Our experiments show that our method achieves state-of-the-art performance with reduced memory usage and faster generation speeds on GPUs.
