Wenbo Xu

MARE: Multimodal Alignment and Reinforcement for Explainable Deepfake Detection via Vision-Language Models

Jan 29, 2026

Abstract: Deepfake detection is a widely researched topic that is crucial for combating the spread of malicious content, with existing methods mainly modeling the problem as classification or spatial localization. The rapid advancement of generative models imposes new demands on Deepfake detection. In this paper, we propose multimodal alignment and reinforcement for explainable Deepfake detection via vision-language models, termed MARE, which aims to enhance the accuracy and reliability of Vision-Language Models (VLMs) in Deepfake detection and reasoning. Specifically, MARE designs comprehensive reward functions, incorporating reinforcement learning from human feedback (RLHF), to incentivize the generation of text-spatially aligned reasoning content that adheres to human preferences. In addition, MARE introduces a forgery disentanglement module to capture intrinsic forgery traces from high-level facial semantics, thereby improving its authenticity detection capability. We conduct thorough evaluations on the reasoning content generated by MARE. Both quantitative and qualitative experimental results demonstrate that MARE achieves state-of-the-art performance in terms of accuracy and reliability.

Hierarchically Block-Sparse Recovery With Prior Support Information

Nov 09, 2025

Abstract: We provide new recovery bounds for hierarchical compressed sensing (HCS) based on prior support information (PSI). A detailed PSI-enabled reconstruction model is formulated using various forms of PSI. The hierarchical block orthogonal matching pursuit with PSI (HiBOMP-P) algorithm is designed in a recursive form to reliably recover hierarchically block-sparse signals. We derive exact recovery conditions (ERCs) measured by the mutual incoherence property (MIP), wherein hierarchical MIP concepts are proposed, and further develop reconstructible sparsity levels to reveal sufficient conditions for ERCs. Leveraging these MIP analyses, we present several extended insights, including reliable recovery conditions in noisy scenarios and the optimal hierarchical structure for cases where sparsity is not equal to zero. Our results further confirm that HCS offers improved recovery performance even when the prior information does not overlap with the true support set, whereas existing methods heavily rely on this overlap, thereby compromising performance if it is absent.
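As a rough illustration of the mutual incoherence property (MIP) by which the recovery conditions above are measured, the sketch below computes the standard mutual coherence of a measurement matrix, that is, the largest absolute normalized inner product between distinct columns. It covers only the conventional, non-hierarchical quantity; the hierarchical MIP concepts proposed in the paper are not reproduced here, and the function name `mutual_coherence` and the toy matrix are assumptions made for illustration.

```python
import math


def mutual_coherence(columns):
    """Largest |<a_i, a_j>| / (||a_i|| * ||a_j||) over distinct columns.

    `columns` is a list of equal-length column vectors (lists of floats).
    This is the standard, non-hierarchical mutual coherence.
    """
    norms = [math.sqrt(sum(x * x for x in col)) for col in columns]
    if any(n == 0.0 for n in norms):
        raise ValueError("columns must be nonzero")
    mu = 0.0
    for i in range(len(columns)):
        for j in range(i + 1, len(columns)):
            inner = sum(a * b for a, b in zip(columns[i], columns[j]))
            mu = max(mu, abs(inner) / (norms[i] * norms[j]))
    return mu


# Toy dictionary (hypothetical): two orthogonal columns plus a diagonal one.
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mu = mutual_coherence(A)
print(round(mu, 4))
```

A smaller coherence generally permits a larger recoverable sparsity level. For plain orthogonal matching pursuit without block structure or prior support, the classical sufficient condition is K < (1 + 1/μ)/2; the bounds in the paper refine this kind of condition for the hierarchical, PSI-enabled setting.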

A Multimodal Deviation Perceiving Framework for Weakly-Supervised Temporal Forgery Localization

Jul 22, 2025

Abstract: Current research on Deepfake forensics often treats detection as a classification task or a temporal forgery localization problem, approaches that are usually restrictive, time-consuming, and challenging to scale to large datasets. To resolve these issues, we present a multimodal deviation perceiving framework for weakly-supervised temporal forgery localization (MDP), which aims to identify partially forged temporal segments using only video-level annotations. MDP proposes a novel multimodal interaction mechanism (MI) and an extensible deviation perceiving loss to perceive multimodal deviation, which enables refined localization of the start and end timestamps of forged segments. Specifically, MI introduces a temporal property preserving cross-modal attention to measure the relevance between the visual and audio modalities in the probabilistic embedding space. It can identify the inter-modality deviation and construct comprehensive video features for temporal forgery localization. To further explore temporal deviation for weakly-supervised learning, an extensible deviation perceiving loss is proposed, which aims to enlarge the deviation between adjacent segments of forged samples and reduce that of genuine samples. Extensive experiments demonstrate the effectiveness of the proposed framework, which achieves results comparable to fully-supervised approaches on several evaluation metrics.

FDBPL: Faster Distillation-Based Prompt Learning for Region-Aware Vision-Language Models Adaptation

May 23, 2025

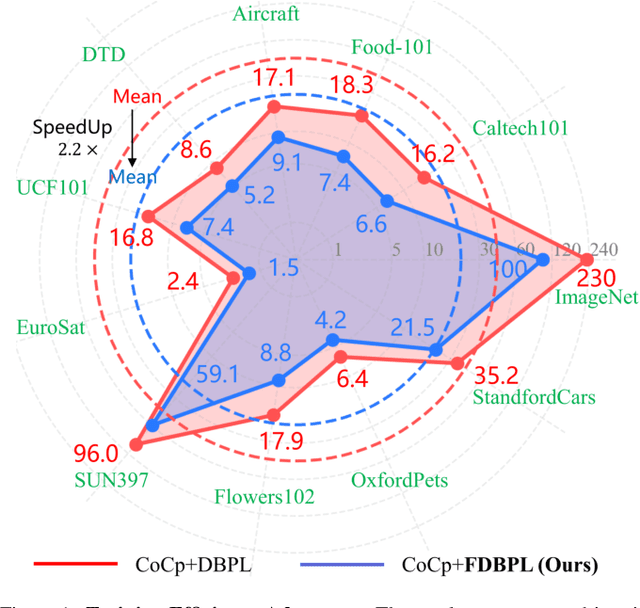

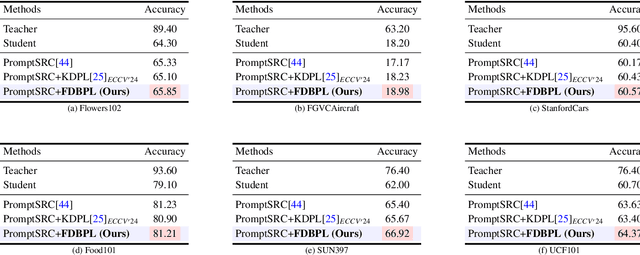

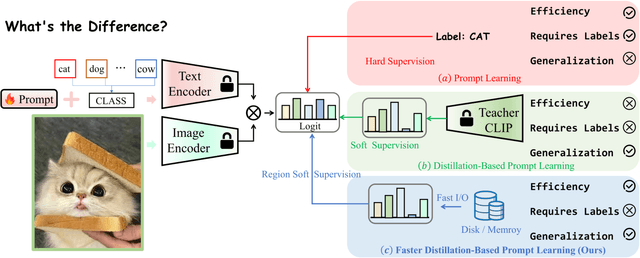

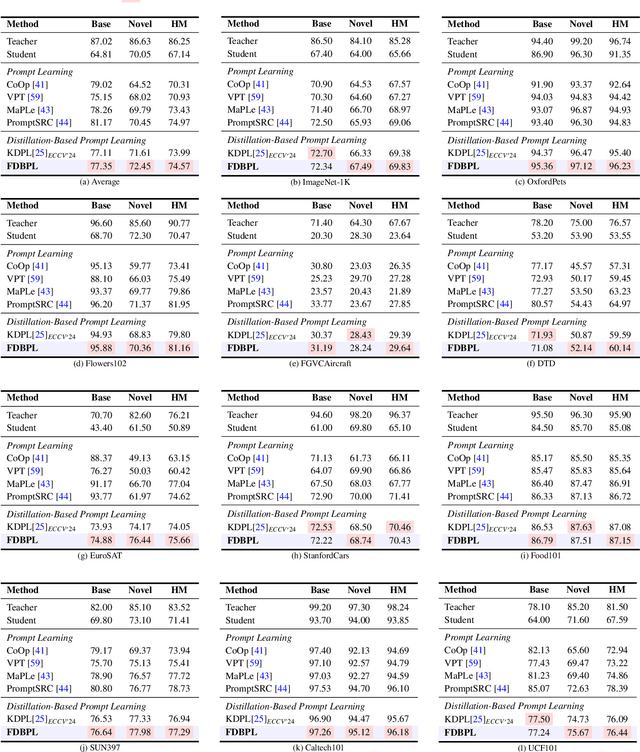

Abstract: Prompt learning is a parameter-efficient method that has been widely adopted to adapt Vision-Language Models (VLMs) to downstream tasks. While hard-prompt design requires domain expertise and iterative optimization, soft-prompt methods rely heavily on task-specific hard labels, limiting their generalization to unseen categories. Recent popular distillation-based prompt learning methods improve generalization by exploiting larger teacher VLMs and unsupervised knowledge transfer, yet their repetitive online inference of the teacher model sacrifices the inherent training efficiency advantage of prompt learning. In this paper, we propose Faster Distillation-Based Prompt Learning (FDBPL), which addresses these issues by sharing soft supervision contexts across multiple training stages and implementing accelerated I/O. Furthermore, FDBPL introduces a region-aware prompt learning paradigm with dual positive-negative prompt spaces to fully exploit randomly cropped regions that contain multi-level information. We propose a positive-negative space mutual learning mechanism based on similarity-difference learning, enabling student CLIP models to recognize correct semantics while learning to reject weakly related concepts, thereby improving zero-shot performance. Unlike existing distillation-based prompt learning methods that sacrifice parameter efficiency for generalization, FDBPL maintains the dual advantages of parameter efficiency and strong downstream generalization. Comprehensive evaluations across 11 datasets demonstrate superior performance in base-to-new generalization, cross-dataset transfer, and robustness tests, achieving $2.2\times$ faster training speed.

Image Recognition with Online Lightweight Vision Transformer: A Survey

May 06, 2025

Abstract: The Transformer architecture has achieved significant success in natural language processing, motivating its adaptation to computer vision tasks. Unlike convolutional neural networks, vision transformers inherently capture long-range dependencies and enable parallel processing, yet they lack inductive biases and efficiency benefits, and they face significant computational and memory challenges that limit their real-world applicability. This paper surveys various online strategies for generating lightweight vision transformers for image recognition, focusing on three key areas: Efficient Component Design, Dynamic Network, and Knowledge Distillation. We evaluate the relevant work on each topic on the ImageNet-1K benchmark, analyzing trade-offs among precision, parameters, throughput, and more to highlight their respective advantages, disadvantages, and flexibility. Finally, we propose future research directions and potential challenges in the lightweighting of vision transformers, with the aim of inspiring further exploration and providing practical guidance for the community. Project Page: https://github.com/ajxklo/Lightweight-VIT

Weakly-supervised Audio Temporal Forgery Localization via Progressive Audio-language Co-learning Network

May 03, 2025

Abstract: Audio temporal forgery localization (ATFL) aims to find the precise forgery regions of partially spoofed audio that has been purposefully modified. Existing ATFL methods rely on training efficient networks using fine-grained annotations, which are costly and challenging to obtain in real-world scenarios. To meet this challenge, we propose a progressive audio-language co-learning network (LOCO) that adopts co-learning and self-supervision to improve localization performance under weak supervision. Specifically, an audio-language co-learning module is first designed to capture forgery consensus features by aligning semantics from temporal and global perspectives. In this module, forgery-aware prompts are constructed by using utterance-level annotations together with learnable prompts, which can dynamically incorporate semantic priors into temporal content features. In addition, a forgery localization module is applied to produce forgery proposals based on fused forgery-class activation sequences. Finally, a progressive refinement strategy is introduced to generate pseudo frame-level labels and leverage supervised semantic contrastive learning to amplify the semantic distinction between real and fake content, thereby continuously optimizing forgery-aware features. Extensive experiments show that the proposed LOCO achieves SOTA performance on three public benchmarks.

Task Consistent Prototype Learning for Incremental Few-shot Semantic Segmentation

Oct 16, 2024

Abstract: Incremental Few-Shot Semantic Segmentation (iFSS) tackles a task that requires a model to continually expand its segmentation capability on novel classes using only a few annotated examples. Typical incremental approaches encounter a challenge that the objective of the base training phase (fitting base classes with sufficient instances) does not align with the incremental learning phase (rapidly adapting to new classes with less forgetting). This disconnect can result in suboptimal performance in the incremental setting. This study introduces a meta-learning-based prototype approach that encourages the model to learn how to adapt quickly while preserving previous knowledge. Concretely, we mimic the incremental evaluation protocol during the base training session by sampling a sequence of pseudo-incremental tasks. Each task in the simulated sequence is trained using a meta-objective to enable rapid adaptation without forgetting. To enhance discrimination among class prototypes, we introduce prototype space redistribution learning, which dynamically updates class prototypes to establish optimal inter-prototype boundaries within the prototype space. Extensive experiments on iFSS datasets built upon PASCAL and COCO benchmarks show the advanced performance of the proposed approach, offering valuable insights for addressing iFSS challenges.

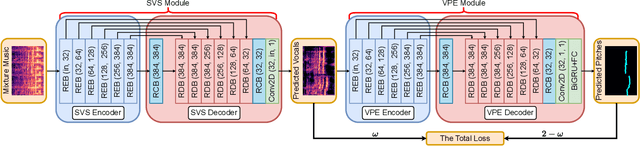

DJCM: A Deep Joint Cascade Model for Singing Voice Separation and Vocal Pitch Estimation

Jan 08, 2024

Abstract: Singing voice separation and vocal pitch estimation are pivotal tasks in music information retrieval. Existing methods for simultaneous extraction of clean vocals and vocal pitches can be classified into two categories: pipeline methods and naive joint learning methods. However, the efficacy of these methods is limited by the following problems. On the one hand, pipeline methods train models for each task independently, resulting in a mismatch between the data distributions at training and testing time. On the other hand, naive joint learning methods simply add the losses of both tasks, possibly leading to a misalignment between the distinct objectives of each task. To solve these problems, we propose a Deep Joint Cascade Model (DJCM) for singing voice separation and vocal pitch estimation. DJCM employs a novel joint cascade model structure to concurrently train both tasks. Moreover, task-specific weights are used to align the different objectives of the two tasks. Experimental results show that DJCM achieves state-of-the-art performance on both tasks, with improvements of 0.45 in terms of Signal-to-Distortion Ratio (SDR) for singing voice separation and 2.86% in terms of Overall Accuracy (OA) for vocal pitch estimation. Furthermore, extensive ablation studies validate the effectiveness of each design of our proposed model. The code of DJCM is available at https://github.com/Dream-High/DJCM.
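To make two ingredients of the abstract above concrete, the sketch below computes a plain signal-to-distortion ratio in decibels and a weighted sum of two task losses. Both are simplified assumptions: the SDR here is the basic energy-ratio definition rather than whatever evaluation toolkit the authors used, and `joint_loss` with its weights `w_sep` and `w_pitch` is a hypothetical stand-in for task-specific weighting, not DJCM's actual formulation.

```python
import math


def sdr_db(reference, estimate):
    """Basic SDR in dB: 10 * log10(||s||^2 / ||s - s_hat||^2)."""
    signal = sum(s * s for s in reference)
    if signal == 0.0:
        raise ValueError("reference signal has zero energy")
    noise = sum((s - e) ** 2 for s, e in zip(reference, estimate))
    if noise == 0.0:
        return math.inf  # perfect reconstruction
    return 10.0 * math.log10(signal / noise)


def joint_loss(sep_loss, pitch_loss, w_sep=1.0, w_pitch=1.0):
    """Weighted sum of the two task losses (the weights are hypothetical)."""
    return w_sep * sep_loss + w_pitch * pitch_loss


# Toy signals (hypothetical): every sample of the estimate is off by 0.1.
clean = [1.0, -1.0, 1.0, -1.0]
separated = [0.9, -1.1, 1.1, -0.9]
print(sdr_db(clean, separated))            # close to 20 dB
print(joint_loss(0.5, 0.25, w_pitch=2.0))  # 0.5 + 2.0 * 0.25
```

With equal weights, `joint_loss` reduces to the naive sum of losses that the abstract criticizes; unequal weights are one simple way to rebalance the two objectives.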

Sparsity-Based Channel Estimation Exploiting Deep Unrolling for Downlink Massive MIMO

Sep 24, 2023

Abstract: Massive multiple-input multiple-output (MIMO) offers great advantages in 5G wireless communication systems owing to its spectrum and energy efficiency. However, hundreds of antennas require a large pilot overhead to guarantee reliable channel estimation in FDD massive MIMO systems. Compressive sensing (CS) has been applied to channel estimation by exploiting the inherent sparse structure of massive MIMO channels, but it suffers from high complexity. To overcome this challenge, this paper develops a hybrid channel estimation scheme that integrates model-driven CS with a data-driven deep unrolling technique. The proposed scheme consists of a coarse estimation part and a fine correction part, which respectively exploit the inter- and intra-frame sparsities of channels to greatly reduce the pilot overhead. A theoretical result is provided on the convergence of the fine correction and coarse estimation net. Simulation results verify that our scheme can estimate MIMO channels with low pilot overhead while guaranteeing estimation accuracy at relatively low complexity.

Masked Cross-image Encoding for Few-shot Segmentation

Aug 22, 2023

Abstract: Few-shot segmentation (FSS) is a dense prediction task that aims to infer the pixel-wise labels of unseen classes using only a limited number of annotated images. The key challenge in FSS is to classify the labels of query pixels using class prototypes learned from the few labeled support exemplars. Prior approaches to FSS have typically focused on learning class-wise descriptors independently from support images, thereby ignoring the rich contextual information and mutual dependencies among support-query features. To address this limitation, we propose a joint learning method termed Masked Cross-Image Encoding (MCE), which is designed to capture common visual properties that describe object details and to learn bidirectional inter-image dependencies that enhance feature interaction. MCE is more than a visual representation enrichment module; it also considers cross-image mutual dependencies and implicit guidance. Experiments on FSS benchmarks PASCAL-$5^i$ and COCO-$20^i$ demonstrate the advanced meta-learning ability of the proposed method.
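The prototype-based setup that the abstract above describes as the starting point for FSS can be sketched in a few lines. One common choice, assumed here, is to take the class prototype as the average of support features under the foreground mask and to label each query pixel by its cosine similarity to that prototype. This illustrates only that conventional baseline, not the MCE module itself; the function names, the threshold, and the toy features are assumptions.

```python
import math


def masked_average_prototype(features, mask):
    """Average the feature vectors of support pixels where mask == 1."""
    selected = [f for f, m in zip(features, mask) if m == 1]
    if not selected:
        raise ValueError("mask selects no foreground pixels")
    dim = len(selected[0])
    return [sum(f[d] for f in selected) / len(selected) for d in range(dim)]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0.0 else 0.0


def segment_query(query_features, prototype, threshold=0.5):
    """Label a query pixel 1 if its similarity to the prototype exceeds the threshold."""
    return [1 if cosine(q, prototype) > threshold else 0 for q in query_features]


# Toy support image flattened to 4 pixels with 2-D features (hypothetical).
support = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [0.1, 0.9]]
support_mask = [1, 1, 0, 0]
proto = masked_average_prototype(support, support_mask)
query = [[0.9, 0.1], [0.0, 1.0]]
print(segment_query(query, proto))
```

Because the prototype is computed from the support image alone, this baseline has exactly the limitation the abstract points out: it ignores dependencies between support and query features.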
