Shuo Feng

TeraSim-World: Worldwide Safety-Critical Data Synthesis for End-to-End Autonomous Driving

Sep 16, 2025

Abstract: Safe and scalable deployment of end-to-end (E2E) autonomous driving requires extensive and diverse data, particularly safety-critical events. Existing data are mostly generated from simulators with a significant sim-to-real gap or collected from on-road testing that is costly and unsafe. This paper presents TeraSim-World, an automated pipeline that synthesizes realistic and geographically diverse safety-critical data for E2E autonomous driving anywhere in the world. Starting from an arbitrary location, TeraSim-World retrieves real-world maps and traffic demand from geospatial data sources. Then, it simulates agent behaviors from naturalistic driving datasets and orchestrates diverse adversities to create corner cases. Informed by street views of the same location, it achieves photorealistic, geographically grounded sensor rendering via the frontier video generation model Cosmos-Drive. By bridging agent and sensor simulations, TeraSim-World provides a scalable and critical data synthesis framework for training and evaluation of E2E autonomous driving systems.

FAF: A Feature-Adaptive Framework for Few-Shot Time Series Forecasting

Jun 24, 2025

Abstract: Multi-task and few-shot time series forecasting tasks are commonly encountered in scenarios such as the launch of new products in different cities. However, traditional time series forecasting methods struggle when historical data are insufficient, because they disregard the generalized and specific features among different tasks. To address these challenges, we propose the Feature-Adaptive Time Series Forecasting Framework (FAF), which consists of three key components: the Generalized Knowledge Module (GKM), the Task-Specific Module (TSM), and the Rank Module (RM). During the training phase, the GKM is updated through a meta-learning mechanism that enables the model to extract generalized features across related tasks. Meanwhile, the TSM is trained to capture diverse local dynamics through multiple functional regions, each of which learns specific features from individual tasks. During the testing phase, the RM dynamically selects the most relevant functional region from the TSM based on input sequence features, which is then combined with the generalized knowledge learned by the GKM to generate accurate forecasts. This design enables FAF to achieve robust and personalized forecasting even with sparse historical observations. We evaluate FAF on five diverse real-world datasets under few-shot time series forecasting settings. Experimental results demonstrate that FAF consistently outperforms baselines that include three categories of time series forecasting methods. In particular, FAF achieves a 41.81% improvement over the best baseline, iTransformer, on the CO₂ emissions dataset.

Machine Unlearning for Robust DNNs: Attribution-Guided Partitioning and Neuron Pruning in Noisy Environments

Jun 13, 2025

Abstract: Deep neural networks (DNNs) have achieved remarkable success across diverse domains, but their performance can be severely degraded by noisy or corrupted training data. Conventional noise mitigation methods often rely on explicit assumptions about noise distributions or require extensive retraining, which can be impractical for large-scale models. Inspired by the principles of machine unlearning, we propose a novel framework that integrates attribution-guided data partitioning, discriminative neuron pruning, and targeted fine-tuning to mitigate the impact of noisy samples. Our approach employs gradient-based attribution to probabilistically distinguish high-quality examples from potentially corrupted ones without imposing restrictive assumptions on the noise. It then applies regression-based sensitivity analysis to identify and prune neurons that are most vulnerable to noise. Finally, the resulting network is fine-tuned on the high-quality data subset to efficiently recover and enhance its generalization performance. This integrated unlearning-inspired framework provides several advantages over conventional noise-robust learning approaches. Notably, it combines data-level unlearning with model-level adaptation, thereby avoiding the need for full model retraining or explicit noise modeling. We evaluate our method on representative tasks (e.g., CIFAR-10 image classification and speech recognition) under various noise levels and observe substantial gains in both accuracy and efficiency. For example, our framework achieves approximately a 10% absolute accuracy improvement over standard retraining on CIFAR-10 with injected label noise, while reducing retraining time by up to 47% in some settings. These results demonstrate the effectiveness and scalability of the proposed approach for achieving robust generalization in noisy environments.

Towards provable probabilistic safety for scalable embodied AI systems

Jun 05, 2025

Abstract: Embodied AI systems, comprising AI models and physical plants, are increasingly prevalent across various applications. Due to the rarity of system failures, ensuring their safety in complex operating environments remains a major challenge, which severely hinders their large-scale deployment in safety-critical domains, such as autonomous vehicles, medical devices, and robotics. While achieving provable deterministic safety (verifying system safety across all possible scenarios) remains theoretically ideal, the rarity and complexity of corner cases make this approach impractical for scalable embodied AI systems. To address this challenge, we introduce provable probabilistic safety, which aims to ensure that the residual risk of large-scale deployment remains below a predefined threshold. Instead of attempting an exhaustive safety proof across all corner cases, this paradigm establishes a probabilistic safety boundary on overall system performance, leveraging statistical methods to enhance feasibility and scalability. A well-defined probabilistic safety boundary enables embodied AI systems to be deployed at scale while allowing for continuous refinement of safety guarantees. Our work focuses on three core questions: what provable probabilistic safety is, how to prove probabilistic safety, and how to achieve provable probabilistic safety. By bridging the gap between theoretical safety assurance and practical deployment, our work offers a pathway toward safer, large-scale adoption of embodied AI systems in safety-critical applications.

Improving Brain-to-Image Reconstruction via Fine-Grained Text Bridging

May 29, 2025

Abstract: Brain-to-Image reconstruction aims to recover visual stimuli perceived by humans from brain activity. However, the reconstructed visual stimuli often lack details and exhibit semantic inconsistencies, which may be attributed to insufficient semantic information. To address this issue, we propose an approach named Fine-grained Brain-to-Image reconstruction (FgB2I), which employs fine-grained text as a bridge to improve image reconstruction. FgB2I comprises three key stages: detail enhancement, decoding fine-grained text descriptions, and text-bridged brain-to-image reconstruction. In the detail-enhancement stage, we leverage large vision-language models to generate fine-grained captions for visual stimuli and experimentally validate their importance. We propose three reward metrics (object accuracy, text-image semantic similarity, and image-image semantic similarity) to guide the language model in decoding fine-grained text descriptions from fMRI signals. The fine-grained text descriptions can be integrated into existing reconstruction methods to achieve fine-grained Brain-to-Image reconstruction.

Behavioral Safety Assessment towards Large-scale Deployment of Autonomous Vehicles

May 22, 2025

Abstract: Autonomous vehicles (AVs) have significantly advanced in real-world deployment in recent years, yet safety continues to be a critical barrier to widespread adoption. Traditional functional safety approaches, which primarily verify the reliability, robustness, and adequacy of AV hardware and software systems from a vehicle-centric perspective, do not sufficiently address the AV's broader interactions and behavioral impact on the surrounding traffic environment. To overcome this limitation, we propose a paradigm shift toward behavioral safety, a comprehensive approach focused on evaluating AV responses and interactions within the traffic environment. To systematically assess behavioral safety, we introduce a third-party AV safety assessment framework comprising two complementary evaluation components: the Driver Licensing Test and the Driving Intelligence Test. The Driver Licensing Test evaluates the AV's reactive behaviors under controlled scenarios, ensuring basic behavioral competency. In contrast, the Driving Intelligence Test assesses the AV's interactive behaviors within naturalistic traffic conditions, quantifying the frequency of safety-critical events to deliver statistically meaningful safety metrics before large-scale deployment. We validated our proposed framework using Autoware.Universe, an open-source Level 4 AV, tested both in simulated environments and on the physical test track at the University of Michigan's Mcity Testing Facility. The results indicate that Autoware.Universe passed 6 out of 14 scenarios and exhibited a crash rate of 3.01e-3 crashes per mile, approximately 1,000 times higher than the average human driver crash rate. During the tests, we also uncovered several unknown unsafe scenarios for Autoware.Universe. These findings underscore the necessity of behavioral safety evaluations for improving AV safety performance prior to widespread public deployment.

Challenger: Affordable Adversarial Driving Video Generation

May 21, 2025

Abstract: Generating photorealistic driving videos has seen significant progress recently, but current methods largely focus on ordinary, non-adversarial scenarios. Meanwhile, efforts to generate adversarial driving scenarios often operate on abstract trajectory or BEV representations, falling short of delivering realistic sensor data that can truly stress-test autonomous driving (AD) systems. In this work, we introduce Challenger, a framework that produces physically plausible yet photorealistic adversarial driving videos. Generating such videos poses a fundamental challenge: it requires jointly optimizing over the space of traffic interactions and high-fidelity sensor observations. Challenger makes this affordable through two techniques: (1) a physics-aware multi-round trajectory refinement process that narrows down candidate adversarial maneuvers, and (2) a tailored trajectory scoring function that encourages realistic yet adversarial behavior while maintaining compatibility with downstream video synthesis. As tested on the nuScenes dataset, Challenger generates a diverse range of aggressive driving scenarios (including cut-ins, sudden lane changes, tailgating, and blind spot intrusions) and renders them into multiview photorealistic videos. Extensive evaluations show that these scenarios significantly increase the collision rate of state-of-the-art end-to-end AD models (UniAD, VAD, SparseDrive, and DiffusionDrive), and importantly, adversarial behaviors discovered for one model often transfer to others.

Correctness Learning: Deductive Verification Guided Learning for Human-AI Collaboration

Mar 10, 2025

Abstract: Despite significant progress in AI and decision-making technologies in safety-critical fields, challenges remain in verifying the correctness of decision output schemes and in verification-result-driven design. We propose correctness learning (CL) to enhance human-AI collaboration by integrating deductive verification methods and insights from historical high-quality schemes. The typical patterns hidden in historical high-quality schemes, such as changes of task priorities in shared resources, provide critical guidance for intelligent agents in learning and decision-making. By utilizing deductive verification methods, we propose pattern-driven correctness learning (PDCL), which formally models and reasons about the adaptive behaviors (the 'correctness pattern') of system agents based on historical high-quality schemes, capturing the logical relationships embedded within these schemes. Using this logical information as guidance, we establish a correctness judgment and feedback mechanism to steer the intelligent decision model toward the 'correctness pattern' reflected in historical high-quality schemes. Extensive experiments across multiple working conditions and core parameters validate the framework's components and demonstrate its effectiveness in improving decision-making and resource optimization.

Analyzable Parameters Dominated Vehicle Platoon Dynamics Modeling and Analysis: A Physics-Encoded Deep Learning Approach

Feb 09, 2025

Abstract: Recently, artificial intelligence (AI)-enabled nonlinear vehicle platoon dynamics modeling has come to play a crucial role in predicting and optimizing the interactions between vehicles. Existing efforts fail to extract and capture vehicle behavior interaction features at the platoon scale. More importantly, maintaining high modeling accuracy without losing physical analyzability remains an open problem. To this end, this paper proposes a novel physics-encoded deep learning network, named PeMTFLN, to model nonlinear vehicle platoon dynamics. Specifically, an analyzable parameters encoded computational graph (APeCG) is designed to guide the platoon to respond to the driving behavior of the lead vehicle while ensuring local stability. In addition, a multi-scale trajectory feature learning network (MTFLN) is constructed to capture platoon following patterns and infer the physical parameters required for APeCG from trajectory data. Human-driven vehicle trajectory datasets (HIGHSIM) were used to train the proposed PeMTFLN. The trajectory prediction experiments show that PeMTFLN outperforms the baseline models in predictive accuracy for speed and gap. The stability analysis shows that the physical parameters in APeCG are able to reproduce platoon stability under real-world conditions. In simulation experiments, PeMTFLN achieves low inference error in platoon trajectory generation. Moreover, PeMTFLN accurately reproduces ground-truth safety statistics. The code for the proposed PeMTFLN is open source.

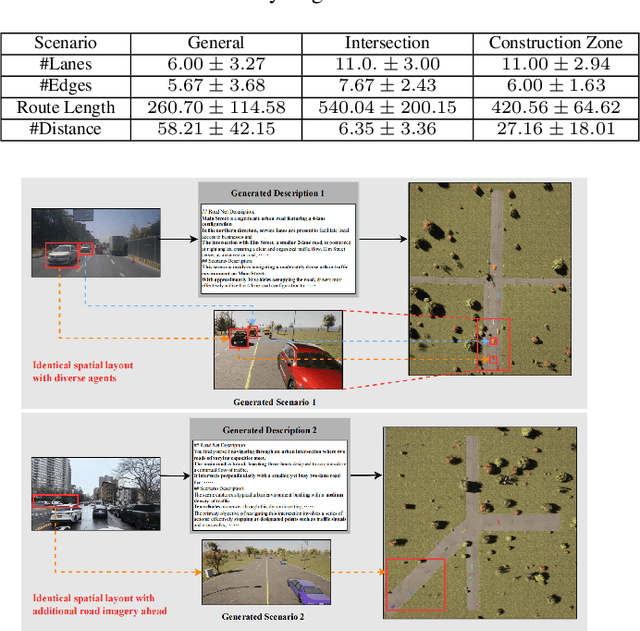

Realistic Corner Case Generation for Autonomous Vehicles with Multimodal Large Language Model

Nov 29, 2024

Abstract: To guarantee the safety and reliability of autonomous vehicle (AV) systems, corner cases play a crucial role in exploring the system's behavior under rare and challenging conditions within simulation environments. However, current approaches often fall short in meeting diverse testing needs and struggle to generalize to novel, high-risk scenarios that closely mirror real-world conditions. To tackle this challenge, we present AutoScenario, a multimodal Large Language Model (LLM)-based framework for realistic corner case generation. It converts safety-critical real-world data from multiple sources into textual representations, enabling the generalization of key risk factors while leveraging the extensive world knowledge and advanced reasoning capabilities of LLMs. Furthermore, it integrates tools from the Simulation of Urban Mobility (SUMO) and CARLA simulators to simplify and execute the code generated by LLMs. Our experiments demonstrate that AutoScenario can generate realistic and challenging test scenarios, precisely tailored to specific testing requirements or textual descriptions. Additionally, we validated its ability to produce diverse and novel scenarios derived from multimodal real-world data involving risky situations, harnessing the powerful generalization capabilities of LLMs to effectively simulate a wide range of corner cases.
