Ruocheng Guo

ToolPRMBench: Evaluating and Advancing Process Reward Models for Tool-using Agents

Jan 18, 2026

Abstract: Reward-guided search methods have demonstrated strong potential in enhancing tool-using agents by effectively guiding sampling and exploration over complex action spaces. As a core design, these search methods use process reward models (PRMs) to provide step-level rewards, enabling more fine-grained monitoring. However, there is a lack of systematic and reliable evaluation benchmarks for PRMs in tool-using settings. In this paper, we introduce ToolPRMBench, a large-scale benchmark specifically designed to evaluate PRMs for tool-using agents. ToolPRMBench is built on top of several representative tool-using benchmarks and converts agent trajectories into step-level test cases. Each case contains the interaction history, a correct action, a plausible but incorrect alternative, and relevant tool metadata. We use offline sampling to isolate local single-step errors and online sampling to capture realistic multi-step failures from full agent rollouts. A multi-LLM verification pipeline is proposed to reduce label noise and ensure data quality. We conduct extensive experiments across large language models, general PRMs, and tool-specialized PRMs on ToolPRMBench. The results reveal clear differences in PRM effectiveness and highlight the potential of specialized PRMs for tool use. Code and data will be released at https://github.com/David-Li0406/ToolPRMBench.
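As a rough illustration of the test-case layout described in the abstract, the sketch below stores the four listed components in a record and scores a PRM by how often it ranks the correct action above the incorrect one. The field names, the `toy_score` function, and the pairwise-accuracy metric are assumptions made for illustration; the abstract does not specify the benchmark's schema or its evaluation metric.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StepCase:
    """One step-level test case (field names are hypothetical)."""
    history: list[str]
    correct_action: str
    incorrect_action: str
    tool_metadata: dict = field(default_factory=dict)


# A scorer maps (history, candidate action, tool metadata) to a step reward.
Scorer = Callable[[list[str], str, dict], float]


def pairwise_accuracy(cases: list[StepCase], score: Scorer) -> float:
    """Fraction of cases where the correct action gets a strictly higher score."""
    if not cases:
        return 0.0
    wins = sum(
        score(c.history, c.correct_action, c.tool_metadata)
        > score(c.history, c.incorrect_action, c.tool_metadata)
        for c in cases
    )
    return wins / len(cases)


def toy_score(history: list[str], action: str, tools: dict) -> float:
    """Toy stand-in for a PRM: reward calls to a tool that actually exists."""
    tool_name = action.split("(", 1)[0]
    return 1.0 if tool_name in tools else 0.0


cases = [
    StepCase(
        history=["user: what is the weather in Paris?"],
        correct_action="get_weather(city='Paris')",
        incorrect_action="get_wether(city='Paris')",  # misspelled tool name
        tool_metadata={"get_weather": "returns a forecast"},
    ),
    StepCase(
        history=["user: find me a sushi place"],
        correct_action="search_restaurants(q='sushi')",
        incorrect_action="get_weather(city='Tokyo')",  # valid tool, wrong intent
        tool_metadata={"get_weather": "forecast", "search_restaurants": "search"},
    ),
]

accuracy = pairwise_accuracy(cases, toy_score)
print(accuracy)
```

The toy scorer catches the misspelled tool but cannot tell that a valid tool is the wrong one for the request, which is the kind of gap a step-level benchmark is meant to expose.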

RIMRULE: Improving Tool-Using Language Agents via MDL-Guided Rule Learning

Jan 05, 2026

Abstract: Large language models (LLMs) often struggle to use tools reliably in domain-specific settings, where APIs may be idiosyncratic, under-documented, or tailored to private workflows. This highlights the need for effective adaptation to task-specific tools. We propose RIMRULE, a neuro-symbolic approach for LLM adaptation based on dynamic rule injection. Compact, interpretable rules are distilled from failure traces and injected into the prompt during inference to improve task performance. These rules are proposed by the LLM itself and consolidated using a Minimum Description Length (MDL) objective that favors generality and conciseness. Each rule is stored in both natural language and a structured symbolic form, supporting efficient retrieval at inference time. Experiments on tool-use benchmarks show that this approach improves accuracy on both seen and unseen tools without modifying LLM weights. It outperforms prompting-based adaptation methods and complements finetuning. Moreover, rules learned from one LLM can be reused to improve others, including long reasoning LLMs, highlighting the portability of symbolic knowledge across architectures.
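To make the idea of an MDL objective that favors generality and conciseness concrete, here is a minimal sketch of greedy rule consolidation. It is not the RIMRULE procedure: the cost function (rule length in words plus a fixed penalty per unexplained failure), the greedy search, and the example rules are all assumptions chosen for illustration.

```python
def description_length(selected, rules, failures, miss_cost):
    """Two-part code length: cost of the rules plus cost of uncovered failures."""
    covered = set().union(*(rules[r] for r in selected))
    model_cost = sum(len(r.split()) for r in selected)  # shorter rules are cheaper
    data_cost = miss_cost * len(failures - covered)      # unexplained failures are costly
    return model_cost + data_cost


def consolidate(rules, failures, miss_cost=10):
    """Greedily add the rule that lowers the description length the most."""
    selected = []
    best = description_length(selected, rules, failures, miss_cost)
    while True:
        scored = [
            (description_length(selected + [r], rules, failures, miss_cost), r)
            for r in rules
            if r not in selected
        ]
        if not scored:
            break
        length, rule = min(scored)
        if length >= best:  # no remaining rule pays for its own length
            break
        selected.append(rule)
        best = length
    return selected, best


# Hypothetical candidate rules mapped to the failure-trace ids they would fix.
rules = {
    "always pass dates as ISO-8601 strings": {1, 2, 3},
    "pass the start date as an ISO-8601 string when calling the booking tool": {1},
    "include the user id": {4},
}
failures = {1, 2, 3, 4}

selected, total = consolidate(rules, failures)
print(selected, total)
```

In this toy run the long, narrow booking-tool rule is dropped because the shorter, more general date rule already covers its failure, which is the behavior an MDL-style objective is meant to encourage.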

Renormalization Group Guided Tensor Network Structure Search

Dec 31, 2025

Abstract: Tensor network structure search (TN-SS) aims to automatically discover optimal network topologies and rank configurations for efficient tensor decomposition in high-dimensional data representation. Despite recent advances, existing TN-SS methods face significant limitations in computational tractability, structure adaptivity, and optimization robustness across diverse tensor characteristics. They struggle with three key challenges: single-scale optimization missing multi-scale structures, discrete search spaces hindering smooth structure evolution, and separated structure-parameter optimization causing computational inefficiency. We propose RGTN (Renormalization Group guided Tensor Network search), a physics-inspired framework transforming TN-SS via multi-scale renormalization group flows. Unlike fixed-scale discrete search methods, RGTN uses dynamic scale-transformation for continuous structure evolution across resolutions. Its core innovation includes learnable edge gates for optimization-stage topology modification and intelligent proposals based on physical quantities like node tension measuring local stress and edge information flow quantifying connectivity importance. Starting from low-complexity coarse scales and refining to finer ones, RGTN finds compact structures while escaping local minima via scale-induced perturbations. Extensive experiments on light field data, high-order synthetic tensors, and video completion tasks show RGTN achieves state-of-the-art compression ratios and runs 4-600$\times$ faster than existing methods, validating the effectiveness of our physics-inspired approach.

Node-Level Uncertainty Estimation in LLM-Generated SQL

Nov 17, 2025

Abstract: We present a practical framework for detecting errors in LLM-generated SQL by estimating uncertainty at the level of individual nodes in the query's abstract syntax tree (AST). Our approach proceeds in two stages. First, we introduce a semantically aware labeling algorithm that, given a generated SQL query and a gold reference, assigns node-level correctness without over-penalizing structural containers or alias variation. Second, we represent each node with a rich set of schema-aware and lexical features (capturing identifier validity, alias resolution, type compatibility, ambiguity in scope, and typo signals) and train a supervised classifier to predict per-node error probabilities. We interpret these probabilities as calibrated uncertainty, enabling fine-grained diagnostics that pinpoint exactly where a query is likely to be wrong. Across multiple databases and datasets, our method substantially outperforms token log-probabilities: average AUC improves by +27.44% while maintaining robustness under cross-database evaluation. Beyond serving as an accuracy signal, node-level uncertainty supports targeted repair, human-in-the-loop review, and downstream selective execution. Together, these results establish node-centric, semantically grounded uncertainty estimation as a strong and interpretable alternative to aggregate sequence-level confidence measures.

STAR-Rec: Making Peace with Length Variance and Pattern Diversity in Sequential Recommendation

May 06, 2025

Abstract: Recent deep sequential recommendation models often struggle to effectively model key characteristics of user behaviors, particularly in handling sequence length variations and capturing diverse interaction patterns. We propose STAR-Rec, a novel architecture that synergistically combines preference-aware attention and state-space modeling through a sequence-level mixture-of-experts framework. STAR-Rec addresses these challenges by: (1) employing preference-aware attention to capture both inherently similar item relationships and diverse preferences, (2) utilizing state-space modeling to efficiently process variable-length sequences with linear complexity, and (3) incorporating a mixture-of-experts component that adaptively routes different behavioral patterns to specialized experts, handling both focused category-specific browsing and diverse category exploration patterns. We theoretically demonstrate how the state space model and attention mechanisms can be naturally unified in recommendation scenarios, where SSM captures temporal dynamics through state compression while attention models both similar and diverse item relationships. Extensive experiments on four real-world datasets demonstrate that STAR-Rec consistently outperforms state-of-the-art sequential recommendation methods, particularly in scenarios involving diverse user behaviors and varying sequence lengths.

Identification and Estimation of Long-Term Treatment Effects with Monotone Missing

Apr 28, 2025

Abstract: Estimating long-term treatment effects has a wide range of applications in various domains. A key feature in this context is that collecting long-term outcomes typically involves a multi-stage process and is subject to monotone missing, where individuals missing at an earlier stage remain missing at subsequent stages. Despite its prevalence, monotone missing has rarely been explored in previous studies on estimating long-term treatment effects. In this paper, we address this gap by introducing the sequential missingness assumption for identification. We propose three novel estimation methods: inverse probability weighting, sequential regression imputation, and the sequential marginal structural model (SeqMSM). Considering that the SeqMSM method may suffer from high variance due to severe data sparsity caused by monotone missing, we further propose a novel balancing-enhanced approach, BalanceNet, to improve the stability and accuracy of the estimation methods. Extensive experiments on two widely used benchmark datasets demonstrate the effectiveness of our proposed methods.
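The inverse probability weighting idea mentioned in the abstract can be sketched as follows. Under monotone missing, a unit's long-term outcome is seen only if it stays observed through every stage, so its weight is the inverse of the product of its stage-wise observation probabilities. This is a simplified illustration rather than the paper's estimator: it assumes randomized treatment, treats the stage-wise probabilities as known (in practice they would be estimated), and uses a normalized (Hajek) weighted mean.

```python
import math


def ipw_mean(units):
    """Normalized IPW mean of the long-term outcome for one treatment arm.

    Each unit is (stage_probs, outcome), where stage_probs[k] is the assumed
    probability of being observed at stage k given observation at stage k-1,
    and outcome is None when the unit dropped out at some stage.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for stage_probs, outcome in units:
        if outcome is None:
            continue  # dropped out: contributes only through others' weights
        weight = 1.0 / math.prod(stage_probs)
        weighted_sum += weight * outcome
        weight_total += weight
    return weighted_sum / weight_total


# Toy data: two follow-up stages per unit.
treated = [([1.0, 0.5], 4.0), ([1.0, 0.5], None), ([1.0, 1.0], 2.0)]
control = [([0.5, 1.0], 1.0), ([0.5, 1.0], None), ([1.0, 1.0], 3.0)]

effect = ipw_mean(treated) - ipw_mean(control)
print(effect)
```

Units that were unlikely to remain observed receive larger weights, so they stand in for similar units whose outcomes were lost at an earlier stage.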

Learning Counterfactual Outcomes Under Rank Preservation

Feb 10, 2025

Abstract: Counterfactual inference aims to estimate the counterfactual outcome at the individual level given knowledge of an observed treatment and the factual outcome, with broad applications in fields such as epidemiology, econometrics, and management science. Previous methods rely on a known structural causal model (SCM) or assume the homogeneity of the exogenous variable and strict monotonicity between the outcome and exogenous variable. In this paper, we propose a principled approach for identifying and estimating the counterfactual outcome. We first introduce a simple and intuitive rank preservation assumption to identify the counterfactual outcome without relying on a known structural causal model. Building on this, we propose a novel ideal loss for theoretically unbiased learning of the counterfactual outcome and further develop a kernel-based estimator for its empirical estimation. Our theoretical analysis shows that the rank preservation assumption is not stronger than the homogeneity and strict monotonicity assumptions, that the proposed ideal loss is convex, and that the proposed estimator is unbiased. Extensive semi-synthetic and real-world experiments are conducted to demonstrate the effectiveness of the proposed method.

Optimal Policy Adaptation under Covariate Shift

Jan 14, 2025

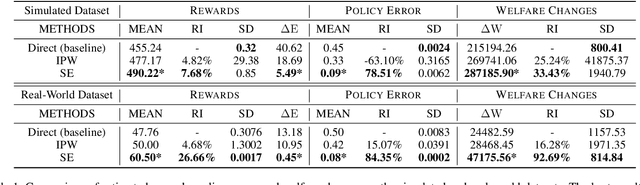

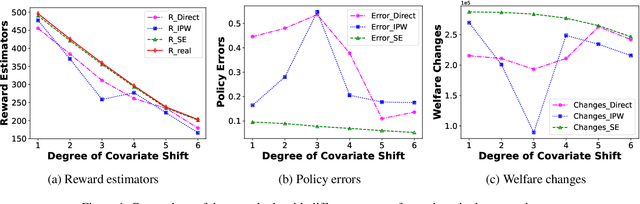

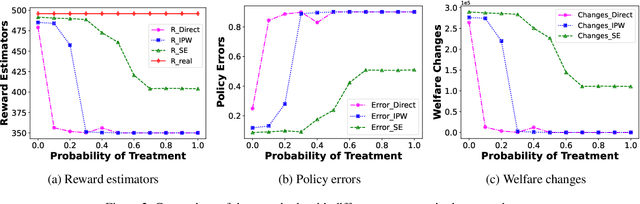

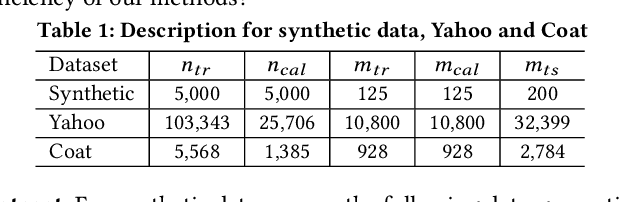

Abstract: Transfer learning of prediction models has been extensively studied, while the corresponding policy learning approaches are rarely discussed. In this paper, we propose principled approaches for learning the optimal policy in the target domain by leveraging two datasets: one with full information from the source domain and the other from the target domain with only covariates. First, under the setting of covariate shift, we formulate the problem from a causal perspective and present the identifiability assumptions for the reward induced by a given policy. Then, we derive the efficient influence function and the semiparametric efficiency bound for the reward. Based on this, we construct a doubly robust and semiparametric efficient estimator for the reward and then learn the optimal policy by optimizing the estimated reward. Moreover, we theoretically analyze the bias and the generalization error bound for the learned policy. Furthermore, in the presence of both covariate and concept shifts, we propose a novel sensitivity analysis method to evaluate the robustness of the proposed policy learning approach. Extensive experiments demonstrate that the approach not only estimates the reward more accurately but also yields a policy that closely approximates the theoretically optimal policy.

Stepwise Reasoning Error Disruption Attack of LLMs

Dec 16, 2024

Abstract: Large language models (LLMs) have made remarkable strides in complex reasoning tasks, but their safety and robustness in reasoning processes remain underexplored. Existing attacks on LLM reasoning are constrained by specific settings or lack of imperceptibility, limiting their feasibility and generalizability. To address these challenges, we propose the Stepwise rEasoning Error Disruption (SEED) attack, which subtly injects errors into prior reasoning steps to mislead the model into producing incorrect subsequent reasoning and final answers. Unlike previous methods, SEED is compatible with zero-shot and few-shot settings, maintains the natural reasoning flow, and ensures covert execution without modifying the instruction. Extensive experiments on four datasets across four different models demonstrate SEED's effectiveness, revealing the vulnerabilities of LLMs to disruptions in reasoning processes. These findings underscore the need for greater attention to the robustness of LLM reasoning to ensure safety in practical applications.

Conformal Counterfactual Inference under Hidden Confounding

May 20, 2024

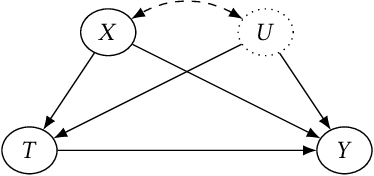

Abstract: Personalized decision making requires the knowledge of potential outcomes under different treatments, and confidence intervals about the potential outcomes further enrich this decision-making process and improve its reliability in high-stakes scenarios. Predicting potential outcomes along with their uncertainty in a counterfactual world poses the fundamental challenge in causal inference. Existing methods that construct confidence intervals for counterfactuals either rely on the assumption of strong ignorability, or need access to un-identifiable lower and upper bounds that characterize the difference between observational and interventional distributions. To overcome these limitations, we first propose a novel approach wTCP-DR based on transductive weighted conformal prediction, which provides confidence intervals for counterfactual outcomes with marginal coverage guarantees, even under hidden confounding. With less restrictive assumptions, our approach requires access to a fraction of interventional data (from randomized controlled trials) to account for the covariate shift from the observational distribution to the interventional distribution. Theoretical results explicitly demonstrate the conditions under which our algorithm is strictly advantageous over the naive method that only uses interventional data. After ensuring valid intervals on counterfactuals, it is straightforward to construct intervals for individual treatment effects (ITEs). We demonstrate our method across synthetic and real-world data, including recommendation systems, to verify the superiority of our methods compared against state-of-the-art baselines in terms of both coverage and efficiency.
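For readers unfamiliar with weighted conformal prediction, the sketch below shows the generic weighted quantile step used to correct for covariate shift. It is the standard split (inductive) variant, not the transductive wTCP-DR procedure from the paper, and the scores and weights are made-up numbers; in practice the weights would be estimated density ratios between the two distributions.

```python
import math


def weighted_conformal_quantile(scores, weights, test_weight, alpha):
    """Smallest score whose normalized cumulative weight reaches 1 - alpha.

    scores:      nonconformity scores on calibration data, e.g. |y - prediction|
    weights:     assumed density-ratio weights for the calibration points
    test_weight: weight of the test point, whose mass sits at +infinity
    """
    total = sum(weights) + test_weight
    cumulative = 0.0
    for score, weight in sorted(zip(scores, weights)):
        cumulative += weight / total
        if cumulative >= 1 - alpha:
            return score
    return math.inf  # calibration mass is too small for the requested level


scores = [0.1, 0.4, 0.2, 0.9]
weights = [1.0, 1.0, 2.0, 1.0]

radius = weighted_conformal_quantile(scores, weights, test_weight=1.0, alpha=0.2)
prediction = 3.0  # hypothetical point prediction for a test unit
interval = (prediction - radius, prediction + radius)
print(radius, interval)
```

When the calibration points carry too little weight relative to the test point, the function returns an infinite radius, reflecting that no finite interval can be certified at that level.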
