Xinjian Zhao

HT-GNN: Hyper-Temporal Graph Neural Network for Customer Lifetime Value Prediction in Baidu Ads

Jan 19, 2026

Abstract:Lifetime value (LTV) prediction is crucial for news feed advertising, enabling platforms to optimize bidding and budget allocation for long-term revenue growth. However, it faces two major challenges: (1) demographic-based targeting creates segment-specific LTV distributions with large value variations across user groups; and (2) dynamic marketing strategies generate irregular behavioral sequences where engagement patterns evolve rapidly. We propose a Hyper-Temporal Graph Neural Network (HT-GNN), which jointly models demographic heterogeneity and temporal dynamics through three key components: (i) a hypergraph-supervised module capturing inter-segment relationships; (ii) a transformer-based temporal encoder with adaptive weighting; and (iii) a task-adaptive mixture-of-experts with dynamic prediction towers for multi-horizon LTV forecasting. Experiments on Baidu Ads with 15 million users demonstrate that HT-GNN consistently outperforms state-of-the-art methods across all metrics and prediction horizons.

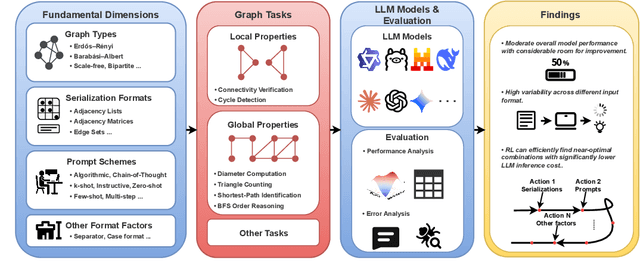

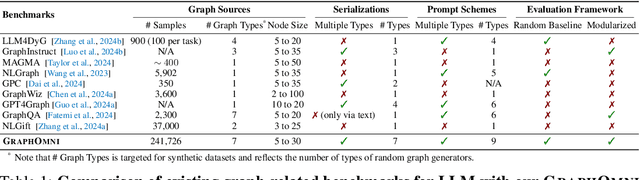

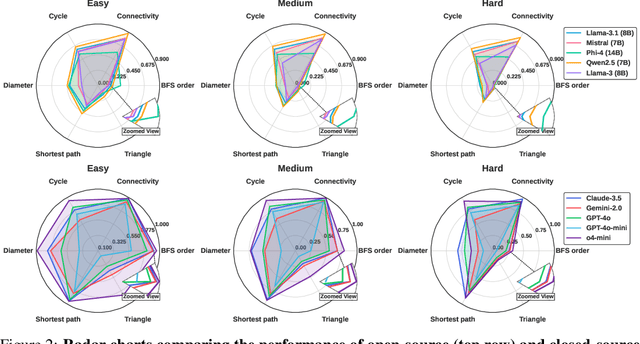

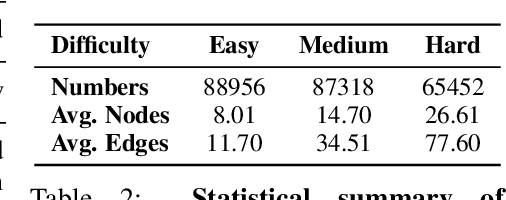

GraphOmni: A Comprehensive and Extendable Benchmark Framework for Large Language Models on Graph-theoretic Tasks

Apr 17, 2025

Abstract:In this paper, we present GraphOmni, a comprehensive benchmark framework for systematically evaluating the graph reasoning capabilities of LLMs. By analyzing critical dimensions, including graph types, serialization formats, and prompt schemes, we provide extensive insights into the strengths and limitations of current LLMs. Our empirical findings show that no single serialization or prompting strategy consistently outperforms the others. Motivated by these insights, we propose a reinforcement learning-based approach that dynamically selects the best serialization-prompt pairings, resulting in significant accuracy improvements. GraphOmni's modular and extensible design establishes a robust foundation for future research, facilitating advancements toward general-purpose graph reasoning models.

Enhancing Graph Self-Supervised Learning with Graph Interplay

Oct 08, 2024

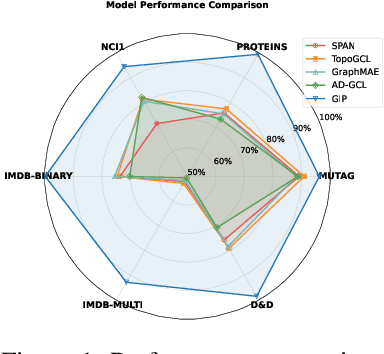

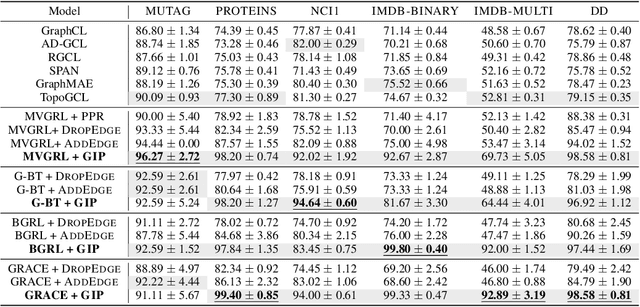

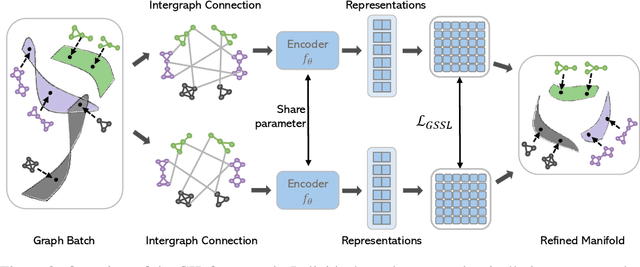

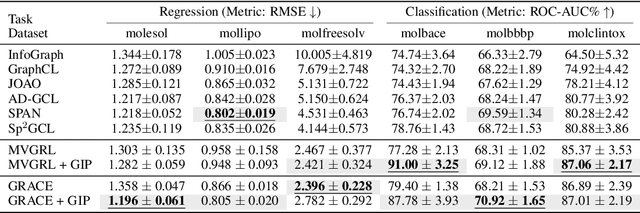

Abstract:Graph self-supervised learning (GSSL) has emerged as a compelling framework for extracting informative representations from graph-structured data without extensive reliance on labeled inputs. In this study, we introduce Graph Interplay (GIP), an innovative and versatile approach that significantly enhances the performance of various existing GSSL methods. To this end, GIP advocates direct graph-level communications by introducing random inter-graph edges within standard batches. Despite GIP's simplicity, we further show theoretically that GIP essentially performs a principled manifold separation by combining inter-graph message passing and GSSL, bringing about more structured embedding manifolds and thus benefiting a series of downstream tasks. Our empirical study demonstrates that GIP surpasses the performance of prevailing GSSL methods across multiple benchmarks by significant margins, highlighting its potential as a breakthrough approach. Moreover, GIP can be readily integrated into a range of GSSL methods and consistently offers additional performance gains. This advancement not only amplifies the capability of GSSL but also potentially sets the stage for a novel graph learning paradigm in a broader sense.
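
The idea of "random inter-graph edges within standard batches" can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function name `add_inter_graph_edges`, the `(num_nodes, edges)` graph representation, the uniform sampling scheme, and the `num_inter_edges` parameter are all assumptions made for illustration.

```python
import random
from typing import List, Tuple

Edge = Tuple[int, int]
Graph = Tuple[int, List[Edge]]  # (number of nodes, edges with local node ids)


def add_inter_graph_edges(
    graphs: List[Graph], num_inter_edges: int, rng: random.Random
) -> Tuple[int, List[Edge], List[int]]:
    """Merge a batch of graphs into one graph and add random cross-graph edges.

    Returns (total node count, merged edge list, graph id of each node).
    Sampling uniformly over graph pairs is an illustrative assumption.
    """
    merged: List[Edge] = []
    graph_of: List[int] = []
    starts: List[int] = []
    offset = 0
    for gid, (n, edges) in enumerate(graphs):
        starts.append(offset)
        # Shift local node ids so every graph owns a disjoint id range.
        merged.extend((u + offset, v + offset) for u, v in edges)
        graph_of.extend([gid] * n)
        offset += n

    nonempty = [g for g, (n, _) in enumerate(graphs) if n > 0]
    inter = set()
    attempts = 0
    # Bounded rejection sampling: stop once enough distinct edges are found.
    while (
        len(nonempty) >= 2
        and len(inter) < num_inter_edges
        and attempts < 100 * num_inter_edges
    ):
        attempts += 1
        ga, gb = rng.sample(nonempty, 2)  # two different graphs
        u = starts[ga] + rng.randrange(graphs[ga][0])
        v = starts[gb] + rng.randrange(graphs[gb][0])
        inter.add((min(u, v), max(u, v)))

    return offset, merged + sorted(inter), graph_of
```

In this sketch the original intra-graph edges come first in the returned list and the sampled inter-graph edges are appended after them, so a downstream message-passing layer would see the batch as a single connected-by-chance graph.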

Do spectral cues matter in contrast-based graph self-supervised learning?

May 30, 2024

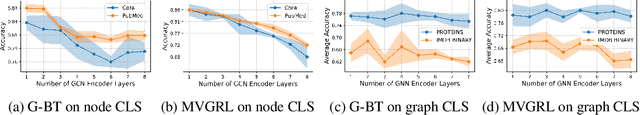

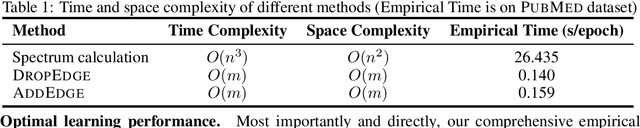

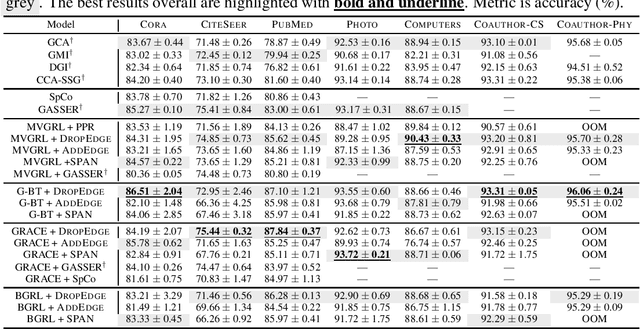

Abstract:The recent surge in contrast-based graph self-supervised learning has prominently featured an intensified exploration of spectral cues. However, an intriguing paradox emerges, as methods grounded in seemingly conflicting assumptions or heuristic approaches regarding the spectral domain demonstrate notable enhancements in learning performance. This paradox prompts a critical inquiry into the genuine contribution of spectral information to contrast-based graph self-supervised learning. This study undertakes an extensive investigation into this inquiry, conducting a thorough study of the relationship between spectral characteristics and the learning outcomes of contemporary methodologies. Based on this analysis, we claim that the effectiveness and significance of spectral information need to be questioned. Instead, we revisit simple edge perturbation: random edge dropping designed for node-level self-supervised learning and random edge adding intended for graph-level self-supervised learning. Compelling evidence is presented that these simple yet effective strategies consistently yield superior performance while demanding significantly fewer computational resources compared to all prior spectral augmentation methods. The proposed insights represent a significant leap forward in the field, potentially reshaping the understanding and implementation of graph self-supervised learning.
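
The two edge perturbations named in the abstract, random edge dropping and random edge adding, can be sketched in a few lines of Python. This is a hedged illustration only: the function names, the per-edge drop probability `drop_prob`, and the fixed count `num_new` are assumptions, not the settings used in the paper.

```python
import random
from typing import List, Tuple

Edge = Tuple[int, int]


def drop_edges(edges: List[Edge], drop_prob: float, rng: random.Random) -> List[Edge]:
    """Keep each edge independently with probability 1 - drop_prob."""
    return [e for e in edges if rng.random() >= drop_prob]


def add_edges(
    edges: List[Edge], num_nodes: int, num_new: int, rng: random.Random
) -> List[Edge]:
    """Add up to num_new undirected edges between currently unconnected node pairs."""
    existing = {tuple(sorted(e)) for e in edges}
    # Enumerate all missing pairs (u < v); fine for small graphs, O(n^2) in general.
    candidates = [
        (u, v)
        for u in range(num_nodes)
        for v in range(u + 1, num_nodes)
        if (u, v) not in existing
    ]
    new = rng.sample(candidates, min(num_new, len(candidates)))
    return list(edges) + new
```

Following the abstract, `drop_edges` would produce augmented views for node-level objectives and `add_edges` for graph-level objectives; both run in time linear or quadratic in graph size and need no eigendecomposition, which is the computational contrast the abstract draws with spectral augmentation.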

Boosting Protein Language Models with Negative Sample Mining

May 28, 2024

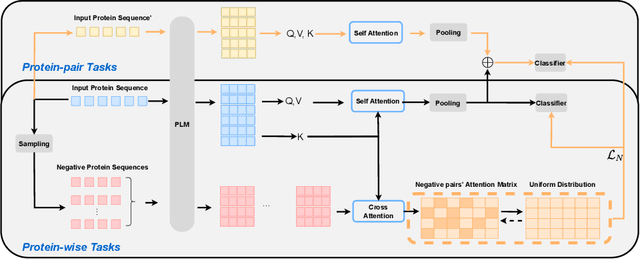

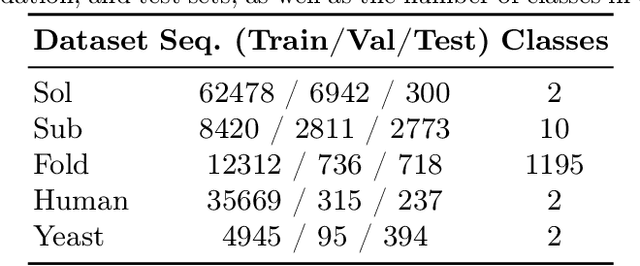

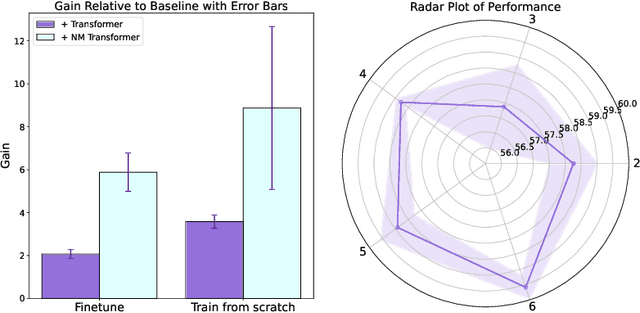

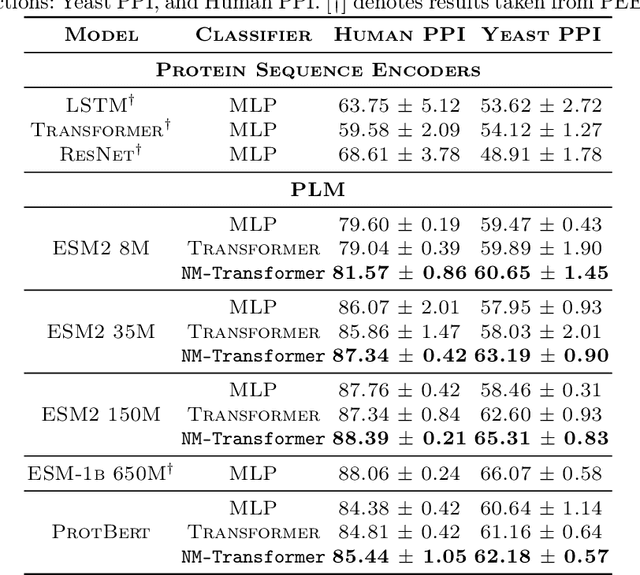

Abstract:We introduce a pioneering methodology for boosting large language models in the domain of protein representation learning. Our primary contribution lies in the refinement process for correlating the over-reliance on co-evolution knowledge, in a way that networks are trained to distill invaluable insights from negative samples, constituted by protein pairs sourced from disparate categories. By capitalizing on this novel approach, our technique steers the training of transformer-based models within the attention score space. This advanced strategy not only amplifies performance but also reflects the nuanced biological behaviors exhibited by proteins, offering aligned evidence with traditional biological mechanisms such as protein-protein interaction. We experimentally observed improved performance on various tasks over datasets, on top of several well-established large protein models. This innovative paradigm opens up promising horizons for further progress in the realms of protein research and computational biology.

Graph Learning with Distributional Edge Layouts

Feb 26, 2024

Abstract:Graph Neural Networks (GNNs) learn from graph-structured data by passing local messages between neighboring nodes along edges on certain topological layouts. Typically, these topological layouts in modern GNNs are deterministically computed (e.g., attention-based GNNs) or locally sampled (e.g., GraphSage) under heuristic assumptions. In this paper, we posit for the first time that these layouts can be globally sampled via Langevin dynamics following a Boltzmann distribution equipped with explicit physical energy, leading to higher feasibility in the physical world. We argue that such a collection of sampled/optimized layouts can capture the wide energy distribution and bring extra expressivity beyond the WL test, therefore easing downstream tasks. As such, we propose Distributional Edge Layouts (DELs) to serve as a complement to a variety of GNNs. DEL is a pre-processing strategy independent of subsequent GNN variants, and is thus highly flexible. Experimental results demonstrate that DELs consistently and substantially improve a series of GNN baselines, achieving state-of-the-art performance on multiple datasets.

Boosting Graph Pooling with Persistent Homology

Feb 26, 2024

Abstract:Recently, there has been an emerging trend to integrate persistent homology (PH) into graph neural networks (GNNs) to enrich expressive power. However, naively plugging PH features into GNN layers always results in marginal improvement with low interpretability. In this paper, we investigate a novel mechanism for injecting global topological invariance into pooling layers using PH, motivated by the observation that the filtration operation in PH naturally aligns with graph pooling in a cut-off manner. In this fashion, message passing in the coarsened graph acts along persistent pooled topology, leading to improved performance. Experimentally, we apply our mechanism to a collection of graph pooling methods and observe consistent and substantial performance gains on several popular datasets, demonstrating its wide applicability and flexibility.

Adversarial Curriculum Graph Contrastive Learning with Pair-wise Augmentation

Feb 16, 2024

Abstract:Graph contrastive learning (GCL) has emerged as a pivotal technique in the domain of graph representation learning. A crucial aspect of effective GCL is the caliber of generated positive and negative samples, which is intrinsically dictated by their resemblance to the original data. Nevertheless, precise control over similarity during sample generation presents a formidable challenge, often impeding the effective discovery of representative graph patterns. To address this challenge, we propose an innovative framework: Adversarial Curriculum Graph Contrastive Learning (ACGCL), which capitalizes on the merits of pair-wise augmentation to engender graph-level positive and negative samples with controllable similarity, alongside subgraph contrastive learning to discern effective graph patterns therein. Within the ACGCL framework, we have devised a novel adversarial curriculum training methodology that facilitates progressive learning by sequentially increasing the difficulty of distinguishing the generated samples. Notably, this approach transcends the prevalent sparsity issue inherent in conventional curriculum learning strategies by adaptively concentrating on more challenging training data. Finally, a comprehensive assessment of ACGCL is conducted through extensive experiments on six well-known benchmark datasets, wherein ACGCL conspicuously surpasses a set of state-of-the-art baselines.

Embedding in Recommender Systems: A Survey

Oct 28, 2023

Abstract:Recommender systems have become an essential component of many online platforms, providing personalized recommendations to users. A crucial aspect is embedding techniques that convert high-dimensional discrete features, such as user and item IDs, into low-dimensional continuous vectors and can thereby enhance recommendation performance. Applying embedding techniques captures complex entity relationships and has spurred substantial research. In this survey, we provide an overview of the recent literature on embedding techniques in recommender systems. This survey covers embedding methods like collaborative filtering, self-supervised learning, and graph-based techniques. Collaborative filtering generates embeddings capturing user-item preferences, excelling in sparse data. Self-supervised methods leverage contrastive or generative learning for various tasks. Graph-based techniques like node2vec exploit complex relationships in network-rich environments. Addressing the scalability challenges inherent to embedding methods, our survey delves into innovative directions within the field of recommendation systems. These directions aim to enhance performance and reduce computational complexity, paving the way for improved recommender systems. Among these innovative approaches, we introduce Automated Machine Learning (AutoML), hash techniques, and quantization techniques in this survey. We discuss various architectures and techniques and highlight the challenges and future directions in these aspects. This survey aims to provide a comprehensive overview of the state-of-the-art in this rapidly evolving field and serve as a useful resource for researchers and practitioners working in the area of recommender systems.
