Li Jin

ES-Mem: Event Segmentation-Based Memory for Long-Term Dialogue Agents

Jan 13, 2026

Abstract:Memory is critical for dialogue agents to maintain coherence and enable continuous adaptation in long-term interactions. While existing memory mechanisms offer basic storage and retrieval capabilities, they are hindered by two primary limitations: (1) rigid memory granularity often disrupts semantic integrity, resulting in fragmented and incoherent memory units; (2) prevalent flat retrieval paradigms rely solely on surface-level semantic similarity, neglecting the structural cues of discourse required to navigate and locate specific episodic contexts. To mitigate these limitations, drawing inspiration from Event Segmentation Theory, we propose ES-Mem, a framework incorporating two core components: (1) a dynamic event segmentation module that partitions long-term interactions into semantically coherent events with distinct boundaries; (2) a hierarchical memory architecture that constructs multi-layered memories and leverages boundary semantics to anchor specific episodic memory for precise context localization. Evaluations on two memory benchmarks demonstrate that ES-Mem yields consistent performance gains over baseline methods. Furthermore, the proposed event segmentation module exhibits robust applicability on dialogue segmentation datasets.

Rectify Evaluation Preference: Improving LLMs' Critique on Math Reasoning via Perplexity-aware Reinforcement Learning

Nov 13, 2025

Abstract:To improve the Multi-step Mathematical Reasoning (MsMR) of Large Language Models (LLMs), it is crucial to obtain scalable supervision from the corpus by automatically critiquing mistakes in the reasoning process and rendering a final verdict on each problem-solution pair. Most existing methods rely on crafting high-quality supervised fine-tuning demonstrations to enhance critiquing capability and pay little attention to the underlying reason for the poor critiquing performance of LLMs. In this paper, we orthogonally quantify and investigate a potential reason, imbalanced evaluation preference, and conduct a statistical preference analysis. Motivated by this analysis, we propose a novel perplexity-aware reinforcement learning algorithm that rectifies the evaluation preference and improves critiquing capability. Specifically, to probe LLMs' critiquing characteristics, we construct a One-to-many Problem-Solution (OPS) benchmark that quantifies how an LLM's behavior differs when it evaluates problem solutions generated by itself versus by other models. Then, to investigate this behavior difference in depth, we conduct a statistical preference analysis based on perplexity and find an intriguing phenomenon: "LLMs tend to judge solutions with lower perplexity as correct", which we call imbalanced evaluation preference. To rectify this preference, we use perplexity as the guiding signal in Group Relative Policy Optimization, encouraging the LLMs to explore trajectories that judge lower-perplexity solutions as wrong and higher-perplexity solutions as correct. Extensive experiments on our OPS benchmark and existing critic benchmarks demonstrate the validity of our method.
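The perplexity that this abstract builds on can be illustrated with a minimal sketch. It uses the standard definition (the exponential of the average negative log-likelihood per token) and is not the paper's training code; the function name `perplexity` and the example log-probabilities are assumptions made for illustration.

```python
import math


def perplexity(token_logprobs):
    """Perplexity of a sequence given per-token natural-log probabilities."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    # Average negative log-likelihood per token, then exponentiate.
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_nll)


# Hypothetical per-token log-probabilities for two candidate solutions.
familiar_solution = [math.log(0.5)] * 4    # the model finds each token likely
unfamiliar_solution = [math.log(0.1)] * 4  # the model finds each token unlikely

print(perplexity(familiar_solution))    # close to 2
print(perplexity(unfamiliar_solution))  # close to 10
```

Under the preference described in the abstract, a critic model would be more inclined to accept the first solution than the second, independent of whether either is actually correct.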

Embodied World Models Emerge from Navigational Task in Open-Ended Environments

Apr 15, 2025

Abstract:Understanding how artificial systems can develop spatial awareness and reasoning has long been a challenge in AI research. Traditional models often rely on passive observation, but embodied cognition theory suggests that deeper understanding emerges from active interaction with the environment. This study investigates whether neural networks can autonomously internalize spatial concepts through interaction, focusing on planar navigation tasks. Using Gated Recurrent Units (GRUs) combined with Meta-Reinforcement Learning (Meta-RL), we show that agents can learn to encode spatial properties like direction, distance, and obstacle avoidance. We introduce Hybrid Dynamical Systems (HDS) to model the agent-environment interaction as a closed dynamical system, revealing stable limit cycles that correspond to optimal navigation strategies. Ridge Representation allows us to map navigation paths into a fixed-dimensional behavioral space, enabling comparison with neural states. Canonical Correlation Analysis (CCA) confirms strong alignment between these representations, suggesting that the agent's neural states actively encode spatial knowledge. Intervention experiments further show that specific neural dimensions are causally linked to navigation performance. This work provides an approach to bridging the gap between action and perception in AI, offering new insights into building adaptive, interpretable models that can generalize across complex environments. The causal validation of neural representations also opens new avenues for understanding and controlling the internal mechanisms of AI systems, pushing the boundaries of how machines learn and reason in dynamic, real-world scenarios.

Semi-Gradient SARSA Routing with Theoretical Guarantee on Traffic Stability and Weight Convergence

Mar 19, 2025

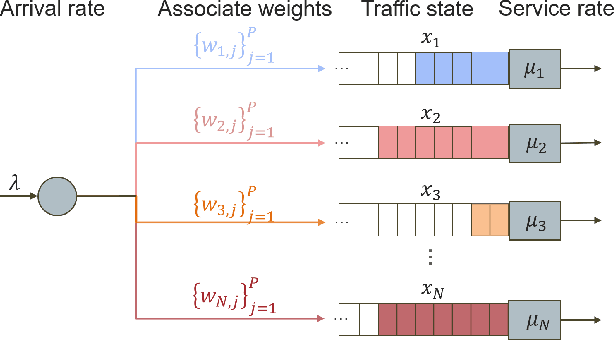

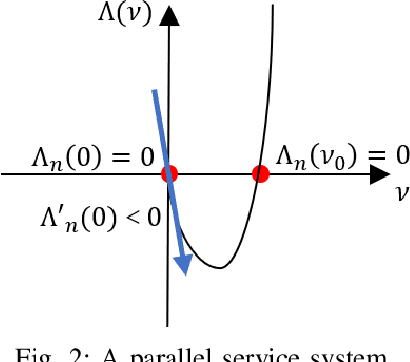

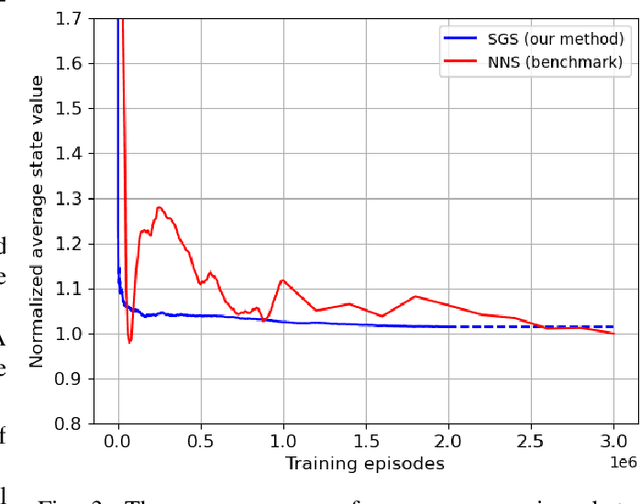

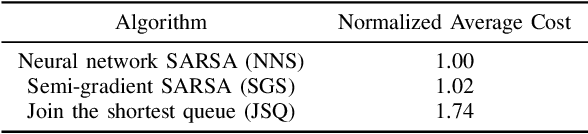

Abstract:We consider the traffic control problem of dynamic routing over parallel servers, which arises in a variety of engineering systems such as transportation and data transmission. We propose a semi-gradient, on-policy algorithm that learns an approximate optimal routing policy. The algorithm uses generic basis functions with flexible weights to approximate the value function across the unbounded state space. Consequently, the training process lacks Lipschitz continuity of the gradient, boundedness of the temporal-difference error, and a prior guarantee on ergodicity, which are the standard prerequisites in the existing literature on reinforcement learning theory. To address this, we combine a Lyapunov approach and an ordinary differential equation-based method to jointly characterize the behavior of the traffic state and the approximation weights. Our theoretical analysis proves that the training scheme guarantees traffic state stability and ensures almost sure convergence of the weights to the approximate optimum. We also demonstrate via simulations that our algorithm attains significantly faster convergence than neural network-based methods with an insignificant approximation error.
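For readers unfamiliar with the method named in the title, the following is a minimal sketch of the textbook semi-gradient SARSA update with a linear value approximation. It is not the paper's algorithm or its basis functions: the helper names, the feature vectors, the step size `alpha`, and the discount `gamma` are all illustrative assumptions.

```python
def q_value(weights, phi):
    """Linear approximation: Q(s, a) is the dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, phi))


def sarsa_update(weights, phi, cost, phi_next, alpha=0.01, gamma=0.9):
    """One semi-gradient SARSA step.

    phi      -- feature vector of the current state-action pair (hypothetical)
    cost     -- one-step cost observed after taking the action
    phi_next -- feature vector of the next state-action pair chosen on-policy
    """
    # Temporal-difference error; the bootstrapped target is treated as a constant.
    td_error = cost + gamma * q_value(weights, phi_next) - q_value(weights, phi)
    # For a linear approximator the gradient of Q w.r.t. the weights is phi.
    return [w + alpha * td_error * x for w, x in zip(weights, phi)]


# Hypothetical example with two basis functions.
w = sarsa_update([0.0, 0.0], phi=[1.0, 2.0], cost=1.0,
                 phi_next=[0.0, 0.0], alpha=0.5)
print(w)
```

The update is called "semi-gradient" because it differentiates only the current estimate and not the bootstrapped target. The temporal-difference error in this update is the quantity whose boundedness the abstract says cannot be assumed on an unbounded state space.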

Benchmarking Multimodal RAG through a Chart-based Document Question-Answering Generation Framework

Feb 20, 2025

Abstract:Multimodal Retrieval-Augmented Generation (MRAG) enhances reasoning capabilities by integrating external knowledge. However, existing benchmarks primarily focus on simple image-text interactions, overlooking complex visual formats like charts that are prevalent in real-world applications. In this work, we introduce a novel task, Chart-based MRAG, to address this limitation. To semi-automatically generate high-quality evaluation samples, we propose CHARt-based document question-answering GEneration (CHARGE), a framework that produces evaluation data through structured keypoint extraction, crossmodal verification, and keypoint-based generation. By combining CHARGE with expert validation, we construct Chart-MRAG Bench, a comprehensive benchmark for chart-based MRAG evaluation, featuring 4,738 question-answering pairs across 8 domains from real-world documents. Our evaluation reveals three critical limitations in current approaches: (1) unified multimodal embedding retrieval methods struggle in chart-based scenarios, (2) even with ground-truth retrieval, state-of-the-art MLLMs achieve only 58.19% Correctness and 73.87% Coverage scores, and (3) MLLMs demonstrate consistent text-over-visual modality bias during Chart-based MRAG reasoning. The CHARGE and Chart-MRAG Bench are released at https://github.com/Nomothings/CHARGE.git.

COT: A Generative Approach for Hate Speech Counter-Narratives via Contrastive Optimal Transport

Jun 18, 2024

Abstract:Counter-narratives, which are direct responses consisting of non-aggressive fact-based arguments, have emerged as a highly effective approach to combat the proliferation of hate speech. Previous methodologies have primarily focused on fine-tuning and post-editing techniques to ensure the fluency of generated content, while overlooking the critical aspects of individualization and relevance to specific hatred targets, such as LGBT groups and immigrants. This paper introduces a novel framework based on contrastive optimal transport, which addresses the challenges of maintaining target interaction and promoting diversification in generating counter-narratives. First, an Optimal Transport Kernel (OTK) module is used to incorporate hatred target information into the token representations, with comparison pairs extracted between the original and transported features. Second, a self-contrastive learning module is employed to address the issue of model degeneration by generating an anisotropic distribution of token representations. Finally, a target-oriented search method is integrated as an improved decoding strategy to explicitly promote domain relevance and diversification during inference. This strategy modifies the model's confidence score by considering both token similarity and target relevance. Quantitative and qualitative experiments on two benchmark datasets demonstrate that our proposed model significantly outperforms current methods on metrics covering multiple aspects.

Dyna-Style Learning with A Macroscopic Model for Vehicle Platooning in Mixed-Autonomy Traffic

May 03, 2024

Abstract:Platooning of connected and autonomous vehicles (CAVs) plays a vital role in modernizing highways, ushering in enhanced efficiency and safety. This paper explores the significance of platooning in smart highways, employing a coupled partial differential equation (PDE) and ordinary differential equation (ODE) model to elucidate the complex interaction between bulk traffic flow and CAV platoons. Our study focuses on developing a Dyna-style planning and learning framework tailored for platoon control, with a specific goal of reducing fuel consumption. By harnessing the coupled PDE-ODE model, we improve data efficiency in Dyna-style learning through virtual experiences. Simulation results validate the effectiveness of our macroscopic model in modeling platoons within mixed-autonomy settings, demonstrating a notable 10.11% reduction in vehicular fuel consumption compared to conventional approaches.
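The Dyna-style idea of mixing real and virtual experience can be sketched with the classic tabular Dyna-Q step below. This is a generic illustration and not the paper's framework: there the virtual experience comes from the coupled PDE-ODE traffic model, whereas here a simple lookup table of remembered transitions stands in for the model. The function name, the state and action encodings, and all parameters are assumptions.

```python
import random


def dyna_q_step(q, model, s, a, r, s_next, actions,
                alpha=0.1, gamma=0.95, n_planning=5, rng=random):
    """One Dyna-Q step: learn from a real transition, then replay simulated ones."""

    def q_update(state, action, reward, next_state):
        best_next = max(q.get((next_state, b), 0.0) for b in actions)
        old = q.get((state, action), 0.0)
        q[(state, action)] = old + alpha * (reward + gamma * best_next - old)

    # 1. Direct reinforcement learning from the real transition.
    q_update(s, a, r, s_next)
    # 2. Model learning: remember what this state-action pair led to.
    model[(s, a)] = (r, s_next)
    # 3. Planning: extra updates from virtual experience drawn from the model.
    for _ in range(n_planning):
        ps, pa = rng.choice(list(model))
        pr, ps2 = model[(ps, pa)]
        q_update(ps, pa, pr, ps2)


# Hypothetical usage with two abstract states and two actions.
q_table, learned_model = {}, {}
dyna_q_step(q_table, learned_model, s=0, a="hold", r=1.0, s_next=1,
            actions=["hold", "accelerate"])
print(q_table[(0, "hold")])
```

Each real transition is reused several times during planning, which is the source of the data-efficiency gain that Dyna-style methods aim for.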

V2X-Real: a Large-Scale Dataset for Vehicle-to-Everything Cooperative Perception

Mar 24, 2024

Abstract:Recent advancements in Vehicle-to-Everything (V2X) technologies have enabled autonomous vehicles to share sensing information to see through occlusions, greatly boosting the perception capability. However, there are no real-world datasets to facilitate the real V2X cooperative perception research -- existing datasets either only support Vehicle-to-Infrastructure cooperation or Vehicle-to-Vehicle cooperation. In this paper, we propose a dataset that has a mixture of multiple vehicles and smart infrastructure simultaneously to facilitate the V2X cooperative perception development with multi-modality sensing data. Our V2X-Real is collected using two connected automated vehicles and two smart infrastructures, which are all equipped with multi-modal sensors including LiDAR sensors and multi-view cameras. The whole dataset contains 33K LiDAR frames and 171K camera data with over 1.2M annotated bounding boxes of 10 categories in very challenging urban scenarios. According to the collaboration mode and ego perspective, we derive four types of datasets for Vehicle-Centric, Infrastructure-Centric, Vehicle-to-Vehicle, and Infrastructure-to-Infrastructure cooperative perception. Comprehensive multi-class multi-agent benchmarks of SOTA cooperative perception methods are provided. The V2X-Real dataset and benchmark codes will be released.

Chain-of-Specificity: An Iteratively Refining Method for Eliciting Knowledge from Large Language Models

Feb 20, 2024

Abstract:Large Language Models (LLMs) exhibit remarkable generative capabilities, enabling the generation of valuable information. Despite these advancements, previous research found that LLMs sometimes struggle to adhere to specific constraints (e.g., in a specific place or at a specific time), at times even overlooking them, which leads to responses that are either too generic or not fully satisfactory. Existing approaches attempted to address this issue by decomposing or rewriting input instructions, yet they fall short in adequately emphasizing specific constraints and in unlocking the underlying knowledge (e.g., programming within the context of software development). In response, this paper proposes a simple yet effective method named Chain-of-Specificity (CoS). Specifically, CoS iteratively emphasizes the specific constraints in the input instructions, unlocks knowledge within LLMs, and refines responses. Experiments conducted on publicly available and self-built complex datasets demonstrate that CoS outperforms existing methods in enhancing generated content, especially in terms of specificity. In addition, as the number of specific constraints increases, other baselines falter, while CoS still performs well. Moreover, we show that distilling responses generated by CoS effectively enhances the ability of smaller models to follow constrained instructions. Resources of this paper will be released for further research.

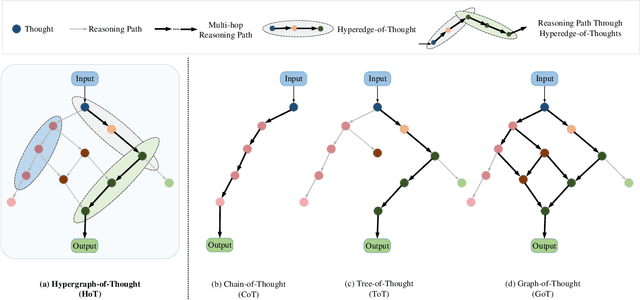

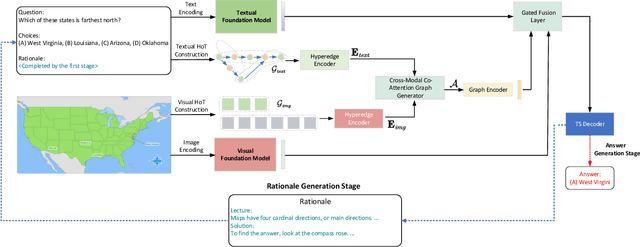

Thinking Like an Expert: Multimodal Hypergraph-of-Thought (HoT) Reasoning to boost Foundation Models

Aug 11, 2023

Abstract:Reasoning ability is one of the most crucial capabilities of a foundation model, signifying its capacity to address complex reasoning tasks. The Chain-of-Thought (CoT) technique is widely regarded as one of the effective methods for enhancing the reasoning ability of foundation models and has garnered significant attention. However, the reasoning process of CoT is linear and step-by-step, similar to an individual's logical reasoning, which makes it suitable for general and slightly complicated problems. In contrast, the thinking pattern of an expert has two prominent characteristics that CoT cannot handle appropriately, i.e., high-order multi-hop reasoning and multimodal comparative judgement. Therefore, the core motivation of this paper is to go beyond CoT and construct a reasoning paradigm that can think like an expert. A hyperedge of a hypergraph can connect multiple vertices, making it naturally suitable for modelling high-order relationships. Inspired by this, this paper proposes a multimodal Hypergraph-of-Thought (HoT) reasoning paradigm, which gives foundation models the expert-level ability of high-order multi-hop reasoning and multimodal comparative judgement. Specifically, a textual hypergraph-of-thought is constructed using triples as the primary thoughts to model higher-order relationships, and a hyperedge-of-thought is generated through multi-hop walking paths to achieve multi-hop inference. Furthermore, we devise a visual hypergraph-of-thought that interacts with the textual hypergraph-of-thought via Cross-modal Co-Attention Graph Learning for multimodal comparative verification. Experiments on the ScienceQA benchmark demonstrate that the proposed HoT-based T5 outperforms CoT-based GPT3.5 and ChatGPT and is on par with CoT-based GPT4 while using a smaller model.
