Kevin Jamieson

Improved Regret Bounds for Linear Bandits with Heavy-Tailed Rewards

Jun 05, 2025

Abstract:We study stochastic linear bandits with heavy-tailed rewards, where the rewards have a finite $(1+\epsilon)$-absolute central moment bounded by $\upsilon$ for some $\epsilon \in (0,1]$. We improve both upper and lower bounds on the minimax regret compared to prior work. When $\upsilon = \mathcal{O}(1)$, the best prior known regret upper bound is $\tilde{\mathcal{O}}(d T^{\frac{1}{1+\epsilon}})$. While a lower bound with the same scaling has been given, it relies on a construction using $\upsilon = \mathcal{O}(d)$, and adapting the construction to the bounded-moment regime with $\upsilon = \mathcal{O}(1)$ yields only a $\Omega(d^{\frac{\epsilon}{1+\epsilon}} T^{\frac{1}{1+\epsilon}})$ lower bound. This matches the known rate for multi-armed bandits and is generally loose for linear bandits, in particular being $\sqrt{d}$ below the optimal rate in the finite-variance case ($\epsilon = 1$). We propose a new elimination-based algorithm guided by experimental design, which achieves regret $\tilde{\mathcal{O}}(d^{\frac{1+3\epsilon}{2(1+\epsilon)}} T^{\frac{1}{1+\epsilon}})$, thus improving the dependence on $d$ for all $\epsilon \in (0,1)$ and recovering a known optimal result for $\epsilon = 1$. We also establish a lower bound of $\Omega(d^{\frac{2\epsilon}{1+\epsilon}} T^{\frac{1}{1+\epsilon}})$, which strictly improves upon the multi-armed bandit rate and highlights the hardness of heavy-tailed linear bandit problems. For finite action sets, we derive similarly improved upper and lower bounds for regret. Finally, we provide action set dependent regret upper bounds showing that for some geometries, such as $l_p$-norm balls for $p \le 1 + \epsilon$, we can further reduce the dependence on $d$, and we can handle infinite-dimensional settings via the kernel trick, in particular establishing new regret bounds for the Mat\'ern kernel that are the first to be sublinear for all $\epsilon \in (0, 1]$.
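
To make the dimension dependence concrete, the following minimal sketch tabulates the exponent of $d$ in each bound quoted in the abstract above, as a function of $\epsilon$. The function name `d_exponents` and the dictionary keys are illustrative choices, not notation from the paper; constants and logarithmic factors are ignored, and all bounds share the same $T^{\frac{1}{1+\epsilon}}$ factor.

```python
from fractions import Fraction


def d_exponents(eps):
    """Exponent of d in each regret bound from the abstract, for 0 < eps <= 1.

    Keys are hypothetical labels chosen for this sketch.
    """
    eps = Fraction(eps)
    return {
        # Prior upper bound: d * T^(1/(1+eps))
        "prior_upper": Fraction(1),
        # New upper bound: d^((1+3*eps)/(2*(1+eps))) * T^(1/(1+eps))
        "new_upper": (1 + 3 * eps) / (2 * (1 + eps)),
        # New lower bound: d^(2*eps/(1+eps)) * T^(1/(1+eps))
        "new_lower": 2 * eps / (1 + eps),
        # Multi-armed-bandit-type lower bound: d^(eps/(1+eps)) * T^(1/(1+eps))
        "mab_lower": eps / (1 + eps),
    }


for eps in (Fraction(1, 4), Fraction(1, 2), Fraction(1)):
    print(eps, d_exponents(eps))
```

At $\epsilon = 1$ the new upper and lower exponents both equal 1, while at $\epsilon = 1/2$ they are 5/6 and 2/3, so a gap in the dependence on $d$ remains for $\epsilon < 1$.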

Learning to Incentivize in Repeated Principal-Agent Problems with Adversarial Agent Arrivals

May 29, 2025

Abstract:We initiate the study of a repeated principal-agent problem over a finite horizon $T$, where a principal sequentially interacts with $K\geq 2$ types of agents arriving in an adversarial order. At each round, the principal strategically chooses one of the $N$ arms to incentivize for an arriving agent of unknown type. The agent then chooses an arm based on its own utility and the provided incentive, and the principal receives a corresponding reward. The objective is to minimize regret against the best incentive in hindsight. Without prior knowledge of agent behavior, we show that the problem becomes intractable, leading to linear regret. We analyze two key settings where sublinear regret is achievable. In the first setting, the principal knows the arm each agent type would select greedily for any given incentive. Under this setting, we propose an algorithm that achieves a regret bound of $O(\min\{\sqrt{KT\log N},K\sqrt{T}\})$ and provide a matching lower bound up to a $\log K$ factor. In the second setting, an agent's response varies smoothly with the incentive and is governed by a Lipschitz constant $L\geq 1$. Under this setting, we show that there is an algorithm with a regret bound of $\tilde{O}((LN)^{1/3}T^{2/3})$ and establish a matching lower bound up to logarithmic factors. Finally, we extend our algorithmic results for both settings by allowing the principal to incentivize multiple arms simultaneously in each round.
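
As a rough numerical illustration of the two rates quoted above, the sketch below evaluates them with all constants and hidden logarithmic factors dropped. The function names `greedy_setting_rate` and `smooth_setting_rate` are hypothetical, and the values are orders of magnitude only, not actual regret guarantees. In the first rate, $\sqrt{KT\log N}$ is the smaller term exactly when $\log N \le K$.

```python
import math


def greedy_setting_rate(K, N, T):
    """min{sqrt(K T log N), K sqrt(T)} with constants dropped (first setting)."""
    first = math.sqrt(K * T * math.log(N))
    second = K * math.sqrt(T)
    return min(first, second)


def smooth_setting_rate(L, N, T):
    """(L N)^(1/3) T^(2/3) with log factors dropped (second, Lipschitz setting)."""
    return (L * N) ** (1 / 3) * T ** (2 / 3)


# With K = 2 agent types and N = 1000 arms, log N > K, so K * sqrt(T) is the minimum.
print(greedy_setting_rate(K=2, N=1000, T=100))
print(smooth_setting_rate(L=1, N=8, T=1000))
```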

Stow: Robotic Packing of Items into Fabric Pods

May 07, 2025

Abstract:This paper presents a compliant manipulation system capable of placing items onto densely packed shelves. The wide diversity of items and strict business requirements for high production rates and low defect generation have prohibited warehouse robotics from performing this task. Our innovations in hardware, perception, decision-making, motion planning, and control have enabled this system to perform over 500,000 stows in a large e-commerce fulfillment center. The system achieves human levels of packing density and speed while prioritizing work on overhead shelves to enhance the safety of humans working alongside the robots.

Efficient Near-Optimal Algorithm for Online Shortest Paths in Directed Acyclic Graphs with Bandit Feedback Against Adaptive Adversaries

Apr 01, 2025

Abstract:In this paper, we study the online shortest path problem in directed acyclic graphs (DAGs) under bandit feedback against an adaptive adversary. Given a DAG $G = (V, E)$ with a source node $v_{\mathsf{s}}$ and a sink node $v_{\mathsf{t}}$, let $X \subseteq \{0,1\}^{|E|}$ denote the set of all paths from $v_{\mathsf{s}}$ to $v_{\mathsf{t}}$. At each round $t$, we select a path $\mathbf{x}_t \in X$ and receive bandit feedback on our loss $\langle \mathbf{x}_t, \mathbf{y}_t \rangle \in [-1,1]$, where $\mathbf{y}_t$ is an adversarially chosen loss vector. Our goal is to minimize regret with respect to the best path in hindsight over $T$ rounds. We propose the first computationally efficient algorithm to achieve a near-minimax optimal regret bound of $\tilde O(\sqrt{|E|T\log |X|})$ with high probability against any adaptive adversary, where $\tilde O(\cdot)$ hides logarithmic factors in the number of edges $|E|$. Our algorithm leverages a novel loss estimator and a centroid-based decomposition in a nontrivial manner to attain this regret bound. As an application, we show that our algorithm for DAGs provides state-of-the-art efficient algorithms for $m$-sets, extensive-form games, the Colonel Blotto game, shortest walks in directed graphs, hypercubes, and multi-task multi-armed bandits, achieving improved high-probability regret guarantees in all these settings.
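
The regret bound above depends on $\log |X|$, and the number of source-to-sink paths $|X|$ can grow exponentially in $|E|$, which is why enumerating paths is not an option for an efficient algorithm. The sketch below only illustrates this quantity: it counts paths in a DAG by memoized recursion. It is not the paper's algorithm, and the function name `count_paths` and the chain-of-diamonds example graph are assumptions made for illustration.

```python
from functools import lru_cache


def count_paths(edges, source, sink):
    """Count source-to-sink paths in a DAG given as a list of (u, v) edges."""
    successors = {}
    for u, v in edges:
        successors.setdefault(u, []).append(v)

    @lru_cache(maxsize=None)
    def paths_from(node):
        # Exactly one (empty) path from the sink to itself.
        if node == sink:
            return 1
        return sum(paths_from(nxt) for nxt in successors.get(node, []))

    return paths_from(source)


def diamond_chain(k):
    """Hypothetical example: k diamonds in series, 4k edges and 2^k paths."""
    edges = []
    for i in range(k):
        for branch in ("a", "b"):
            edges.append((i, (branch, i)))
            edges.append(((branch, i), i + 1))
    return edges


print(count_paths(diamond_chain(3), 0, 3))
```

For the chain of $k$ diamonds, $|E| = 4k$ while $|X| = 2^k$, so $\log |X|$ scales linearly with $|E|$ in this family.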

Active learning of neural population dynamics using two-photon holographic optogenetics

Dec 03, 2024

Abstract:Recent advances in techniques for monitoring and perturbing neural populations have greatly enhanced our ability to study circuits in the brain. In particular, two-photon holographic optogenetics now enables precise photostimulation of experimenter-specified groups of individual neurons, while simultaneous two-photon calcium imaging enables the measurement of ongoing and induced activity across the neural population. Despite the enormous space of potential photostimulation patterns and the time-consuming nature of photostimulation experiments, very little algorithmic work has been done to determine the most effective photostimulation patterns for identifying the neural population dynamics. Here, we develop methods to efficiently select which neurons to stimulate such that the resulting neural responses will best inform a dynamical model of the neural population activity. Using neural population responses to photostimulation in mouse motor cortex, we demonstrate the efficacy of a low-rank linear dynamical systems model, and develop an active learning procedure which takes advantage of low-rank structure to determine informative photostimulation patterns. We demonstrate our approach on both real and synthetic data, obtaining in some cases as much as a two-fold reduction in the amount of data required to reach a given predictive power. Our active stimulation design method is based on a novel active learning procedure for low-rank regression, which may be of independent interest.

Overcoming the Sim-to-Real Gap: Leveraging Simulation to Learn to Explore for Real-World RL

Oct 26, 2024

Abstract:In order to mitigate the sample complexity of real-world reinforcement learning, common practice is to first train a policy in a simulator where samples are cheap, and then deploy this policy in the real world, with the hope that it generalizes effectively. Such \emph{direct sim2real} transfer is not guaranteed to succeed, however, and in cases where it fails, it is unclear how to best utilize the simulator. In this work, we show that in many regimes, while direct sim2real transfer may fail, we can utilize the simulator to learn a set of \emph{exploratory} policies which enable efficient exploration in the real world. In particular, in the setting of low-rank MDPs, we show that coupling these exploratory policies with simple, practical approaches -- least-squares regression oracles and naive randomized exploration -- yields a polynomial sample complexity in the real world, an exponential improvement over direct sim2real transfer, or learning without access to a simulator. To the best of our knowledge, this is the first evidence that simulation transfer yields a provable gain in reinforcement learning in settings where direct sim2real transfer fails. We validate our theoretical results on several realistic robotic simulators and a real-world robotic sim2real task, demonstrating that transferring exploratory policies can yield substantial gains in practice as well.

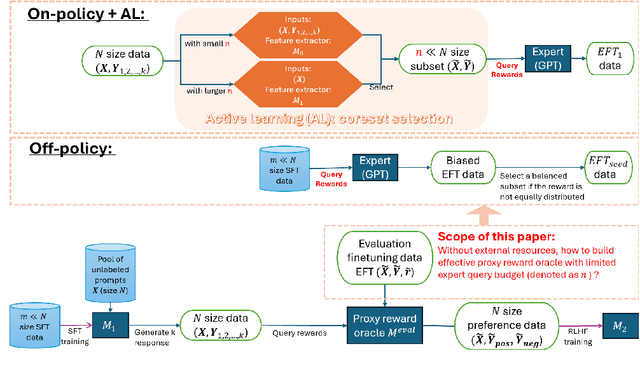

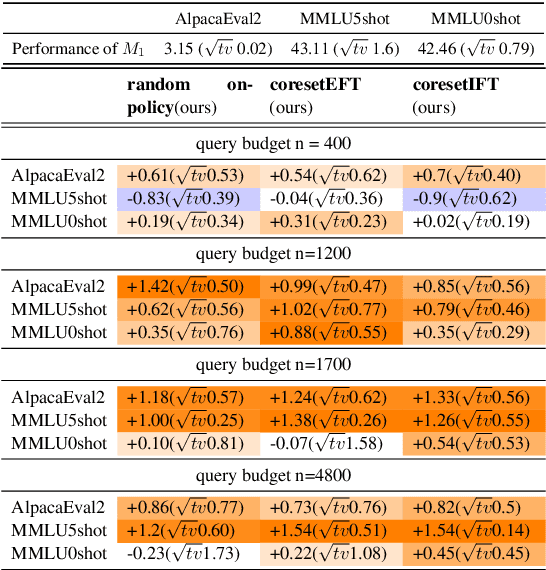

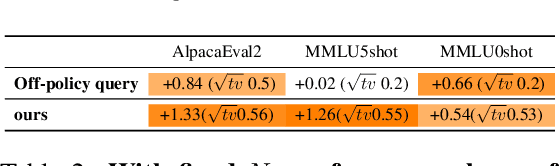

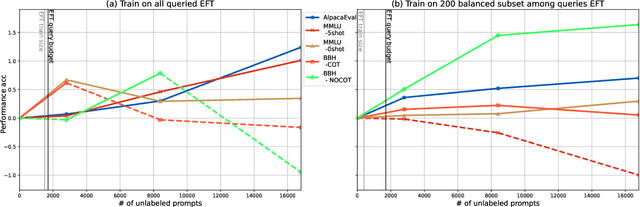

Cost-Effective Proxy Reward Model Construction with On-Policy and Active Learning

Jul 02, 2024

Abstract:Reinforcement learning with human feedback (RLHF), as a widely adopted approach in current large language model pipelines, is \textit{bottlenecked by the size of human preference data}. While traditional methods rely on offline preference dataset constructions, recent approaches have shifted towards online settings, where a learner uses a small amount of labeled seed data and a large pool of unlabeled prompts to iteratively construct new preference data through self-generated responses and high-quality reward/preference feedback. However, most current online algorithms still focus on preference labeling during policy model updating with given feedback oracles, which incurs significant expert query costs. \textit{We are the first to explore cost-effective proxy reward oracle construction strategies for further labeling preferences or rewards with extremely limited labeled data and expert query budgets}. Our approach introduces two key innovations: (1) on-policy query to avoid OOD and imbalance issues in seed data, and (2) active learning to select the most informative data for preference queries. Using these methods, we train an evaluation model with minimal expert-labeled data, which then effectively labels nine times more preference pairs for further RLHF training. For instance, our model using Direct Preference Optimization (DPO) gains over 1% average improvement on AlpacaEval2, MMLU-5shot and MMLU-0shot, with only 1.7K query cost. Our methodology is orthogonal to other direct expert query-based strategies and therefore might be integrated with them to further reduce query costs.

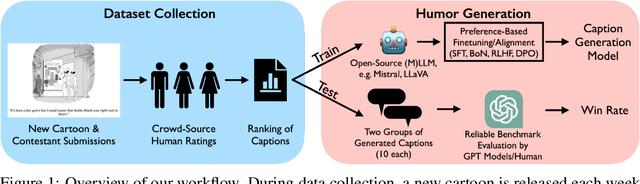

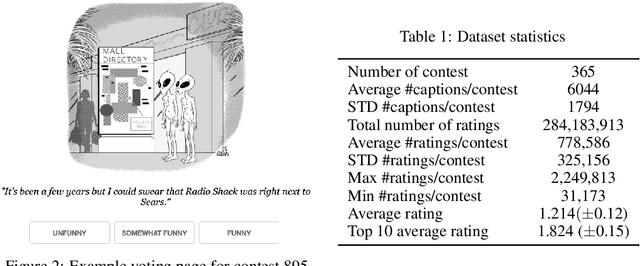

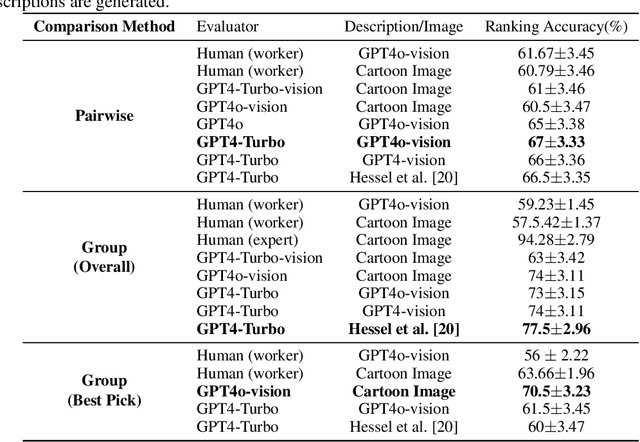

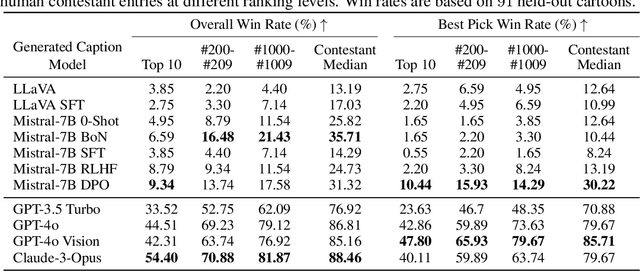

Humor in AI: Massive Scale Crowd-Sourced Preferences and Benchmarks for Cartoon Captioning

Jun 15, 2024

Abstract:We present a novel multimodal preference dataset for creative tasks, consisting of over 250 million human ratings on more than 2.2 million captions, collected through crowdsourcing rating data for The New Yorker's weekly cartoon caption contest over the past eight years. This unique dataset supports the development and evaluation of multimodal large language models and preference-based fine-tuning algorithms for humorous caption generation. We propose novel benchmarks for judging the quality of model-generated captions, utilizing both GPT4 and human judgments to establish ranking-based evaluation strategies. Our experimental results highlight the limitations of current fine-tuning methods, such as RLHF and DPO, when applied to creative tasks. Furthermore, we demonstrate that even state-of-the-art models like GPT4 and Claude currently underperform top human contestants in generating humorous captions. As we conclude this extensive data collection effort, we release the entire preference dataset to the research community, fostering further advancements in AI humor generation and evaluation.

Sample Complexity Reduction via Policy Difference Estimation in Tabular Reinforcement Learning

Jun 11, 2024

Abstract:In this paper, we study the non-asymptotic sample complexity for the pure exploration problem in contextual bandits and tabular reinforcement learning (RL): identifying an epsilon-optimal policy from a set of policies with high probability. Existing work in bandits has shown that it is possible to identify the best policy by estimating only the difference between the behaviors of individual policies, which can be substantially cheaper than estimating the behavior of each policy directly. However, the best-known complexities in RL fail to take advantage of this and instead estimate the behavior of each policy directly. Does it suffice to estimate only the differences in the behaviors of policies in RL? We answer this question positively for contextual bandits but in the negative for tabular RL, showing a separation between contextual bandits and RL. However, inspired by this, we show that it almost suffices to estimate only the differences in RL: if we can estimate the behavior of a single reference policy, it suffices to only estimate how any other policy deviates from this reference policy. We develop an algorithm which instantiates this principle and obtains, to the best of our knowledge, the tightest known bound on the sample complexity of tabular RL.

CLIPLoss and Norm-Based Data Selection Methods for Multimodal Contrastive Learning

May 29, 2024

Abstract:Data selection has emerged as a core issue for large-scale visual-language model pretraining (e.g., CLIP), particularly with noisy web-curated datasets. Three main data selection approaches are: (1) leveraging external non-CLIP models to aid data selection, (2) training new CLIP-style embedding models that are more effective at selecting high-quality data than the original OpenAI CLIP model, and (3) designing better metrics or strategies universally applicable to any CLIP embedding without requiring specific model properties (e.g., CLIPScore is one popular metric). While the first two approaches have been extensively studied, the third remains under-explored. In this paper, we advance the third approach by proposing two new methods. Firstly, instead of classical CLIP scores that only consider the alignment between two modalities from a single sample, we introduce negCLIPLoss, a CLIP loss-inspired method that adds the alignment between one sample and its contrastive pairs as an extra normalization term for better quality measurement. Secondly, when downstream tasks are known, we propose a new norm-based metric, NormSim, to measure the similarity between pretraining data and target data. We test our methods on the data selection benchmark, DataComp~\cite{gadre2023datacomp}. Compared to the best baseline using only OpenAI's CLIP-L/14, our methods achieve a 5.3\% improvement on ImageNet-1k and a 2.8\% improvement on 38 downstream evaluation tasks. Moreover, both negCLIPLoss and NormSim are compatible with existing techniques. By combining our methods with the current best methods DFN~\cite{fang2023data} and HYPE~\cite{kim2024hype}, we can boost average performance on downstream tasks by 0.9\%, achieving a new state-of-the-art.
