Arnab Maiti

Learning to Incentivize in Repeated Principal-Agent Problems with Adversarial Agent Arrivals

May 29, 2025

Abstract: We initiate the study of a repeated principal-agent problem over a finite horizon $T$, where a principal sequentially interacts with $K\geq 2$ types of agents arriving in an adversarial order. At each round, the principal strategically chooses one of the $N$ arms to incentivize for an arriving agent of unknown type. The agent then chooses an arm based on its own utility and the provided incentive, and the principal receives a corresponding reward. The objective is to minimize regret against the best incentive in hindsight. Without prior knowledge of agent behavior, we show that the problem becomes intractable, leading to linear regret. We analyze two key settings where sublinear regret is achievable. In the first setting, the principal knows the arm each agent type would select greedily for any given incentive. Under this setting, we propose an algorithm that achieves a regret bound of $O(\min\{\sqrt{KT\log N},K\sqrt{T}\})$ and provide a matching lower bound up to a $\log K$ factor. In the second setting, an agent's response varies smoothly with the incentive and is governed by a Lipschitz constant $L\geq 1$. Under this setting, we show that there is an algorithm with a regret bound of $\tilde{O}((LN)^{1/3}T^{2/3})$ and establish a matching lower bound up to logarithmic factors. Finally, we extend our algorithmic results for both settings by allowing the principal to incentivize multiple arms simultaneously in each round.

Efficient Near-Optimal Algorithm for Online Shortest Paths in Directed Acyclic Graphs with Bandit Feedback Against Adaptive Adversaries

Apr 01, 2025

Abstract: In this paper, we study the online shortest path problem in directed acyclic graphs (DAGs) under bandit feedback against an adaptive adversary. Given a DAG $G = (V, E)$ with a source node $v_{\mathsf{s}}$ and a sink node $v_{\mathsf{t}}$, let $X \subseteq \{0,1\}^{|E|}$ denote the set of all paths from $v_{\mathsf{s}}$ to $v_{\mathsf{t}}$. At each round $t$, we select a path $\mathbf{x}_t \in X$ and receive bandit feedback on our loss $\langle \mathbf{x}_t, \mathbf{y}_t \rangle \in [-1,1]$, where $\mathbf{y}_t$ is an adversarially chosen loss vector. Our goal is to minimize regret with respect to the best path in hindsight over $T$ rounds. We propose the first computationally efficient algorithm to achieve a near-minimax optimal regret bound of $\tilde O(\sqrt{|E|T\log |X|})$ with high probability against any adaptive adversary, where $\tilde O(\cdot)$ hides logarithmic factors in the number of edges $|E|$. Our algorithm leverages a novel loss estimator and a centroid-based decomposition in a nontrivial manner to attain this regret bound. As an application, we show that our algorithm for DAGs provides state-of-the-art efficient algorithms for $m$-sets, extensive-form games, the Colonel Blotto game, shortest walks in directed graphs, hypercubes, and multi-task multi-armed bandits, achieving improved high-probability regret guarantees in all these settings.
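To make the path representation in the abstract above concrete, here is a minimal sketch that encodes each source-to-sink path as a 0/1 indicator vector over the edge list and evaluates the loss as an inner product. The function names and the tiny example graph are illustrative assumptions, and the exhaustive enumeration is exponential in general, so this is not the efficient algorithm the abstract describes.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]


def enumerate_paths(edges: List[Edge], source: str, sink: str) -> List[List[int]]:
    """Return every source-to-sink path as a 0/1 indicator vector over `edges`.

    Assumes the edge list describes a DAG; brute force, for illustration only.
    """
    # Map each node to the indices of its outgoing edges.
    outgoing: Dict[str, List[int]] = {}
    for idx, (u, _) in enumerate(edges):
        outgoing.setdefault(u, []).append(idx)

    paths: List[List[int]] = []

    def dfs(node: str, used: List[int]) -> None:
        if node == sink:
            vec = [0] * len(edges)
            for idx in used:
                vec[idx] = 1
            paths.append(vec)
            return
        for idx in outgoing.get(node, []):
            dfs(edges[idx][1], used + [idx])

    dfs(source, [])
    return paths


def path_loss(x: List[int], y: List[float]) -> float:
    """Loss of path x under loss vector y, i.e. the inner product <x, y>."""
    return sum(xi * yi for xi, yi in zip(x, y))
```

With `edges = [("s", "a"), ("a", "t"), ("s", "t")]`, the set $X$ contains two vectors, one per path, and under bandit feedback the learner would observe only the scalar `path_loss(x, y)` for the path it played.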

Near-Optimal Pure Exploration in Matrix Games: A Generalization of Stochastic Bandits & Dueling Bandits

Oct 25, 2023

Abstract: We study the sample complexity of identifying the pure strategy Nash equilibrium (PSNE) in a two-player zero-sum matrix game with noise. Formally, we are given a stochastic model where any learner can sample an entry $(i,j)$ of the input matrix $A\in[-1,1]^{n\times m}$ and observe $A_{i,j}+\eta$ where $\eta$ is a zero-mean 1-sub-Gaussian noise. The aim of the learner is to identify the PSNE of $A$, whenever it exists, with high probability while taking as few samples as possible. Zhou et al. (2017) present an instance-dependent sample complexity lower bound that depends only on the entries in the row and column in which the PSNE lies. We design a near-optimal algorithm whose sample complexity matches the lower bound, up to log factors. The problem of identifying the PSNE also generalizes the problem of pure exploration in stochastic multi-armed bandits and dueling bandits, and our result matches the optimal bounds, up to log factors, in both settings.
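As a point of reference for what "identifying the PSNE" means, the sketch below finds a pure strategy Nash equilibrium of a fully known, noise-free matrix. It assumes the convention that the row player maximizes and the column player minimizes, so a PSNE is an entry that is the largest in its column and the smallest in its row. The function name is hypothetical, and this is not the sampling algorithm from the abstract, which only sees noisy entries.

```python
from typing import List, Optional, Tuple


def find_psne(A: List[List[float]]) -> Optional[Tuple[int, int]]:
    """Return (i, j) if A[i][j] is a saddle point of A, else None.

    Assumed convention: the row player maximizes, the column player minimizes.
    """
    n, m = len(A), len(A[0])
    for i in range(n):
        row_min = min(A[i])  # the column player's best response to row i
        for j in range(m):
            col_max = max(A[r][j] for r in range(n))  # row player's best response to column j
            if A[i][j] == row_min and A[i][j] == col_max:
                return (i, j)
    return None
```

For example, `find_psne([[2, 1], [0, -1]])` returns `(0, 1)`, while matching pennies, `[[1, -1], [-1, 1]]`, has no PSNE and yields `None`.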

Logarithmic Regret for Matrix Games against an Adversary with Noisy Bandit Feedback

Jun 22, 2023

Abstract: This paper considers a variant of zero-sum matrix games where at each timestep the row player chooses row $i$, the column player chooses column $j$, and the row player receives a noisy reward with mean $A_{i,j}$. The objective of the row player is to accumulate as much reward as possible, even against an adversarial column player. If the row player uses the EXP3 strategy, an algorithm known for obtaining $\sqrt{T}$ regret against an arbitrary sequence of rewards, it is immediate that the row player also achieves $\sqrt{T}$ regret relative to the Nash equilibrium in this game setting. However, partly motivated by the fact that the EXP3 strategy is myopic to the structure of the game, O'Donoghue et al. (2021) proposed a UCB-style algorithm that leverages the game structure and demonstrated that this algorithm greatly outperforms EXP3 empirically. While they showed that this UCB-style algorithm achieved $\sqrt{T}$ regret, in this paper we ask if there exists an algorithm that provably achieves $\text{polylog}(T)$ regret against any adversary, analogous to results from stochastic bandits. We propose a novel algorithm that answers this question in the affirmative for the simple $2 \times 2$ setting, providing the first instance-dependent guarantees for games in the regret setting. Our algorithm overcomes two major hurdles: 1) obtaining logarithmic regret even though the Nash equilibrium is estimable only at a $1/\sqrt{T}$ rate, and 2) designing row-player strategies that guarantee that either the adversary provides information about the Nash equilibrium, or the row player incurs negative regret. Moreover, in the full information case we address the general $n \times m$ case where the first hurdle is still relevant. Finally, we show that EXP3 and the UCB-based algorithm necessarily cannot perform better than $\sqrt{T}$.
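For readers unfamiliar with the EXP3 baseline mentioned above, here is a minimal sketch of the standard textbook variant with uniform exploration. It is not the paper's proposed algorithm. It assumes rewards lie in $[0,1]$ (the noisy rewards in the abstract would need rescaling), and the names `exp3`, `reward_fn`, `gamma`, and `seed` are illustrative choices.

```python
import math
import random
from typing import Callable, List, Tuple


def exp3(num_arms: int, horizon: int,
         reward_fn: Callable[[int, int], float],
         gamma: float = 0.1, seed: int = 0) -> Tuple[float, List[float]]:
    """Run textbook EXP3; return (total reward, last sampling distribution).

    Assumes reward_fn(t, arm) returns a reward in [0, 1].
    """
    rng = random.Random(seed)
    weights = [1.0] * num_arms
    probs = [1.0 / num_arms] * num_arms
    total = 0.0
    for t in range(horizon):
        wsum = sum(weights)
        # Mix the exponential-weights distribution with uniform exploration.
        probs = [(1 - gamma) * w / wsum + gamma / num_arms for w in weights]
        arm = rng.choices(range(num_arms), weights=probs)[0]
        reward = reward_fn(t, arm)
        total += reward
        # Importance-weighted estimate: unbiased for the pulled arm's reward.
        estimate = reward / probs[arm]
        weights[arm] *= math.exp(gamma * estimate / num_arms)
        # Rescale so weights stay bounded; probabilities are unaffected.
        top = max(weights)
        weights = [w / top for w in weights]
    return total, probs
```

In the matrix game setting, the row player would run this over the rows, with `reward_fn` supplied by the column player's choice and the observation noise. Note that the update uses only the observed reward, which is the sense in which EXP3 ignores the structure of the game.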

Instance-dependent Sample Complexity Bounds for Zero-sum Matrix Games

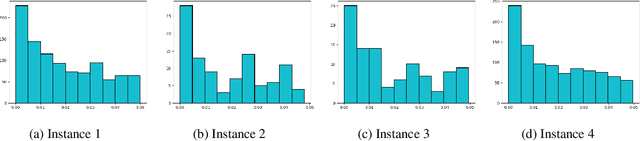

Mar 19, 2023

Abstract: We study the sample complexity of identifying an approximate equilibrium for two-player zero-sum $n\times 2$ matrix games. That is, in a sequence of repeated game plays, how many rounds must the two players play before reaching an approximate equilibrium (e.g., Nash)? We derive instance-dependent bounds that define an ordering over game matrices that captures the intuition that the dynamics of some games converge faster than others. Specifically, we consider a stochastic observation model such that when the two players choose actions $i$ and $j$, respectively, they both observe each other's played actions and a stochastic observation $X_{ij}$ such that $\mathbb E[ X_{ij}] = A_{ij}$. To our knowledge, our work is the first case of instance-dependent lower bounds on the number of rounds the players must play before reaching an approximate equilibrium in the sense that the number of rounds depends on the specific properties of the game matrix $A$ as well as the desired accuracy. We also prove a converse statement: there exist player strategies that achieve this lower bound.

Fairness and Welfare Quantification for Regret in Multi-Armed Bandits

May 27, 2022

Abstract: We extend the notion of regret with a welfarist perspective. Focussing on the classic multi-armed bandit (MAB) framework, the current work quantifies the performance of bandit algorithms by applying a fundamental welfare function, namely the Nash social welfare (NSW) function. This corresponds to equating an algorithm's performance to the geometric mean of its expected rewards and leads us to the study of Nash regret, defined as the difference between the -- a priori unknown -- optimal mean (among the arms) and the algorithm's performance. Since NSW is known to satisfy fairness axioms, our approach complements the utilitarian considerations of average (cumulative) regret, wherein the algorithm is evaluated via the arithmetic mean of its expected rewards. This work develops an algorithm that, given the horizon of play $T$, achieves a Nash regret of $O \left( \sqrt{\frac{{k \log T}}{T}} \right)$, where $k$ denotes the number of arms in the MAB instance. Since, for any algorithm, the Nash regret is at least as much as its average regret (by the AM-GM inequality), the known lower bound on average regret holds for Nash regret as well. Therefore, our Nash regret guarantee is essentially tight. In addition, we develop an anytime algorithm with a Nash regret guarantee of $O \left( \sqrt{\frac{{k\log T}}{T}} \log T \right)$.
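The two regret notions contrasted above can be compared numerically. The sketch below computes average regret (optimal mean minus the arithmetic mean of per-round expected rewards) and Nash regret (optimal mean minus their geometric mean), following the definitions in the abstract. It assumes nonnegative expected rewards, and the function names are illustrative.

```python
import math
from typing import List


def average_regret(best_mean: float, expected_rewards: List[float]) -> float:
    """Optimal mean minus the arithmetic mean of the expected rewards."""
    return best_mean - sum(expected_rewards) / len(expected_rewards)


def nash_regret(best_mean: float, expected_rewards: List[float]) -> float:
    """Optimal mean minus the geometric mean of the expected rewards.

    Assumes nonnegative rewards; any zero reward makes the geometric mean zero.
    """
    if any(r <= 0 for r in expected_rewards):
        geometric_mean = 0.0
    else:
        # Average the logs to avoid underflow in a long product.
        log_sum = sum(math.log(r) for r in expected_rewards)
        geometric_mean = math.exp(log_sum / len(expected_rewards))
    return best_mean - geometric_mean
```

For a sequence such as `[0.9, 0.9, 0.1, 0.9]` with optimal mean `0.9`, the single low-reward round pulls the geometric mean down more than the arithmetic mean, so the Nash regret is at least the average regret, as the AM-GM argument in the abstract requires.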

Streaming Algorithms for Stochastic Multi-armed Bandits

Dec 09, 2020

Abstract: We study the Stochastic Multi-armed Bandit problem under bounded arm-memory. In this setting, the arms arrive in a stream, and the number of arms that can be stored in the memory at any time is bounded. The decision-maker can only pull arms that are present in the memory. We address the problem from the perspective of two standard objectives: 1) regret minimization, and 2) best-arm identification. For regret minimization, we settle an important open question by showing an almost tight hardness. We show $\Omega(T^{2/3})$ cumulative regret in expectation for arm-memory size of $(n-1)$, where $n$ is the number of arms. For best-arm identification, we study two algorithms. First, we present an $O(r)$ arm-memory $r$-round adaptive streaming algorithm to find an $\epsilon$-best arm. In an $r$-round adaptive streaming algorithm for best-arm identification, the arm pulls in each round are decided based on the observed outcomes in the earlier rounds. The best arm is output at the end of $r$ rounds. The upper bound on the sample complexity of our algorithm matches the lower bound for any $r$-round adaptive streaming algorithm. Secondly, we present a heuristic to find the $\epsilon$-best arm with optimal sample complexity, by storing only one extra arm in the memory.
