Jiankun Wang

Robust Docking Maneuvers for Autonomous Trolley Collection: An Optimization-Based Visual Servoing Scheme

Sep 09, 2025

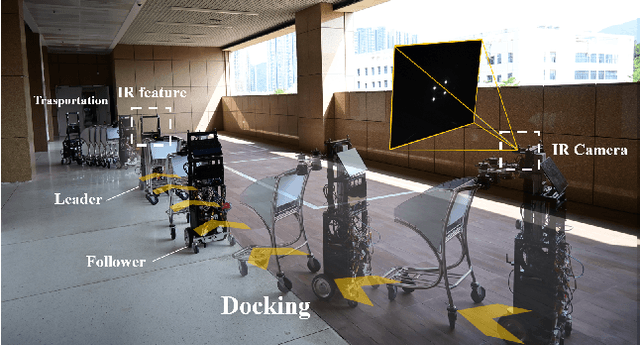

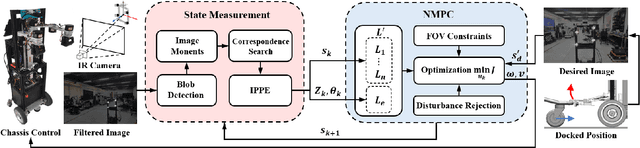

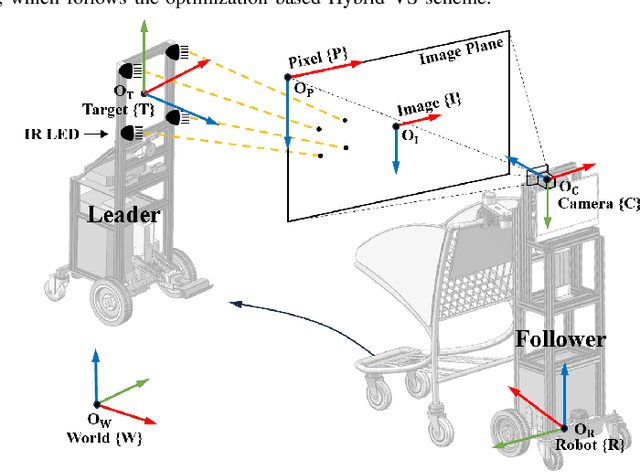

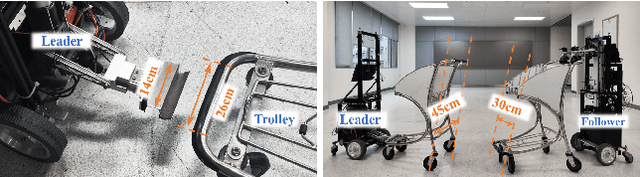

Abstract:Service robots have demonstrated significant potential for autonomous trolley collection and redistribution in public spaces like airports or warehouses to improve efficiency and reduce cost. Usually, a fully autonomous system for the collection and transportation of multiple trolleys is based on a Leader-Follower formation of mobile manipulators, where reliable docking maneuvers of the mobile base are essential to align trolleys into organized queues. However, developing a vision-based robotic docking system faces significant challenges: high precision requirements, environmental disturbances, and inherent robot constraints. To address these challenges, we propose an optimization-based Visual Servoing scheme that incorporates active infrared markers for robust feature extraction across diverse lighting conditions. This framework explicitly models nonholonomic kinematics and visibility constraints within the Hybrid Visual Servoing problem, augmented with an observer for disturbance rejection to ensure precise and stable docking. Experimental results across diverse environments demonstrate the robustness of this system, with quantitative evaluations confirming high docking accuracy.

Socially Aware Robot Crowd Navigation via Online Uncertainty-Driven Risk Adaptation

Jun 17, 2025

Abstract:Navigation in human-robot shared crowded environments remains challenging, as robots are expected to move efficiently while respecting human motion conventions. However, many existing approaches emphasize safety or efficiency while overlooking social awareness. This article proposes Learning-Risk Model Predictive Control (LR-MPC), a data-driven navigation algorithm that balances efficiency, safety, and social awareness. LR-MPC consists of two phases: an offline risk learning phase, where a Probabilistic Ensemble Neural Network (PENN) is trained using risk data from a heuristic MPC-based baseline (HR-MPC), and an online adaptive inference phase, where local waypoints are sampled and globally guided by a Multi-RRT planner. Each candidate waypoint is evaluated for risk by PENN, and predictions are filtered using epistemic and aleatoric uncertainty to ensure robust decision-making. The safest waypoint is selected as the MPC input for real-time navigation. Extensive experiments demonstrate that LR-MPC outperforms baseline methods in success rate and social awareness, enabling robots to navigate complex crowds with high adaptability and low disruption. A website about this work is available at https://sites.google.com/view/lr-mpc.
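The online selection step described in this abstract (score each candidate waypoint with an ensemble, filter by uncertainty, keep the safest) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the thresholds, and the choice to measure epistemic uncertainty as the variance of the ensemble means and aleatoric uncertainty as the mean of the predicted variances are all assumptions made for illustration.

```python
from statistics import fmean, pvariance


def summarize(member_outputs):
    """Collapse ensemble outputs into (risk, epistemic, aleatoric).

    member_outputs: list of (mean, variance) pairs, one per ensemble member.
    Assumed decomposition: epistemic = variance of the member means,
    aleatoric = average of the member variances.
    """
    means = [m for m, _ in member_outputs]
    variances = [v for _, v in member_outputs]
    return fmean(means), pvariance(means), fmean(variances)


def select_waypoint(candidates, max_epistemic=0.05, max_aleatoric=0.10):
    """Return the lowest-risk waypoint whose uncertainties pass both thresholds.

    candidates: dict mapping a waypoint (x, y) to its ensemble outputs.
    The thresholds are hypothetical values, not taken from the paper.
    Returns None when every candidate is filtered out.
    """
    best = None
    for waypoint, member_outputs in candidates.items():
        risk, epistemic, aleatoric = summarize(member_outputs)
        if epistemic > max_epistemic or aleatoric > max_aleatoric:
            continue  # prediction too uncertain to trust
        if best is None or risk < best[1]:
            best = (waypoint, risk)
    return best[0] if best else None


# Hypothetical ensemble outputs for three sampled waypoints.
candidates = {
    (1.0, 0.0): [(0.20, 0.01), (0.22, 0.02), (0.21, 0.01)],
    (0.5, 0.5): [(0.05, 0.01), (0.90, 0.02), (0.10, 0.01)],  # members disagree
    (0.0, 1.0): [(0.40, 0.01), (0.41, 0.01), (0.39, 0.02)],
}
chosen = select_waypoint(candidates)
```

In this toy input, the second waypoint is rejected because the ensemble members disagree strongly, even though two of them predict very low risk; the chosen waypoint would then be handed to the MPC as its target.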

Uni-AIMS: AI-Powered Microscopy Image Analysis

May 11, 2025

Abstract:This paper presents a systematic solution for the intelligent recognition and automatic analysis of microscopy images. We developed a data engine that generates high-quality annotated datasets through a combination of the collection of diverse microscopy images from experiments, synthetic data generation and a human-in-the-loop annotation process. To address the unique challenges of microscopy images, we propose a segmentation model capable of robustly detecting both small and large objects. The model effectively identifies and separates thousands of closely situated targets, even in cluttered visual environments. Furthermore, our solution supports the precise automatic recognition of image scale bars, an essential feature in quantitative microscopic analysis. Building upon these components, we have constructed a comprehensive intelligent analysis platform and validated its effectiveness and practicality in real-world applications. This study not only advances automatic recognition in microscopy imaging but also ensures scalability and generalizability across multiple application domains, offering a powerful tool for automated microscopic analysis in interdisciplinary research.

PierGuard: A Planning Framework for Underwater Robotic Inspection of Coastal Piers

May 07, 2025

Abstract:Using underwater robots instead of humans for the inspection of coastal piers can enhance efficiency while reducing risks. A key challenge in performing these tasks lies in achieving efficient and rapid path planning within complex environments. Sampling-based path planning methods, such as Rapidly-exploring Random Tree* (RRT*), have demonstrated notable performance in high-dimensional spaces. In recent years, researchers have begun designing various geometry-inspired heuristics and neural network-driven heuristics to further enhance the effectiveness of RRT*. However, the performance of these general path planning methods still requires improvement when applied to highly cluttered underwater environments. In this paper, we propose PierGuard, which combines the strengths of bidirectional search and neural network-driven heuristic regions. We design a specialized neural network to generate high-quality heuristic regions in cluttered maps, thereby improving the performance of the path planning. Through extensive simulation and real-world ocean field experiments, we demonstrate the effectiveness and efficiency of our proposed method compared with previous research. Our method achieves approximately 2.6 times the performance of the state-of-the-art geometric-based sampling method and nearly 4.9 times that of the state-of-the-art learning-based sampling method. Our results provide valuable insights for the automation of pier inspection and the enhancement of maritime safety. The updated experimental video is available in the supplementary materials.

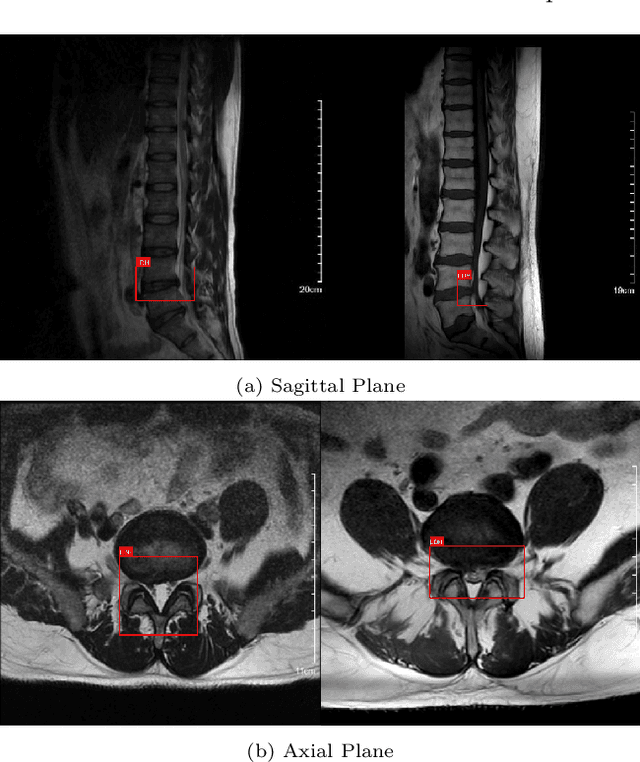

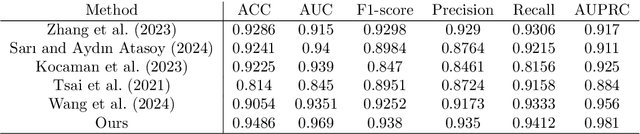

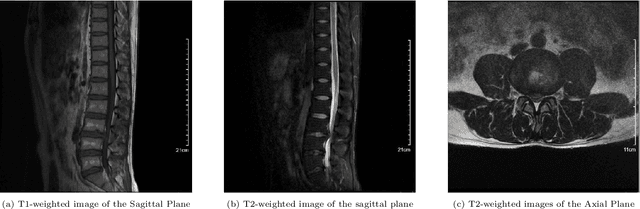

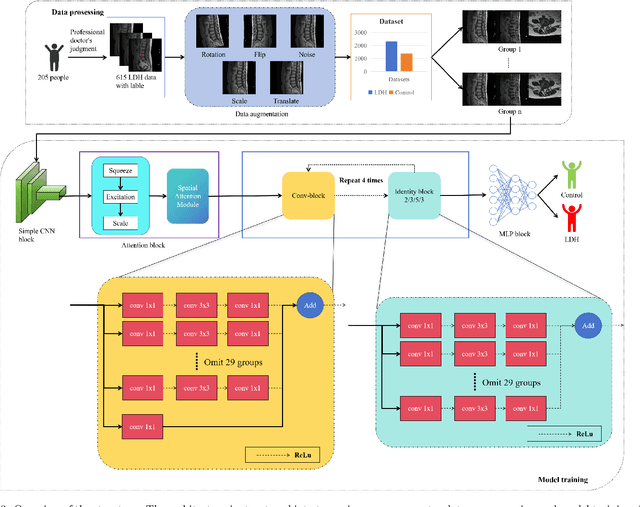

Dual Attention Driven Lumbar Magnetic Resonance Image Feature Enhancement and Automatic Diagnosis of Herniation

Apr 28, 2025

Abstract:Lumbar disc herniation (LDH) is a common musculoskeletal disease that requires magnetic resonance imaging (MRI) for effective clinical management. However, the interpretation of MRI images heavily relies on the expertise of radiologists, leading to delayed diagnosis and high costs for training physicians. Therefore, this paper proposes an innovative automated LDH classification framework. To address these key issues, the framework utilizes T1-weighted and T2-weighted MRI images from 205 people. The framework extracts clinically actionable LDH features and generates standardized diagnostic outputs by leveraging data augmentation and channel and spatial attention mechanisms. These outputs can help physicians make confident and time-effective care decisions when needed. The proposed framework achieves an area under the receiver operating characteristic curve (AUC-ROC) of 0.969 and an accuracy of 0.9486 for LDH detection. The experimental results demonstrate the performance of the proposed framework. Our framework only requires a small number of datasets for training to demonstrate high diagnostic accuracy. This is expected to be a solution to enhance the LDH detection capabilities of primary hospitals.

Endo-TTAP: Robust Endoscopic Tissue Tracking via Multi-Facet Guided Attention and Hybrid Flow-point Supervision

Mar 28, 2025

Abstract:Accurate tissue point tracking in endoscopic videos is critical for robotic-assisted surgical navigation and scene understanding, but remains challenging due to complex deformations, instrument occlusion, and the scarcity of dense trajectory annotations. Existing methods struggle with long-term tracking under these conditions due to limited feature utilization and annotation dependence. We present Endo-TTAP, a novel framework addressing these challenges through: (1) A Multi-Facet Guided Attention (MFGA) module that synergizes multi-scale flow dynamics, DINOv2 semantic embeddings, and explicit motion patterns to jointly predict point positions with uncertainty and occlusion awareness; (2) A two-stage curriculum learning strategy employing an Auxiliary Curriculum Adapter (ACA) for progressive initialization and hybrid supervision. Stage I utilizes synthetic data with optical flow ground truth for uncertainty-occlusion regularization, while Stage II combines unsupervised flow consistency and semi-supervised learning with refined pseudo-labels from off-the-shelf trackers. Extensive validation on two MICCAI Challenge datasets and our collected dataset demonstrates that Endo-TTAP achieves state-of-the-art performance in tissue point tracking, particularly in scenarios characterized by complex endoscopic conditions. The source code and dataset will be available at https://anonymous.4open.science/r/Endo-TTAP-36E5.

Collision Risk Quantification and Conflict Resolution in Trajectory Tracking for Acceleration-Actuated Multi-Robot Systems

Jan 07, 2025

Abstract:One of the pivotal challenges in a multi-robot system is how to maintain accuracy and efficiency while ensuring safety. Prior work either cannot strictly guarantee collision-free operation for an arbitrarily large number of robots, or yields considerably conservative results. The smoothness of the avoidance trajectory also needs to be further optimized. This paper proposes an acceleration-actuated simultaneous obstacle avoidance and trajectory tracking method for arbitrarily large teams of robots that provides a nonconservative collision avoidance strategy and gives approaches for deadlock avoidance. We propose two ways of deadlock resolution; one involves incorporating an auxiliary velocity vector into the error function of the trajectory tracking module, which is proven to have no influence on global convergence of the tracking error. Furthermore, unlike traditional methods that address conflicts after a deadlock occurs, our decision-making mechanism avoids the near-zero velocity, which is much safer and more efficient in crowded environments. Extensive comparisons show that the proposed method is superior to the existing studies when deployed in a large-scale robot system, with minimal invasiveness.

Pan-infection Foundation Framework Enables Multiple Pathogen Prediction

Dec 31, 2024

Abstract:Host-response-based diagnostics can improve the accuracy of diagnosing bacterial and viral infections, thereby reducing inappropriate antibiotic prescriptions. However, the existing cohorts, with limited sample sizes and coarse infection types, are unable to support the exploration of an accurate and generalizable diagnostic model. Here, we curate the largest infection host-response transcriptome data, including 11,247 samples across 89 blood transcriptome datasets from 13 countries and 21 platforms. We build a diagnostic model for pathogen prediction starting from a pan-infection model as foundation (AUC = 0.97) based on the pan-infection dataset. Then, we utilize knowledge distillation to efficiently transfer the insights from this "teacher" model to four lightweight pathogen "student" models, i.e., staphylococcal infection (AUC = 0.99), streptococcal infection (AUC = 0.94), HIV infection (AUC = 0.93), and RSV infection (AUC = 0.94), as well as a sepsis "student" model (AUC = 0.99). The proposed knowledge distillation framework not only facilitates the diagnosis of pathogens using pan-infection data, but also enables an across-disease study from pan-infection to sepsis. Moreover, the framework enables high-degree lightweight design of diagnostic models, which is expected to be adaptively deployed in clinical settings.
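The abstract does not state which distillation objective is used. As a rough illustration of the teacher-to-student transfer it describes, the sketch below assumes the common soft-target formulation: a temperature-softened KL term between teacher and student outputs, blended with cross-entropy on the true label. The function names and the `temperature` and `alpha` values are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Blend a soft-target KL term with hard-label cross-entropy.

    alpha weights the teacher-matching term; (1 - alpha) weights the
    cross-entropy against the ground-truth class index `label`.
    The T^2 factor is the usual rescaling of the softened KL term.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    cross_entropy = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * cross_entropy


# Hypothetical two-class logits: a student that agrees with the teacher
# versus one that contradicts it.
matched = distillation_loss([2.0, 0.0], [2.0, 0.0], label=0)
mismatched = distillation_loss([0.0, 2.0], [2.0, 0.0], label=0)
```

When the student reproduces the teacher's logits the KL term vanishes and only the weighted cross-entropy remains, so `matched` is smaller than `mismatched`.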

Air-Ground Collaborative Robots for Fire and Rescue Missions: Towards Mapping and Navigation Perspective

Dec 30, 2024

Abstract:Air-ground collaborative robots have shown great potential in the field of fire and rescue, which can quickly respond to rescue needs and improve the efficiency of task execution. Mapping and navigation, as the key foundation for air-ground collaborative robots to achieve efficient task execution, have attracted a great deal of attention. This growing interest in collaborative robot mapping and navigation is conducive to improving the intelligence of fire and rescue task execution, but there has been no comprehensive investigation of this field to highlight their strengths. In this paper, we present a systematic review of air-ground collaborative robots for fire and rescue from a new perspective of mapping and navigation. First, an air-ground collaborative robots framework for fire and rescue missions based on unmanned aerial vehicle (UAV) mapping and unmanned ground vehicle (UGV) navigation is introduced. Then, the research progress of mapping and navigation under this framework is systematically summarized, including UAV mapping, UAV/UGV co-localization, and UGV navigation, with their main achievements and limitations. Based on the needs of fire and rescue missions, the collaborative robots with different numbers of UAVs and UGVs are classified, and their practicality in fire and rescue tasks is elaborated, with a focus on the discussion of their merits and demerits. In addition, the application examples of air-ground collaborative robots in various firefighting and rescue scenarios are given. Finally, this paper emphasizes the current challenges and potential research opportunities, rounding up references for practitioners and researchers willing to engage in this vibrant area of air-ground collaborative robots.

Intelligent System for Automated Molecular Patent Infringement Assessment

Dec 10, 2024

Abstract:Automated drug discovery offers significant potential for accelerating the development of novel therapeutics by substituting labor-intensive human workflows with machine-driven processes. However, a critical bottleneck persists in the inability of current automated frameworks to assess whether newly designed molecules infringe upon existing patents, posing significant legal and financial risks. We introduce PatentFinder, a novel tool-enhanced and multi-agent framework that accurately and comprehensively evaluates small molecules for patent infringement. It incorporates both heuristic and model-based tools tailored for decomposed subtasks, featuring: MarkushParser, which is capable of optical chemical structure recognition of molecular and Markush structures, and MarkushMatcher, which enhances large language models' ability to extract substituent groups from molecules accurately. On our benchmark dataset MolPatent-240, PatentFinder outperforms baseline approaches that rely solely on large language models, demonstrating a 13.8% increase in F1-score and a 12% rise in accuracy. Experimental results demonstrate that PatentFinder mitigates label bias to produce balanced predictions and autonomously generates detailed, interpretable patent infringement reports. This work not only addresses a pivotal challenge in automated drug discovery but also demonstrates the potential of decomposing complex scientific tasks into manageable subtasks for specialized, tool-augmented agents.
