Ling Shi

A Fast Method for Planning All Optimal Homotopic Configurations for Tethered Robots and Its Extended Applications

Jul 16, 2025

Abstract:Tethered robots play a pivotal role in specialized environments such as disaster response and underground exploration, where their stable power supply and reliable communication offer unparalleled advantages. However, their motion planning is severely constrained by tether length limitations and entanglement risks, posing significant challenges to achieving optimal path planning. To address these challenges, this study introduces CDT-TCS (Convex Dissection Topology-based Tethered Configuration Search), a novel algorithm that leverages CDT Encoding as a homotopy invariant to represent topological states of paths. By integrating algebraic topology with geometric optimization, CDT-TCS efficiently computes the complete set of optimal feasible configurations for tethered robots at all positions in 2D environments through a single computation. Building on this foundation, we further propose three application-specific algorithms: i) CDT-TPP for optimal tethered path planning, ii) CDT-TMV for multi-goal visiting with tether constraints, iii) CDT-UTPP for distance-optimal path planning of untethered robots. All theoretical results and propositions underlying these algorithms are rigorously proven and thoroughly discussed in this paper. Extensive simulations demonstrate that the proposed algorithms significantly outperform state-of-the-art methods in their respective problem domains. Furthermore, real-world experiments on robotic platforms validate the practicality and engineering value of the proposed framework.

PierGuard: A Planning Framework for Underwater Robotic Inspection of Coastal Piers

May 07, 2025

Abstract:Using underwater robots instead of humans for the inspection of coastal piers can enhance efficiency while reducing risks. A key challenge in performing these tasks lies in achieving efficient and rapid path planning within complex environments. Sampling-based path planning methods, such as Rapidly-exploring Random Tree* (RRT*), have demonstrated notable performance in high-dimensional spaces. In recent years, researchers have begun designing various geometry-inspired heuristics and neural network-driven heuristics to further enhance the effectiveness of RRT*. However, the performance of these general path planning methods still requires improvement when applied to highly cluttered underwater environments. In this paper, we propose PierGuard, which combines the strengths of bidirectional search and neural network-driven heuristic regions. We design a specialized neural network to generate high-quality heuristic regions in cluttered maps, thereby improving the performance of the path planning. Through extensive simulation and real-world ocean field experiments, we demonstrate the effectiveness and efficiency of our proposed method compared with previous research. Our method achieves approximately 2.6 times the performance of the state-of-the-art geometric-based sampling method and nearly 4.9 times that of the state-of-the-art learning-based sampling method. Our results provide valuable insights for the automation of pier inspection and the enhancement of maritime safety. The updated experimental video is available in the supplementary materials.

ProBench: Benchmarking Large Language Models in Competitive Programming

Feb 28, 2025

Abstract:With reasoning language models such as OpenAI-o3 and DeepSeek-R1 emerging, large language models (LLMs) have entered a new phase of development. However, existing benchmarks for coding evaluation are increasingly inadequate for assessing the capability of advanced LLMs in code reasoning. To bridge the gap for high-level code reasoning assessment, we propose ProBench to benchmark LLMs in competitive programming, drawing inspiration from the International Collegiate Programming Contest. ProBench collects a comprehensive set of competitive programming problems from the Codeforces, Luogu, and Nowcoder platforms during the period from July to December 2024, obtaining real test results through online submissions to ensure the fairness and accuracy of the evaluation. We establish a unified problem attribute system, including difficulty grading and algorithm tagging. With carefully collected and annotated data in ProBench, we systematically assess nine of the latest LLMs in competitive programming across multiple dimensions, including thought chain analysis, error type diagnosis, and reasoning depth evaluation. Experimental results show that QwQ-32B-Preview achieves the best score of 20.93, followed by DeepSeek-V3 with a score of 16.38, suggesting that models trained with specialized reasoning tasks significantly outperform general-purpose models (even those larger than the reasoning-oriented models) in programming. Further analysis also reveals key areas for programming capability enhancement, e.g., algorithm adaptability and reasoning sufficiency, providing important insights for the future development of reasoning models.

Detecting LLM Fact-conflicting Hallucinations Enhanced by Temporal-logic-based Reasoning

Feb 19, 2025

Abstract:Large language models (LLMs) face the challenge of hallucinations -- outputs that seem coherent but are actually incorrect. A particularly damaging type is fact-conflicting hallucination (FCH), where generated content contradicts established facts. Addressing FCH presents three main challenges: 1) Automatically constructing and maintaining large-scale benchmark datasets is difficult and resource-intensive; 2) Generating complex and efficient test cases that the LLM has not been trained on -- especially those involving intricate temporal features -- is challenging, yet crucial for eliciting hallucinations; and 3) Validating the reasoning behind LLM outputs is inherently difficult, particularly with complex logical relationships, as it requires transparency in the model's decision-making process. This paper presents Drowzee, an innovative end-to-end metamorphic testing framework that utilizes temporal logic to identify fact-conflicting hallucinations (FCH) in large language models (LLMs). Drowzee builds a comprehensive factual knowledge base by crawling sources like Wikipedia and uses automated temporal-logic reasoning to convert this knowledge into a large, extensible set of test cases with ground truth answers. LLMs are tested using these cases through template-based prompts, which require them to generate both answers and reasoning steps. To validate the reasoning, we propose two semantic-aware oracles that compare the semantic structure of LLM outputs to the ground truths. Across nine LLMs in nine different knowledge domains, experimental results show that Drowzee effectively identifies rates of non-temporal-related hallucinations ranging from 24.7% to 59.8%, and rates of temporal-related hallucinations ranging from 16.7% to 39.2%.

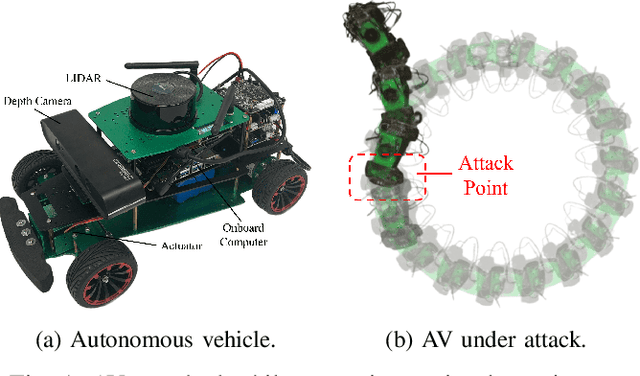

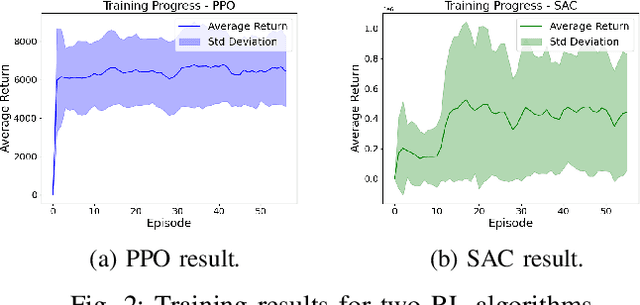

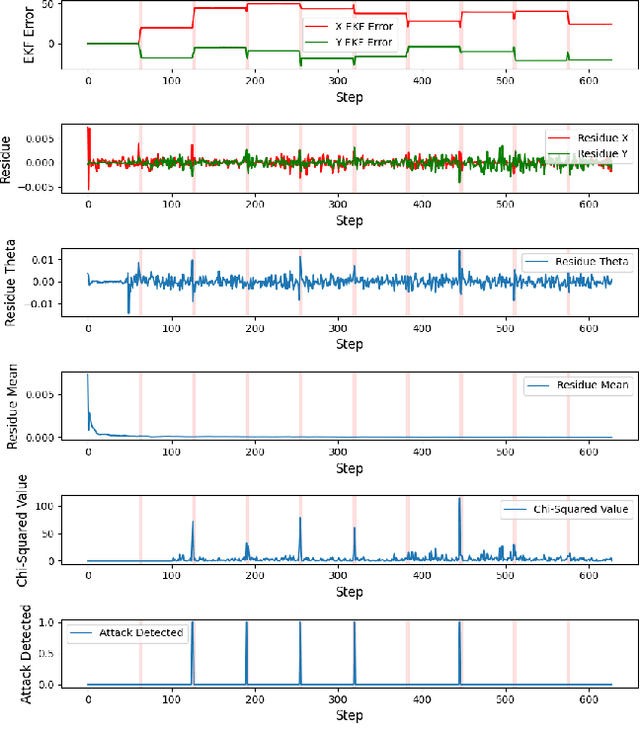

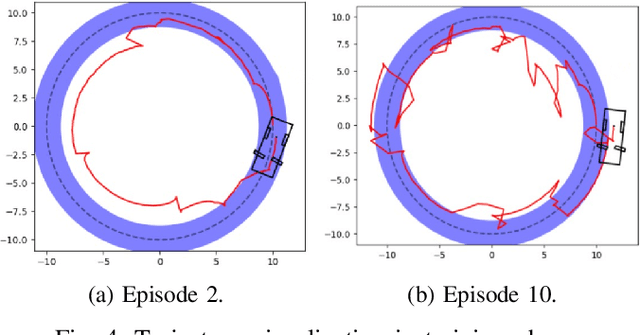

Optimal Actuator Attacks on Autonomous Vehicles Using Reinforcement Learning

Feb 11, 2025

Abstract:With the increasing prevalence of autonomous vehicles (AVs), their vulnerability to various types of attacks has grown, presenting significant security challenges. In this paper, we propose a reinforcement learning (RL)-based approach for designing optimal stealthy integrity attacks on AV actuators. We also analyze the limitations of state-of-the-art RL-based secure controllers developed to counter such attacks. Through extensive simulation experiments, we demonstrate the effectiveness and efficiency of our proposed method.

Lie-algebra Adaptive Tracking Control for Rigid Body Dynamics

Feb 08, 2025

Abstract:Adaptive tracking control for rigid body dynamics is of critical importance in control and robotics, particularly for addressing uncertainties or variations in system model parameters. However, most existing adaptive control methods are designed for systems with states in vector spaces, often neglecting the manifold constraints inherent to robotic systems. In this work, we propose a novel Lie-algebra-based adaptive control method that leverages the intrinsic relationship between the special Euclidean group and its associated Lie algebra. By transforming the state space from the group manifold to a vector space, we derive a linear error dynamics model that decouples model parameters from the system state. This formulation enables the development of an adaptive optimal control method that is both geometrically consistent and computationally efficient. Extensive simulations demonstrate the effectiveness and efficiency of the proposed method. We have made our source code publicly available to the community to support further research and collaboration.

Learning-based Detection of GPS Spoofing Attack for Quadrotors

Jan 10, 2025

Abstract:Safety-critical cyber-physical systems (CPS), such as quadrotor UAVs, are particularly prone to cyber attacks, which can result in significant consequences if not detected promptly and accurately. During outdoor operations, the nonlinear dynamics of UAV systems, combined with non-Gaussian noise, pose challenges to the effectiveness of conventional statistical and machine learning methods. To overcome these limitations, we present QUADFormer, an advanced attack detection framework for quadrotor UAVs leveraging a transformer-based architecture. This framework features a residue generator that produces sequences sensitive to anomalies, which are then analyzed by the transformer to capture statistical patterns for detection and classification. Furthermore, an alert mechanism ensures UAVs can operate safely even when under attack. Extensive simulations and experimental evaluations highlight that QUADFormer outperforms existing state-of-the-art techniques in detection accuracy.

Large Language Model Safety: A Holistic Survey

Dec 23, 2024

Abstract:The rapid development and deployment of large language models (LLMs) have introduced a new frontier in artificial intelligence, marked by unprecedented capabilities in natural language understanding and generation. However, the increasing integration of these models into critical applications raises substantial safety concerns, necessitating a thorough examination of their potential risks and associated mitigation strategies. This survey provides a comprehensive overview of the current landscape of LLM safety, covering four major categories: value misalignment, robustness to adversarial attacks, misuse, and autonomous AI risks. In addition to the comprehensive review of the mitigation methodologies and evaluation resources on these four aspects, we further explore four topics related to LLM safety: the safety implications of LLM agents, the role of interpretability in enhancing LLM safety, the technology roadmaps proposed and abided by a list of AI companies and institutes for LLM safety, and AI governance aimed at LLM safety with discussions on international cooperation, policy proposals, and prospective regulatory directions. Our findings underscore the necessity for a proactive, multifaceted approach to LLM safety, emphasizing the integration of technical solutions, ethical considerations, and robust governance frameworks. This survey is intended to serve as a foundational resource for academic researchers, industry practitioners, and policymakers, offering insights into the challenges and opportunities associated with the safe integration of LLMs into society. Ultimately, it seeks to contribute to the safe and beneficial development of LLMs, aligning with the overarching goal of harnessing AI for societal advancement and well-being. A curated list of related papers is publicly available at https://github.com/tjunlp-lab/Awesome-LLM-Safety-Papers.

Model-Editing-Based Jailbreak against Safety-aligned Large Language Models

Dec 11, 2024

Abstract:Large Language Models (LLMs) have transformed numerous fields by enabling advanced natural language interactions but remain susceptible to critical vulnerabilities, particularly jailbreak attacks. Current jailbreak techniques, while effective, often depend on input modifications, making them detectable and limiting their stealth and scalability. This paper presents Targeted Model Editing (TME), a novel white-box approach that bypasses safety filters by minimally altering internal model structures while preserving the model's intended functionalities. TME identifies and removes safety-critical transformations (SCTs) embedded in model matrices, enabling malicious queries to bypass restrictions without input modifications. By analyzing distinct activation patterns between safe and unsafe queries, TME isolates and approximates SCTs through an optimization process. Implemented in the D-LLM framework, our method achieves an average Attack Success Rate (ASR) of 84.86% on four mainstream open-source LLMs, maintaining high performance. Unlike existing methods, D-LLM eliminates the need for specific triggers or harmful response collections, offering a stealthier and more effective jailbreak strategy. This work reveals a covert and robust threat vector in LLM security and emphasizes the need for stronger safeguards in model safety alignment.

Material Property Prediction with Element Attribute Knowledge Graphs and Multimodal Representation Learning

Nov 13, 2024

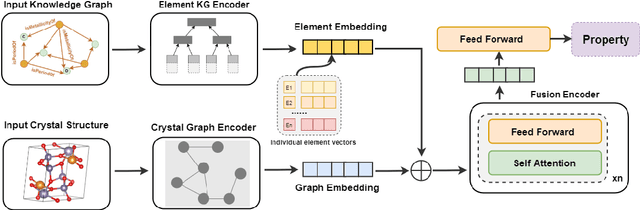

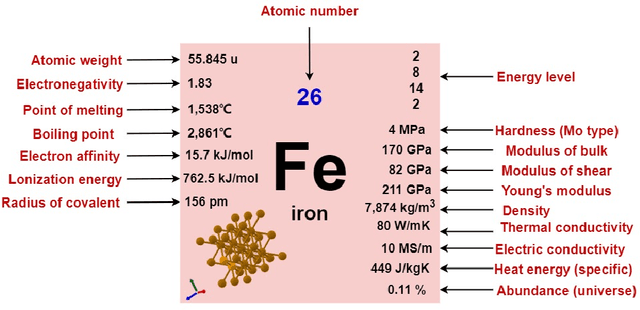

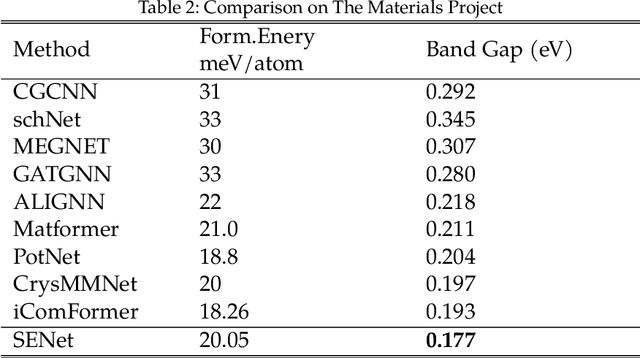

Abstract:Machine learning has become a crucial tool for predicting the properties of crystalline materials. However, existing methods primarily represent material information by constructing multi-edge graphs of crystal structures, often overlooking the chemical and physical properties of elements (such as atomic radius, electronegativity, melting point, and ionization energy), which have a significant impact on material performance. To address this limitation, we first constructed an element property knowledge graph and utilized an embedding model to encode the element attributes within the knowledge graph. Furthermore, we propose a multimodal fusion framework, ESNet, which integrates element property features with crystal structure features to generate joint multimodal representations. This provides a more comprehensive perspective for predicting the performance of crystalline materials, enabling the model to consider both microstructural composition and chemical characteristics of the materials. We conducted experiments on the Materials Project benchmark dataset, which showed leading performance in the bandgap prediction task and achieved results on a par with existing benchmarks in the formation energy prediction task.
