Dawei Feng

Decoupling Constraint from Two Direction in Evolutionary Constrained Multi-objective Optimization

Dec 30, 2025

Abstract:Real-world Constrained Multi-objective Optimization Problems (CMOPs) often contain multiple constraints, and understanding and utilizing the coupling between these constraints is crucial for solving CMOPs. However, existing Constrained Multi-objective Evolutionary Algorithms (CMOEAs) typically ignore these couplings and treat all constraints as a single aggregate, which lacks interpretability regarding the specific geometric roles of constraints. To address this limitation, we first analyze how different constraints interact and show that the final Constrained Pareto Front (CPF) depends not only on the Pareto fronts of individual constraints but also on the boundaries of infeasible regions. This insight implies that CMOPs with different coupling types must be solved from different search directions. Accordingly, we propose a novel algorithm named Decoupling Constraint from Two Directions (DCF2D). This method periodically detects constraint couplings and spawns an auxiliary population for each relevant constraint with an appropriate search direction. Extensive experiments on seven challenging CMOP benchmark suites and on a collection of real-world CMOPs demonstrate that DCF2D outperforms five state-of-the-art CMOEAs, including existing decoupling-based methods.
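To illustrate what treating all constraints as a single aggregate can hide, here is a minimal sketch using the common summed constraint-violation measure. It is not the DCF2D algorithm; the two constraints `g1` and `g2` and the helper names are hypothetical, chosen only for the example, and constraints are assumed to be written in the form g(x) <= 0.

```python
# Illustrative sketch only: the constraints and helper names are hypothetical,
# not taken from the paper. Constraints are assumed to have the form g(x) <= 0.

def g1(x):
    # Hypothetical constraint: x0 + x1 <= 1
    return x[0] + x[1] - 1.0

def g2(x):
    # Hypothetical constraint: x0 >= 0.25
    return 0.25 - x[0]

def per_constraint_violations(x, constraints):
    """Violation of each constraint separately (0.0 when satisfied)."""
    return [max(0.0, g(x)) for g in constraints]

def aggregate_violation(x, constraints):
    """Single aggregate value: the sum of all per-constraint violations."""
    return sum(per_constraint_violations(x, constraints))

constraints = [g1, g2]
x_a = (0.1, 0.5)    # violates only g2
x_b = (0.5, 0.65)   # violates only g1

for x in (x_a, x_b):
    print(x, per_constraint_violations(x, constraints),
          aggregate_violation(x, constraints))
```

Both candidate solutions receive the same aggregate violation even though they break different constraints, which is the kind of information a per-constraint view keeps and an aggregate discards.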

An Equivariant Graph Network for Interpretable Nanoporous Materials Design

Sep 19, 2025

Abstract:Nanoporous materials hold promise for diverse sustainable applications, yet their vast chemical space poses challenges for efficient design. Machine learning offers a compelling pathway to accelerate the exploration, but existing models lack either interpretability or fidelity for elucidating the correlation between crystal geometry and property. Here, we report a three-dimensional periodic space sampling method that decomposes large nanoporous structures into local geometrical sites for combined property prediction and site-wise contribution quantification. Trained with a constructed database and retrieved datasets, our model achieves state-of-the-art accuracy and data efficiency for property prediction on gas storage, separation, and electrical conduction. Meanwhile, this approach enables the interpretation of the prediction and allows for accurate identification of significant local sites for targeted properties. Through identifying transferable high-performance sites across diverse nanoporous frameworks, our model paves the way for interpretable, symmetry-aware nanoporous materials design, which is extensible to other materials, like molecular crystals and beyond.

StreamLink: Large-Language-Model Driven Distributed Data Engineering System

May 27, 2025

Abstract:Large Language Models (LLMs) have shown remarkable proficiency in natural language understanding (NLU), opening doors for innovative applications. We introduce StreamLink - an LLM-driven distributed data system designed to improve the efficiency and accessibility of data engineering tasks. We build StreamLink on top of distributed frameworks such as Apache Spark and Hadoop to handle large data at scale. One of the important design philosophies of StreamLink is to respect user data privacy by utilizing local fine-tuned LLMs instead of a public AI service like ChatGPT. With help from domain-adapted LLMs, we can improve our system's understanding of natural language queries from users in various scenarios and simplify the procedure of generating database queries, such as Structured Query Language (SQL) queries, for information processing. We also incorporate LLM-based syntax and security checkers to guarantee the reliability and safety of each generated query. StreamLink illustrates the potential of merging generative LLMs with distributed data processing for comprehensive and user-centric data engineering. With this architecture, users can interact with complex database systems at different scales in a user-friendly and security-ensured manner: SQL generation improves execution accuracy by over 10\% compared to baseline methods, and users can find the item they care most about among hundreds of millions of items within a few seconds using natural language.

Evolutionary training-free guidance in diffusion model for 3D multi-objective molecular generation

May 16, 2025

Abstract:Discovering novel 3D molecular structures that simultaneously satisfy multiple property targets remains a central challenge in materials and drug design. Although recent diffusion-based models can generate 3D conformations, they require expensive retraining for each new property or property combination and lack flexibility in enforcing structural constraints. We introduce EGD (Evolutionary Guidance in Diffusion), a training-free framework that embeds evolutionary operators directly into the diffusion sampling process. By performing crossover on noise-perturbed samples and then denoising them with a pretrained unconditional diffusion model, EGD seamlessly blends structural fragments and steers generation toward user-specified objectives without any additional model updates. On both single- and multi-target 3D conditional generation tasks, and on multi-objective optimization of quantum properties, EGD outperforms state-of-the-art conditional diffusion methods in accuracy and runs up to five times faster per generation. In the single-objective optimization of protein ligands, EGD enables customized ligand generation. Moreover, EGD can embed arbitrary 3D fragments into the generated molecules while optimizing multiple conflicting properties in one unified process. This combination of efficiency, flexibility, and controllable structure makes EGD a powerful tool for rapid, guided exploration of chemical space.

Pay More Attention to the Robustness of Prompt for Instruction Data Mining

Mar 31, 2025

Abstract:Instruction tuning has emerged as a paramount method for tailoring the behaviors of LLMs. Recent work has unveiled the potential for LLMs to achieve high performance through fine-tuning with a limited quantity of high-quality instruction data. Building upon this approach, we further explore the impact of prompt robustness on the selection of high-quality instruction data. This paper proposes a pioneering framework for mining high-quality online instruction data for instruction tuning, focusing on the impact of prompt robustness on the data mining process. Our notable innovation is to generate adversarial instruction data by attacking the prompts of online instruction data. Then, we introduce an Adversarial Instruction-Following Difficulty metric to measure how much help the adversarial instruction data can provide to the generation of the corresponding response. In addition, we propose a novel Adversarial Instruction Output Embedding Consistency approach to select high-quality online instruction data. We conduct extensive experiments on two benchmark datasets to assess the performance. The experimental results underscore the effectiveness of our two proposed methods and the critical practical significance of considering prompt robustness.

AudioCIL: A Python Toolbox for Audio Class-Incremental Learning with Multiple Scenes

Dec 16, 2024

Abstract:Deep learning, with its robust automatic feature extraction capabilities, has demonstrated significant success in audio signal processing. Typically, these methods rely on static, pre-collected large-scale datasets for training, performing well on a fixed number of classes. However, the real world is characterized by constant change, with new audio classes emerging from streaming or temporary availability due to privacy. This dynamic nature of audio environments necessitates models that can incrementally learn new knowledge for new classes without discarding existing information. Introducing incremental learning to the field of audio signal processing, i.e., Audio Class-Incremental Learning (AuCIL), is a meaningful endeavor. We propose such a toolbox named AudioCIL to align audio signal processing algorithms with real-world scenarios and strengthen research in audio class-incremental learning.

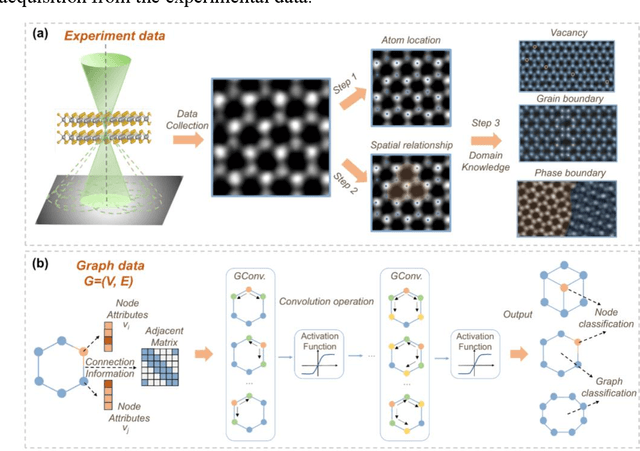

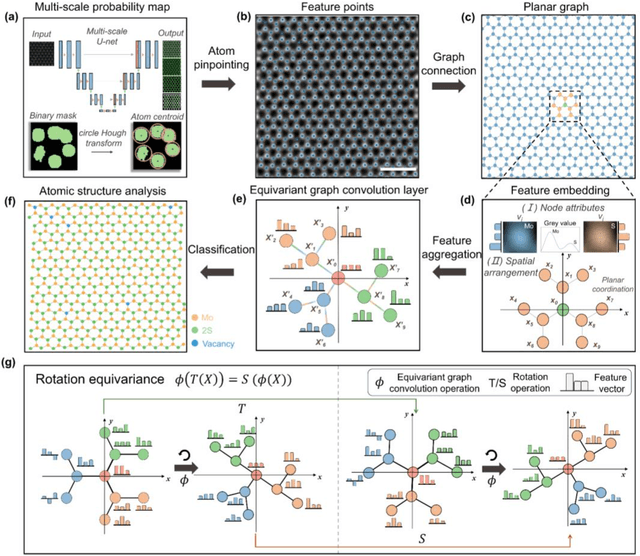

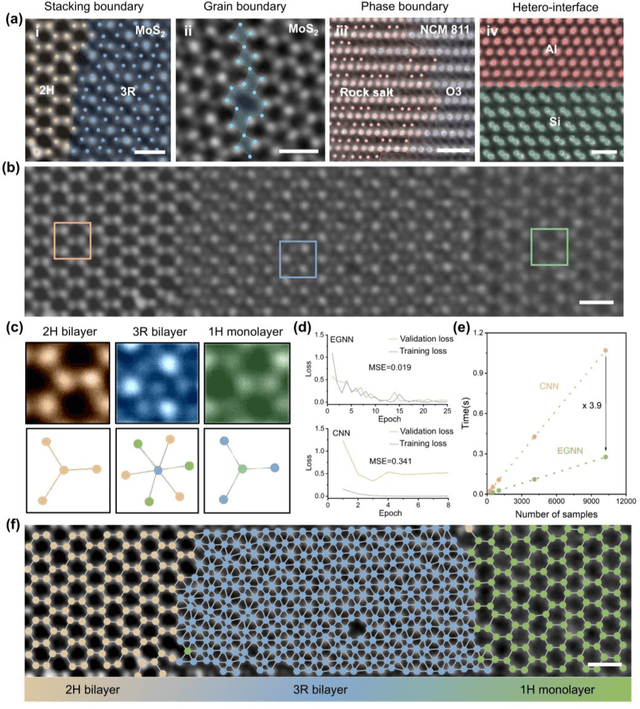

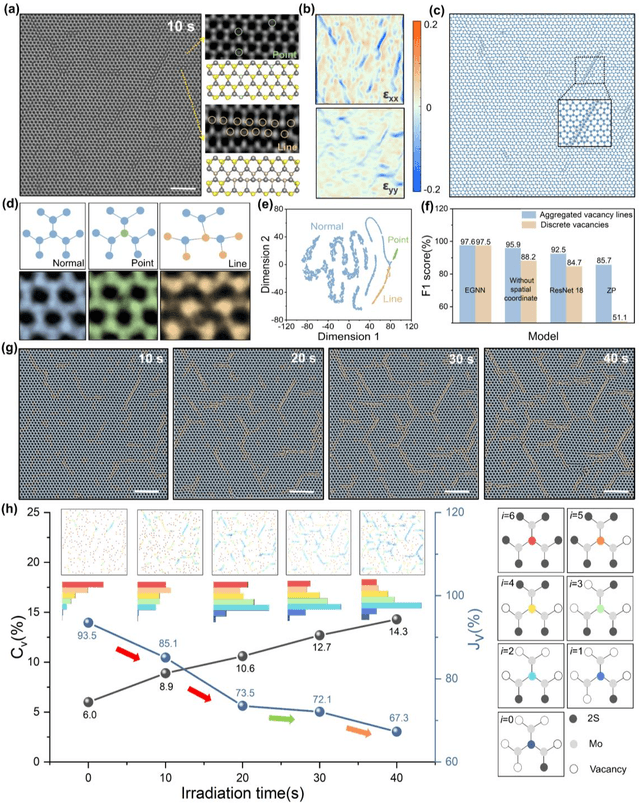

Exploring structure diversity in atomic resolution microscopy with graph neural networks

Oct 23, 2024

Abstract:The emergence of deep learning (DL) has provided great opportunities for the high-throughput analysis of atomic-resolution micrographs. However, DL models trained on fixed-size image patches generally lack efficiency and flexibility when processing micrographs containing diversified atomic configurations. Herein, inspired by the similarity between atomic structures and graphs, we describe a few-shot learning framework based on an equivariant graph neural network (EGNN) to analyze a library of atomic structures (e.g., vacancies, phases, grain boundaries, doping, etc.). Compared to image-driven DL models, it shows significantly improved robustness and a three-orders-of-magnitude reduction in computing parameters, which is especially evident for aggregated vacancy lines with flexible lattice distortion. Besides, the intuitiveness of graphs enables quantitative and straightforward extraction of the atomic-scale structural features in batches, thus statistically unveiling the self-assembly dynamics of vacancy lines under electron beam irradiation. A versatile model toolkit is established by integrating EGNN sub-models for single structure recognition to process images involving varied configurations in the form of a task chain, leading to the discovery of novel doping configurations with superior electrocatalytic properties for hydrogen evolution reactions. This work provides a powerful tool to explore structure diversity in a fast, accurate, and intelligent manner.

AutoFeedback: An LLM-based Framework for Efficient and Accurate API Request Generation

Oct 09, 2024

Abstract:Large Language Models (LLMs) leverage external tools primarily through generating the API request to enhance task completion efficiency. The accuracy of API request generation significantly determines the capability of LLMs to accomplish tasks. Due to the inherent hallucinations within the LLM, it is difficult to efficiently and accurately generate the correct API request. Current research uses prompt-based feedback to facilitate the LLM-based API request generation. However, existing methods lack factual information and are insufficiently detailed. To address these issues, we propose AutoFeedback, an LLM-based framework for efficient and accurate API request generation, with a Static Scanning Component (SSC) and a Dynamic Analysis Component (DAC). SSC incorporates errors detected in the API requests as pseudo-facts into the feedback, enriching the factual information. DAC retrieves information from API documentation, enhancing the level of detail in feedback. Based on these two components, AutoFeedback implements two feedback loops during the process of generating API requests by the LLM. Extensive experiments demonstrate that it significantly improves the accuracy of API request generation and reduces the interaction cost. AutoFeedback achieves an accuracy of 100.00\% on a real-world API dataset and reduces the cost of interaction with GPT-3.5 Turbo by 23.44\%, and GPT-4 Turbo by 11.85\%.
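The idea of turning statically detected request errors into feedback can be sketched as follows. This is only an illustration under stated assumptions, not AutoFeedback's implementation: the spec layout, the request layout, and the function names `scan_request` and `build_feedback` are all hypothetical.

```python
# Illustrative sketch only: spec format, request format, and function names
# are hypothetical and are not taken from the AutoFeedback paper.

def scan_request(request, spec):
    """Statically check a generated API request against a parameter spec
    and return a list of human-readable error strings."""
    errors = []
    params = request.get("params", {})
    # Required parameters that the request omitted.
    for name, info in spec["params"].items():
        if info.get("required") and name not in params:
            errors.append("missing required parameter '%s'" % name)
    # Parameters that are unknown or have the wrong type.
    for name, value in params.items():
        if name not in spec["params"]:
            errors.append("unknown parameter '%s'" % name)
        elif not isinstance(value, spec["params"][name]["type"]):
            expected = spec["params"][name]["type"].__name__
            errors.append("parameter '%s' should be of type %s" % (name, expected))
    return errors

def build_feedback(errors):
    """Format detected errors as a feedback message for the next prompt."""
    if not errors:
        return "No errors detected."
    return "The previous request had these problems:\n" + "\n".join(
        "- " + e for e in errors)

spec = {"params": {"city": {"type": str, "required": True},
                   "days": {"type": int, "required": False}}}
request = {"params": {"days": "3", "town": "Paris"}}

errors = scan_request(request, spec)
print(build_feedback(errors))
```

In a feedback loop, a message like this would be appended to the prompt before asking the model to regenerate the request; how the loop is actually structured is described in the paper, not here.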

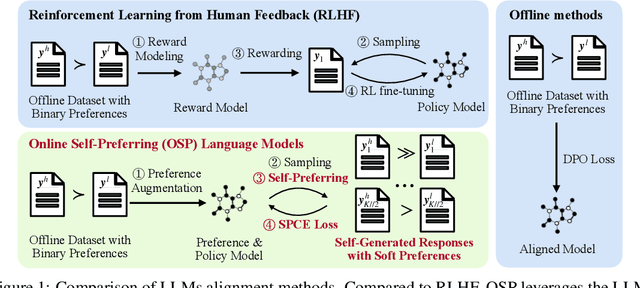

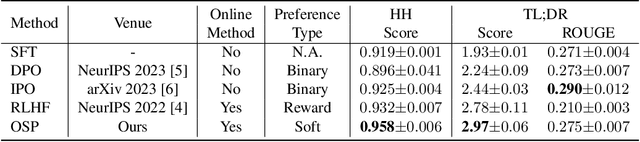

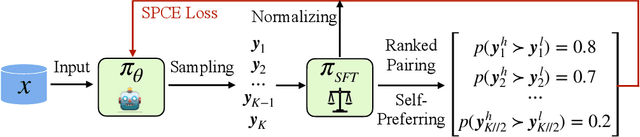

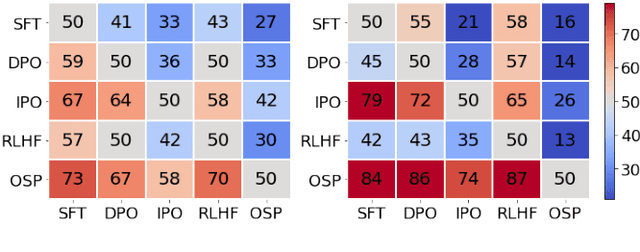

Online Self-Preferring Language Models

May 23, 2024

Abstract:Aligning with human preference datasets has been critical to the success of large language models (LLMs). Reinforcement learning from human feedback (RLHF) employs a costly reward model to provide feedback for on-policy sampling responses. Recently, offline methods that directly fit responses with binary preferences in the dataset have emerged as alternatives. However, existing methods do not explicitly model preference strength information, which is crucial for distinguishing different response pairs. To overcome this limitation, we propose Online Self-Preferring (OSP) language models to learn from self-generated response pairs and self-judged preference strengths. For each prompt and corresponding self-generated responses, we introduce a ranked pairing method to construct multiple response pairs with preference strength information. We then propose the soft-preference cross-entropy loss to leverage such information. Empirically, we demonstrate that leveraging preference strength is crucial for avoiding overfitting and enhancing alignment performance. OSP achieves state-of-the-art alignment performance across various metrics in two widely used human preference datasets. OSP is parameter-efficient and more robust than the dominant online method, RLHF, when limited offline data are available and when generalizing to out-of-domain tasks. Moreover, OSP language models established by LLMs with proficiency in self-preferring can efficiently self-improve without external supervision.
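The abstract does not give the exact form of the ranked pairing method or the soft-preference cross-entropy loss, so the following is only one plausible reading, sketched under explicit assumptions: the preference strength of a pair is taken to be the sigmoid of a score difference, and the loss is the cross-entropy between that soft label and the model's preference probability. The function names and the scoring scheme are hypothetical.

```python
# Illustrative sketch only: the pairing rule, the definition of preference
# strength, and the loss form are assumptions, not the paper's definitions.
import math
from itertools import combinations

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def ranked_pairs(scores):
    """Rank responses by score and form every (better, worse) pair with a
    soft preference strength in [0.5, 1), assumed here to be
    sigmoid(score_better - score_worse)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    pairs = []
    for better, worse in combinations(order, 2):
        strength = 1.0 / (1.0 + math.exp(-(scores[better] - scores[worse])))
        pairs.append((better, worse, strength))
    return pairs

def soft_preference_cross_entropy(margin, strength):
    """Cross-entropy between the soft label `strength` and the model's
    preference probability q = sigmoid(margin).
    Uses log q = -softplus(-margin) and log(1 - q) = -softplus(margin)."""
    return strength * softplus(-margin) + (1.0 - strength) * softplus(margin)

# Hypothetical self-judged scores for three sampled responses.
pairs = ranked_pairs([0.1, 2.0, 1.0])
for better, worse, strength in pairs:
    print(better, worse, round(strength, 3))
```

Unlike a hard binary label, a soft label below 1 makes the loss minimal at a finite margin, so the model is not pushed to separate near-equal responses indefinitely.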

IGOT: Information Gain Optimized Tokenizer on Domain Adaptive Pretraining

May 16, 2024

Abstract:Pretrained Large Language Models (LLMs) such as ChatGPT and Claude have demonstrated strong capabilities in various fields of natural language generation. However, there are still many problems when using LLMs in specialized, domain-specific fields. When using generative AI to process downstream tasks, a common approach is to add new knowledge (e.g., private domain knowledge, cutting-edge information) to a pretrained model through continued training or fine-tuning. However, whether there is a universal paradigm for domain adaptation training is still an open question. In this article, we propose the Information Gain Optimized Tokenizer (IGOT), which analyzes the special token set of downstream tasks, constructs a new subset using a heuristic function $\phi$ of each special token and its information gain to build a new domain-specific tokenizer, and continues pretraining on the downstream task data. We explore the many positive effects of this method's customized tokenizer on domain-adaptive pretraining and verify that this method can perform better than the ordinary method of just collecting data and fine-tuning. Based on our experiment, the continued pretraining process of IGOT with LLaMA-7B achieved 11.9\% token saving, 12.2\% training time saving, and 5.8\% maximum GPU VRAM usage saving; combined with the T5 model, we can even reach a 31.5\% training time saving, making porting general generative AI to specific domains more effective than before. In domain-specific tasks, supervised $IGOT_\tau$ shows great performance in reducing both the convergence radius and convergence point during continued pretraining.
