Huanxi Liu

AutoFeedback: An LLM-based Framework for Efficient and Accurate API Request Generation

Oct 09, 2024

Abstract: Large Language Models (LLMs) leverage external tools primarily by generating API requests, which improves task completion efficiency. The accuracy of API request generation largely determines the capability of LLMs to accomplish tasks. Because of the hallucinations inherent in LLMs, it is difficult to generate correct API requests efficiently and accurately. Current research uses prompt-based feedback to facilitate LLM-based API request generation. However, existing methods lack factual information and are insufficiently detailed. To address these issues, we propose AutoFeedback, an LLM-based framework for efficient and accurate API request generation, with a Static Scanning Component (SSC) and a Dynamic Analysis Component (DAC). SSC incorporates errors detected in the API requests as pseudo-facts into the feedback, enriching the factual information. DAC retrieves information from API documentation, enhancing the level of detail in the feedback. Based on these two components, AutoFeedback implements two feedback loops during the LLM's generation of API requests. Extensive experiments demonstrate that it significantly improves the accuracy of API request generation and reduces the interaction cost. AutoFeedback achieves an accuracy of 100.00% on a real-world API dataset and reduces the cost of interaction with GPT-3.5 Turbo by 23.44% and with GPT-4 Turbo by 11.85%.
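
The two feedback loops described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: `SPEC`, `DOCS`, `static_scan`, `dynamic_feedback`, and `generate_request` are hypothetical names, the API specification and documentation snippets are invented for illustration, and the sketch assumes the documentation lookup is triggered by an error message returned from the API, which the abstract does not specify.

```python
from typing import Callable, Optional

# Hypothetical API spec: parameter name -> (expected type, required flag).
SPEC = {"city": (str, True), "days": (int, True), "units": (str, False)}
# Hypothetical documentation snippets, keyed by parameter name.
DOCS = {
    "city": "city: name of a city, e.g. 'Paris'",
    "days": "days: integer between 1 and 7",
}


def static_scan(request: dict) -> list[str]:
    """Check a request against SPEC without calling the API."""
    errors = []
    for name, (typ, required) in SPEC.items():
        if name not in request:
            if required:
                errors.append(f"missing required parameter '{name}'")
        elif not isinstance(request[name], typ):
            errors.append(f"parameter '{name}' should be {typ.__name__}")
    errors += [f"unknown parameter '{k}'" for k in request if k not in SPEC]
    return errors


def dynamic_feedback(error_message: str) -> list[str]:
    """Return the documentation snippet of every parameter named in the error."""
    return [doc for name, doc in DOCS.items() if name in error_message]


def generate_request(
    llm: Callable[[str, list[str]], dict],
    call_api: Callable[[dict], tuple[bool, str]],
    task: str,
    max_rounds: int = 5,
) -> Optional[dict]:
    """Ask the LLM for a request until it passes both checks or rounds run out."""
    feedback: list[str] = []
    for _ in range(max_rounds):
        request = llm(task, feedback)
        errors = static_scan(request)
        if errors:
            # First loop: detected errors are fed back to the LLM.
            feedback = errors
            continue
        ok, message = call_api(request)
        if ok:
            return request
        # Second loop: documentation details are fed back to the LLM.
        feedback = dynamic_feedback(message)
    return None
```

Here `llm` and `call_api` are passed in as plain callables so the loop can be exercised with stubs; in a real setting they would wrap a model call and an HTTP request.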

Modeling Event Propagation via Graph Biased Temporal Point Process

Aug 05, 2019

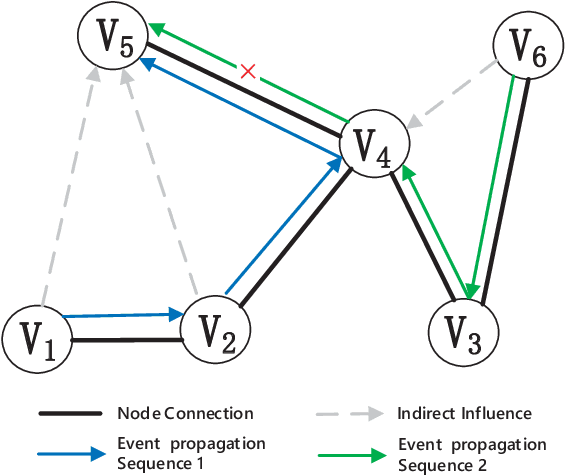

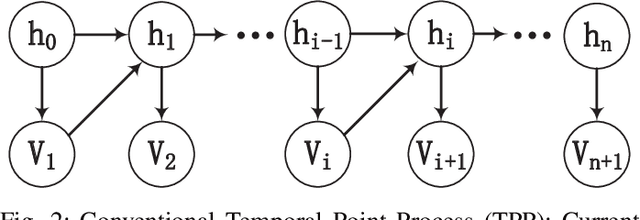

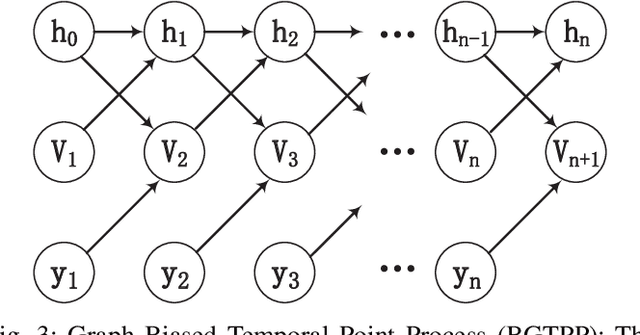

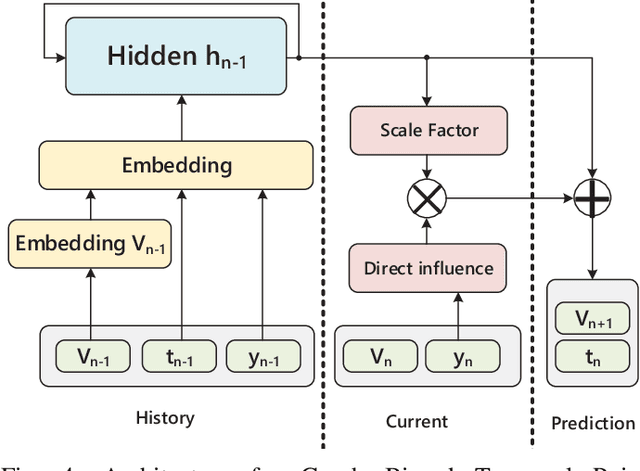

Abstract: Temporal point processes are widely used for sequential data modeling. In this paper, we focus on the problem of modeling sequential event propagation in a graph, such as retweeting by social network users or news transmission between websites. Given a collection of event propagation sequences, conventional point process models consider only the event history, i.e., they embed the event history into a vector, and ignore the latent graph structure. We propose a Graph Biased Temporal Point Process (GBTPP) that leverages structural information from graph representation learning, modeling the direct influence between nodes and the indirect influence from event history separately. Moreover, the learned node embedding vector is integrated into the embedded event history as side information. Experiments on a synthetic dataset and two real-world datasets show the efficacy of our model compared to conventional and state-of-the-art methods.
