Dan Guo

UniGeo: A Unified 3D Indoor Object Detection Framework Integrating Geometry-Aware Learning and Dynamic Channel Gating

Jan 30, 2026

Abstract:The growing adoption of robotics and augmented reality in real-world applications has driven considerable research interest in 3D object detection based on point clouds. While previous methods address unified training across multiple datasets, they fail to model geometric relationships in sparse point cloud scenes and ignore the feature distribution in significant areas, which ultimately restricts their performance. To deal with this issue, a unified 3D indoor detection framework, called UniGeo, is proposed. To model geometric relations in scenes, we first propose a geometry-aware learning module that establishes a learnable mapping from spatial relationships to feature weights, which enables explicit geometric feature enhancement. Then, to further enhance point cloud feature representation, we propose a dynamic channel gating mechanism that leverages learnable channel-wise weighting. This mechanism adaptively optimizes features generated by the sparse 3D U-Net network, significantly enhancing key geometric information. Extensive experiments on six different indoor scene datasets clearly validate the superior performance of our method.
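The abstract does not say how the channel gate is computed. As a rough illustration only, the sketch below assumes a squeeze-and-excitation style gate: each channel is averaged over all points, passed through a sigmoid with per-channel parameters, and used to rescale that channel. The names `channel_gate`, `weights`, and `bias` are hypothetical and are not taken from UniGeo.

```python
import math

def channel_gate(features, weights, bias):
    """Reweight each channel of per-point features with a sigmoid gate.

    features: list of points, each a list of C channel values.
    weights, bias: per-channel learnable parameters (hypothetical, length C).
    """
    num_points = len(features)
    num_channels = len(features[0])
    # Squeeze: average every channel over all points in the scene.
    means = [sum(p[c] for p in features) / num_points
             for c in range(num_channels)]
    # Excite: one sigmoid gate per channel, driven by its pooled statistic.
    gates = [1.0 / (1.0 + math.exp(-(weights[c] * means[c] + bias[c])))
             for c in range(num_channels)]
    # Scale: multiply each channel value by its gate.
    gated = [[p[c] * gates[c] for c in range(num_channels)] for p in features]
    return gated, gates

# Two points with two channels; zero parameters give a neutral gate of 0.5.
features = [[1.0, 2.0], [3.0, 4.0]]
gated, gates = channel_gate(features, weights=[0.0, 0.0], bias=[0.0, 0.0])
```

In a trained model the parameters would be learned, so informative channels could receive gates near 1 and uninformative ones gates near 0.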

A New Paradigm for Trusted Respiratory Monitoring Via Consumer Electronics-grade Radar Signals

Jan 22, 2026

Abstract:Respiratory monitoring is an extremely important task in modern medical services. Owing to significant advantages such as non-contact operation, radar-based respiratory monitoring has attracted widespread attention from both academia and industry. Unfortunately, though it can achieve high monitoring accuracy, consumer electronics-grade radar data inevitably contains User-sensitive Identity Information (USI), which may be maliciously used and further lead to privacy leakage. To tackle these challenges, we propose a novel Trusted Respiratory Monitoring paradigm, Tru-RM, which uses variational mode decomposition (VMD) and adversarial loss-based encryption to perform automated respiratory monitoring through radio signals while effectively anonymizing USI. The key enablers of Tru-RM are Attribute Feature Decoupling (AFD), a Flexible Perturbation Encryptor (FPE), and a robust Perturbation Tolerable Network (PTN), used for attribute decomposition, identity encryption, and perturbed respiratory monitoring, respectively. Specifically, AFD is designed to decompose the raw radar signals into the universal respiratory component, the personal difference component, and other unrelated components. Then, by using large noise to drown out the other unrelated components, and the phase noise algorithm with a learning intensity parameter to eliminate USI in the personal difference component, FPE is designed to achieve complete user identity information encryption without affecting respiratory features. Finally, by designing the transferred generalized domain-independent network, PTN is employed to accurately detect respiration when waveforms change significantly. Extensive experiments based on various detection distances, respiratory patterns, and durations demonstrate the superior performance of Tru-RM in terms of strong anonymity of USI and high detection accuracy on perturbed respiratory waveforms.

Towards Seamless Interaction: Causal Turn-Level Modeling of Interactive 3D Conversational Head Dynamics

Dec 17, 2025

Abstract:Human conversation involves continuous exchanges of speech and nonverbal cues such as head nods, gaze shifts, and facial expressions that convey attention and emotion. Modeling these bidirectional dynamics in 3D is essential for building expressive avatars and interactive robots. However, existing frameworks often treat talking and listening as independent processes or rely on non-causal full-sequence modeling, hindering temporal coherence across turns. We present TIMAR (Turn-level Interleaved Masked AutoRegression), a causal framework for 3D conversational head generation that models dialogue as interleaved audio-visual contexts. It fuses multimodal information within each turn and applies turn-level causal attention to accumulate conversational history, while a lightweight diffusion head predicts continuous 3D head dynamics that capture both coordination and expressive variability. Experiments on the DualTalk benchmark show that TIMAR reduces Fréchet Distance and MSE by 15-30% on the test set, and achieves similar gains on out-of-distribution data. The source code will be released in the GitHub repository https://github.com/CoderChen01/towards-seamleass-interaction.
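One way to picture turn-level causal attention is as a block-structured mask: tokens see everything inside their own turn and everything from earlier turns, but nothing from later turns. The sketch below is an assumption about what such a mask could look like, not TIMAR's released code; `turn_causal_mask` and `turn_ids` are hypothetical names.

```python
def turn_causal_mask(turn_ids):
    """Build a boolean attention mask from per-token turn indices.

    mask[i][j] is True when token i may attend to token j, that is,
    when token j belongs to the same turn as token i or to an earlier one.
    """
    return [[tj <= ti for tj in turn_ids] for ti in turn_ids]

# Five tokens spread over three turns.
turn_ids = [0, 0, 1, 1, 2]
mask = turn_causal_mask(turn_ids)
```

Here both tokens of turn 0 attend to each other, neither sees turn 1 or turn 2, and the single token of turn 2 sees the whole history.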

GeoDiffMM: Geometry-Guided Conditional Diffusion for Motion Magnification

Dec 09, 2025

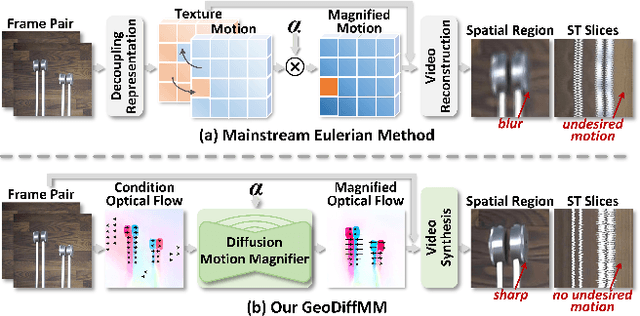

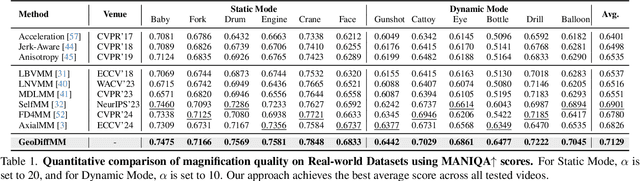

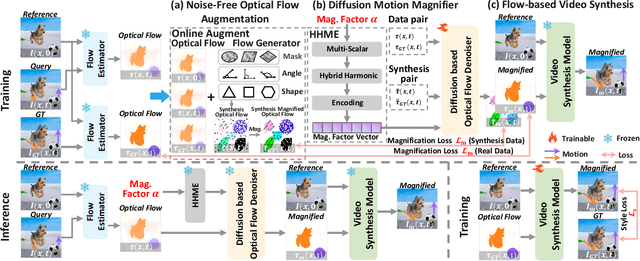

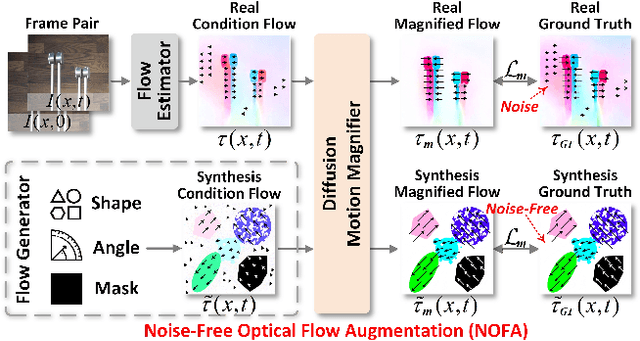

Abstract:Video Motion Magnification (VMM) amplifies subtle macroscopic motions to a perceptible level. Recently, existing mainstream Eulerian approaches address amplification-induced noise via decoupling representation learning such as texture, shape and frequency schemes, but they still struggle to separate photon noise from true micro-motion when motion displacements are very small. We propose GeoDiffMM, a novel diffusion-based Lagrangian VMM framework conditioned on optical flow as a geometric cue, enabling structurally consistent motion magnification. Specifically, we design a Noise-free Optical Flow Augmentation strategy that synthesizes diverse nonrigid motion fields without photon noise as supervision, helping the model learn more accurate geometry-aware optical flow and generalize better. Next, we develop a Diffusion Motion Magnifier that conditions the denoising process on (i) optical flow as a geometry prior and (ii) a learnable magnification factor controlling magnitude, thereby selectively amplifying motion components consistent with scene semantics and structure while suppressing content-irrelevant perturbations. Finally, we perform Flow-based Video Synthesis to map the amplified motion back to the image domain with high fidelity. Extensive experiments on real and synthetic datasets show that GeoDiffMM outperforms state-of-the-art methods and significantly improves motion magnification.
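For orientation, the basic Lagrangian idea is to scale a per-pixel displacement field by a magnification factor and then move pixels along the scaled displacements. The sketch below shows only that textbook step on a tiny flow field; it is not GeoDiffMM's Diffusion Motion Magnifier, and `magnify_flow`, `displaced_positions`, and `alpha` are hypothetical names.

```python
def magnify_flow(flow, alpha):
    """Scale every (dx, dy) displacement by the magnification factor alpha."""
    return [[(alpha * dx, alpha * dy) for (dx, dy) in row] for row in flow]

def displaced_positions(flow):
    """Return where each pixel (x, y) lands after applying its displacement."""
    return [[(x + dx, y + dy) for x, (dx, dy) in enumerate(row)]
            for y, row in enumerate(flow)]

# A 1x2 flow field with sub-pixel motion, magnified four times.
flow = [[(0.5, 0.0), (0.0, -0.25)]]
magnified = magnify_flow(flow, alpha=4.0)
targets = displaced_positions(magnified)
```

Plain scaling amplifies noise in the flow just as much as true motion, which is the weakness the abstract's denoising and noise-free supervision are meant to address.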

A Closer Look at Knowledge Distillation in Spiking Neural Network Training

Nov 14, 2025

Abstract:Spiking Neural Networks (SNNs) have become popular due to their excellent energy efficiency, yet they face challenges in effective model training. Recent works improve this by introducing knowledge distillation (KD) techniques, with the pre-trained artificial neural networks (ANNs) used as teachers and the target SNNs as students. This is commonly accomplished through a straightforward element-wise alignment of intermediate features and prediction logits from ANNs and SNNs, often neglecting the intrinsic differences between their architectures. Specifically, ANN's outputs exhibit a continuous distribution, whereas SNN's outputs are characterized by sparsity and discreteness. To mitigate this issue, we introduce two innovative KD strategies. Firstly, we propose the Saliency-scaled Activation Map Distillation (SAMD), which aligns the spike activation map of the student SNN with the class-aware activation map of the teacher ANN. Rather than performing KD directly on the raw features of ANN and SNN, our SAMD directs the student to learn from saliency activation maps that exhibit greater semantic and distribution consistency. Additionally, we propose a Noise-smoothed Logits Distillation (NLD), which utilizes Gaussian noise to smooth the sparse logits of student SNN, facilitating the alignment with continuous logits from teacher ANN. Extensive experiments on multiple datasets demonstrate the effectiveness of our methods. Code is available at https://github.com/SinoLeu/CKDSNN.git.
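The NLD idea can be illustrated with a toy loss: add Gaussian noise to the student's logits, then compare the softened student distribution with the teacher's. The sketch below assumes a KL(teacher || student) objective with a temperature, which is a common KD choice but is not confirmed by the abstract; `noise_smoothed_kd_loss`, `sigma`, and `temperature` are hypothetical names and defaults.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def noise_smoothed_kd_loss(student_logits, teacher_logits, sigma=0.1,
                           temperature=1.0, rng=None):
    """KL(teacher || student) after adding Gaussian noise to student logits."""
    rng = rng or random.Random(0)  # fixed seed keeps the sketch reproducible
    # Smooth the sparse student logits with zero-mean Gaussian noise.
    noisy = [z + rng.gauss(0.0, sigma) for z in student_logits]
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(noisy, temperature)
    # Sum only over classes the teacher assigns non-zero probability.
    return sum(pt * math.log(pt / ps)
               for pt, ps in zip(p_teacher, p_student) if pt > 0)

loss = noise_smoothed_kd_loss([0.0, 0.0, 5.0], [2.0, 1.0, 0.0], sigma=0.1)
```

With `sigma=0.0` and identical logits the loss is zero, so the noise term is the only thing separating this sketch from plain logit distillation.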

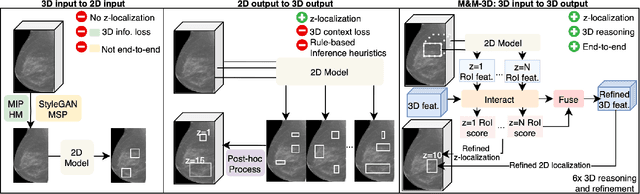

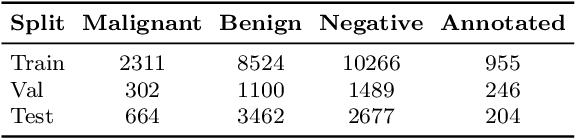

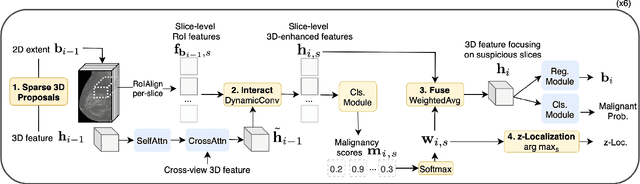

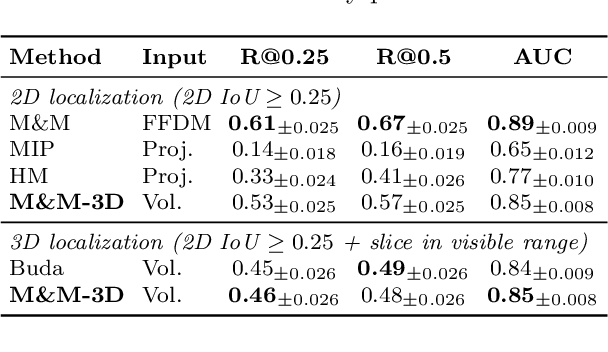

From 2D to 3D Without Extra Baggage: Data-Efficient Cancer Detection in Digital Breast Tomosynthesis

Nov 13, 2025

Abstract:Digital Breast Tomosynthesis (DBT) enhances finding visibility for breast cancer detection by providing volumetric information that reduces the impact of overlapping tissues; however, limited annotated data has constrained the development of deep learning models for DBT. To address data scarcity, existing methods attempt to reuse 2D full-field digital mammography (FFDM) models by either flattening DBT volumes or processing slices individually, thus discarding volumetric information. Alternatively, 3D reasoning approaches introduce complex architectures that require more DBT training data. Tackling these drawbacks, we propose M&M-3D, an architecture that enables learnable 3D reasoning while remaining parameter-free relative to its FFDM counterpart, M&M. M&M-3D constructs malignancy-guided 3D features, and 3D reasoning is learned through repeatedly mixing these 3D features with slice-level information. This is achieved by modifying operations in M&M without adding parameters, thus enabling direct weight transfer from FFDM. Extensive experiments show that M&M-3D surpasses 2D projection and 3D slice-based methods by 11-54% for localization and 3-10% for classification. Additionally, M&M-3D outperforms complex 3D reasoning variants by 20-47% for localization and 2-10% for classification in the low-data regime, while matching their performance in the high-data regime. On the popular BCS-DBT benchmark, M&M-3D outperforms the previous top baseline by 4% for classification and 10% for localization.

Motion is the Choreographer: Learning Latent Pose Dynamics for Seamless Sign Language Generation

Aug 06, 2025

Abstract:Sign language video generation requires producing natural signing motions with realistic appearances under precise semantic control, yet faces two critical challenges: excessive signer-specific data requirements and poor generalization. We propose a new paradigm for sign language video generation that decouples motion semantics from signer identity through a two-phase synthesis framework. First, we construct a signer-independent multimodal motion lexicon, where each gloss is stored as identity-agnostic pose, gesture, and 3D mesh sequences, requiring only one recording per sign. This compact representation enables our second key innovation: a discrete-to-continuous motion synthesis stage that transforms retrieved gloss sequences into temporally coherent motion trajectories, followed by identity-aware neural rendering to produce photorealistic videos of arbitrary signers. Unlike prior work constrained by signer-specific datasets, our method treats motion as a first-class citizen: the learned latent pose dynamics serve as a portable "choreography layer" that can be visually realized through different human appearances. Extensive experiments demonstrate that disentangling motion from identity is not just viable but advantageous, enabling both high-quality synthesis and unprecedented flexibility in signer personalization.

CLASP: Cross-modal Salient Anchor-based Semantic Propagation for Weakly-supervised Dense Audio-Visual Event Localization

Aug 06, 2025

Abstract:The Dense Audio-Visual Event Localization (DAVEL) task aims to temporally localize events in untrimmed videos that occur simultaneously in both the audio and visual modalities. This paper explores DAVEL under a new and more challenging weakly-supervised setting (W-DAVEL task), where only video-level event labels are provided and the temporal boundaries of each event are unknown. We address W-DAVEL by exploiting cross-modal salient anchors, which are defined as reliable timestamps that are well predicted under weak supervision and exhibit highly consistent event semantics across audio and visual modalities. Specifically, we propose a Mutual Event Agreement Evaluation module, which generates an agreement score by measuring the discrepancy between the predicted audio and visual event classes. Then, the agreement score is utilized in a Cross-modal Salient Anchor Identification module, which identifies the audio and visual anchor features through global-video and local temporal window identification mechanisms. The anchor features after multimodal integration are fed into an Anchor-based Temporal Propagation module to enhance event semantic encoding in the original temporal audio and visual features, facilitating better temporal localization under weak supervision. We establish benchmarks for W-DAVEL on both the UnAV-100 and ActivityNet1.3 datasets. Extensive experiments demonstrate that our method achieves state-of-the-art performance.
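The abstract does not give the formula for the agreement score. As a hedged illustration, the sketch below assumes per-timestamp class probabilities for each modality, defines agreement as one minus the mean absolute difference between them, and keeps timestamps above a threshold as anchors. The function names, the discrepancy measure, and the `threshold` value are all assumptions rather than details from CLASP.

```python
def agreement_scores(audio_probs, visual_probs):
    """Score each timestamp by how closely audio and visual predictions match.

    audio_probs, visual_probs: one list of class probabilities per timestamp.
    Returns values in [0, 1]; 1 means the two modalities agree exactly.
    """
    scores = []
    for a, v in zip(audio_probs, visual_probs):
        # Mean absolute difference across event classes (assumed measure).
        discrepancy = sum(abs(x - y) for x, y in zip(a, v)) / len(a)
        scores.append(1.0 - discrepancy)
    return scores

def select_anchors(scores, threshold=0.8):
    """Return indices of timestamps whose agreement reaches the threshold."""
    return [t for t, s in enumerate(scores) if s >= threshold]

# Three timestamps, two event classes.
audio = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
visual = [[0.8, 0.2], [0.9, 0.1], [0.5, 0.4]]
scores = agreement_scores(audio, visual)
anchors = select_anchors(scores)
```

The middle timestamp is rejected because the two modalities predict opposite classes, while the first and last are kept as candidate anchors.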

Learning Speaker-Invariant Visual Features for Lipreading

Jun 09, 2025

Abstract:Lipreading is a challenging cross-modal task that aims to convert visual lip movements into spoken text. Existing lipreading methods often extract visual features that include speaker-specific lip attributes (e.g., shape, color, texture), which introduce spurious correlations between vision and text. These correlations lead to suboptimal lipreading accuracy and restrict model generalization. To address this challenge, we introduce SIFLip, a speaker-invariant visual feature learning framework that disentangles speaker-specific attributes using two complementary disentanglement modules (Implicit Disentanglement and Explicit Disentanglement) to improve generalization. Specifically, since different speakers exhibit semantic consistency between lip movements and phonetic text when pronouncing the same words, our implicit disentanglement module leverages stable text embeddings as supervisory signals to learn common visual representations across speakers, implicitly decoupling speaker-specific features. Additionally, we design a speaker recognition sub-task within the main lipreading pipeline to filter speaker-specific features, then further explicitly disentangle these personalized visual features from the backbone network via gradient reversal. Experimental results demonstrate that SIFLip significantly improves generalization performance across multiple public datasets, outperforming state-of-the-art methods.
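Gradient reversal, mentioned at the end of the abstract, is usually implemented as a layer that is the identity in the forward pass and flips the sign of the gradient in the backward pass. The sketch below writes both passes by hand on plain lists; the scaling factor `lam` and the function names are illustrative assumptions, and how SIFLip wires the layer into its speaker-recognition branch is not specified here.

```python
def grl_forward(features):
    """Forward pass: features go through unchanged."""
    return list(features)

def grl_backward(grad_output, lam=1.0):
    """Backward pass: negate the incoming gradient and scale it by lam."""
    return [-lam * g for g in grad_output]

features = [0.2, -1.5, 3.0]
passed = grl_forward(features)

# Gradient arriving from a (hypothetical) speaker classifier loss.
speaker_grad = [0.5, -2.0, 0.0]
backbone_grad = grl_backward(speaker_grad, lam=0.5)
```

Because the backbone receives the negated gradient, an update that would help the speaker classifier instead pushes the shared features away from speaker-specific cues.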

EmoSEM: Segment and Explain Emotion Stimuli in Visual Art

Apr 22, 2025

Abstract:This paper focuses on a key challenge in visual art understanding: given an art image, the model pinpoints pixel regions that trigger a specific human emotion, and generates linguistic explanations for the emotional arousal. Despite recent advances in art understanding, pixel-level emotion understanding still faces a dual challenge: first, the subjectivity of emotion makes it difficult for general segmentation models like SAM to adapt to emotion-oriented segmentation tasks; and second, the abstract nature of art expression makes it difficult for captioning models to balance pixel-level semantic understanding and emotion reasoning. To solve the above problems, this paper proposes the Emotion stimuli Segmentation and Explanation Model (EmoSEM) to endow the segmentation model SAM with emotion comprehension capability. First, to enable the model to segment well under the guidance of emotional intent, we introduce an emotional prompt with a learnable mask token as the conditional input for segmentation decoding. Then, we design an emotion projector to establish the association between emotion and visual features. Next, and more importantly, to address emotion-visual stimuli alignment, we develop a lightweight prefix projector, a module that fuses the learned emotional mask with the corresponding emotion into a unified representation compatible with the language model. Finally, we input the joint visual, mask, and emotional tokens into the language model and output the emotional explanations. This ensures that the generated interpretations remain semantically and emotionally coherent with the visual stimuli. The method realizes end-to-end modeling from low-level pixel features to high-level emotion interpretation, providing the first interpretable fine-grained analysis framework for artistic emotion computing. Extensive experiments validate the effectiveness of our model.
