Changtao Miao

Beyond Next-Token Alignment: Distilling Multimodal Large Language Models via Token Interactions

Feb 10, 2026

Abstract:Multimodal Large Language Models (MLLMs) demonstrate impressive cross-modal capabilities, yet their substantial size poses significant deployment challenges. Knowledge distillation (KD) is a promising solution for compressing these models, but existing methods primarily rely on static next-token alignment, neglecting the dynamic token interactions, which embed essential capabilities for multimodal understanding and generation. To this end, we introduce Align-TI, a novel KD framework designed from the perspective of Token Interactions. Our approach is motivated by the insight that MLLMs rely on two primary interactions: vision-instruction token interactions to extract relevant visual information, and intra-response token interactions for coherent generation. Accordingly, Align-TI introduces two components: IVA enables the student model to imitate the teacher's capability to extract instruction-relevant visual information by aligning on salient visual regions. TPA captures the teacher's dynamic generative logic by aligning the sequential token-to-token transition probabilities. Extensive experiments demonstrate Align-TI's superiority. Notably, our approach achieves $2.6\%$ relative improvement over Vanilla KD, and our distilled Align-TI-2B even outperforms LLaVA-1.5-7B (a much larger MLLM) by $7.0\%$, establishing a new state-of-the-art distillation framework for training parameter-efficient MLLMs. Code is available at https://github.com/lchen1019/Align-TI.
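For orientation, the "static next-token alignment" that this abstract contrasts against is commonly implemented as a per-position KL divergence between the teacher's and the student's next-token distributions. The sketch below illustrates only that vanilla KD baseline. It is not Align-TI's IVA or TPA objective, whose details the abstract does not give. The function names, the `temperature` parameter, and the plain-list logit layout are assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over one vocabulary-sized list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_kd_loss(teacher_logits, student_logits, temperature=1.0):
    """Mean KL(teacher || student) over response positions.

    Both arguments are lists of per-position logit lists with the same shape
    (hypothetical layout: [num_positions][vocab_size]).
    """
    assert len(teacher_logits) == len(student_logits)
    total = 0.0
    for t_row, s_row in zip(teacher_logits, student_logits):
        p = softmax(t_row, temperature)  # teacher next-token distribution
        q = softmax(s_row, temperature)  # student next-token distribution
        total += sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return total / len(teacher_logits)
```

Each position is matched independently here, which is the sense in which such a loss is "static": nothing in it compares how the teacher and the student move from one token to the next.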

SAPL: Semantic-Agnostic Prompt Learning in CLIP for Weakly Supervised Image Manipulation Localization

Jan 09, 2026

Abstract:Malicious image manipulation threatens public safety and requires efficient localization methods. Existing approaches depend on costly pixel-level annotations, which make training expensive. Existing weakly supervised methods rely only on image-level binary labels and focus on global classification, often overlooking local edge cues that are critical for precise localization. We observe that feature variations at manipulated boundaries are substantially larger than in interior regions. To address this gap, we propose Semantic-Agnostic Prompt Learning (SAPL) in CLIP, which learns text prompts that intentionally encode non-semantic, boundary-centric cues so that CLIP's multimodal similarity highlights manipulation edges rather than high-level object semantics. SAPL combines two complementary modules, Edge-aware Contextual Prompt Learning (ECPL) and Hierarchical Edge Contrastive Learning (HECL), to exploit edge information in both textual and visual spaces. The proposed ECPL leverages edge-enhanced image features to generate learnable textual prompts via an attention mechanism, embedding semantic-irrelevant information into text features to guide CLIP to focus on manipulation edges. The proposed HECL extracts genuine and manipulated edge patches and uses contrastive learning to boost the discrimination between them. Finally, we predict the manipulated regions from the similarity map after processing. Extensive experiments on multiple public benchmarks demonstrate that SAPL significantly outperforms existing approaches, achieving state-of-the-art localization performance.

LogicLens: Visual-Logical Co-Reasoning for Text-Centric Forgery Analysis

Dec 25, 2025

Abstract:Sophisticated text-centric forgeries, fueled by rapid AIGC advancements, pose a significant threat to societal security and information authenticity. Current methods for text-centric forgery analysis are often limited to coarse-grained visual analysis and lack the capacity for sophisticated reasoning. Moreover, they typically treat detection, grounding, and explanation as discrete sub-tasks, overlooking their intrinsic relationships for holistic performance enhancement. To address these challenges, we introduce LogicLens, a unified framework for Visual-Textual Co-reasoning that reformulates these objectives into a joint task. The deep reasoning of LogicLens is powered by our novel Cross-Cues-aware Chain of Thought (CCT) mechanism, which iteratively cross-validates visual cues against textual logic. To ensure robust alignment across all tasks, we further propose a weighted multi-task reward function for GRPO-based optimization. Complementing this framework, we first designed the PR$^2$ (Perceiver, Reasoner, Reviewer) pipeline, a hierarchical and iterative multi-agent system that generates high-quality, cognitively-aligned annotations. Then, we constructed RealText, a diverse dataset comprising 5,397 images with fine-grained annotations, including textual explanations, pixel-level segmentation, and authenticity labels for model training. Extensive experiments demonstrate the superiority of LogicLens across multiple benchmarks. In a zero-shot evaluation on T-IC13, it surpasses the specialized framework by 41.4% and GPT-4o by 23.4% in macro-average F1 score. Moreover, on the challenging dense-text T-SROIE dataset, it establishes a significant lead over other MLLM-based methods in mF1, CSS, and the macro-average F1. Our dataset, model, and code will be made publicly available.

ViRC: Enhancing Visual Interleaved Mathematical CoT with Reason Chunking

Dec 17, 2025

Abstract:CoT has significantly enhanced the reasoning ability of LLMs, but it faces challenges when extended to multimodal domains, particularly in mathematical tasks. Existing MLLMs typically perform textual reasoning solely from a single static mathematical image, overlooking dynamic visual acquisition during reasoning. In contrast, humans repeatedly examine the visual image and employ step-by-step reasoning to prove intermediate propositions. This strategy of decomposing the problem-solving process into key logical nodes adheres to Miller's Law in cognitive science. Inspired by this insight, we propose the ViRC framework for multimodal mathematical tasks, introducing a Reason Chunking mechanism that structures multimodal mathematical CoT into consecutive Critical Reasoning Units (CRUs) to simulate human expert problem-solving patterns. CRUs ensure intra-unit textual coherence for intermediate proposition verification while integrating visual information across units to generate subsequent propositions and support structured reasoning. To this end, we present the CRUX dataset, built using three visual tools and four reasoning patterns to provide explicitly annotated CRUs across multiple reasoning paths for each mathematical problem. Leveraging the CRUX dataset, we propose a progressive training strategy inspired by human cognitive learning, which includes Instructional SFT, Practice SFT, and Strategic RL, aimed at further strengthening the Reason Chunking ability of the model. The resulting ViRC-7B model achieves an 18.8% average improvement over baselines across multiple mathematical benchmarks. Code is available at https://github.com/Leon-LihongWang/ViRC.

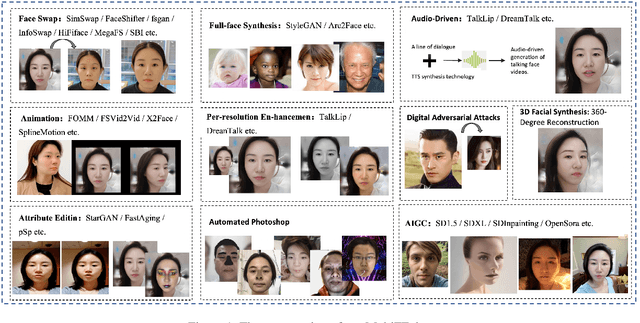

Benchmarking Unified Face Attack Detection via Hierarchical Prompt Tuning

May 19, 2025

Abstract:Presentation Attack Detection and Face Forgery Detection are designed to protect face data from physical media-based Presentation Attacks and digital editing-based DeepFakes respectively. But separate training of these two models makes them vulnerable to unknown attacks and burdens deployment environments. The lack of a Unified Face Attack Detection model to handle both types of attacks is mainly due to two factors. First, there's a lack of adequate benchmarks for models to explore. Existing UAD datasets have limited attack types and samples, restricting the model's ability to address advanced threats. To address this, we propose UniAttackDataPlus (UniAttackData+), the most extensive and sophisticated collection of forgery techniques to date. It includes 2,875 identities and their 54 kinds of falsified samples, totaling 697,347 videos. Second, there's a lack of a reliable classification criterion. Current methods try to find an arbitrary criterion within the same semantic space, which fails when encountering diverse attacks. So, we present a novel Visual-Language Model-based Hierarchical Prompt Tuning Framework (HiPTune) that adaptively explores multiple classification criteria from different semantic spaces. We build a Visual Prompt Tree to explore various classification rules hierarchically. Then, by adaptively pruning the prompts, the model can select the most suitable prompts to guide the encoder to extract discriminative features at different levels in a coarse-to-fine way. Finally, to help the model understand the classification criteria in visual space, we propose a Dynamically Prompt Integration module to project the visual prompts to the text encoder for more accurate semantics. Experiments on 12 datasets have shown the potential to inspire further innovations in the UAD field.

SUEDE: Shared Unified Experts for Physical-Digital Face Attack Detection Enhancement

Apr 07, 2025

Abstract:Face recognition systems are vulnerable to physical attacks (e.g., printed photos) and digital threats (e.g., DeepFake), which are currently being studied as independent visual tasks, such as Face Anti-Spoofing and Forgery Detection. The inherent differences among various attack types present significant challenges in identifying a common feature space, making it difficult to develop a unified framework for detecting data from both attack modalities simultaneously. Inspired by the efficacy of Mixture-of-Experts (MoE) in learning across diverse domains, we explore utilizing multiple experts to learn the distinct features of various attack types. However, the feature distributions of physical and digital attacks overlap and differ. This suggests that relying solely on distinct experts to learn the unique features of each attack type may overlook shared knowledge between them. To address these issues, we propose SUEDE, the Shared Unified Experts for Physical-Digital Face Attack Detection Enhancement. SUEDE combines a shared expert (always activated) to capture common features for both attack types and multiple routed experts (selectively activated) for specific attack types. Further, we integrate CLIP as the base network to ensure the shared expert benefits from prior visual knowledge and align visual-text representations in a unified space. Extensive results demonstrate SUEDE achieves superior performance compared to state-of-the-art unified detection methods.
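The combination of an always-active shared expert with selectively activated routed experts can be illustrated with a minimal sketch. This is a generic shared-plus-routed Mixture-of-Experts forward pass, not SUEDE's actual implementation: the function names, the `top_k` parameter, and the choice to pass router scores in directly (rather than computing them with a learned gate) are assumptions made for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of router scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def shared_routed_moe(x, shared_expert, routed_experts, gate_scores, top_k=1):
    """Return (output, selected_indices) for one input vector x.

    The shared expert always contributes. Only the top_k routed experts
    (ranked by gate probability) contribute, weighted by their
    renormalized gate probabilities.
    """
    probs = softmax(gate_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    selected = ranked[:top_k]
    norm = sum(probs[i] for i in selected)

    out = list(shared_expert(x))  # shared path: always activated
    for i in selected:            # routed path: selectively activated
        weight = probs[i] / norm
        y = routed_experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, selected


# Toy usage with hand-written "experts" on a 2-dimensional input.
identity = lambda v: list(v)
double = lambda v: [2.0 * a for a in v]
negate = lambda v: [-a for a in v]
output, chosen = shared_routed_moe([1.0, 2.0], identity, [double, negate], [5.0, 0.0])
```

In this toy call the router scores favor the first routed expert, so the output is the shared expert's result plus that expert's result, and the second routed expert is never evaluated.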

Context-Aware Weakly Supervised Image Manipulation Localization with SAM Refinement

Mar 26, 2025

Abstract:Malicious image manipulation poses societal risks, increasing the importance of effective image manipulation detection methods. Recent approaches in image manipulation detection have largely been fully supervised, requiring labor-intensive pixel-level annotations. Thus, it is essential to explore weakly supervised image manipulation localization methods that only require image-level binary labels for training. However, existing weakly supervised image manipulation methods overlook the importance of edge information for accurate localization, leading to suboptimal localization performance. To address this, we propose a Context-Aware Boundary Localization (CABL) module to aggregate boundary features and learn context-inconsistency for localizing manipulated areas. Furthermore, by leveraging Class Activation Mapping (CAM) and Segment Anything Model (SAM), we introduce the CAM-Guided SAM Refinement (CGSR) module to generate more accurate manipulation localization maps. By integrating the two modules, we present a novel weakly supervised framework based on a dual-branch Transformer-CNN architecture. Our method achieves outstanding localization performance across multiple datasets.

Inclusion 2024 Global Multimedia Deepfake Detection: Towards Multi-dimensional Facial Forgery Detection

Dec 30, 2024

Abstract:In this paper, we present the Global Multimedia Deepfake Detection challenge, held concurrently with Inclusion 2024. Our Multimedia Deepfake Detection challenge aims to detect automatic image and audio-video manipulations including but not limited to editing, synthesis, generation, Photoshop, etc. Our challenge has attracted 1500 teams from all over the world, with about 5000 valid result submissions. We invite the top 20 teams to present their solutions to the challenge, from which the top 3 teams are awarded prizes in the grand finale. In this paper, we present the solutions from the top 3 teams of the two tracks, to boost the research work in the field of image and audio-video forgery detection. The methodologies developed through the challenge will contribute to the development of next-generation deepfake detection systems and we encourage participants to open source their methods.

Mixture-of-Noises Enhanced Forgery-Aware Predictor for Multi-Face Manipulation Detection and Localization

Aug 05, 2024

Abstract:With the advancement of face manipulation technology, forged images in multi-face scenarios are gradually becoming a more complex and realistic challenge. Despite this, detection and localization methods for such multi-face manipulations remain underdeveloped. Traditional manipulation localization methods either indirectly derive detection results from localization masks, resulting in limited detection performance, or employ a naive two-branch structure to simultaneously obtain detection and localization results, which cannot effectively benefit the localization capability due to limited interaction between the two tasks. This paper proposes a new framework, namely MoNFAP, specifically tailored for multi-face manipulation detection and localization. MoNFAP primarily introduces two novel modules: the Forgery-aware Unified Predictor (FUP) Module and the Mixture-of-Noises Module (MNM). The FUP integrates detection and localization tasks using a token learning strategy and multiple forgery-aware transformers, which facilitates the use of classification information to enhance localization capability. Besides, motivated by the crucial role of noise information in forgery detection, the MNM leverages multiple noise extractors based on the concept of the mixture of experts to enhance the general RGB features, further boosting the performance of our framework. Finally, we establish a comprehensive benchmark for multi-face detection and localization and the proposed MoNFAP achieves significant performance. The codes will be made available.

Vulnerabilities in AI-generated Image Detection: The Challenge of Adversarial Attacks

Jul 30, 2024

Abstract:Recent advancements in image synthesis, particularly with the advent of GAN and Diffusion models, have amplified public concerns regarding the dissemination of disinformation. To address such concerns, numerous AI-generated Image (AIGI) Detectors have been proposed and achieved promising performance in identifying fake images. However, a systematic understanding of the adversarial robustness of these AIGI detectors is still lacking. In this paper, we examine the vulnerability of state-of-the-art AIGI detectors against adversarial attack under white-box and black-box settings, which has been rarely investigated so far. For the task of AIGI detection, we propose a new attack containing two main parts. First, inspired by the obvious difference between real images and fake images in the frequency domain, we add perturbations under the frequency domain to push the image away from its original frequency distribution. Second, we explore the full posterior distribution of the surrogate model to further narrow this gap between heterogeneous models, e.g. transferring adversarial examples across CNNs and ViTs. This is achieved by introducing a novel post-train Bayesian strategy that turns a single surrogate into a Bayesian one, capable of simulating diverse victim models using one pre-trained surrogate, without the need for re-training. We name our method the frequency-based post-train Bayesian attack, or FPBA. Through FPBA, we show that adversarial attack is truly a real threat to AIGI detectors, because FPBA can deliver successful black-box attacks across models, generators, defense methods, and even evade cross-generator detection, which is a crucial real-world detection scenario.
