Zhijun Li

North China University of Technology

Genie Sim 3.0 : A High-Fidelity Comprehensive Simulation Platform for Humanoid Robot

Jan 05, 2026

Abstract:The development of robust and generalizable robot learning models is critically contingent upon the availability of large-scale, diverse training data and reliable evaluation benchmarks. Collecting data in the physical world poses prohibitive costs and scalability challenges, and prevailing simulation benchmarks frequently suffer from fragmentation, narrow scope, or insufficient fidelity to enable effective sim-to-real transfer. To address these challenges, we introduce Genie Sim 3.0, a unified simulation platform for robotic manipulation. We present Genie Sim Generator, a large language model (LLM)-powered tool that constructs high-fidelity scenes from natural language instructions. Its principal strength resides in rapid and multi-dimensional generalization, facilitating the synthesis of diverse environments to support scalable data collection and robust policy evaluation. We introduce the first benchmark that pioneers the application of LLMs for automated evaluation. It leverages an LLM to mass-generate evaluation scenarios and employs a Vision-Language Model (VLM) to establish an automated assessment pipeline. We also release an open-source dataset comprising more than 10,000 hours of synthetic data across over 200 tasks. Through systematic experimentation, we validate the robust zero-shot sim-to-real transfer capability of our open-source dataset, demonstrating that synthetic data can serve as an effective substitute for real-world data under controlled conditions for scalable policy training. For code and dataset details, please refer to: https://github.com/AgibotTech/genie_sim.

Safe Path Planning and Observation Quality Enhancement Strategy for Unmanned Aerial Vehicles in Water Quality Monitoring Tasks

Dec 24, 2025

Abstract:Unmanned Aerial Vehicle (UAV) spectral remote sensing technology is widely used in water quality monitoring. However, in dynamic environments, varying illumination conditions, such as shadows and specular reflection (sun glint), can cause severe spectral distortion, thereby reducing data availability. To maximize the acquisition of high-quality data while ensuring flight safety, this paper proposes an active path planning method for dynamic light and shadow disturbance avoidance. First, a dynamic prediction model is constructed to transform the time-varying light and shadow disturbance areas into three-dimensional virtual obstacles. Second, an improved Interfered Fluid Dynamical System (IFDS) algorithm is introduced, which generates a smooth initial obstacle avoidance path by building a repulsive force field. Subsequently, a Model Predictive Control (MPC) framework is employed for rolling-horizon path optimization to handle flight dynamics constraints and achieve real-time trajectory tracking. Furthermore, a Dynamic Flight Altitude Adjustment (DFAA) mechanism is designed to actively reduce the flight altitude when the observable area is narrow, thereby enhancing spatial resolution. Simulation results show that, compared with traditional PID and single obstacle avoidance algorithms, the proposed method achieves an obstacle avoidance success rate of 98% in densely disturbed scenarios, significantly improves path smoothness, and increases the volume of effective observation data by approximately 27%. This research provides an effective engineering solution for precise UAV water quality monitoring in complex illumination environments.
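The link between lower flight altitude and finer spatial resolution can be illustrated with the standard nadir-view ground sampling distance (GSD) relation. This is a minimal sketch, not the paper's DFAA rule: the function name and the sensor values (pixel pitch, focal length) are assumptions chosen only for illustration.

```python
def ground_sampling_distance(altitude_m, pixel_pitch_m, focal_length_m):
    """Ground footprint of one pixel (metres) for a nadir-pointing pinhole camera.

    Standard relation: GSD = altitude * pixel_pitch / focal_length.
    """
    if focal_length_m <= 0:
        raise ValueError("focal length must be positive")
    return altitude_m * pixel_pitch_m / focal_length_m


# Hypothetical sensor: 4.4 micrometre pixels behind an 8.8 mm lens.
PIXEL_PITCH_M = 4.4e-6
FOCAL_LENGTH_M = 8.8e-3

gsd_high = ground_sampling_distance(100.0, PIXEL_PITCH_M, FOCAL_LENGTH_M)
gsd_low = ground_sampling_distance(50.0, PIXEL_PITCH_M, FOCAL_LENGTH_M)
print(f"GSD at 100 m: {gsd_high:.3f} m/pixel")
print(f"GSD at  50 m: {gsd_low:.3f} m/pixel")
```

Under this relation, halving the altitude halves the ground footprint of each pixel, which is why descending over a narrow observable area yields more detail per frame.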

FoCTTA: Low-Memory Continual Test-Time Adaptation with Focus

Feb 28, 2025

Abstract:Continual adaptation to domain shifts at test time (CTTA) is crucial for enhancing the intelligence of deep learning enabled IoT applications. However, prevailing TTA methods, which typically update all batch normalization (BN) layers, exhibit two memory inefficiencies. First, the reliance on BN layers for adaptation necessitates large batch sizes, leading to high memory usage. Second, updating all BN layers requires storing the activations of all BN layers for backpropagation, exacerbating the memory demand. Both factors lead to substantial memory costs, making existing solutions impractical for IoT devices. In this paper, we present FoCTTA, a low-memory CTTA strategy. The key is to automatically identify and adapt a few drift-sensitive representation layers, rather than blindly updating all BN layers. The shift from BN to representation layers eliminates the need for large batch sizes. Also, by updating adaptation-critical layers only, FoCTTA avoids storing excessive activations. This focused adaptation approach ensures that FoCTTA is not only memory-efficient but also maintains effective adaptation. Evaluations show that FoCTTA improves the adaptation accuracy over the state of the art by 4.5%, 4.9%, and 14.8% on CIFAR10-C, CIFAR100-C, and ImageNet-C under the same memory constraints. Across various batch sizes, FoCTTA reduces the memory usage by 3-fold on average, while improving the accuracy by 8.1%, 3.6%, and 0.2%, respectively, on the three datasets.

FocusDD: Real-World Scene Infusion for Robust Dataset Distillation

Jan 11, 2025

Abstract:Dataset distillation has emerged as a strategy to compress real-world datasets for efficient training. However, it struggles with large-scale and high-resolution datasets, limiting its practicality. This paper introduces a novel resolution-independent dataset distillation method, Focused Dataset Distillation (FocusDD), which achieves diversity and realism in distilled data by identifying key information patches, thereby ensuring the generalization capability of the distilled dataset across different network architectures. Specifically, FocusDD leverages a pre-trained Vision Transformer (ViT) to extract key image patches, which are then synthesized into a single distilled image. These distilled images, which capture multiple targets, are suitable not only for classification tasks but also for dense tasks such as object detection. To further improve the generalization of the distilled dataset, each synthesized image is augmented with a downsampled view of the original image. Experimental results on the ImageNet-1K dataset demonstrate that, with 100 images per class (IPC), ResNet50 and MobileNet-v2 achieve validation accuracies of 71.0% and 62.6%, respectively, outperforming state-of-the-art methods by 2.8% and 4.7%. Notably, FocusDD is the first method to use distilled datasets for object detection tasks. On the COCO2017 dataset, with an IPC of 50, YOLOv11n and YOLOv11s achieve 24.4% and 32.1% mAP, respectively, further validating the effectiveness of our approach.

MBPU: A Plug-and-Play State Space Model for Point Cloud Upsampling with Fast Point Rendering

Oct 21, 2024

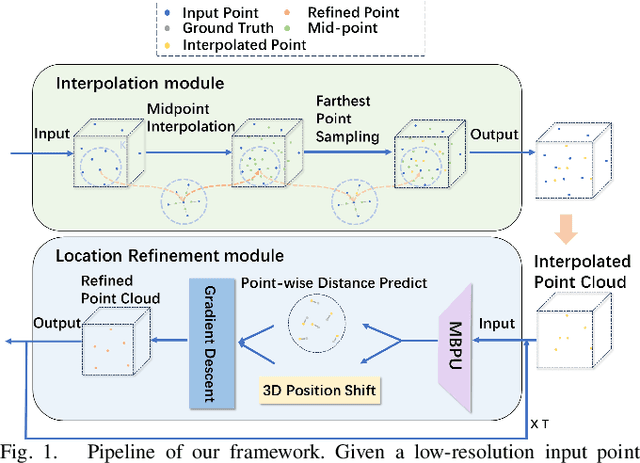

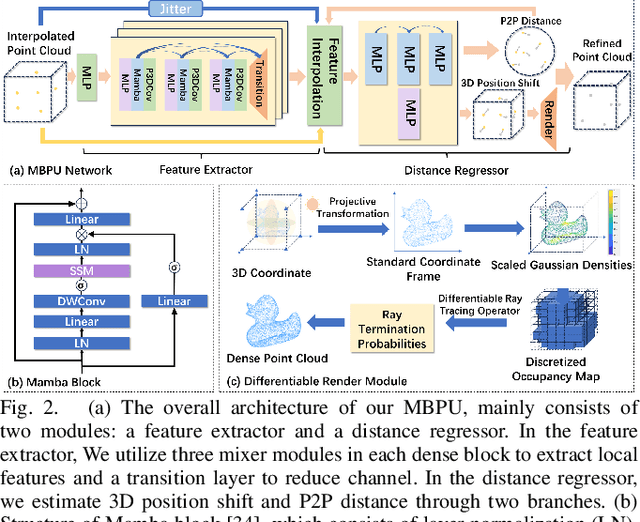

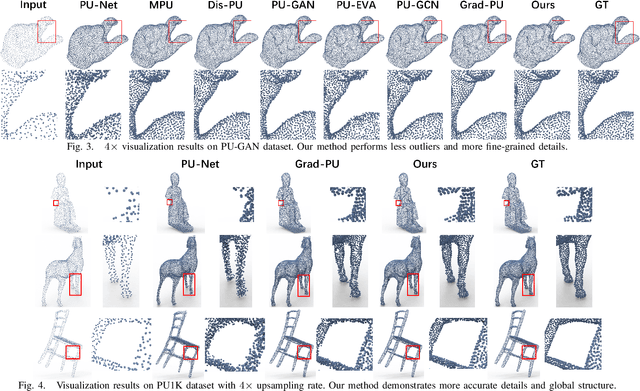

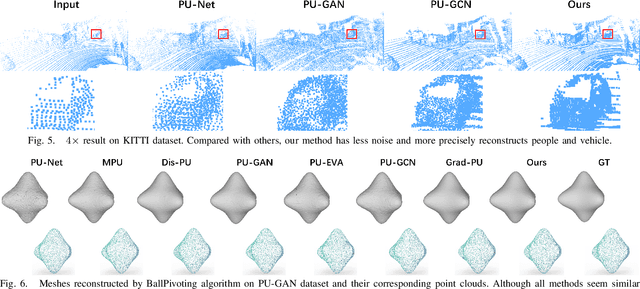

Abstract:The task of point cloud upsampling (PCU) is to generate dense and uniform point clouds from sparse input captured by 3D sensors such as LiDAR. It has potential real-world applications yet remains challenging. Existing deep learning-based methods have shown significant achievements in this field. However, they still face limitations in effectively handling long sequences and addressing the issue of shrinkage artifacts around the surface of the point cloud. Inspired by the newly proposed Mamba, in this paper, we introduce a network named MBPU built on top of the Mamba architecture, which performs well in long sequence modeling, especially for large-scale point cloud upsampling, and achieves fast convergence speed. Moreover, MBPU is an arbitrary-scale upsampling framework that acts as the predictor of point distance in the point refinement phase. We simultaneously predict the 3D position shift and 1D point-to-point distance as regression quantities to constrain the global features while ensuring the accuracy of local details. We also introduce a fast differentiable renderer to further enhance the fidelity of the upsampled point cloud and reduce artifacts. Notably, thanks to our fast point rendering, MBPU yields high-quality upsampled point clouds by effectively eliminating surface noise. Extensive experiments have demonstrated that our MBPU outperforms other off-the-shelf methods in terms of point cloud upsampling, especially for large-scale point clouds.

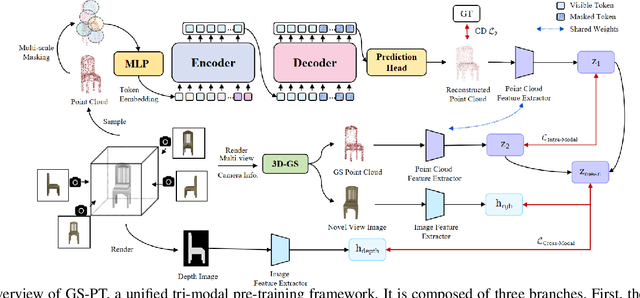

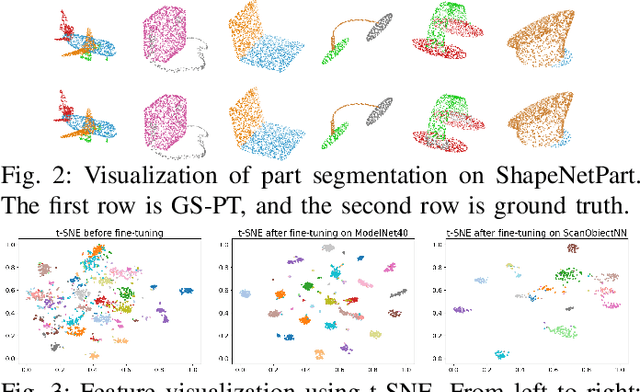

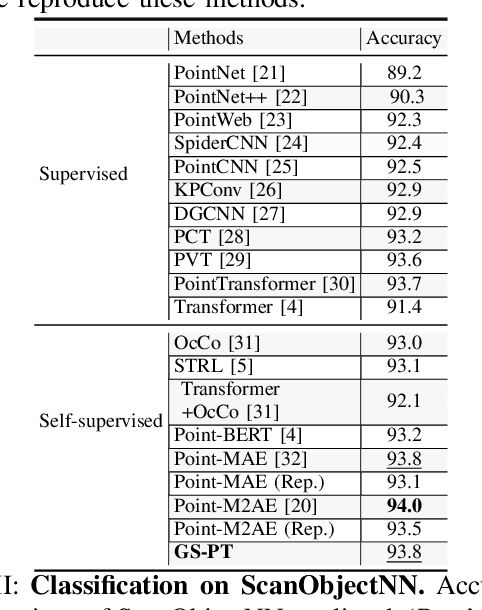

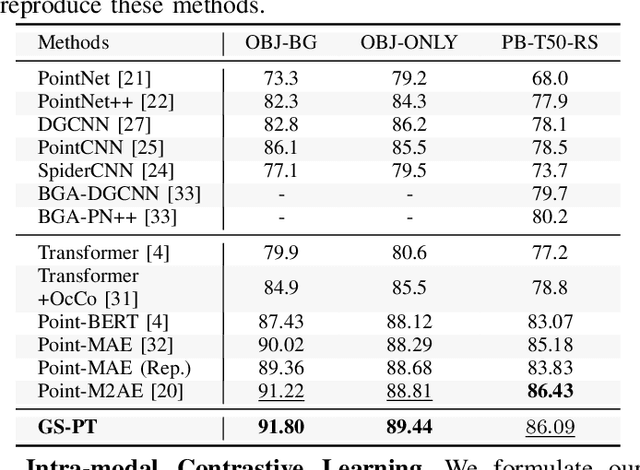

GS-PT: Exploiting 3D Gaussian Splatting for Comprehensive Point Cloud Understanding via Self-supervised Learning

Sep 08, 2024

Abstract:Self-supervised learning of point clouds aims to leverage unlabeled 3D data to learn meaningful representations without reliance on manual annotations. However, current approaches face challenges such as limited data diversity and inadequate augmentation for effective feature learning. To address these challenges, we propose GS-PT, which integrates 3D Gaussian Splatting (3DGS) into point cloud self-supervised learning for the first time. Our pipeline utilizes transformers as the backbone for self-supervised pre-training and introduces novel contrastive learning tasks through 3DGS. Specifically, the transformers aim to reconstruct the masked point cloud. 3DGS utilizes multi-view rendered images as input to generate enhanced point cloud distributions and novel view images, facilitating data augmentation and cross-modal contrastive learning. Additionally, we incorporate features from depth maps. By optimizing these tasks collectively, our method enriches the tri-modal self-supervised learning process, enabling the model to leverage the correlation across 3D point clouds and 2D images from various modalities. We freeze the encoder after pre-training and test the model's performance on multiple downstream tasks. Experimental results indicate that GS-PT outperforms the off-the-shelf self-supervised learning methods on various downstream tasks, including 3D object classification, real-world classification, few-shot learning, and segmentation.

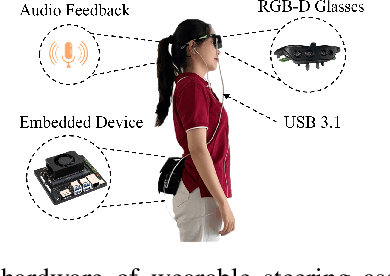

Vision-based Wearable Steering Assistance for People with Impaired Vision in Jogging

Aug 01, 2024

Abstract:Outdoor sports pose a challenge for people with impaired vision. The demand for higher-speed mobility inspired us to develop a vision-based wearable steering assistance system. To ensure broad applicability, we focused on a representative sports environment, the athletics track. Our efforts centered on improving the speed and accuracy of perception, enhancing planning adaptability for the real world, and providing swift and safe assistance for people with impaired vision. In perception, we engineered a lightweight multitask network capable of simultaneously detecting track lines and obstacles. Additionally, because existing datasets do not support multi-task detection on athletics tracks, we collected and annotated a new dataset (MAT) containing 1000 images. In planning, we integrated sampling and spline-curve methods to address the challenges of planning on curved track sections. Meanwhile, we utilized the positions of the track lines and obstacles as constraints to guide people with impaired vision safely along the current track. Our system is deployed on an embedded device, Jetson Orin NX. In outdoor experiments, it demonstrated adaptability in different sports scenarios, assisting users in moving freely over 400 meters at an average speed of 1.34 m/s, comparable to the jogging pace of people with normal vision. Our MAT dataset is publicly available from https://github.com/snoopy-l/MAT

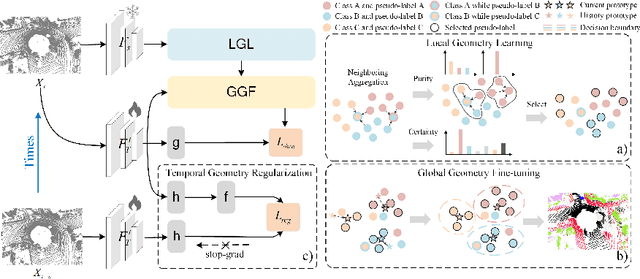

HGL: Hierarchical Geometry Learning for Test-time Adaptation in 3D Point Cloud Segmentation

Jul 17, 2024

Abstract:3D point cloud segmentation has received significant interest for its growing applications. However, the generalization ability of models suffers in dynamic scenarios due to the distribution shift between test and training data. To promote robustness and adaptability across diverse scenarios, test-time adaptation (TTA) has recently been introduced. Nevertheless, most existing TTA methods are developed for images, and the limited approaches applicable to point clouds ignore the inherent hierarchical geometric structures in point cloud streams, i.e., local (point-level), global (object-level), and temporal (frame-level) structures. In this paper, we delve into TTA in 3D point cloud segmentation and propose a novel Hierarchical Geometry Learning (HGL) framework. HGL comprises three complementary modules, from local and global to temporal learning, in a bottom-up manner. Technically, we first construct a local geometry learning module for pseudo-label generation. Next, we build prototypes from the global geometry perspective for pseudo-label fine-tuning. Furthermore, we introduce a temporal consistency regularization module to mitigate negative transfer. Extensive experiments on four datasets demonstrate the effectiveness and superiority of our HGL. Remarkably, on the SynLiDAR to SemanticKITTI task, HGL achieves an overall mIoU of 46.91%, improving GIPSO by 3.0% and significantly reducing the required adaptation time by 80%. The code is available at https://github.com/tpzou/HGL.
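The mIoU figure quoted above is a mean of per-class intersection-over-union scores. The following is a minimal sketch of that metric over flat per-point label lists. The function name is hypothetical, and skipping classes that are absent from both prediction and ground truth is an assumption; benchmark evaluation scripts may handle that case differently.

```python
def mean_iou(predicted, target, num_classes):
    """Mean intersection-over-union over per-point class labels.

    Classes absent from both lists are skipped (an assumption of this sketch).
    """
    if len(predicted) != len(target):
        raise ValueError("label lists must have the same length")
    ious = []
    for cls in range(num_classes):
        # Points where both agree on cls, and points where either says cls.
        intersection = sum(1 for p, t in zip(predicted, target) if p == cls and t == cls)
        union = sum(1 for p, t in zip(predicted, target) if p == cls or t == cls)
        if union > 0:
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with two classes and four points.
pred = [0, 0, 1, 1]
truth = [0, 1, 1, 1]
print(f"mIoU = {mean_iou(pred, truth, num_classes=2):.4f}")
```

In the toy example, class 0 scores 1/2 and class 1 scores 2/3, so the mean is 7/12.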

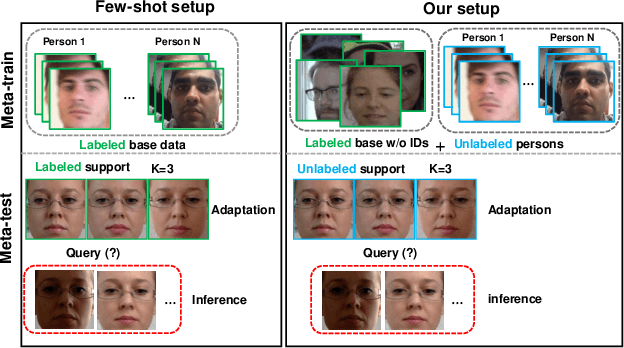

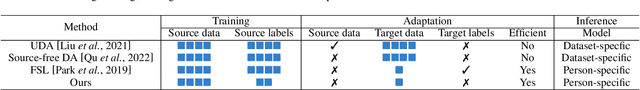

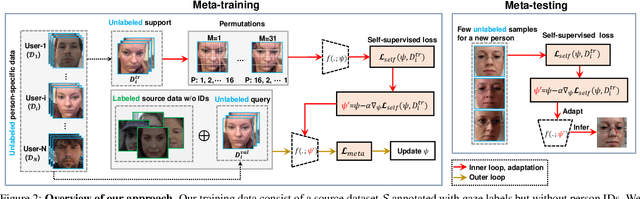

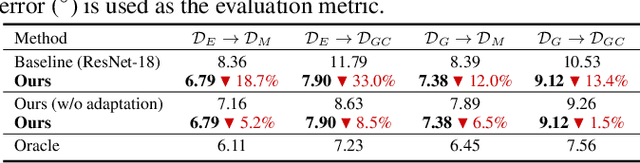

ELF-UA: Efficient Label-Free User Adaptation in Gaze Estimation

Jun 13, 2024

Abstract:We consider the problem of user-adaptive 3D gaze estimation. The performance of person-independent gaze estimation is limited due to interpersonal anatomical differences. Our goal is to provide a personalized gaze estimation model specifically adapted to a target user. Previous work on user-adaptive gaze estimation requires some labeled images of the target person to fine-tune the model at test time. However, this can be unrealistic in real-world applications, since it is cumbersome for an end-user to provide labeled images. In addition, previous work requires the training data to have both gaze labels and person IDs. This data requirement makes it infeasible to use some of the available data. To tackle these challenges, this paper proposes a new problem called efficient label-free user adaptation in gaze estimation. Our model only needs a few unlabeled images of a target user for the model adaptation. During offline training, we have some labeled source data without person IDs and some unlabeled person-specific data. Our proposed method uses a meta-learning approach to learn how to adapt to a new user with only a few unlabeled images. Our key technical innovation is to use a generalization bound from domain adaptation to define the loss function in meta-learning, so that our method can effectively make use of both the labeled source data and the unlabeled person-specific data during training. Extensive experiments validate the effectiveness of our method on several challenging benchmarks.

Gyroscope-Assisted Motion Deblurring Network

Feb 10, 2024

Abstract:Deblurring networks have attracted substantial attention in image research in recent years. Yet, their practical usage in real-world deblurring, especially motion blur, remains limited due to the lack of pixel-aligned training triplets (background, blurred image, and blur heat map) and the restricted information inherent in blurred images. This paper presents a simple yet efficient framework to synthesize and restore motion blur images using Inertial Measurement Unit (IMU) data. Notably, the framework includes a strategy for training triplet generation, and a Gyroscope-Aided Motion Deblurring (GAMD) network for blurred image restoration. The rationale is that, by harnessing IMU data, we can determine the transformation of the camera pose during the image exposure phase, facilitating the deduction of the motion trajectory (a.k.a. blur trajectory) for each point inside the three-dimensional space. Thus, the synthetic triplets using our strategy are inherently close to natural motion blur, strictly pixel-aligned, and mass-producible. Through comprehensive experiments, we demonstrate the advantages of the proposed framework: only two-pixel errors between our synthetic and real-world blur trajectories, and a marked improvement (around 33.17%) in the Peak Signal-to-Noise Ratio (PSNR) of the state-of-the-art deblurring method MIMO.
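For readers unfamiliar with the PSNR metric cited above, here is a minimal sketch of the standard definition, PSNR = 10 log10(MAX^2 / MSE), over flat lists of pixel intensities. The function name and the 8-bit default peak value are assumptions for illustration; this is not the evaluation code behind the reported figure.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equally sized images.

    Images are flat sequences of pixel intensities; max_value is the peak
    intensity (255 for 8-bit images, assumed here as the default).
    """
    if len(reference) != len(restored) or not reference:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: a four-pixel image with a small restoration error.
sharp = [10, 200, 30, 120]
deblurred = [12, 198, 30, 121]
print(f"PSNR = {psnr(sharp, deblurred):.2f} dB")
```

Higher values indicate a restored image closer to the reference, and identical images give an infinite PSNR.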
