Ruiqi Zhang

Scalable Quantum Error Mitigation with Neighbor-Informed Learning

Dec 14, 2025

Abstract: Noise in quantum hardware is the primary obstacle to realizing the transformative potential of quantum computing. Quantum error mitigation (QEM) offers a promising pathway to enhance computational accuracy on near-term devices, yet existing methods face a difficult trade-off between performance, resource overhead, and theoretical guarantees. In this work, we introduce neighbor-informed learning (NIL), a versatile and scalable QEM framework that unifies and strengthens existing methods such as zero-noise extrapolation (ZNE) and probabilistic error cancellation (PEC), while offering improved flexibility, accuracy, efficiency, and robustness. NIL learns to predict the ideal output of a target quantum circuit from the noisy outputs of its structurally related "neighbor" circuits. A key innovation is our 2-design training method, which generates training data for our machine learning model. In contrast to conventional learning-based QEM protocols that create training circuits by replacing non-Clifford gates with uniformly random Clifford gates, our approach achieves higher accuracy and efficiency, as demonstrated by both theoretical analysis and numerical simulation. Furthermore, we prove that the required size of the training set scales only logarithmically with the total number of neighbor circuits, enabling NIL to be applied to problems involving large-scale quantum circuits. Our work establishes a theoretically grounded and practically efficient framework for QEM, paving a viable path toward achieving quantum advantage on noisy hardware.

On computing and the complexity of computing higher-order $U$-statistics, exactly

Aug 18, 2025

Abstract: Higher-order $U$-statistics abound in fields such as statistics, machine learning, and computer science, but are known to be highly time-consuming to compute in practice. Despite their widespread appearance, a comprehensive study of their computational complexity is surprisingly lacking. This paper aims to fill that gap by presenting several results related to the computational aspects of $U$-statistics. First, we derive a useful decomposition from an $m$-th order $U$-statistic to a linear combination of $V$-statistics with orders not exceeding $m$, which are generally more feasible to compute. Second, we explore the connection between exactly computing $V$-statistics and Einstein summation, a tool often used in computational mathematics, quantum computing, and quantum information sciences for accelerating tensor computations. Third, we provide an optimistic estimate of the time complexity for exactly computing $U$-statistics, based on the treewidth of a particular graph associated with the $U$-statistic kernel. The above ingredients lead to a new, much more runtime-efficient algorithm for exactly computing general higher-order $U$-statistics. We also wrap our new algorithm into an open-source Python package called $\texttt{u-stats}$. We demonstrate via three statistical applications that $\texttt{u-stats}$ achieves impressive runtime performance compared to existing benchmarks. This paper aspires to achieve two goals: (1) to capture the interest of researchers in both statistics and other related areas to further advance the algorithmic development of $U$-statistics, and (2) to offer the package $\texttt{u-stats}$ as a valuable tool for practitioners, making the implementation of methods based on higher-order $U$-statistics a more delightful experience.
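To make the $U$-to-$V$ decomposition and the Einstein-summation connection concrete, here is a minimal sketch for the simplest case, an order-2 $U$-statistic. It does not use the $\texttt{u-stats}$ package or reproduce the paper's algorithm; the function names `u_stat_order2` and `u_stat_order2_naive` are hypothetical, and the kernel is assumed to be precomputed as a matrix `K[i, j] = h(x_i, x_j)`. The sum over distinct index pairs is written as a full ($V$-statistic style) sum minus its diagonal, with both sums expressed via `numpy.einsum`.

```python
import numpy as np

def u_stat_order2(K):
    """Order-2 U-statistic from a kernel matrix K[i, j] = h(x_i, x_j).

    Sum over i != j  =  (sum over all i, j)  -  (sum over i == j).
    """
    n = K.shape[0]
    full_sum = np.einsum("ij->", K)   # V-statistic style sum, includes i == j
    diag_sum = np.einsum("ii->", K)   # the i == j terms to subtract
    return (full_sum - diag_sum) / (n * (n - 1))

def u_stat_order2_naive(K):
    """Reference implementation: explicit double loop over distinct pairs."""
    n = K.shape[0]
    total = sum(K[i, j] for i in range(n) for j in range(n) if i != j)
    return total / (n * (n - 1))
```

As a sanity check, the kernel $h(x, y) = (x - y)^2 / 2$ yields the unbiased sample variance, so `u_stat_order2(0.5 * (x[:, None] - x[None, :]) ** 2)` should agree with `np.var(x, ddof=1)`.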

SPEED-RL: Faster Training of Reasoning Models via Online Curriculum Learning

Jun 10, 2025

Abstract: Training large language models with reinforcement learning (RL) against verifiable rewards significantly enhances their reasoning abilities, yet remains computationally expensive due to inefficient uniform prompt sampling. We introduce Selective Prompting with Efficient Estimation of Difficulty (SPEED), an adaptive online RL curriculum that selectively chooses training examples of intermediate difficulty to maximize learning efficiency. Theoretically, we establish that intermediate-difficulty prompts improve the gradient estimator's signal-to-noise ratio, accelerating convergence. Empirically, our efficient implementation leads to 2x to 6x faster training without degrading accuracy, requires no manual tuning, and integrates seamlessly into standard RL algorithms.
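The idea of selecting intermediate-difficulty prompts can be sketched as a cheap screening step. This is only an illustration under stated assumptions, not the SPEED implementation: it assumes binary (pass/fail) rewards, assumes that "intermediate difficulty" means an estimated pass rate strictly between 0 and 1, and uses hypothetical names (`select_intermediate`, `rollout`, `n_probe`) that do not come from the paper.

```python
import random

def select_intermediate(prompts, rollout, n_probe=4, seed=0):
    """Keep prompts whose probed pass count is neither 0 nor n_probe.

    `rollout(prompt, rng)` is a hypothetical callable that returns True when
    a sampled response to `prompt` passes the verifier.
    """
    rng = random.Random(seed)
    kept = []
    for prompt in prompts:
        passes = sum(bool(rollout(prompt, rng)) for _ in range(n_probe))
        # All-fail prompts look too hard, all-pass prompts look too easy.
        if 0 < passes < n_probe:
            kept.append(prompt)
    return kept
```

In a toy setting where each prompt is represented by its true pass probability and `rollout = lambda p, rng: rng.random() < p`, prompts with probability 0.0 or 1.0 are always filtered out, while prompts near 0.5 are the most likely to be kept.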

Long or short CoT? Investigating Instance-level Switch of Large Reasoning Models

Jun 04, 2025

Abstract: With the rapid advancement of large reasoning models, long Chain-of-Thought (CoT) prompting has demonstrated strong performance on complex tasks. However, this often comes with a significant increase in token usage. In this paper, we conduct a comprehensive empirical analysis comparing long and short CoT strategies. Our findings reveal that while long CoT can lead to performance improvements, its benefits are often marginal relative to its significantly higher token consumption. Specifically, long CoT tends to outperform when ample generation budgets are available, whereas short CoT is more effective under tighter budget constraints. These insights underscore the need for a dynamic approach that selects the proper CoT strategy based on task context and resource availability. To address this, we propose SwitchCoT, an automatic framework that adaptively chooses between long and short CoT strategies to balance reasoning accuracy and computational efficiency. Moreover, SwitchCoT is designed to be budget-aware, making it broadly applicable across scenarios with varying resource constraints. Experimental results demonstrate that SwitchCoT can reduce inference costs by up to 50% while maintaining high accuracy. Notably, under limited token budgets, it achieves performance comparable to, or even exceeding, that of using either long or short CoT alone.

MLMC-based Resource Adequacy Assessment with Active Learning Trained Surrogate Models

May 27, 2025

Abstract: Multilevel Monte Carlo (MLMC) is a flexible and effective variance reduction technique for accelerating reliability assessments of complex power systems. Recently, data-driven surrogate models have been proposed as lower-level models in the MLMC framework due to their high correlation and negligible execution time once trained. However, in resource adequacy assessments, pre-labeled datasets are typically unavailable. For large-scale systems, the efficiency gains from surrogate models are often offset by the substantial time required for labeling training data. Therefore, this paper introduces a speed metric that accounts for training time in evaluating MLMC efficiency. Because the total time budget is limited, a vote-by-committee active learning approach is proposed to reduce the required labeling calls. A case study demonstrates that, within practical variance thresholds, active learning enables significantly improved MLMC efficiency with reduced training effort, compared to regular surrogate modelling approaches.
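The role of a surrogate as the lower level in MLMC can be illustrated with a two-level estimator, which rewrites $E[f] = E[g] + E[f - g]$ and spends many samples on the cheap surrogate $g$ and few on the correction $f - g$. The sketch below is a generic toy, not the paper's resource adequacy model or its active learning scheme; the names `two_level_estimate`, `sampler`, `n_cheap`, and `n_corr` are hypothetical.

```python
import random

def two_level_estimate(f, g, sampler, n_cheap, n_corr, seed=0):
    """Two-level Monte Carlo estimate of E[f(X)] using a cheap surrogate g.

    Level 0: average g over many samples (cheap).
    Level 1: average f - g over few samples (expensive, but low variance
             when g correlates well with f).
    """
    rng = random.Random(seed)
    cheap_mean = sum(g(sampler(rng)) for _ in range(n_cheap)) / n_cheap
    corr_sum = 0.0
    for _ in range(n_corr):
        x = sampler(rng)              # same sample feeds both models
        corr_sum += f(x) - g(x)
    return cheap_mean + corr_sum / n_corr
```

For example, with `f = lambda x: x * x`, surrogate `g = lambda x: x`, and `sampler = lambda rng: rng.random()`, the estimate should land close to the exact value $1/3$ even though `f` is evaluated far fewer times than `g`.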

Duawlfin: A Drone with Unified Actuation for Wheeled Locomotion and Flight Operation

May 20, 2025

Abstract: This paper presents Duawlfin, a drone with unified actuation for wheeled locomotion and flight operation that achieves efficient, bidirectional ground mobility. Unlike existing hybrid designs, Duawlfin eliminates the need for additional actuators or propeller-driven ground propulsion by leveraging only its standard quadrotor motors and introducing a differential drivetrain with one-way bearings. This innovation simplifies the mechanical system, significantly reduces energy usage, and prevents the disturbance caused by propellers spinning near the ground, such as dust interference with sensors. In addition, the one-way bearings minimize the power transfer from motors to propellers in the ground mode, which enables the vehicle to operate safely near humans. We provide a detailed mechanical design, present control strategies for rapid and smooth mode transitions, and validate the concept through extensive experimental testing. Flight-mode tests confirm stable aerial performance comparable to conventional quadcopters, while ground-mode experiments demonstrate efficient slope climbing (up to 30°) and agile turning maneuvers approaching 1g lateral acceleration. The seamless transitions between aerial and ground modes further underscore the practicality and effectiveness of our approach for applications like urban logistics and indoor navigation. All materials, including 3-D model files, a demonstration video, and other assets, are open-sourced at https://sites.google.com/view/Duawlfin.

Minimax Optimal Convergence of Gradient Descent in Logistic Regression via Large and Adaptive Stepsizes

Apr 05, 2025

Abstract: We study $\textit{gradient descent}$ (GD) for logistic regression on linearly separable data with stepsizes that adapt to the current risk, scaled by a constant hyperparameter $\eta$. We show that after at most $1/\gamma^2$ burn-in steps, GD achieves a risk upper bounded by $\exp(-\Theta(\eta))$, where $\gamma$ is the margin of the dataset. As $\eta$ can be arbitrarily large, GD attains an arbitrarily small risk $\textit{immediately after the burn-in steps}$, though the risk evolution may be $\textit{non-monotonic}$. We further construct hard datasets with margin $\gamma$, where any batch or online first-order method requires $\Omega(1/\gamma^2)$ steps to find a linear separator. Thus, GD with large, adaptive stepsizes is $\textit{minimax optimal}$ among first-order batch methods. Notably, the classical $\textit{Perceptron}$ (Novikoff, 1962), a first-order online method, also achieves a step complexity of $1/\gamma^2$, matching GD even in constants. Finally, our GD analysis extends to a broad class of loss functions and certain two-layer networks.
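The $1/\gamma^2$ step complexity cited for the classical Perceptron can be checked on a small example. The sketch below implements the textbook Perceptron, not the paper's adaptive-stepsize GD, and counts its updates; it assumes unit-norm inputs, so that the classical mistake bound reads $1/\gamma^2$. The function name `perceptron` and the parameter `max_passes` are illustrative choices.

```python
import math

def perceptron(X, y, max_passes=1000):
    """Classical Perceptron on labels in {-1, +1}; returns (weights, updates)."""
    w = [0.0] * len(X[0])
    updates = 0
    for _ in range(max_passes):
        clean_pass = True
        for xi, yi in zip(X, y):
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            if margin <= 0:                      # misclassified (or on the boundary)
                w = [wj + yi * xj for wj, xj in zip(w, xi)]
                updates += 1
                clean_pass = False
        if clean_pass:                           # every point classified correctly
            break
    return w, updates

# Toy data on the unit circle, labeled by the sign of the first coordinate.
angles = [0.0, 1.0, -1.0, math.pi, math.pi - 1.0, math.pi + 1.0]
X = [(math.cos(a), math.sin(a)) for a in angles]
y = [1 if x[0] > 0 else -1 for x in X]
gamma = min(abs(x[0]) for x in X)                # margin w.r.t. the separator (1, 0)
w, updates = perceptron(X, y)
```

On this dataset the separator $(1, 0)$ has margin $\gamma = \cos(1)$, so the number of updates should not exceed $1/\gamma^2 \approx 3.4$.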

Mitigating Ambiguities in 3D Classification with Gaussian Splatting

Mar 11, 2025

Abstract: 3D classification with point cloud input is a fundamental problem in 3D vision. However, due to the discrete nature and the insufficient material description of point cloud representations, there are ambiguities in distinguishing wire-like and flat surfaces, as well as transparent or reflective objects. To address these issues, we propose Gaussian Splatting (GS) point cloud-based 3D classification. We find that the scale and rotation coefficients in the GS point cloud help characterize surface types. Specifically, wire-like surfaces consist of multiple slender Gaussian ellipsoids, while flat surfaces are composed of a few flat Gaussian ellipsoids. Additionally, the opacity in the GS point cloud represents the transparency characteristics of objects. As a result, ambiguities in point cloud-based 3D classification can be mitigated by using GS point clouds as input. To verify the effectiveness of GS point cloud input, we construct the first real-world GS point cloud dataset in the community, which includes 20 categories with 200 objects in each category. Experiments not only validate the superiority of GS point cloud input, especially in distinguishing ambiguous objects, but also demonstrate its generalization ability across different classification methods.

How Do LLMs Perform Two-Hop Reasoning in Context?

Feb 19, 2025

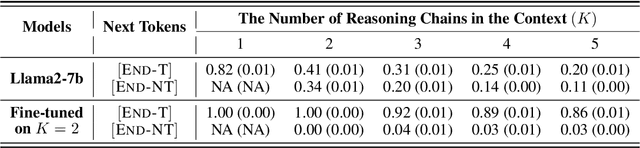

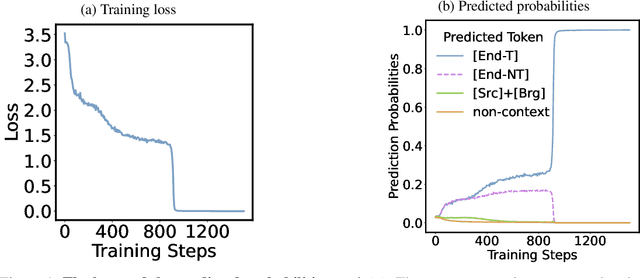

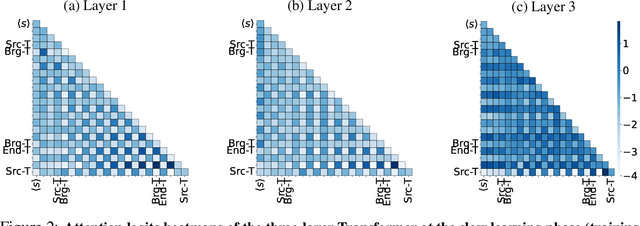

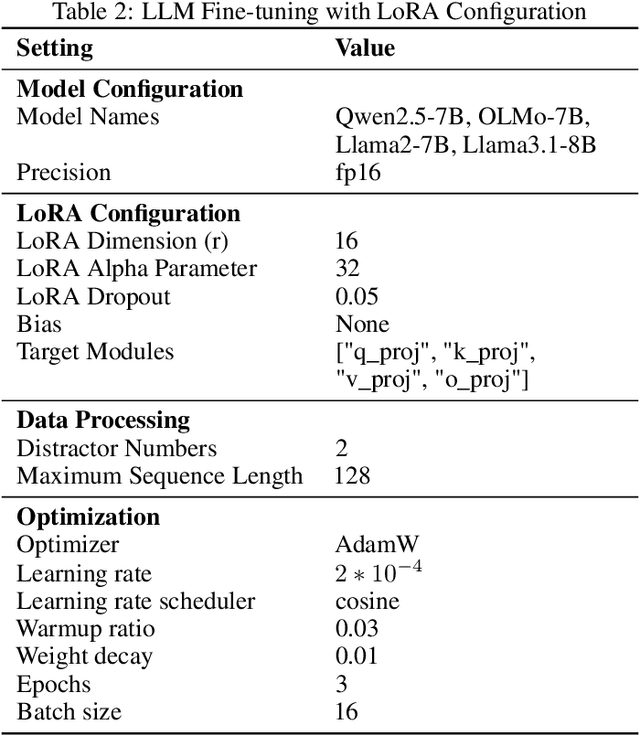

Abstract: "Socrates is human. All humans are mortal. Therefore, Socrates is mortal." This classical example demonstrates two-hop reasoning, where a conclusion logically follows from two connected premises. While transformer-based Large Language Models (LLMs) can perform two-hop reasoning, they tend to collapse to random guessing when faced with distracting premises. To understand the underlying mechanism, we train a three-layer transformer on synthetic two-hop reasoning tasks. The training dynamics show two stages: a slow learning phase, where the three-layer transformer performs random guessing like LLMs, followed by an abrupt phase transition, where the three-layer transformer suddenly reaches $100\%$ accuracy. Through reverse engineering, we explain the inner mechanisms for how models learn to randomly guess between distractions initially, and how they learn to ignore distractions eventually. We further propose a three-parameter model that supports the causal claims linking these mechanisms to the training dynamics of the transformer. Finally, experiments on LLMs suggest that the discovered mechanisms generalize across scales. Our methodologies provide new perspectives for the scientific understanding of LLMs, and our findings provide new insights into how reasoning emerges during training.

Fast Best-of-N Decoding via Speculative Rejection

Oct 26, 2024

Abstract: The safe and effective deployment of Large Language Models (LLMs) involves a critical step called alignment, which ensures that the model's responses are in accordance with human preferences. Prevalent alignment techniques, such as DPO, PPO and their variants, align LLMs by changing the pre-trained model weights during a phase called post-training. While predominant, these post-training methods add substantial complexity before LLMs can be deployed. Inference-time alignment methods avoid the complex post-training step and instead bias the generation towards responses that are aligned with human preferences. The best-known inference-time alignment method, called Best-of-N, is as effective as the state-of-the-art post-training procedures. Unfortunately, Best-of-N requires vastly more resources at inference time than standard decoding strategies, which makes it computationally impractical. In this work, we introduce Speculative Rejection, a computationally viable inference-time alignment algorithm. It generates high-scoring responses according to a given reward model, like Best-of-N does, while being 16 to 32 times more computationally efficient.
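The general idea of saving compute by rejecting unpromising candidates early can be sketched with a toy generator. This is not the Speculative Rejection algorithm itself, whose rejection schedule and scoring details are not given in the abstract; the sketch assumes that a reward model can score partial responses, and every name in it (`early_reject_best_of_n`, `step_token`, `check_every`, `keep_frac`) is hypothetical.

```python
import random

def early_reject_best_of_n(n, max_len, step_token, reward,
                           check_every=4, keep_frac=0.5, seed=0):
    """Toy Best-of-N with periodic pruning of low-scoring partial responses.

    `step_token(rng)` produces the next token of a candidate and
    `reward(partial)` scores a (possibly partial) token list; both are
    hypothetical stand-ins for a language model and a reward model.
    Returns the best surviving response and the number of tokens generated.
    """
    rng = random.Random(seed)
    candidates = [[] for _ in range(n)]
    tokens_generated = 0
    for t in range(1, max_len + 1):
        for cand in candidates:
            cand.append(step_token(rng))
            tokens_generated += 1
        if t % check_every == 0 and len(candidates) > 1:
            # Score partial responses and keep only the top fraction.
            candidates.sort(key=reward, reverse=True)
            candidates = candidates[:max(1, int(len(candidates) * keep_frac))]
    return max(candidates, key=reward), tokens_generated
```

With `n=8`, `max_len=16`, and the defaults above, the candidate pool shrinks 8, 4, 2, 1 at the checkpoints, so 60 tokens are generated instead of the 128 that plain Best-of-N would need. Whether early partial scores predict final scores well enough is exactly the assumption such a scheme rests on.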
