Michael I. Jordan

On Nonasymptotic Confidence Intervals for Treatment Effects in Randomized Experiments

Jan 16, 2026

Abstract:We study nonasymptotic (finite-sample) confidence intervals for treatment effects in randomized experiments. In the existing literature, the effective sample sizes of nonasymptotic confidence intervals tend to be looser than the corresponding central-limit-theorem-based confidence intervals by a factor depending on the square root of the propensity score. We show that this performance gap can be closed, designing nonasymptotic confidence intervals that have the same effective sample size as their asymptotic counterparts. Our approach involves systematic exploitation of negative dependence or variance adaptivity (or both). We also show that the nonasymptotic rates that we achieve are unimprovable in an information-theoretic sense.

Stopping Rules for Stochastic Gradient Descent via Anytime-Valid Confidence Sequences

Dec 22, 2025

Abstract:We study stopping rules for stochastic gradient descent (SGD) for convex optimization from the perspective of anytime-valid confidence sequences. Classical analyses of SGD provide convergence guarantees in expectation or at a fixed horizon, but offer no statistically valid way to assess, at an arbitrary time, how close the current iterate is to the optimum. We develop an anytime-valid, data-dependent upper confidence sequence for the weighted average suboptimality of projected SGD, constructed via nonnegative supermartingales and requiring no smoothness or strong convexity. This confidence sequence yields a simple stopping rule that is provably $\varepsilon$-optimal with probability at least $1-\alpha$, with explicit bounds on the stopping time under standard stochastic approximation stepsizes. To the best of our knowledge, these are the first rigorous, time-uniform performance guarantees and finite-time $\varepsilon$-optimality certificates for projected SGD with general convex objectives, based solely on observable trajectory quantities.

Conditional Coverage Diagnostics for Conformal Prediction

Dec 12, 2025

Abstract:Evaluating conditional coverage remains one of the most persistent challenges in assessing the reliability of predictive systems. Although conformal methods can give guarantees on marginal coverage, no method can guarantee to produce sets with correct conditional coverage, leaving practitioners without a clear way to interpret local deviations. To overcome sample-inefficiency and overfitting issues of existing metrics, we cast conditional coverage estimation as a classification problem. Conditional coverage is violated if and only if any classifier can achieve lower risk than the target coverage. Through the choice of a (proper) loss function, the resulting risk difference gives a conservative estimate of natural miscoverage measures such as L1 and L2 distance, and can even separate the effects of over- and under-coverage, and non-constant target coverages. We call the resulting family of metrics excess risk of the target coverage (ERT). We show experimentally that the use of modern classifiers provides much higher statistical power than simple classifiers underlying established metrics like CovGap. Additionally, we use our metric to benchmark different conformal prediction methods. Finally, we release an open-source package for ERT as well as previous conditional coverage metrics. Together, these contributions provide a new lens for understanding, diagnosing, and improving the conditional reliability of predictive systems.
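The risk-difference idea can be illustrated with a toy sketch. It assumes the Brier score as the proper loss and a deliberately simple group-wise "classifier" fitted in-sample. The function name `excess_risk_brier`, the group labels, and the data are hypothetical and are not taken from the released package. With the Brier score, the gap between the constant predictor at the target coverage and a predictor of the true conditional coverage equals the mean squared (L2) deviation of conditional coverage from the target.

```python
from collections import defaultdict


def brier(preds, outcomes):
    """Mean squared error between predicted coverage probabilities and 0/1 outcomes."""
    return sum((p - c) ** 2 for p, c in zip(preds, outcomes)) / len(outcomes)


def excess_risk_brier(groups, covered, target):
    """Brier risk of the constant `target` predictor minus that of a group-wise predictor.

    `covered[i]` is 1 if the prediction set for example i contained the true label.
    The group-wise predictor is fitted in-sample here for brevity; a held-out or
    cross-fitted classifier would be needed to avoid overfitting in practice.
    """
    totals = defaultdict(lambda: [0, 0])
    for g, c in zip(groups, covered):
        totals[g][0] += c
        totals[g][1] += 1
    rate = {g: s / n for g, (s, n) in totals.items()}
    constant_risk = brier([target] * len(covered), covered)
    classifier_risk = brier([rate[g] for g in groups], covered)
    return constant_risk - classifier_risk


# Hypothetical data: marginal coverage is exactly 0.9, but group A is
# over-covered (1.0) and group B is under-covered (0.8).
groups = ["A"] * 10 + ["B"] * 10
covered = [1] * 10 + [1] * 8 + [0] * 2
ert = excess_risk_brier(groups, covered, target=0.9)
print(round(ert, 4))  # 0.01, the mean of (1.0 - 0.9)^2 and (0.8 - 0.9)^2
```

A value of zero would mean the group-wise predictor cannot beat the constant target, which is what correct conditional coverage across these groups looks like.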

Structured Matrix Scaling for Multi-Class Calibration

Nov 05, 2025

Abstract:Post-hoc recalibration methods are widely used to ensure that classifiers provide faithful probability estimates. We argue that parametric recalibration functions based on logistic regression can be motivated from a simple theoretical setting for both binary and multiclass classification. This insight motivates the use of more expressive calibration methods beyond standard temperature scaling. For multi-class calibration, however, a key challenge lies in the increasing number of parameters introduced by more complex models, often coupled with limited calibration data, which can lead to overfitting. Through extensive experiments, we demonstrate that the resulting bias-variance tradeoff can be effectively managed by structured regularization, robust preprocessing and efficient optimization. The resulting methods lead to substantial gains over existing logistic-based calibration techniques. We provide efficient and easy-to-use open-source implementations of our methods, making them an attractive alternative to common temperature, vector, and matrix scaling implementations.
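For orientation, the temperature-scaling baseline that the abstract refers to can be sketched in a few lines. This is not the structured matrix scaling method of the paper. It only shows the single-parameter case, fitted by a plain grid search over the negative log-likelihood. The function names and the toy logits are assumptions made for illustration.

```python
import math


def nll(logits, labels, temperature):
    """Average negative log-likelihood of softmax(logits / temperature)."""
    total = 0.0
    for row, y in zip(logits, labels):
        scaled = [z / temperature for z in row]
        m = max(scaled)  # subtract the max for a numerically stable log-sum-exp
        log_norm = m + math.log(sum(math.exp(s - m) for s in scaled))
        total += log_norm - scaled[y]
    return total / len(labels)


def fit_temperature(logits, labels, grid=None):
    """Pick the temperature on a grid that minimizes calibration-set NLL."""
    grid = grid or [0.05 * k for k in range(1, 201)]  # 0.05, 0.10, ..., 10.0
    return min(grid, key=lambda t: nll(logits, labels, t))


# Hypothetical overconfident classifier: it predicts class 0 with about 98%
# confidence on every example, but is right only 3 times out of 4.
logits = [[4.0, 0.0]] * 4
labels = [0, 0, 0, 1]
T = fit_temperature(logits, labels)
print(T)  # lands close to 4 / ln(3), i.e. roughly 3.64
```

A fitted temperature above 1 softens the probabilities. Vector and matrix scaling replace the single scalar with per-class or full linear maps of the logits, which is where the parameter count and the overfitting risk described in the abstract grow.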

Adaptive Coverage Policies in Conformal Prediction

Oct 05, 2025

Abstract:Traditional conformal prediction methods construct prediction sets such that the true label falls within the set with a user-specified coverage level. However, poorly chosen coverage levels can result in uninformative predictions, either producing overly conservative sets when the coverage level is too high, or empty sets when it is too low. Moreover, the fixed coverage level cannot adapt to the specific characteristics of each individual example, limiting the flexibility and efficiency of these methods. In this work, we leverage recent advances in e-values and post-hoc conformal inference, which allow the use of data-dependent coverage levels while maintaining valid statistical guarantees. We propose to optimize an adaptive coverage policy by training a neural network using a leave-one-out procedure on the calibration set, allowing the coverage level and the resulting prediction set size to vary with the difficulty of each individual example. We support our approach with theoretical coverage guarantees and demonstrate its practical benefits through a series of experiments.
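The effect of a fixed coverage level on set size can be seen in a minimal split-conformal sketch. This is the standard fixed-level procedure that the abstract contrasts against, not the adaptive policy it proposes. The calibration scores and class probabilities are made-up numbers, and the score `1 - p(true label)` is one common choice assumed here.

```python
import math


def conformal_threshold(cal_scores, alpha):
    """Split-conformal quantile: the ceil((n + 1)(1 - alpha))-th smallest score."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points for this level: include everything
    return sorted(cal_scores)[k - 1]


def prediction_set(probs, threshold):
    """Labels whose nonconformity score 1 - p(label) is within the threshold."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= threshold]


# Hypothetical calibration scores, each equal to 1 - p(true label).
cal_scores = [0.05, 0.10, 0.15, 0.20, 0.30, 0.40, 0.50, 0.60, 0.70]
test_probs = [0.55, 0.35, 0.10]  # predicted class probabilities for one test point

for alpha in (0.05, 0.25, 0.95):
    q = conformal_threshold(cal_scores, alpha)
    print(alpha, prediction_set(test_probs, q))
# alpha=0.05 gives [0, 1, 2] (every label), alpha=0.25 gives [0], alpha=0.95 gives []
```

The same test point receives an uninformative full set at a very high coverage level and an empty set at a very low one, which is the failure mode that motivates choosing the level per example.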

The Statistical Fairness-Accuracy Frontier

Aug 25, 2025

Abstract:Machine learning models must balance accuracy and fairness, but these goals often conflict, particularly when data come from multiple demographic groups. A useful tool for understanding this trade-off is the fairness-accuracy (FA) frontier, which characterizes the set of models that cannot be simultaneously improved in both fairness and accuracy. Prior analyses of the FA frontier provide a full characterization under the assumption of complete knowledge of population distributions -- an unrealistic ideal. We study the FA frontier in the finite-sample regime, showing how it deviates from its population counterpart and quantifying the worst-case gap between them. In particular, we derive minimax-optimal estimators that depend on the designer's knowledge of the covariate distribution. For each estimator, we characterize how finite-sample effects asymmetrically impact each group's risk, and identify optimal sample allocation strategies. Our results transform the FA frontier from a theoretical construct into a practical tool for policymakers and practitioners who must often design algorithms with limited data.

Multivariate Conformal Prediction via Conformalized Gaussian Scoring

Jul 28, 2025

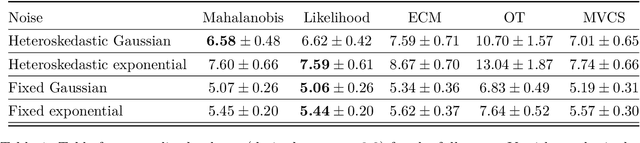

Abstract:While achieving exact conditional coverage in conformal prediction is unattainable without making strong, untestable regularity assumptions, the promise of conformal prediction hinges on finding approximations to conditional guarantees that are realizable in practice. A promising direction for obtaining conditional dependence for conformal sets--in particular capturing heteroskedasticity--is through estimating the conditional density $\mathbb{P}_{Y|X}$ and conformalizing its level sets. Previous work in this vein has focused on nonconformity scores based on the empirical cumulative distribution function (CDF). Such scores are, however, computationally costly, typically requiring expensive sampling methods. To avoid the need for sampling, we observe that the CDF-based score reduces to a Mahalanobis distance in the case of Gaussian scores, yielding a closed-form expression that can be directly conformalized. Moreover, the use of a Gaussian-based score opens the door to a number of extensions of the basic conformal method; in particular, we show how to construct conformal sets with missing output values, refine conformal sets as partial information about $Y$ becomes available, and construct conformal sets on transformations of the output space. Finally, empirical results indicate that our approach produces conformal sets that more closely approximate conditional coverage in multivariate settings compared to alternative methods.
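A closed-form Gaussian score of this kind can be sketched as follows, under simplifying assumptions: outputs are two-dimensional, and some upstream model (hypothetical here) supplies a predicted mean and 2x2 covariance for each input. The function names are illustrative and do not come from the paper. The score is the squared Mahalanobis distance, and the conformal set is the ellipsoid of outputs whose score is within the calibrated radius.

```python
import math


def mahalanobis_sq(y, mean, cov):
    """Squared Mahalanobis distance of a 2-D point under a 2x2 covariance."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    r0, r1 = y[0] - mean[0], y[1] - mean[1]
    # The inverse of [[a, b], [c, d]] is (1 / det) * [[d, -b], [-c, a]].
    return (r0 * (d * r0 - b * r1) + r1 * (-c * r0 + a * r1)) / det


def conformal_radius(cal_scores, alpha):
    """Split-conformal quantile of the calibration scores."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    return math.inf if k > n else sorted(cal_scores)[k - 1]


def in_conformal_set(y, mean, cov, radius):
    """True if y lies inside the ellipsoid {y : score(y) <= radius}."""
    return mahalanobis_sq(y, mean, cov) <= radius


identity = ((1.0, 0.0), (0.0, 1.0))
wide_x = ((4.0, 0.0), (0.0, 1.0))  # larger predicted variance along the first output

# Hypothetical calibration triples: (observed y, predicted mean, predicted covariance).
calibration = [
    ((1.0, 0.0), (0.0, 0.0), identity),  # score 1
    ((0.0, 2.0), (0.0, 0.0), identity),  # score 4
    ((4.0, 0.0), (0.0, 0.0), wide_x),    # score 16 / 4 = 4
    ((3.0, 0.0), (0.0, 0.0), identity),  # score 9
]
scores = [mahalanobis_sq(y, m, s) for y, m, s in calibration]
radius = conformal_radius(scores, alpha=0.25)

# For a test input with predicted mean (1, 1) and covariance wide_x, the set
# stretches further along the first coordinate than along the second.
print(in_conformal_set((6.0, 1.0), (1.0, 1.0), wide_x, radius))  # True  (score 6.25)
print(in_conformal_set((1.0, 4.5), (1.0, 1.0), wide_x, radius))  # False (score 12.25)
```

Because the score has a closed form, no sampling is needed to evaluate membership, and the shape of the set follows the predicted covariance for each input.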

A General Framework for Estimating Preferences Using Response Time Data

Jul 27, 2025

Abstract:We propose a general methodology for recovering preference parameters from data on choices and response times. Our methods yield estimates with fast ($1/n$ for $n$ data points) convergence rates when specialized to the popular Drift Diffusion Model (DDM), but are broadly applicable to generalizations of the DDM as well as to alternative models of decision making that make use of response time data. The paper develops an empirical application to an experiment on intertemporal choice, showing that the use of response times delivers predictive accuracy and matters for the estimation of economically relevant parameters.

Generalization or Hallucination? Understanding Out-of-Context Reasoning in Transformers

Jun 12, 2025

Abstract:Large language models (LLMs) can acquire new knowledge through fine-tuning, but this process exhibits a puzzling duality: models can generalize remarkably from new facts, yet are also prone to hallucinating incorrect information. However, the reasons for this phenomenon remain poorly understood. In this work, we argue that both behaviors stem from a single mechanism known as out-of-context reasoning (OCR): the ability to deduce implications by associating concepts, even those without a causal link. Our experiments across five prominent LLMs confirm that OCR indeed drives both generalization and hallucination, depending on whether the associated concepts are causally related. To build a rigorous theoretical understanding of this phenomenon, we then formalize OCR as a synthetic factual recall task. We empirically show that a one-layer single-head attention-only transformer with factorized output and value matrices can learn to solve this task, while a model with combined weights cannot, highlighting the crucial role of matrix factorization. Our theoretical analysis shows that the OCR capability can be attributed to the implicit bias of gradient descent, which favors solutions that minimize the nuclear norm of the combined output-value matrix. This mathematical structure explains why the model learns to associate facts and implications with high sample efficiency, regardless of whether the correlation is causal or merely spurious. Ultimately, our work provides a theoretical foundation for understanding the OCR phenomenon, offering a new lens for analyzing and mitigating undesirable behaviors from knowledge injection.

Sample Complexity and Representation Ability of Test-time Scaling Paradigms

Jun 05, 2025

Abstract:Test-time scaling paradigms have significantly advanced the capabilities of large language models (LLMs) on complex tasks. Despite their empirical success, theoretical understanding of the sample efficiency of various test-time strategies -- such as self-consistency, best-of-$n$, and self-correction -- remains limited. In this work, we first establish a separation result between two repeated sampling strategies: self-consistency requires $\Theta(1/\Delta^2)$ samples to produce the correct answer, while best-of-$n$ only needs $\Theta(1/\Delta)$, where $\Delta < 1$ denotes the probability gap between the correct and second most likely answers. Next, we present an expressiveness result for the self-correction approach with verifier feedback: it enables Transformers to simulate online learning over a pool of experts at test time. Therefore, a single Transformer architecture can provably solve multiple tasks without prior knowledge of the specific task associated with a user query, extending the representation theory of Transformers from single-task to multi-task settings. Finally, we empirically validate our theoretical results, demonstrating the practical effectiveness of self-correction methods.
