Zhiqiang Cao

GRAB: An LLM-Inspired Sequence-First Click-Through Rate Prediction Modeling Paradigm

Feb 03, 2026

Abstract:Traditional Deep Learning Recommendation Models (DLRMs) face increasing bottlenecks in performance and efficiency, often struggling with generalization and long-sequence modeling. Inspired by the scaling success of Large Language Models (LLMs), we propose Generative Ranking for Ads at Baidu (GRAB), an end-to-end generative framework for Click-Through Rate (CTR) prediction. GRAB integrates a novel Causal Action-aware Multi-channel Attention (CamA) mechanism to effectively capture temporal dynamics and specific action signals within user behavior sequences. Full-scale online deployment demonstrates that GRAB significantly outperforms established DLRMs, delivering a 3.05% increase in revenue and a 3.49% rise in CTR. Furthermore, the model demonstrates desirable scaling behavior: its expressive power shows a monotonic and approximately linear improvement as longer interaction sequences are utilized.

Cross-Domain Bilingual Lexicon Induction via Pretrained Language Models

May 29, 2025

Abstract:Bilingual Lexicon Induction (BLI) generally relies on common-domain data to obtain monolingual word embeddings, which are then aligned to produce the cross-lingual embeddings from which word translation pairs are extracted. However, it is yet to be seen whether these methods are suitable for bilingual lexicon extraction in professional fields. As we can see in Table 1, the classic and efficient BLI approaches, Muse and Vecmap, perform much worse on the Medical dataset than on the Wiki dataset. On one hand, specialized-domain datasets are generally smaller than generic-domain datasets, and specialized words have a lower frequency, which directly affects the translation quality of bilingual dictionaries. On the other hand, static word embeddings are widely used for BLI; however, in some specific fields the meaning of words is greatly influenced by context, so using only static word embeddings may lead to greater bias. In this paper, we propose a new BLI task: using monolingual corpora from the general domain and the target domain to extract domain-specific bilingual dictionaries. Motivated by the ability of pre-trained models, we propose a method that builds on recent work on BLI to obtain better word embeddings. We are the first to introduce Code Switch (Qin et al., 2020) into the cross-domain BLI task; it can match different strategies in different contexts, making the model more suitable for this task. Experimental results show that our method improves over robust BLI baselines on three specific domains by an average of 0.78 points.

Enhancing Large Language Models' Machine Translation via Dynamic Focus Anchoring

May 29, 2025

Abstract:Large language models have demonstrated exceptional performance across multiple crosslingual NLP tasks, including machine translation (MT). However, persistent challenges remain in addressing context-sensitive units (CSUs), such as polysemous words. These CSUs not only affect the local translation accuracy of LLMs, but also affect LLMs' understanding of sentences and tasks, and can even lead to translation failure. To address this problem, we propose a simple but effective method to enhance LLMs' MT capabilities by acquiring CSUs and applying semantic focus. Specifically, we dynamically analyze and identify translation challenges, then incorporate them into LLMs in a structured manner to mitigate mistranslations or misunderstandings of CSUs caused by information flattening. In this way, LLMs are efficiently activated to identify and apply relevant knowledge from their vast data pool, ensuring more accurate translation of difficult terms. On an MT benchmark dataset, our proposed method achieved competitive performance compared to multiple existing open-source MT baseline models. It demonstrates effectiveness and robustness across multiple language pairs, including both similar and distant language pairs. Notably, the proposed method requires no additional model training and enhances LLMs' performance across multiple NLP tasks with minimal resource consumption.

MEF-Explore: Communication-Constrained Multi-Robot Entropy-Field-Based Exploration

May 29, 2025

Abstract:Collaborative multi-robot exploration of unknown environments has become mainstream due to its remarkable performance and efficiency. However, most existing methods assume perfect inter-robot communication during exploration, which is unattainable in real-world settings. Though recent works aim to tackle communication-constrained situations, substantial room for advancement remains in both information sharing and exploration strategy. In this paper, we propose Communication-Constrained Multi-Robot Entropy-Field-Based Exploration (MEF-Explore). The first module of the proposed method is a two-layer inter-robot communication-aware information-sharing strategy. A dynamic graph is used to represent the multi-robot network and to determine whether communication between robots is low-speed or high-speed. Specifically, low-speed communication, which is always accessible between every pair of robots, can only be used to share their current positions. If robots are within a certain range, high-speed communication becomes available for inter-robot map merging. The second module is the entropy-field-based exploration strategy. In particular, robots explore the unknown area in a distributed manner according to novel forms constructed to evaluate the entropies of frontiers and robots. These entropies can also trigger implicit robot rendezvous to enhance inter-robot map merging where feasible. In addition, we include a duration-adaptive goal-assigning module to manage robots' goal assignment. The simulation results demonstrate that MEF-Explore surpasses existing methods in exploration time and success rate in all scenarios. In real-world experiments, our method achieves a 21.32% faster exploration time and a 16.67% higher success rate compared to the baseline.
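
The two-layer communication rule described in this abstract can be illustrated with a minimal sketch. It assumes 2D robot positions and a single fixed distance threshold for the high-speed layer; the function name `communication_links`, the parameter `high_speed_range`, and the example coordinates are hypothetical and are not taken from the paper.

```python
import math

def communication_links(positions, high_speed_range):
    """Build the two edge sets of a communication graph.

    positions: dict mapping robot id -> (x, y)
    high_speed_range: assumed distance threshold for the high-speed layer
    Returns (low_speed_edges, high_speed_edges) as sets of id pairs.
    """
    ids = sorted(positions)
    low_speed, high_speed = set(), set()
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            # Low-speed layer: always available, carries positions only.
            low_speed.add((a, b))
            # High-speed layer: available only within range, allows map merging.
            if math.dist(positions[a], positions[b]) <= high_speed_range:
                high_speed.add((a, b))
    return low_speed, high_speed

# Hypothetical example: r1 and r2 are 5 units apart, r3 is far away.
positions = {"r1": (0.0, 0.0), "r2": (3.0, 4.0), "r3": (20.0, 0.0)}
low, high = communication_links(positions, high_speed_range=6.0)
print(sorted(high))
```

Recomputing both edge sets whenever positions change gives a dynamic graph in the sense used above: the low-speed layer stays fully connected, while the high-speed layer gains and loses edges as robots move.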

Adaptive AI decision interface for autonomous electronic material discovery

Apr 17, 2025

Abstract:AI-powered autonomous experimentation (AI/AE) can accelerate materials discovery, but its effectiveness for electronic materials is hindered by data scarcity from lengthy and complex design-fabricate-test-analyze cycles. Unlike experienced human scientists, even advanced AI algorithms in AI/AE lack the adaptability to make informative real-time decisions with limited datasets. Here, we address this challenge by developing and implementing an AI decision interface on our AI/AE system. The central element of the interface is an AI advisor that performs real-time progress monitoring, data analysis, and interactive human-AI collaboration for actively adapting to experiments in different stages and types. We applied this platform to an emerging type of electronic material, mixed ion-electron conducting polymers (MIECPs), to engineer and study the relationships between multiscale morphology and properties. Using organic electrochemical transistors (OECTs) as the test-bed device for evaluating the mixed-conducting figure of merit, the product of charge-carrier mobility and volumetric capacitance (μC*), our adaptive AI/AE platform achieved a 150% increase in μC* compared to the commonly used spin-coating method, reaching 1,275 F cm⁻¹ V⁻¹ s⁻¹ in just 64 autonomous experimental trials. A study of 10 statistically selected samples identifies two key structural factors for achieving higher volumetric capacitance: larger crystalline lamellar spacing and higher specific surface area, while also uncovering a new polymer polymorph in this material.
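
Because the figure of merit is a simple product, its unit and the implied baseline can be checked by hand. The sketch below assumes the units conventionally used for OECTs (mobility in cm² V⁻¹ s⁻¹, volumetric capacitance in F cm⁻³) and reads "a 150% increase" as a final value 2.5 times the spin-coated baseline. The abstract states neither the units of the two factors nor the baseline value, so the last line is an inference, not a reported number.

```latex
\begin{align*}
  [\mu C^{*}] &= \frac{\mathrm{cm^{2}}}{\mathrm{V\,s}} \cdot \frac{\mathrm{F}}{\mathrm{cm^{3}}}
               = \mathrm{F\,cm^{-1}\,V^{-1}\,s^{-1}} \\
  (\mu C^{*})_{\text{final}} &= (1 + 1.50)\,(\mu C^{*})_{\text{baseline}}
               = 1275\ \mathrm{F\,cm^{-1}\,V^{-1}\,s^{-1}} \\
  (\mu C^{*})_{\text{baseline}} &\approx \frac{1275}{2.5}
               = 510\ \mathrm{F\,cm^{-1}\,V^{-1}\,s^{-1}}
\end{align*}
```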

FoCTTA: Low-Memory Continual Test-Time Adaptation with Focus

Feb 28, 2025

Abstract:Continual adaptation to domain shifts at test time (CTTA) is crucial for enhancing the intelligence of deep learning enabled IoT applications. However, prevailing TTA methods, which typically update all batch normalization (BN) layers, exhibit two memory inefficiencies. First, the reliance on BN layers for adaptation necessitates large batch sizes, leading to high memory usage. Second, updating all BN layers requires storing the activations of all BN layers for backpropagation, exacerbating the memory demand. Both factors lead to substantial memory costs, making existing solutions impractical for IoT devices. In this paper, we present FoCTTA, a low-memory CTTA strategy. The key is to automatically identify and adapt a few drift-sensitive representation layers, rather than blindly updating all BN layers. The shift from BN to representation layers eliminates the need for large batch sizes. Also, by updating only adaptation-critical layers, FoCTTA avoids storing excessive activations. This focused adaptation approach ensures that FoCTTA is not only memory-efficient but also maintains effective adaptation. Evaluations show that FoCTTA improves adaptation accuracy over state-of-the-art methods by 4.5%, 4.9%, and 14.8% on CIFAR10-C, CIFAR100-C, and ImageNet-C under the same memory constraints. Across various batch sizes, FoCTTA reduces memory usage by 3-fold on average, while improving accuracy by 8.1%, 3.6%, and 0.2%, respectively, on the three datasets.

FocusDD: Real-World Scene Infusion for Robust Dataset Distillation

Jan 11, 2025

Abstract:Dataset distillation has emerged as a strategy to compress real-world datasets for efficient training. However, it struggles with large-scale and high-resolution datasets, limiting its practicality. This paper introduces a novel resolution-independent dataset distillation method, Focused Dataset Distillation (FocusDD), which achieves diversity and realism in distilled data by identifying key information patches, thereby ensuring the generalization capability of the distilled dataset across different network architectures. Specifically, FocusDD leverages a pre-trained Vision Transformer (ViT) to extract key image patches, which are then synthesized into a single distilled image. These distilled images, which capture multiple targets, are suitable not only for classification tasks but also for dense tasks such as object detection. To further improve the generalization of the distilled dataset, each synthesized image is augmented with a downsampled view of the original image. Experimental results on the ImageNet-1K dataset demonstrate that, with 100 images per class (IPC), ResNet50 and MobileNet-v2 achieve validation accuracies of 71.0% and 62.6%, respectively, outperforming state-of-the-art methods by 2.8% and 4.7%. Notably, FocusDD is the first method to use distilled datasets for object detection tasks. On the COCO2017 dataset, with an IPC of 50, YOLOv11n and YOLOv11s achieve 24.4% and 32.1% mAP, respectively, further validating the effectiveness of our approach.

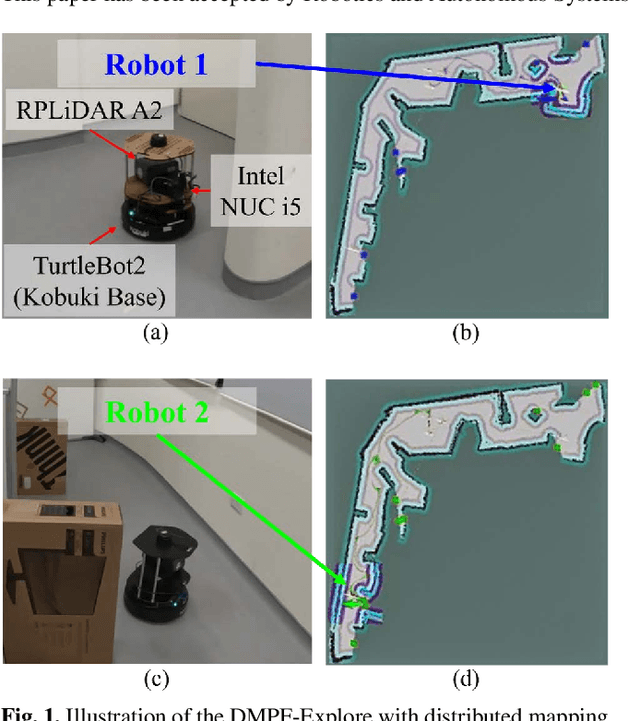

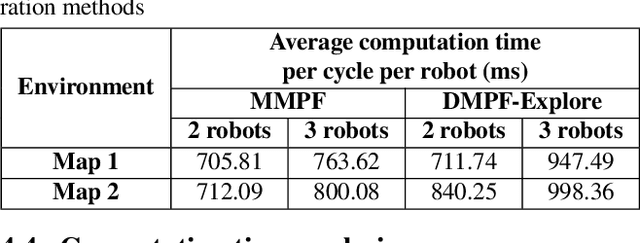

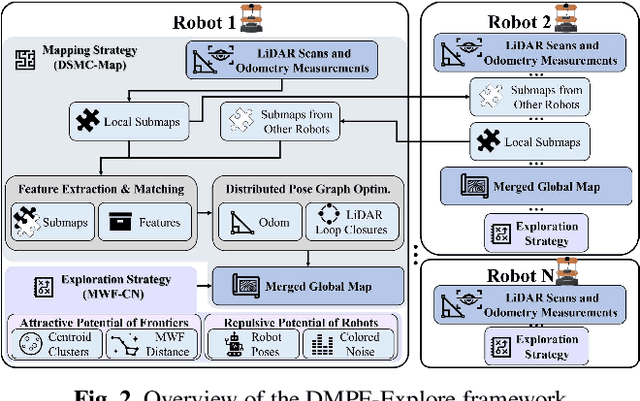

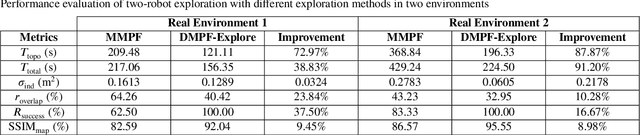

Distributed multi-robot potential-field-based exploration with submap-based mapping and noise-augmented strategy

Jul 10, 2024

Abstract:Multi-robot collaboration has become a necessary component of unknown environment exploration due to its ability to handle various challenging situations. Potential-field-based methods are widely used for autonomous exploration because of their high efficiency and low travel cost. However, exploration speed and collaboration ability remain challenging. Therefore, we propose Distributed Multi-Robot Potential-Field-Based Exploration (DMPF-Explore). In particular, we first present a Distributed Submap-Based Multi-Robot Collaborative Mapping Method (DSMC-Map), which can efficiently estimate the robot trajectories and construct the global map by merging the local maps from each robot. Second, we introduce a Potential-Field-Based Exploration Strategy Augmented with Modified Wave-Front Distance and Colored Noises (MWF-CN), in which the accessible frontier neighborhood is extended and the colored noise enhances exploration performance. The proposed exploration method is deployed in simulation and real-world scenarios. The results show that our approach outperforms existing ones in exploration speed and collaboration ability.
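
For readers unfamiliar with wave-front distance, the sketch below computes the plain (unmodified) version on a small occupancy grid using breadth-first search. It is only an illustration of the underlying idea: the paper's modification and its colored-noise term are not described in the abstract and are not reproduced here. The function name, the 4-connected neighborhood, and the example grid are assumptions.

```python
from collections import deque

def wavefront_distance(grid, start):
    """Breadth-first wave-front distance on a 4-connected occupancy grid.

    grid: list of rows, 0 = free cell, 1 = obstacle (assumed encoding)
    start: (row, col) of the source cell
    Returns a grid of step counts; unreachable or occupied cells stay None.
    """
    rows, cols = len(grid), len(grid[0])
    dist = [[None] * cols for _ in range(rows)]
    dist[start[0]][start[1]] = 0
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and dist[nr][nc] is None:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist

# Hypothetical map: a wall forces a detour around the right-hand side.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
dist = wavefront_distance(grid, (0, 0))
for row in dist:
    print(row)
```

Unlike straight-line distance, the wave-front value accounts for obstacles, which is why the bottom-left cell ends up six steps from the start even though it is only two cells away.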

GMC-Pos: Graph-Based Multi-Robot Coverage Positioning Method

Oct 18, 2023

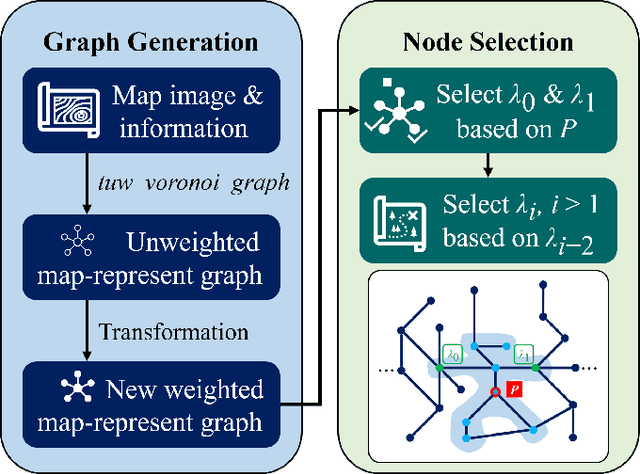

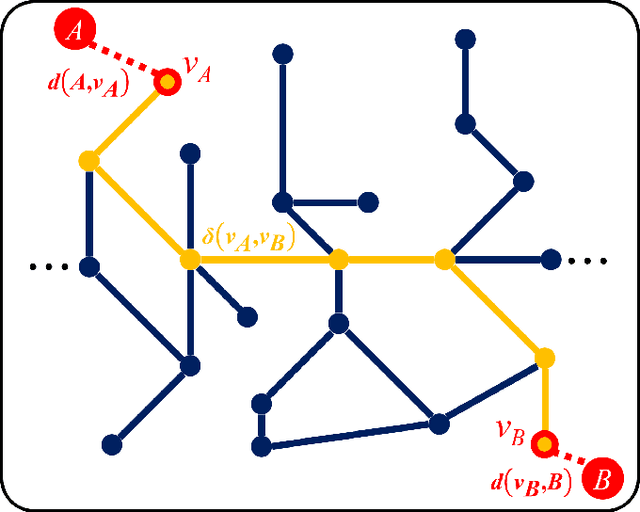

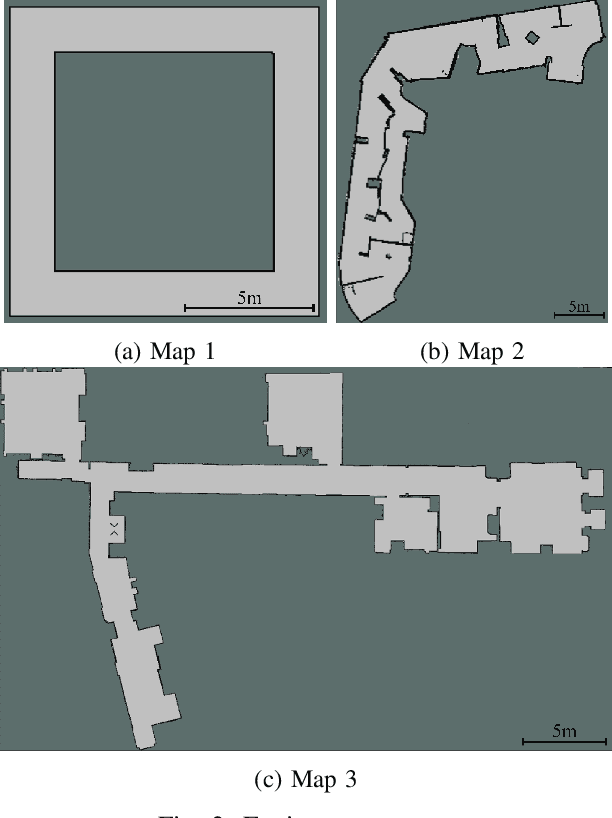

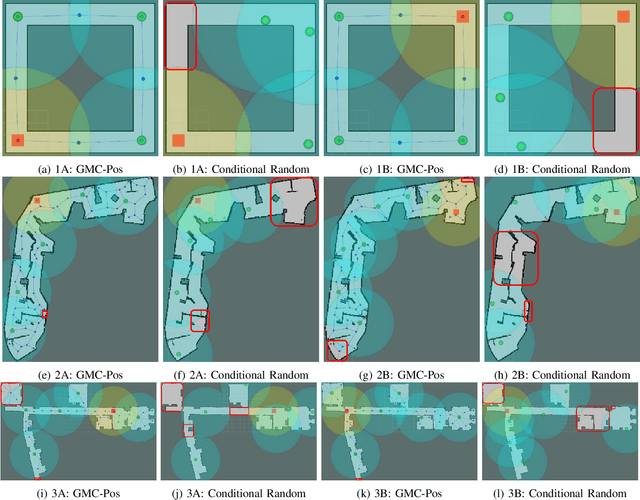

Abstract:Nowadays, several real-world tasks require adequate environment coverage for maintaining communication between multiple robots, for example, target search tasks, environmental monitoring, and post-disaster rescues. In this study, we consider a situation with one human operator and multiple robots, and we assume that each human or robot covers a certain range of area. We want them to collectively maximize their area of coverage. Therefore, in this paper, we propose the Graph-Based Multi-Robot Coverage Positioning Method (GMC-Pos) to find strategic positions for robots that maximize the area coverage. Our novel approach consists of two main modules: graph generation and node selection. First, graph generation represents the environment using a weighted connected graph. Then, we present a novel generalized graph-based distance and use it together with the graph degrees as the conditions for recursive node selection. Our method is deployed in three environments with different settings. The results show that it outperforms the benchmark method by 15.13% to 24.88% in area coverage percentage.

Moving Object Localization based on the Fusion of Ultra-WideBand and LiDAR with a Mobile Robot

Oct 16, 2023

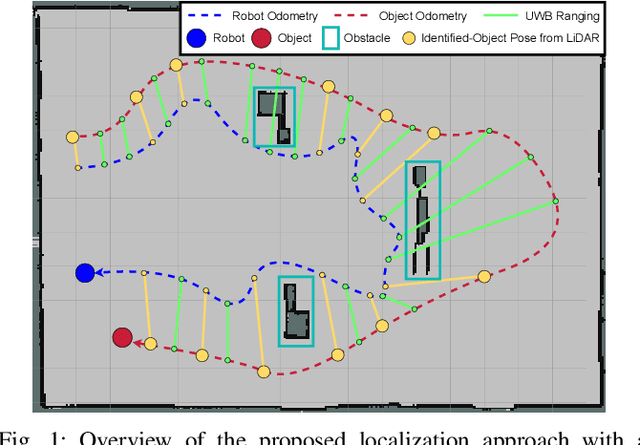

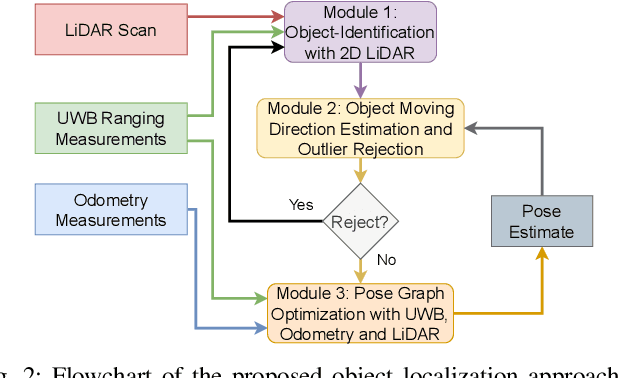

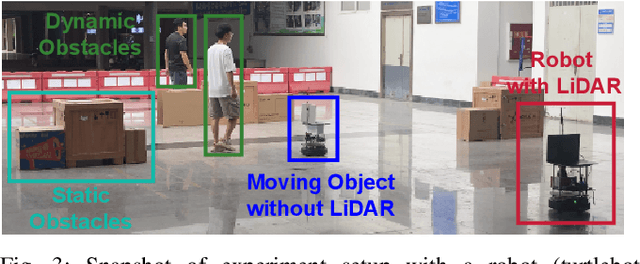

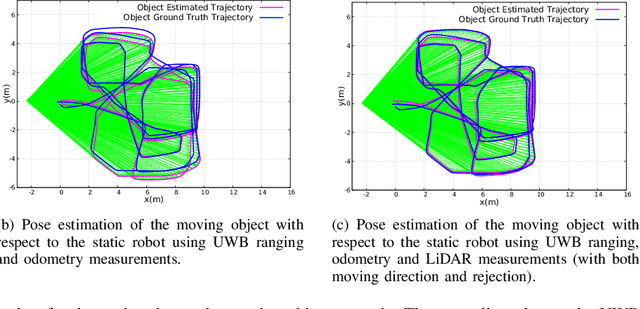

Abstract:Localization of objects is vital for robot-object interaction. Light Detection and Ranging (LiDAR) is an emerging and widely used object localization technique in robotics due to its accurate distance measurement, long range, wide field of view, and robustness in different conditions. However, LiDAR is unable to identify objects when they are obstructed by obstacles, resulting in inaccuracy and noise in localization. To address this issue, we present an approach incorporating LiDAR and Ultra-Wideband (UWB) ranging for object localization. UWB is popular in sensor fusion localization algorithms due to its low weight and low power consumption. In addition, UWB is able to return ranging measurements even when the object is not within line-of-sight. Our approach provides an efficient solution to combine an anonymous optical sensor (LiDAR) with an identity-based radio sensor (UWB) to improve the localization accuracy of the object. Our approach consists of three modules. The first module is an object-identification algorithm that compares successive scans from the LiDAR to detect a moving object in the environment and returns the position whose range is closest to the UWB ranging. The second module estimates the moving object's direction of motion using the previous and current estimated positions from our object-identification module, and it removes suspicious estimations through an outlier rejection criterion. Lastly, we fuse the LiDAR, UWB ranging, and odometry measurements in pose graph optimization (PGO) to recover the entire trajectory of the robot and object. Extensive experiments were performed to evaluate the performance of the proposed approach.
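
The first module described in this abstract can be sketched in a few lines. The sketch assumes both scans are already expressed as 2D points in a common frame, treats a point as "moving" if no point in the previous scan lies within a fixed threshold, and then picks the candidate whose range from the robot best matches the UWB reading. The function names, the 0.2 threshold, and the example data are hypothetical; the paper's actual scan comparison and outlier rejection criterion are not given in the abstract.

```python
import math

def moving_candidates(prev_scan, curr_scan, threshold=0.2):
    """Points in curr_scan with no neighbor in prev_scan within threshold."""
    return [
        p for p in curr_scan
        if all(math.dist(p, q) > threshold for q in prev_scan)
    ]

def pick_by_uwb(candidates, robot_xy, uwb_range):
    """Candidate whose distance to the robot is closest to the UWB range."""
    if not candidates:
        return None
    return min(candidates, key=lambda p: abs(math.dist(p, robot_xy) - uwb_range))

# Hypothetical scans: two static points, one moved point, one new point.
prev_scan = [(1.0, 0.0), (0.0, 2.0), (3.0, 3.0)]
curr_scan = [(1.0, 0.0), (0.0, 2.0), (3.0, 4.0), (-2.0, 0.0)]

candidates = moving_candidates(prev_scan, curr_scan)
estimate = pick_by_uwb(candidates, robot_xy=(0.0, 0.0), uwb_range=5.1)
print(candidates, estimate)
```

The UWB range resolves the ambiguity that LiDAR alone cannot: both changed points look like moving objects, but only one is at a distance consistent with the tagged object.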
