Henry Chan

Physics-Informed Tree Search for High-Dimensional Computational Design

Jan 10, 2026

Abstract:High-dimensional design spaces underpin a wide range of physics-based modeling and computational design tasks in science and engineering. These problems are commonly formulated as constrained black-box searches over rugged objective landscapes, where function evaluations are expensive, and gradients are unavailable or unreliable. Conventional global search engines and optimizers struggle in such settings due to the exponential scaling of design spaces, the presence of multiple local basins, and the absence of physical guidance in sampling. We present a physics-informed Monte Carlo Tree Search (MCTS) framework that extends policy-driven tree-based reinforcement concepts to continuous, high-dimensional scientific optimization. Our method integrates population-level decision trees with surrogate-guided directional sampling, reward shaping, and hierarchical switching between global exploration and local exploitation. These ingredients allow efficient traversal of non-convex, multimodal landscapes where physically meaningful optima are sparse. We benchmark our approach against standard global optimization baselines on a suite of canonical test functions, demonstrating superior or comparable performance in terms of convergence, robustness, and generalization. Beyond synthetic tests, we demonstrate physics-consistent applicability to (i) crystal structure optimization from clusters to bulk, (ii) fitting of classical interatomic potentials, and (iii) constrained engineering design problems. Across all cases, the method converges with high fidelity and evaluation efficiency while preserving physical constraints. Overall, our work establishes physics-informed tree search as a scalable and interpretable paradigm for computational design and high-dimensional scientific optimization, bridging discrete decision-making frameworks with continuous search in scientific design workflows.

Adaptive AI decision interface for autonomous electronic material discovery

Apr 17, 2025

Abstract:AI-powered autonomous experimentation (AI/AE) can accelerate materials discovery but its effectiveness for electronic materials is hindered by data scarcity from lengthy and complex design-fabricate-test-analyze cycles. Unlike experienced human scientists, even advanced AI algorithms in AI/AE lack the adaptability to make informative real-time decisions with limited datasets. Here, we address this challenge by developing and implementing an AI decision interface on our AI/AE system. The central element of the interface is an AI advisor that performs real-time progress monitoring, data analysis, and interactive human-AI collaboration for actively adapting to experiments in different stages and types. We applied this platform to an emerging type of electronic materials -- mixed ion-electron conducting polymers (MIECPs) -- to engineer and study the relationships between multiscale morphology and properties. Using organic electrochemical transistors (OECT) as the testing-bed device for evaluating the mixed-conducting figure-of-merit -- the product of charge-carrier mobility and the volumetric capacitance (μC*), our adaptive AI/AE platform achieved a 150% increase in μC* compared to the commonly used spin-coating method, reaching 1,275 F cm⁻¹ V⁻¹ s⁻¹ in just 64 autonomous experimental trials. A study of 10 statistically selected samples identifies two key structural factors for achieving higher volumetric capacitance: larger crystalline lamellar spacing and higher specific surface area, while also uncovering a new polymer polymorph in this material.

AutoPhaseNN: Unsupervised Physics-aware Deep Learning of 3D Nanoscale Coherent Imaging

Sep 28, 2021

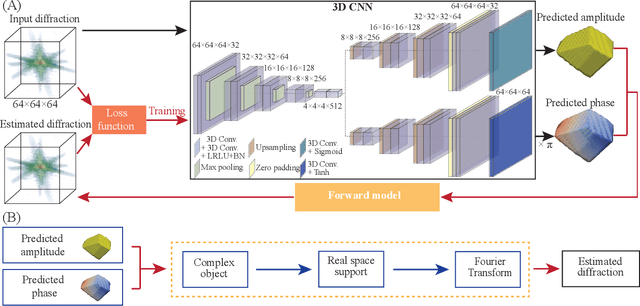

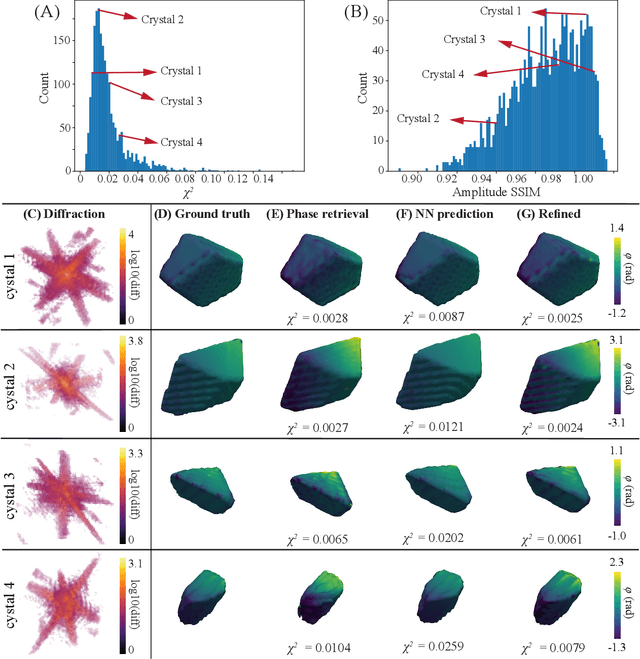

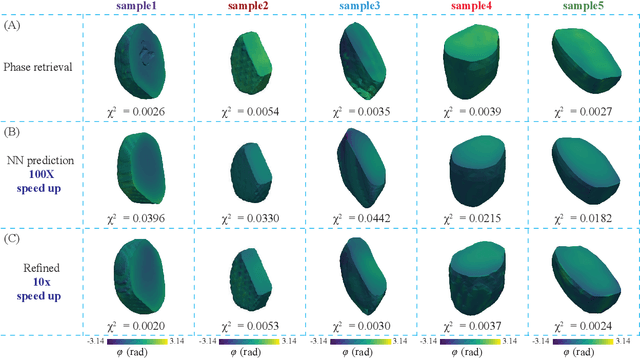

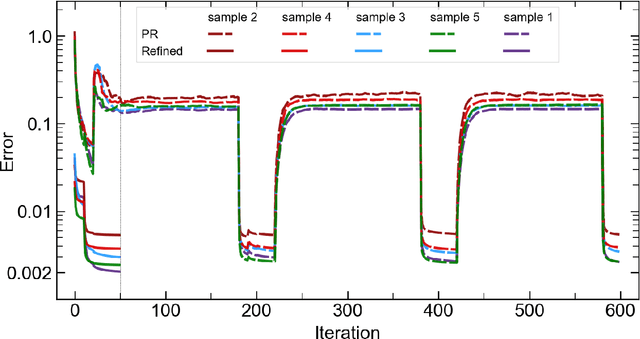

Abstract:The problem of phase retrieval, or the algorithmic recovery of lost phase information from measured intensity alone, underlies various imaging methods from astronomy to nanoscale imaging. Traditional methods of phase retrieval are iterative in nature, and are therefore computationally expensive and time consuming. More recently, deep learning (DL) models have been developed to either provide learned priors to iterative phase retrieval or in some cases completely replace phase retrieval with networks that learn to recover the lost phase information from measured intensity alone. However, such models require vast amounts of labeled data, which can only be obtained through simulation or performing computationally prohibitive phase retrieval on hundreds of or even thousands of experimental datasets. Using a 3D nanoscale X-ray imaging modality (Bragg Coherent Diffraction Imaging or BCDI) as a representative technique, we demonstrate AutoPhaseNN, a DL-based approach which learns to solve the phase problem without labeled data. By incorporating the physics of the imaging technique into the DL model during training, AutoPhaseNN learns to invert 3D BCDI data from reciprocal space to real space in a single shot without ever being shown real space images. Once trained, AutoPhaseNN is about one hundred times faster than traditional iterative phase retrieval methods while providing comparable image quality.
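The idea of training without labeled data by building the imaging physics into the model can be illustrated with a toy loss. The sketch below is not AutoPhaseNN's network or loss, which the abstract does not specify. It assumes a 1D object, a plain discrete Fourier transform as the forward model, and a mean absolute error on diffraction magnitudes; the names `dft` and `physics_loss` and the sample values are hypothetical. It shows that a candidate real-space object can be scored against measured magnitudes alone, without any reference to the true object.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform of a 1D complex sequence."""
    n = len(signal)
    return [
        sum(signal[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
        for f in range(n)
    ]


def physics_loss(amplitude, phase, measured_magnitude):
    """Mean absolute mismatch between simulated and measured magnitudes.

    The candidate object (amplitude, phase) is pushed through the forward
    model (here a DFT) and only the magnitudes are compared, so no
    real-space label is needed.
    """
    obj = [a * cmath.exp(1j * p) for a, p in zip(amplitude, phase)]
    simulated = [abs(c) for c in dft(obj)]
    total = sum(abs(s - m) for s, m in zip(simulated, measured_magnitude))
    return total / len(simulated)


# Hypothetical object, used here only to synthesize a "measurement".
true_amp = [0.0, 1.0, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0]
true_phase = [0.0, 0.3, -0.2, 0.1, 0.0, 0.0, 0.0, 0.0]
true_obj = [a * cmath.exp(1j * p) for a, p in zip(true_amp, true_phase)]
measured = [abs(c) for c in dft(true_obj)]

# A candidate with the right phase matches the data; a flat-phase guess does not.
loss_true = physics_loss(true_amp, true_phase, measured)
loss_wrong = physics_loss(true_amp, [0.0] * 8, measured)
print(loss_true, loss_wrong)
```

In a learned setting, a network would output the amplitude and phase, and a loss of this kind would be minimized over many measured patterns. Magnitude-only losses are unchanged by a constant phase offset, so the recovered phase is defined only up to such ambiguities.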

Real-time 3D Nanoscale Coherent Imaging via Physics-aware Deep Learning

Jun 16, 2020

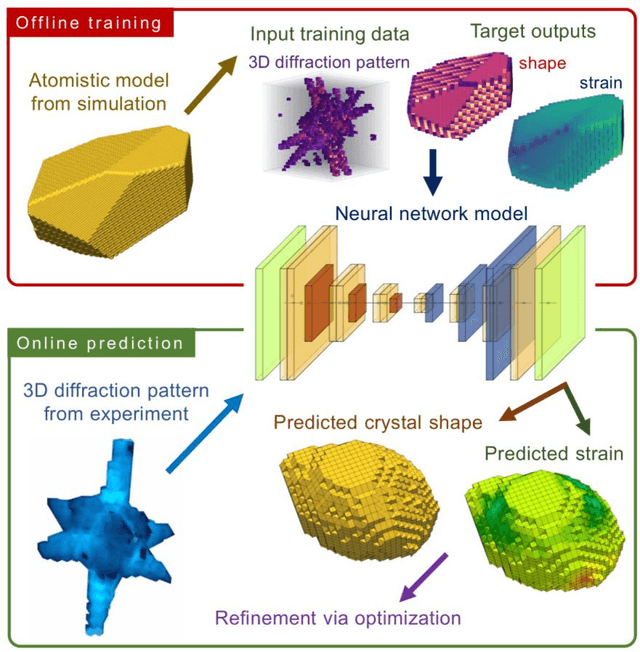

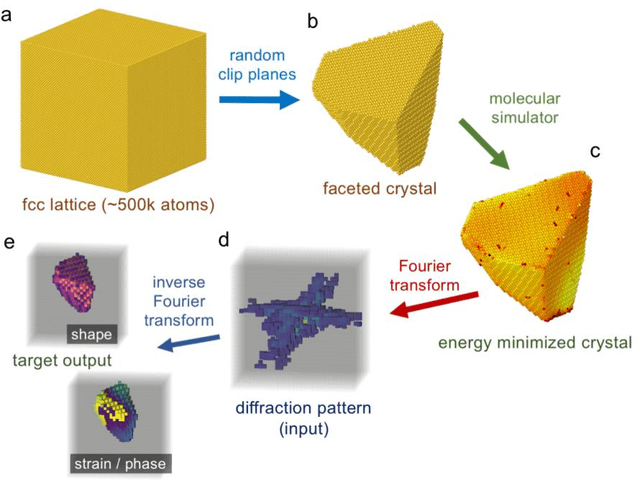

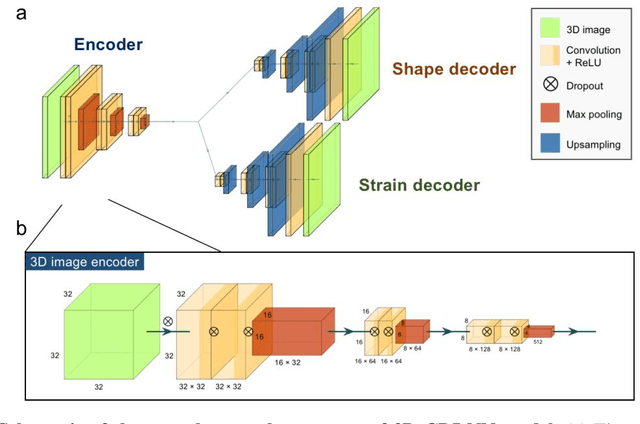

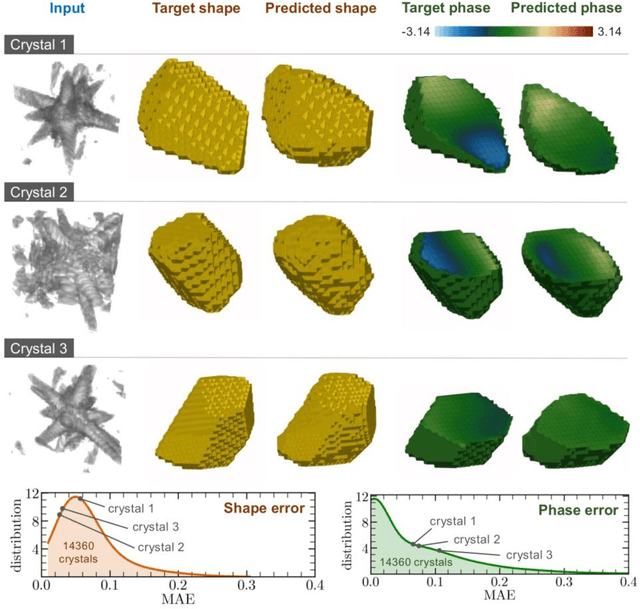

Abstract:Phase retrieval, the problem of recovering lost phase information from measured intensity alone, is an inverse problem that is widely faced in various imaging modalities ranging from astronomy to nanoscale imaging. The current process of phase recovery is iterative in nature. As a result, the image formation is time-consuming and computationally expensive, precluding real-time imaging. Here, we use 3D nanoscale X-ray imaging as a representative example to develop a deep learning model to address this phase retrieval problem. We introduce 3D-CDI-NN, a deep convolutional neural network and differential programming framework trained to predict 3D structure and strain solely from input 3D X-ray coherent scattering data. Our networks are designed to be "physics-aware" in multiple aspects: the physics of the X-ray scattering process is explicitly enforced in the training of the network, and the training data are drawn from atomistic simulations that are representative of the physics of the material. We further refine the neural network prediction through a physics-based optimization procedure to enable maximum accuracy at lowest computational cost. 3D-CDI-NN can invert a 3D coherent diffraction pattern to real-space structure and strain hundreds of times faster than traditional iterative phase retrieval methods, with negligible loss in accuracy. Our integrated machine learning and differential programming solution to the phase retrieval problem is broadly applicable across inverse problems in other application areas.

Cost-sensitive detection with variational autoencoders for environmental acoustic sensing

Dec 07, 2017

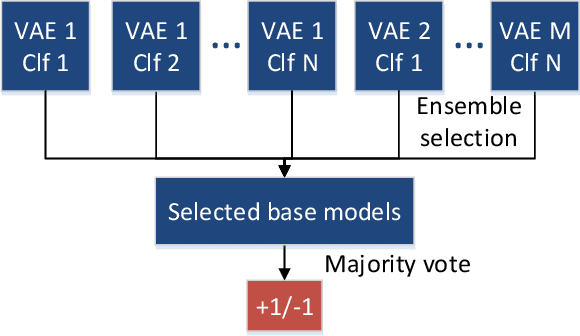

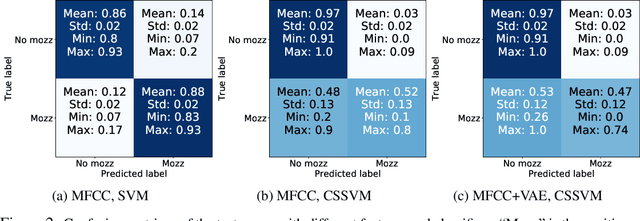

Abstract:Environmental acoustic sensing involves the retrieval and processing of audio signals to better understand our surroundings. While large-scale acoustic data make manual analysis infeasible, they provide a suitable playground for machine learning approaches. Most existing machine learning techniques developed for environmental acoustic sensing do not provide flexible control of the trade-off between the false positive rate and the false negative rate. This paper presents a cost-sensitive classification paradigm, in which the hyper-parameters of classifiers and the structure of variational autoencoders are selected in a principled Neyman-Pearson framework. We examine the performance of the proposed approach using a dataset from the HumBug project which aims to detect the presence of mosquitoes using sound collected by simple embedded devices.
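The trade-off control described above can be illustrated with a minimal Neyman-Pearson style rule: cap the false positive rate at a chosen level, then accept whatever false negative rate follows. The sketch below is not the paper's procedure, which selects classifier hyper-parameters and autoencoder structure. It assumes a single decision threshold on classifier scores, and the function names and score values are hypothetical.

```python
def np_threshold(negative_scores, alpha):
    """Smallest threshold whose empirical false positive rate is <= alpha.

    A sample is flagged positive when its score is strictly greater than
    the returned threshold.
    """
    ordered = sorted(negative_scores)
    n = len(ordered)
    allowed = int(alpha * n)  # negatives permitted above the threshold
    if allowed >= n:
        return float("-inf")  # every negative may be flagged
    return ordered[n - allowed - 1]


def rate_above(scores, threshold):
    """Fraction of scores strictly above the threshold."""
    return sum(s > threshold for s in scores) / len(scores)


# Hypothetical validation scores from some detector (higher = more mosquito-like).
negatives = [0.05, 0.1, 0.15, 0.2, 0.25, 0.3, 0.35, 0.4, 0.6, 0.7]
positives = [0.3, 0.45, 0.5, 0.8, 0.9]

threshold = np_threshold(negatives, alpha=0.2)
fpr = rate_above(negatives, threshold)
fnr = 1.0 - rate_above(positives, threshold)
print(threshold, fpr, fnr)
```

Lowering `alpha` raises the threshold, which trades fewer false alarms for more missed detections. The bound holds on the validation scores used to set it and is only an estimate for unseen data.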

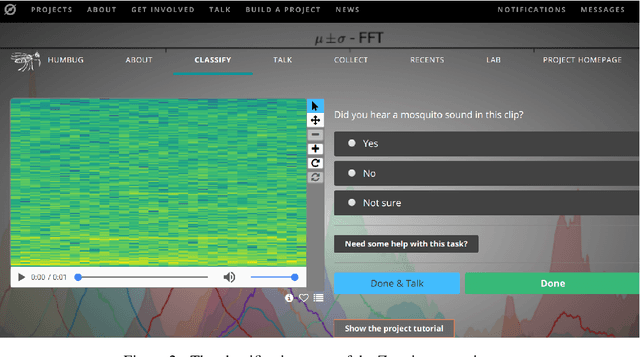

Mosquito detection with low-cost smartphones: data acquisition for malaria research

Dec 06, 2017

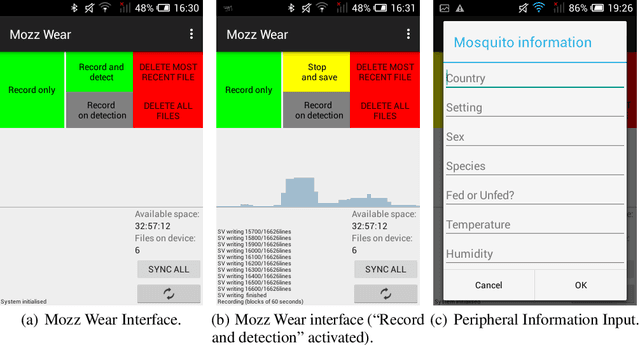

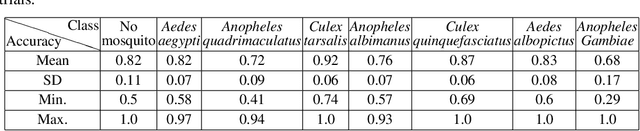

Abstract:Mosquitoes are a major vector for malaria, causing hundreds of thousands of deaths in the developing world each year. Not only is the prevention of mosquito bites of paramount importance to the reduction of malaria transmission cases, but understanding in more forensic detail the interplay between malaria, mosquito vectors, vegetation, standing water and human populations is crucial to the deployment of more effective interventions. Typically the presence and detection of malaria-vectoring mosquitoes is only quantified by hand-operated insect traps or signified by the diagnosis of malaria. If we are to gather timely, large-scale data to improve this situation, we need to automate the process of mosquito detection and classification as much as possible. In this paper, we present a candidate mobile sensing system that acts as both a portable early warning device and an automatic acoustic data acquisition pipeline to help fuel scientific inquiry and policy. The machine learning algorithm that powers the mobile system achieves excellent off-line multi-species detection performance while remaining computationally efficient. Further, we have conducted preliminary live mosquito detection tests using low-cost mobile phones and achieved promising results. The deployment of this system for field usage in Southeast Asia and Africa is planned in the near future. In order to accelerate processing of field recordings and labelling of collected data, we employ a citizen science platform in conjunction with automated methods, the former implemented using the Zooniverse platform, allowing crowdsourcing on a grand scale.
