Mathew J. Cherukara

Demonstration of an AI-driven workflow for dynamic x-ray spectroscopy

Apr 23, 2025

Abstract:X-ray absorption near edge structure (XANES) spectroscopy is a powerful technique for characterizing the chemical state and symmetry of individual elements within materials, but requires collecting data at many energy points which can be time-consuming. While adaptive sampling methods exist for efficiently collecting spectroscopic data, they often lack domain-specific knowledge about XANES spectra structure. Here we demonstrate a knowledge-injected Bayesian optimization approach for adaptive XANES data collection that incorporates understanding of spectral features like absorption edges and pre-edge peaks. We show this method accurately reconstructs the absorption edge of XANES spectra using only 15-20% of the measurement points typically needed for conventional sampling, while maintaining the ability to determine the x-ray energy of the sharp peak after the absorption edge with errors less than 0.03 eV, the absorption edge with errors less than 0.1 eV, and overall root-mean-square errors less than 0.005 compared to traditionally sampled spectra. Our experiments on battery materials and catalysts demonstrate the method's effectiveness for both static and dynamic XANES measurements, improving data collection efficiency and enabling better time resolution for tracking chemical changes. This approach advances the degree of automation in XANES experiments, reducing the common errors of under- or over-sampling points near the absorption edge and enabling dynamic experiments that require high temporal resolution or limited measurement time.

Ptychographic Image Reconstruction from Limited Data via Score-Based Diffusion Models with Physics-Guidance

Feb 26, 2025

Abstract:Ptychography is a computational imaging technique that achieves high spatial resolution over large fields of view. It involves scanning a coherent beam across overlapping regions and recording diffraction patterns. Conventional reconstruction algorithms require substantial overlap, increasing data volume and experimental time. We propose a reconstruction method employing a physics-guided score-based diffusion model. Our approach trains a diffusion model on representative object images to learn an object distribution prior. During reconstruction, we modify the reverse diffusion process to enforce data consistency, guiding reverse diffusion toward a physically plausible solution. This method requires a single pretraining phase, allowing it to generalize across varying scan overlap ratios and positions. Our results demonstrate that the proposed method achieves high-fidelity reconstructions with only a 20% overlap, while the widely employed rPIE method requires a 62% overlap to achieve similar accuracy. This represents a significant reduction in data requirements, offering an alternative to conventional techniques.

Predicting ptychography probe positions using single-shot phase retrieval neural network

May 31, 2024

Abstract:Ptychography is a powerful imaging technique that is used in a variety of fields, including materials science, biology, and nanotechnology. However, the accuracy of the reconstructed ptychography image is highly dependent on the accuracy of the recorded probe positions, which often contain errors. These errors are typically corrected jointly with phase retrieval through numerical optimization approaches. When the error accumulates along the scan path or when the error magnitude is large, these approaches may not converge to a satisfactory result. We propose a fundamentally new approach for ptychography probe position prediction for data with large position errors, where a neural network is used to perform single-shot phase retrieval on individual diffraction patterns, yielding the object image at each scan point. The pairwise offsets among these images are then found using a robust image registration method, and the results are combined to yield the complete scan path by constructing and solving a linear equation. We show that our method can achieve good position prediction accuracy for data with large and accumulating errors on the order of $10^2$ pixels, a magnitude that often makes optimization-based algorithms fail to converge. For ptychography instruments without sophisticated position control equipment such as interferometers, our method has significant practical potential.
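The last step described above, combining pairwise offsets into a full scan path by solving a linear equation, can be illustrated with a small least-squares sketch. This is not the authors' implementation: the function name `positions_from_offsets`, the choice to pin the first position at the origin, and the toy data are all assumptions made for illustration, and the offsets here are exact rather than produced by image registration.

```python
import numpy as np

def positions_from_offsets(n_points, pairs, offsets):
    """Least-squares scan positions from pairwise offsets (hypothetical helper).

    pairs[k] = (i, j) and offsets[k] approximates pos[j] - pos[i].
    Position 0 is pinned to the origin to remove the global translation
    ambiguity (an assumption of this sketch).
    """
    offsets = np.asarray(offsets, dtype=float)
    A = np.zeros((len(pairs) + 1, n_points))
    b = np.zeros((len(pairs) + 1, offsets.shape[1]))
    for k, (i, j) in enumerate(pairs):
        A[k, i] = -1.0   # each row encodes pos[j] - pos[i] = offset
        A[k, j] = 1.0
        b[k] = offsets[k]
    A[-1, 0] = 1.0       # extra row: pos[0] = 0
    pos, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pos

# Toy example: four scan points with exact offsets between selected pairs.
true_pos = np.array([[0.0, 0.0], [5.0, 1.0], [9.0, 3.0], [14.0, 2.0]])
pairs = [(0, 1), (1, 2), (2, 3), (0, 2), (1, 3)]
offsets = [true_pos[j] - true_pos[i] for i, j in pairs]
recovered = positions_from_offsets(len(true_pos), pairs, offsets)
```

Using more pairs than strictly necessary makes the system overdetermined, so noisy registration results would be averaged in the least-squares sense rather than accumulated along the path.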

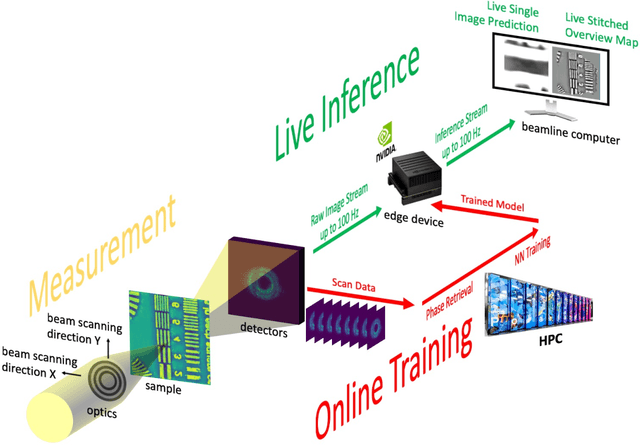

Deep learning at the edge enables real-time streaming ptychographic imaging

Sep 20, 2022

Abstract:Coherent microscopy techniques provide an unparalleled multi-scale view of materials across scientific and technological fields, from structural materials to quantum devices, from integrated circuits to biological cells. Driven by the construction of brighter sources and high-rate detectors, coherent X-ray microscopy methods like ptychography are poised to revolutionize nanoscale materials characterization. However, associated significant increases in data and compute needs mean that conventional approaches no longer suffice for recovering sample images in real-time from high-speed coherent imaging experiments. Here, we demonstrate a workflow that leverages artificial intelligence at the edge and high-performance computing to enable real-time inversion on X-ray ptychography data streamed directly from a detector at up to 2 kHz. The proposed AI-enabled workflow eliminates the sampling constraints imposed by traditional ptychography, allowing low dose imaging using orders of magnitude less data than required by traditional methods.

Real-time X-ray Phase-contrast Imaging Using SPINNet -- A Speckle-based Phase-contrast Imaging Neural Network

Jan 18, 2022

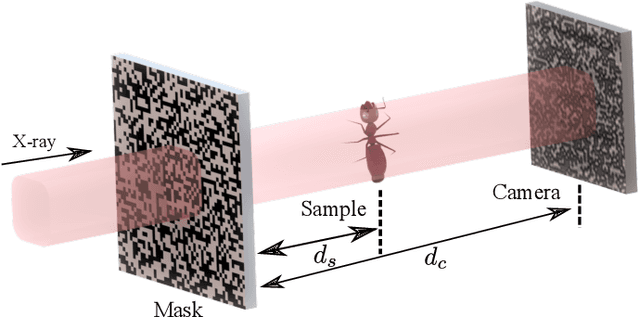

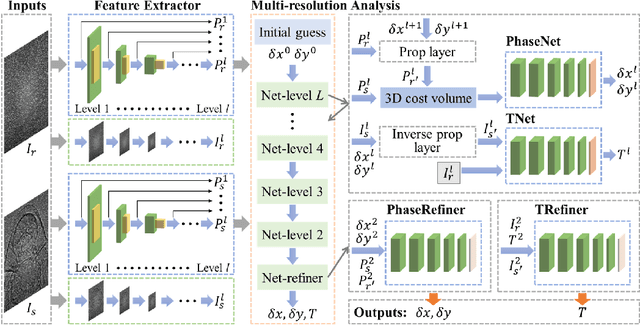

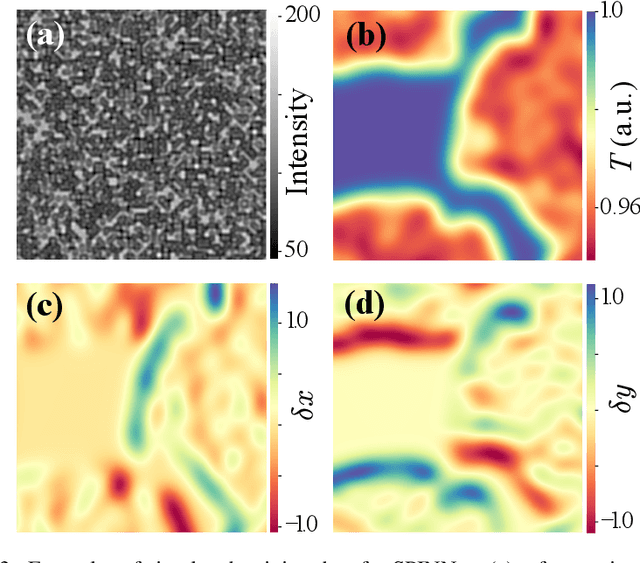

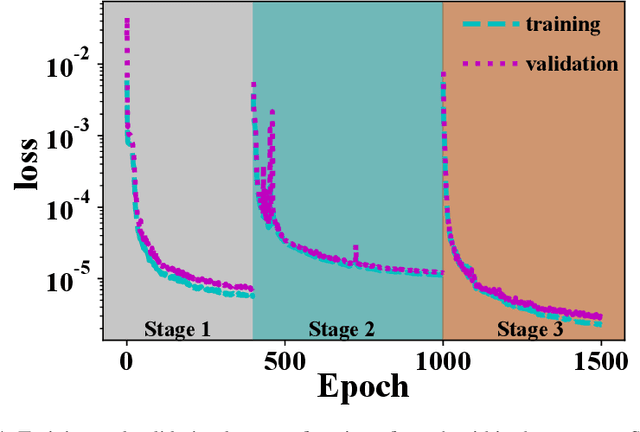

Abstract:X-ray phase-contrast imaging has become indispensable for visualizing samples with low absorption contrast. In this regard, speckle-based techniques have shown significant advantages in spatial resolution, phase sensitivity, and implementation flexibility compared with traditional methods. However, their computational cost has hindered their wider adoption. By exploiting the power of deep learning, we developed a new speckle-based phase-contrast imaging neural network (SPINNet) that boosts the phase retrieval speed by at least two orders of magnitude compared to existing methods. To achieve this performance, we combined SPINNet with a novel coded-mask-based technique, an enhanced version of the speckle-based method. Using this scheme, we demonstrate a simultaneous reconstruction of absorption and phase images on the order of 100 ms, where a traditional correlation-based analysis would take several minutes even with a cluster. In addition to significant improvement in speed, our experimental results show that the imaging resolution and phase retrieval quality of SPINNet outperform existing single-shot speckle-based methods. Furthermore, we successfully demonstrate its application in 3D X-ray phase-contrast tomography. Our result shows that SPINNet could enable many applications requiring high-resolution and fast data acquisition and processing, such as in-situ and in-operando 2D and 3D phase-contrast imaging and real-time at-wavelength metrology and wavefront sensing.

AutoPhaseNN: Unsupervised Physics-aware Deep Learning of 3D Nanoscale Coherent Imaging

Sep 28, 2021

Abstract:The problem of phase retrieval, or the algorithmic recovery of lost phase information from measured intensity alone, underlies various imaging methods from astronomy to nanoscale imaging. Traditional methods of phase retrieval are iterative in nature, and are therefore computationally expensive and time-consuming. More recently, deep learning (DL) models have been developed to either provide learned priors to iterative phase retrieval or in some cases completely replace phase retrieval with networks that learn to recover the lost phase information from measured intensity alone. However, such models require vast amounts of labeled data, which can only be obtained through simulation or by performing computationally prohibitive phase retrieval on hundreds or even thousands of experimental datasets. Using a 3D nanoscale X-ray imaging modality (Bragg Coherent Diffraction Imaging or BCDI) as a representative technique, we demonstrate AutoPhaseNN, a DL-based approach which learns to solve the phase problem without labeled data. By incorporating the physics of the imaging technique into the DL model during training, AutoPhaseNN learns to invert 3D BCDI data from reciprocal space to real space in a single shot without ever being shown real space images. Once trained, AutoPhaseNN is about one hundred times faster than traditional iterative phase retrieval methods while providing comparable image quality.
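To make the idea of training without real-space labels concrete, the sketch below computes a loss purely in reciprocal space: a predicted real-space amplitude and phase are pushed through a Fourier-transform forward model and compared with the measured diffraction magnitude. The abstract does not specify the actual loss or forward model used by AutoPhaseNN, so the function `diffraction_loss`, the mean-absolute-error form, and the toy object are assumptions.

```python
import numpy as np

def diffraction_loss(amplitude, phase, measured_magnitude):
    """Mean absolute mismatch between predicted and measured diffraction
    magnitudes (hypothetical loss; no real-space ground truth is used)."""
    obj = amplitude * np.exp(1j * phase)                  # complex real-space object
    predicted = np.abs(np.fft.fftn(obj, norm="ortho"))    # forward model: |FFT|
    return float(np.mean(np.abs(predicted - measured_magnitude)))

# Toy 3D object: a small block with random phase inside a 16^3 volume.
rng = np.random.default_rng(0)
true_amp = np.zeros((16, 16, 16))
true_amp[6:10, 6:10, 6:10] = 1.0
true_phase = rng.uniform(-0.5, 0.5, size=true_amp.shape) * true_amp
measured = np.abs(np.fft.fftn(true_amp * np.exp(1j * true_phase), norm="ortho"))

loss_true = diffraction_loss(true_amp, true_phase, measured)            # correct object
loss_wrong = diffraction_loss(np.ones_like(true_amp), true_phase * 0.0, measured)  # bad guess
```

In a training setting the same comparison would be written in an automatic-differentiation framework so that the mismatch can be backpropagated into the network weights; NumPy is used here only to show the forward computation.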

Real-time 3D Nanoscale Coherent Imaging via Physics-aware Deep Learning

Jun 16, 2020

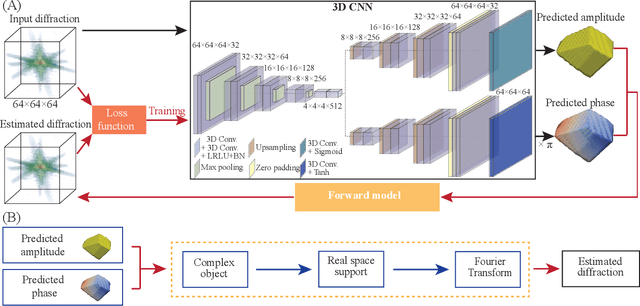

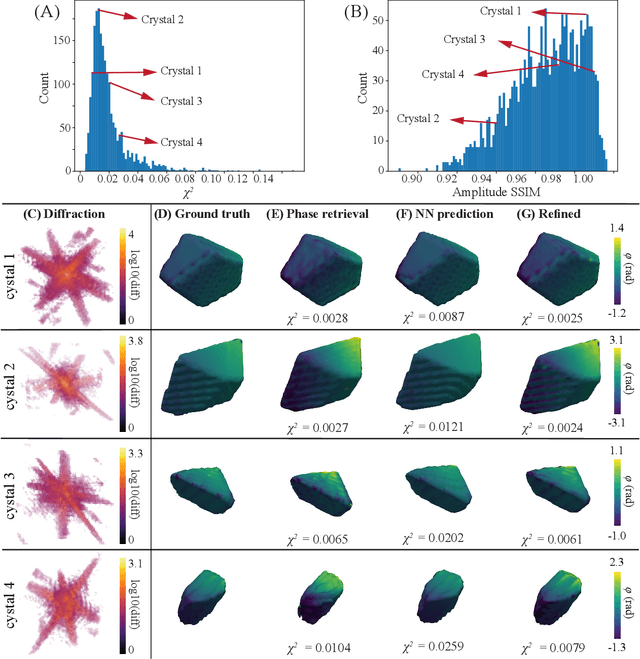

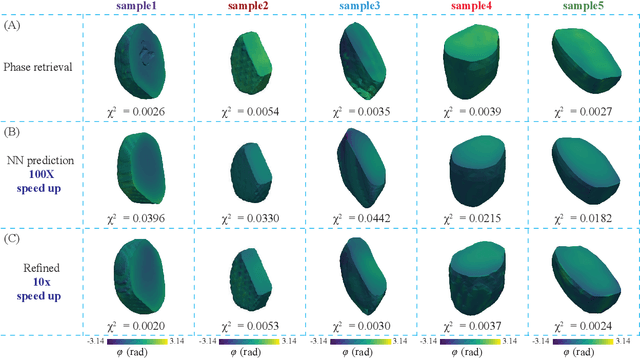

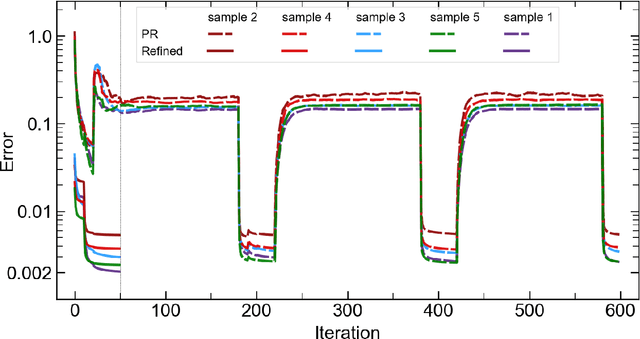

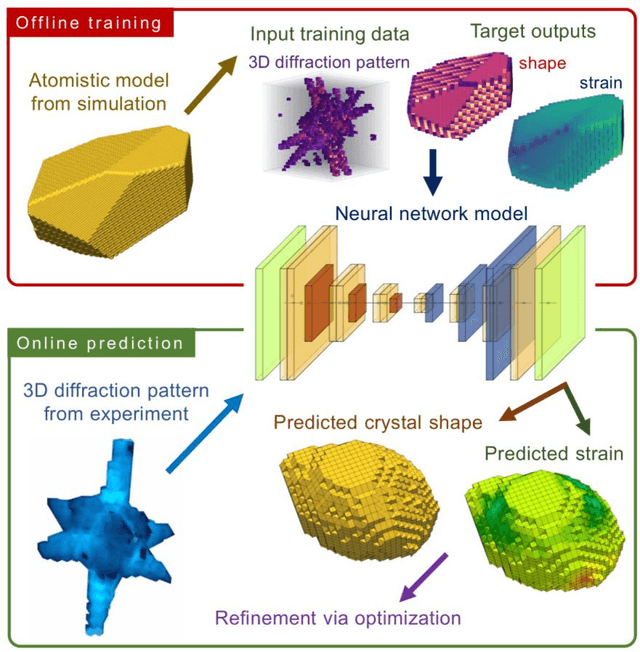

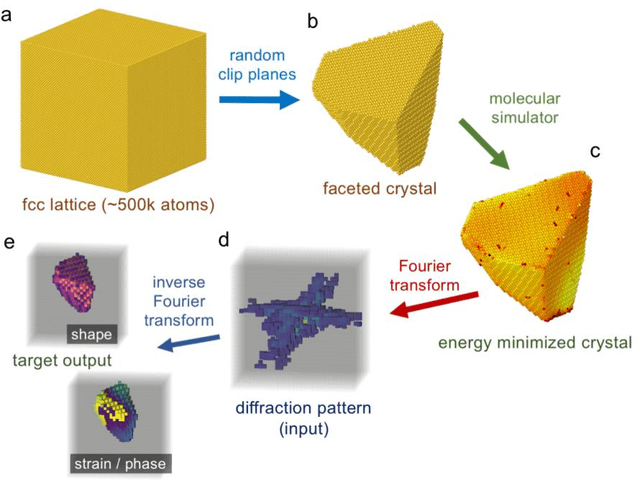

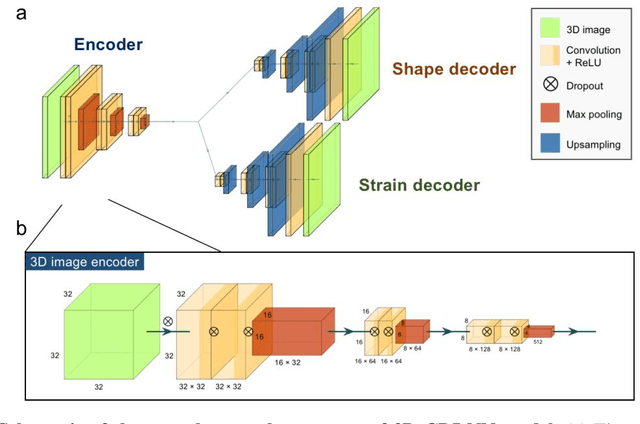

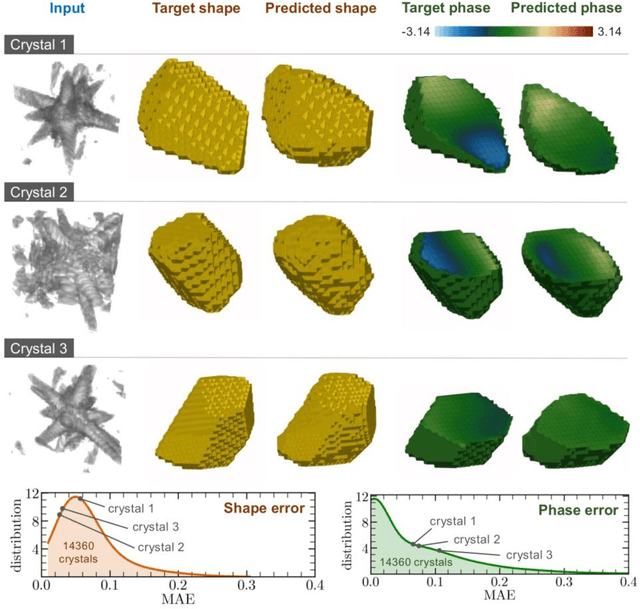

Abstract:Phase retrieval, the problem of recovering lost phase information from measured intensity alone, is an inverse problem that is widely faced in various imaging modalities ranging from astronomy to nanoscale imaging. The current process of phase recovery is iterative in nature. As a result, the image formation is time-consuming and computationally expensive, precluding real-time imaging. Here, we use 3D nanoscale X-ray imaging as a representative example to develop a deep learning model to address this phase retrieval problem. We introduce 3D-CDI-NN, a deep convolutional neural network and differential programming framework trained to predict 3D structure and strain solely from input 3D X-ray coherent scattering data. Our networks are designed to be "physics-aware" in two respects: the physics of the X-ray scattering process is explicitly enforced in the training of the network, and the training data are drawn from atomistic simulations that are representative of the physics of the material. We further refine the neural network prediction through a physics-based optimization procedure to enable maximum accuracy at lowest computational cost. 3D-CDI-NN can invert a 3D coherent diffraction pattern to real-space structure and strain hundreds of times faster than traditional iterative phase retrieval methods, with negligible loss in accuracy. Our integrated machine learning and differential programming solution to the phase retrieval problem is broadly applicable across inverse problems in other application areas.

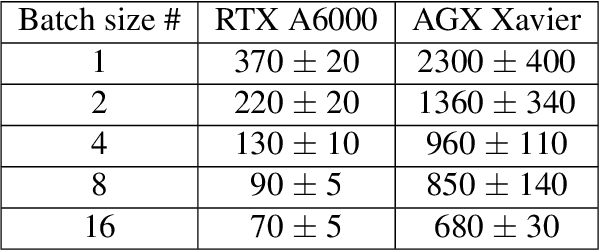

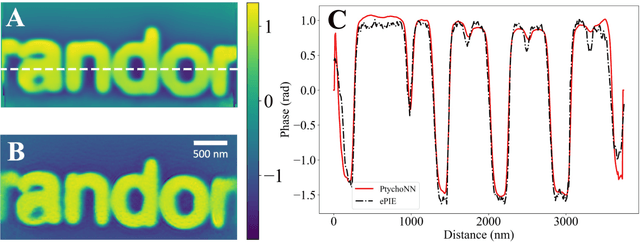

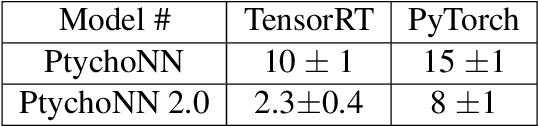

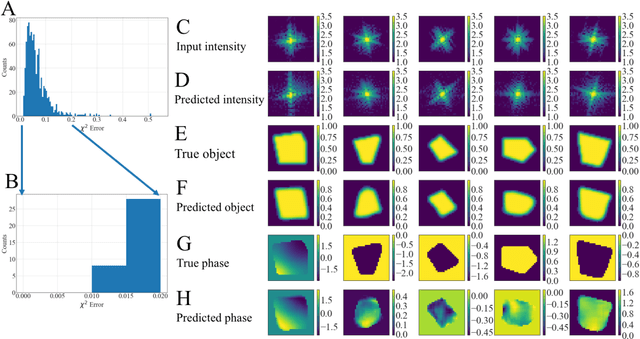

Real-time sparse-sampled Ptychographic imaging through deep neural networks

Apr 15, 2020

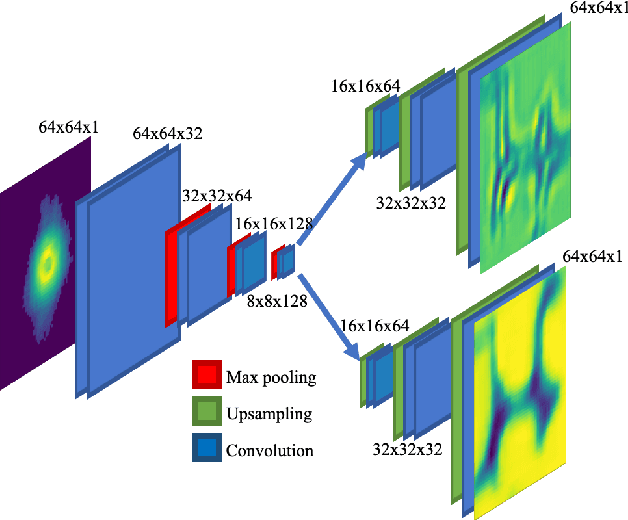

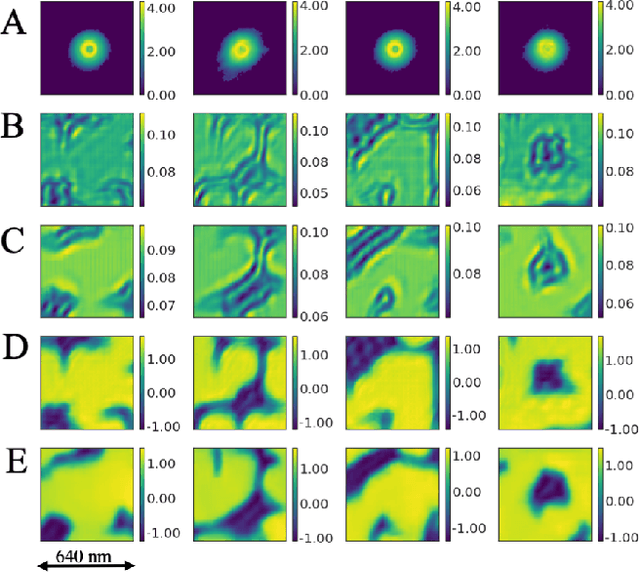

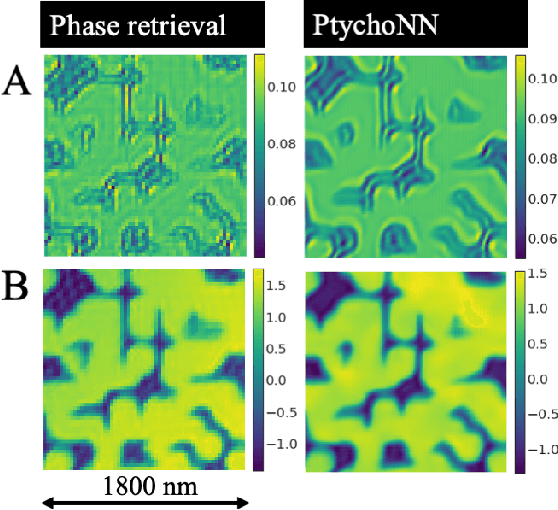

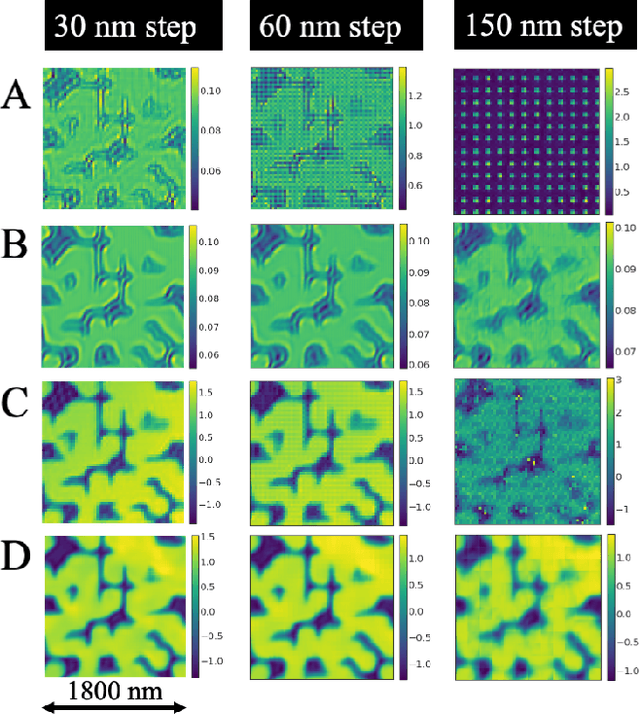

Abstract:Ptychography has rapidly grown in the fields of X-ray and electron imaging for its unprecedented ability to achieve nano- or atomic-scale resolution while simultaneously retrieving chemical or magnetic information from a sample. A ptychographic reconstruction is achieved by solving a complex inverse problem that imposes constraints both on the acquisition and on the analysis of the data, which typically precludes real-time imaging due to the computational cost involved in solving this inverse problem. In this work we propose PtychoNN, a novel approach to solve the ptychography reconstruction problem based on deep convolutional neural networks. We demonstrate how the proposed method can be used to predict real-space structure and phase at each scan point solely from the corresponding far-field diffraction data. The presented results demonstrate how PtychoNN can effectively be used on experimental data, being able to generate high-quality reconstructions of a sample up to hundreds of times faster than state-of-the-art ptychography reconstruction solutions once trained. By surpassing the typical constraints of iterative model-based methods, we can significantly relax the data acquisition sampling conditions and produce equally satisfactory reconstructions. Besides drastically accelerating acquisition and analysis, this capability can enable new imaging scenarios that were not possible before, in cases of dose-sensitive, dynamic and extremely voluminous samples.
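For readers unfamiliar with the data that such a network maps from, the following sketch simulates one far-field diffraction pattern at a single scan point using the standard ptychographic forward model: the probe multiplies a patch of the object and the detector records the squared magnitude of the Fourier transform. It is an illustration only; the function `simulate_diffraction`, the Gaussian probe, and the random test object are assumptions and are not taken from the PtychoNN work.

```python
import numpy as np

def simulate_diffraction(obj, probe, top, left):
    """Far-field intensity for one scan position (hypothetical helper)."""
    h, w = probe.shape
    patch = obj[top:top + h, left:left + w]     # illuminated region of the object
    exit_wave = probe * patch                   # thin-sample multiplicative model
    far_field = np.fft.fftshift(np.fft.fft2(exit_wave, norm="ortho"))
    return np.abs(far_field) ** 2               # detector records intensity only

# Toy complex object with random amplitude and phase.
rng = np.random.default_rng(0)
amplitude = 0.5 + 0.5 * rng.random((64, 64))
phase = rng.uniform(-np.pi / 2, np.pi / 2, size=(64, 64))
obj = amplitude * np.exp(1j * phase)

# Toy Gaussian probe on a 16 x 16 grid.
yy, xx = np.mgrid[-8:8, -8:8]
probe = np.exp(-(xx**2 + yy**2) / (2 * 4.0**2)).astype(complex)

pattern = simulate_diffraction(obj, probe, top=10, left=20)
```

Pairs of such patterns and the corresponding object patches are the kind of input and target a supervised network could be trained on; the phase of the exit wave is lost in `pattern`, which is what makes the inverse problem hard.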

Real-time coherent diffraction inversion using deep generative networks

Jun 07, 2018

Abstract:Phase retrieval, or the process of recovering phase information in reciprocal space to reconstruct images from measured intensity alone, is the underlying basis to a variety of imaging applications including coherent diffraction imaging (CDI). Typical phase retrieval algorithms are iterative in nature, and hence, are time-consuming and computationally expensive, precluding real-time imaging. Furthermore, iterative phase retrieval algorithms struggle to converge to the correct solution especially in the presence of strong phase structures. In this work, we demonstrate the training and testing of CDI NN, a pair of deep deconvolutional networks trained to predict structure and phase in real space of a 2D object from its corresponding far-field diffraction intensities alone. Once trained, CDI NN can invert a diffraction pattern to an image within a few milliseconds of compute time on a standard desktop machine, opening the door to real-time imaging.
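The iterative algorithms that this abstract contrasts with CDI NN can be illustrated by the classic error-reduction scheme, which alternates between enforcing the measured Fourier magnitude and a real-space constraint. The sketch below is a minimal version assuming a known support and a real, non-negative object; the function name, iteration count, and test object are assumptions, and practical reconstructions typically use more elaborate variants.

```python
import numpy as np

def error_reduction(measured_magnitude, support, n_iter=200, seed=0):
    """Minimal error-reduction phase retrieval (illustrative sketch).

    Returns the final real-space estimate and the Fourier-magnitude
    error recorded at the start of each iteration.
    """
    rng = np.random.default_rng(seed)
    g = rng.random(measured_magnitude.shape) * support   # random start inside support
    errors = []
    for _ in range(n_iter):
        G = np.fft.fft2(g, norm="ortho")
        errors.append(float(np.linalg.norm(np.abs(G) - measured_magnitude)))
        # Fourier constraint: keep the current phase, impose the measured magnitude.
        G_proj = measured_magnitude * np.exp(1j * np.angle(G))
        g_prime = np.fft.ifft2(G_proj, norm="ortho")
        # Real-space constraint: real, non-negative, zero outside the support.
        g = np.clip(g_prime.real, 0.0, None) * support
    return g, errors

# Toy object: a random non-negative 8 x 8 block in a 32 x 32 field.
rng = np.random.default_rng(1)
support = np.zeros((32, 32))
support[12:20, 12:20] = 1.0
true_obj = rng.random((32, 32)) * support
measured = np.abs(np.fft.fft2(true_obj, norm="ortho"))

estimate, errors = error_reduction(measured, support)
```

Each iteration costs two FFTs, and many iterations are usually needed, which is the computational burden that single-pass neural network inversion aims to avoid.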
