Tekin Bicer

HiSin: Efficient High-Resolution Sinogram Inpainting via Resolution-Guided Progressive Inference

Jun 10, 2025

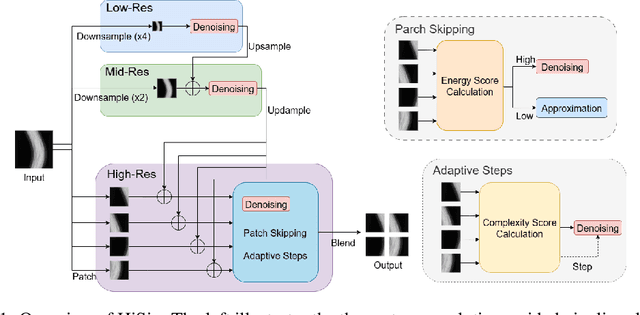

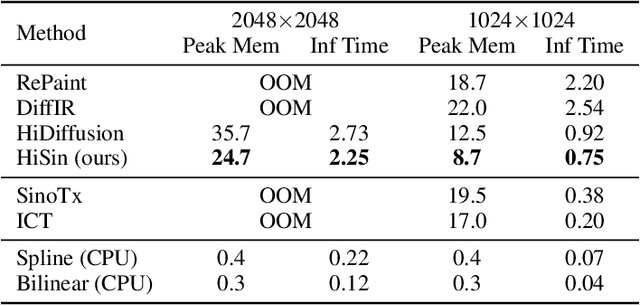

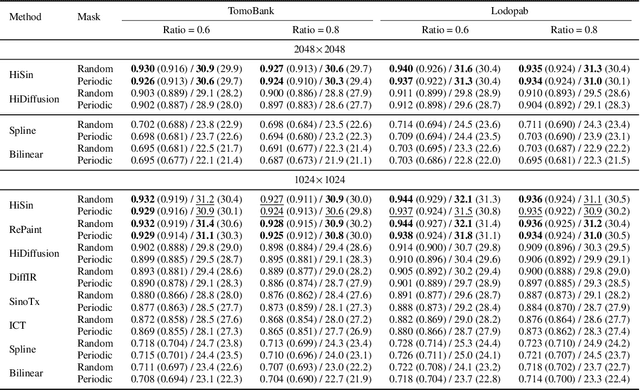

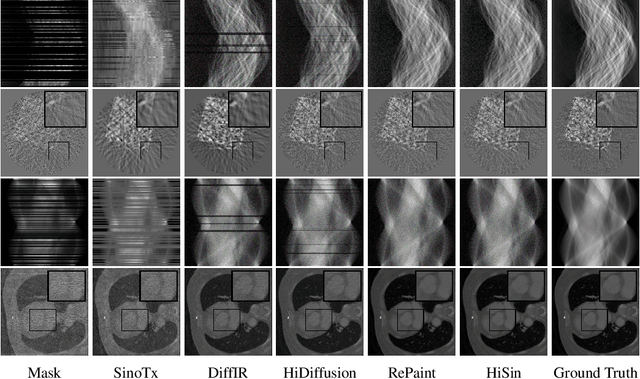

Abstract: High-resolution sinogram inpainting is essential for computed tomography reconstruction, as missing high-frequency projections can lead to visible artifacts and diagnostic errors. Diffusion models are well-suited for this task due to their robustness and detail-preserving capabilities, but their application to high-resolution inputs is limited by excessive memory and computational demands. To address this limitation, we propose HiSin, a novel diffusion-based framework for efficient sinogram inpainting via resolution-guided progressive inference. It progressively extracts global structure at low resolution and defers high-resolution inference to small patches, enabling memory-efficient inpainting. It further incorporates frequency-aware patch skipping and structure-adaptive step allocation to reduce redundant computation. Experimental results show that HiSin reduces peak memory usage by up to 31.25% and inference time by up to 18.15%, and maintains inpainting accuracy across datasets, resolutions, and mask conditions.
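To make the idea of frequency-aware patch skipping concrete, here is a minimal sketch and not the HiSin implementation. It assumes that "frequency content" can be approximated by the mean absolute difference between neighboring pixels, and that patches scoring below a threshold are skipped. The function names (`split_patches`, `high_freq_score`, `patches_to_refine`), the scoring rule, and the threshold are all hypothetical.

```python
def split_patches(image, size):
    """Yield (row, col, patch) for non-overlapping size x size tiles of a 2D list."""
    for r in range(0, len(image), size):
        for c in range(0, len(image[0]), size):
            yield r, c, [row[c:c + size] for row in image[r:r + size]]


def high_freq_score(patch):
    """Mean absolute difference between adjacent pixels (assumed proxy for detail)."""
    total, count = 0.0, 0
    for i, row in enumerate(patch):
        for j, value in enumerate(row):
            if j + 1 < len(row):          # horizontal neighbor
                total += abs(row[j + 1] - value)
                count += 1
            if i + 1 < len(patch):        # vertical neighbor
                total += abs(patch[i + 1][j] - value)
                count += 1
    return total / count if count else 0.0


def patches_to_refine(image, size, threshold):
    """Return top-left corners of patches whose score exceeds the threshold.

    Patches at or below the threshold would be skipped, i.e. left at the
    low-resolution estimate instead of receiving high-resolution inference.
    """
    return [(r, c) for r, c, p in split_patches(image, size)
            if high_freq_score(p) > threshold]


# Toy 4x4 "sinogram": only the top-right tile contains fine detail.
image = [
    [0, 0, 0, 1],
    [0, 0, 1, 0],
    [0.5, 0.5, 0.5, 0.5],
    [0.5, 0.5, 0.5, 0.5],
]
selected = patches_to_refine(image, size=2, threshold=0.1)
```

In this toy input, only the tile containing the checkerboard pattern is selected for refinement; the three flat tiles would be skipped.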

ZenFlow: Enabling Stall-Free Offloading Training via Asynchronous Updates

May 18, 2025

Abstract: Fine-tuning large language models (LLMs) often exceeds GPU memory limits, prompting systems to offload model states to CPU memory. However, existing offloaded training frameworks like ZeRO-Offload treat all parameters equally and update the full model on the CPU, causing severe GPU stalls, where fast, expensive GPUs sit idle waiting for slow CPU updates and limited-bandwidth PCIe transfers. We present ZenFlow, a new offloading framework that prioritizes important parameters and decouples updates between GPU and CPU. ZenFlow performs in-place updates of important gradients on GPU, while asynchronously offloading and accumulating less important ones on CPU, fully overlapping CPU work with GPU computation. To scale across GPUs, ZenFlow introduces a lightweight gradient selection method that exploits a novel spatial and temporal locality property of important gradients, avoiding costly global synchronization. ZenFlow achieves up to 5x end-to-end speedup and 2x lower PCIe traffic, and reduces GPU stalls by over 85 percent, all while preserving accuracy.
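The decoupled update described above can be illustrated with a small single-process sketch. This is not ZenFlow's code: it assumes that importance is measured by gradient magnitude, that the optimizer is plain SGD, and that deferred gradients are applied every `flush_every` steps. There is no real GPU, CPU, or asynchrony here, and the names `select_important` and `DecoupledUpdater` are hypothetical.

```python
def select_important(grads, k):
    """Indices of the k largest-magnitude gradients (assumed importance metric)."""
    order = sorted(range(len(grads)), key=lambda i: abs(grads[i]), reverse=True)
    return set(order[:k])


class DecoupledUpdater:
    """Apply important gradients immediately and accumulate the rest for later."""

    def __init__(self, params, lr=0.1, k=1, flush_every=2):
        self.params = list(params)
        self.lr = lr
        self.k = k
        self.flush_every = flush_every
        self.accum = [0.0] * len(self.params)   # stands in for the CPU-side buffer
        self.step_count = 0

    def step(self, grads):
        important = select_important(grads, self.k)
        for i, g in enumerate(grads):
            if i in important:
                self.params[i] -= self.lr * g   # immediate update ("GPU" path)
            else:
                self.accum[i] += g              # deferred update ("CPU" path)
        self.step_count += 1
        if self.step_count % self.flush_every == 0:
            # Apply everything that was deferred, then clear the buffer.
            for i, a in enumerate(self.accum):
                self.params[i] -= self.lr * a
                self.accum[i] = 0.0
        return list(self.params)


updater = DecoupledUpdater([1.0, 1.0, 1.0], lr=0.1, k=1, flush_every=2)
after_first = updater.step([1.0, 0.1, 0.2])    # only index 0 is updated right away
after_second = updater.step([0.1, 2.0, 0.2])   # index 1 updated, then deferred work flushed
```

With plain SGD, the parameters after each flush match what ordinary per-step updates would have produced; the deferred entries simply lag between flushes.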

Ptychographic Image Reconstruction from Limited Data via Score-Based Diffusion Models with Physics-Guidance

Feb 26, 2025

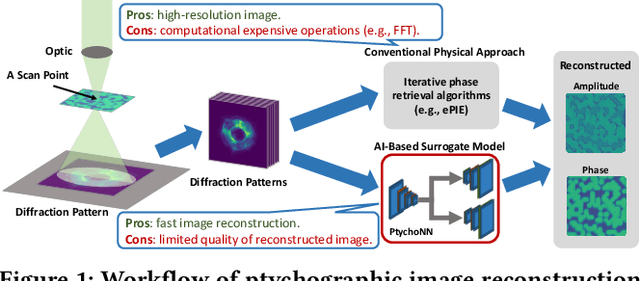

Abstract: Ptychography is a computational imaging technique that achieves high spatial resolution over large fields of view. It involves scanning a coherent beam across overlapping regions and recording diffraction patterns. Conventional reconstruction algorithms require substantial overlap, increasing data volume and experimental time. We propose a reconstruction method employing a physics-guided score-based diffusion model. Our approach trains a diffusion model on representative object images to learn an object distribution prior. During reconstruction, we modify the reverse diffusion process to enforce data consistency, guiding reverse diffusion toward a physically plausible solution. This method requires a single pretraining phase, allowing it to generalize across varying scan overlap ratios and positions. Our results demonstrate that the proposed method achieves high-fidelity reconstructions with only a 20% overlap, while the widely employed rPIE method requires a 62% overlap to achieve similar accuracy. This represents a significant reduction in data requirements, offering an alternative to conventional techniques.
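For readers unfamiliar with the measurement model, the standard far-field ptychography forward model can be written as below. The notation is an assumption introduced here and does not come from the abstract: $O$ is the complex object, $P$ the probe, $\mathbf{r}_j$ the $j$-th scan position, $\mathcal{F}$ the 2D Fourier transform, and $I_j$ the recorded diffraction intensity. The amplitude-based misfit in the second line is one common choice of data-consistency term, not necessarily the one used in the paper.

```latex
% Far-field intensity recorded at scan position r_j
I_j(\mathbf{q}) = \left| \mathcal{F}\left\{ P(\mathbf{r} - \mathbf{r}_j)\, O(\mathbf{r}) \right\}(\mathbf{q}) \right|^2

% One common data-consistency (misfit) term summed over all scan positions
\mathcal{L}(O) = \sum_j \left\| \sqrt{I_j} - \left| \mathcal{F}\left\{ P(\mathbf{r} - \mathbf{r}_j)\, O(\mathbf{r}) \right\} \right| \right\|_2^2
```

Because each $I_j$ only constrains the part of $O$ illuminated by the shifted probe, reducing the overlap between adjacent probe positions weakens the constraints shared between neighboring measurements, which is why a learned prior becomes useful at low overlap.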

Integrating Generative and Physics-Based Models for Ptychographic Imaging with Uncertainty Quantification

Dec 14, 2024

Abstract: Ptychography is a scanning coherent diffractive imaging technique that enables imaging nanometer-scale features in extended samples. One main challenge is that widely used iterative image reconstruction methods often require a significant amount of overlap between adjacent scan locations, leading to large data volumes and prolonged acquisition times. To address this key limitation, this paper proposes a Bayesian inversion method for ptychography that performs effectively even with less overlap between neighboring scan locations. Furthermore, the proposed method can quantify the inherent uncertainty on the ptychographic object, which is created by the ill-posed nature of the ptychographic inverse problem. At a high level, the proposed method first utilizes a deep generative model to learn the prior distribution of the object and then generates samples from the posterior distribution of the object by using a Markov Chain Monte Carlo algorithm. Our results from simulated ptychography experiments show that the proposed framework can consistently outperform a widely used iterative reconstruction algorithm in cases of reduced overlap. Moreover, the proposed framework can provide uncertainty estimates that closely correlate with the true error, which is not available in practice. The project website is available here.

Deep learning-based spatio-temporal fusion for high-fidelity ultra-high-speed x-ray radiography

Nov 27, 2024

Abstract: Full-field ultra-high-speed (UHS) x-ray imaging experiments have been well established to characterize various processes and phenomena. However, the potential of UHS experiments through the joint acquisition of x-ray videos with distinct configurations has not been fully exploited. In this paper, we investigate the use of a deep learning-based spatio-temporal fusion (STF) framework to fuse two complementary sequences of x-ray images and reconstruct the target image sequence with high spatial resolution, high frame rate, and high fidelity. We applied a transfer learning strategy to train the model and compared the peak signal-to-noise ratio (PSNR), average absolute difference (AAD), and structural similarity (SSIM) of the proposed framework on two independent x-ray datasets with those obtained from a baseline deep learning model, a Bayesian fusion framework, and the bicubic interpolation method. The proposed framework outperformed the other methods with various configurations of the input frame separations and image noise levels. With 3 subsequent images from the low resolution (LR) sequence of a 4-time lower spatial resolution and another 2 images from the high resolution (HR) sequence of a 20-time lower frame rate, the proposed approach achieved an average PSNR of 37.57 dB and 35.15 dB, respectively. When coupled with the appropriate combination of high-speed cameras, the proposed approach will enhance the performance and therefore scientific value of the UHS x-ray imaging experiments.
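Two of the metrics named above have simple closed forms. The sketch below uses the standard PSNR definition and takes AAD to be the mean absolute pixel difference. It assumes images are 2D lists normalized to a peak value of 1.0; the paper's exact normalization is not stated in the abstract, and the function names are illustrative.

```python
import math


def _pixel_pairs(reference, estimate):
    """Yield matching pixel pairs from two equally sized 2D lists."""
    for ref_row, est_row in zip(reference, estimate):
        yield from zip(ref_row, est_row)


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    squared = [(r - e) ** 2 for r, e in _pixel_pairs(reference, estimate)]
    mse = sum(squared) / len(squared)
    if mse == 0:
        return math.inf                     # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def aad(reference, estimate):
    """Average absolute difference between corresponding pixels."""
    diffs = [abs(r - e) for r, e in _pixel_pairs(reference, estimate)]
    return sum(diffs) / len(diffs)


reference = [[0.0, 1.0], [0.5, 0.5]]
estimate = [[0.1, 0.9], [0.5, 0.5]]
score_db = psnr(reference, estimate)        # MSE = 0.005, so about 23.01 dB
mean_abs = aad(reference, estimate)         # 0.2 / 4 = 0.05
```

Higher PSNR and lower AAD both indicate a reconstruction closer to the reference; SSIM is omitted here because it needs local window statistics.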

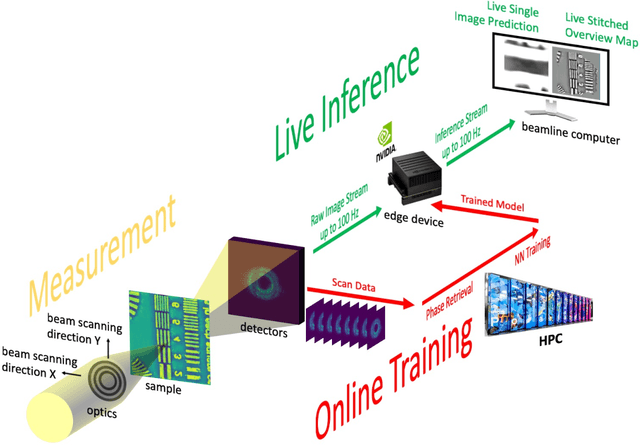

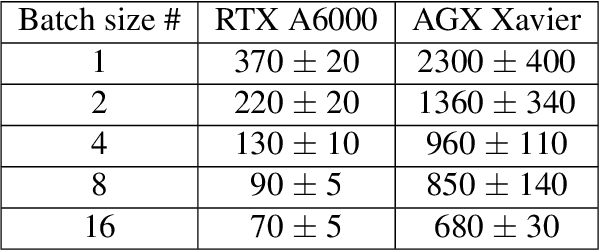

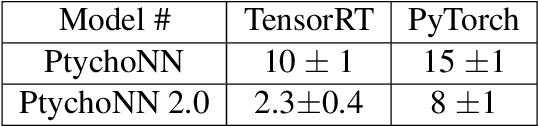

AI-assisted Automated Workflow for Real-time X-ray Ptychography Data Analysis via Federated Resources

Apr 09, 2023

Abstract: We present an end-to-end automated workflow that uses large-scale remote compute resources and an embedded GPU platform at the edge to enable AI/ML-accelerated real-time analysis of data collected for x-ray ptychography. Ptychography is a lensless method that is being used to image samples through a simultaneous numerical inversion of a large number of diffraction patterns from adjacent overlapping scan positions. This acquisition method can enable nanoscale imaging with x-rays and electrons, but this often requires very large experimental datasets and commensurately high turnaround times, which can limit experimental capabilities such as real-time experimental steering and low-latency monitoring. In this work, we introduce a software system that can automate ptychography data analysis tasks. We accelerate the data analysis pipeline by using a modified version of PtychoNN, an ML-based approach to solving the phase retrieval problem that shows two orders of magnitude speedup compared to traditional iterative methods. Further, our system coordinates and overlaps different data analysis tasks to minimize synchronization overhead between different stages of the workflow. We evaluate our workflow system with real-world experimental workloads from the 26ID beamline at the Advanced Photon Source and the ThetaGPU cluster at Argonne Leadership Computing Resources.

SOLAR: A Highly Optimized Data Loading Framework for Distributed Training of CNN-based Scientific Surrogates

Nov 04, 2022

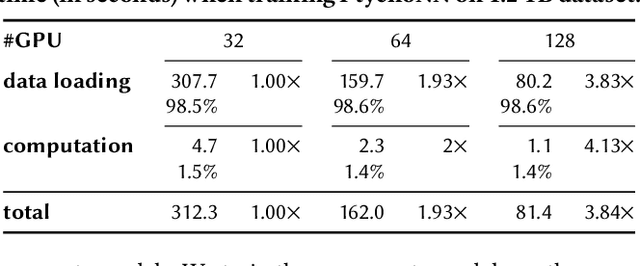

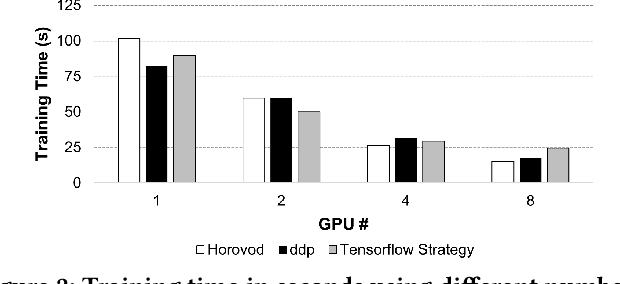

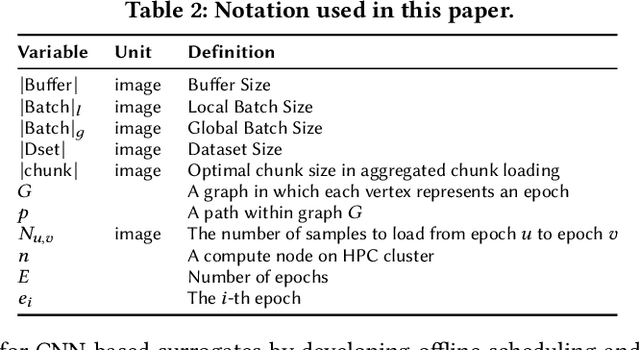

Abstract: CNN-based surrogates have become prevalent in scientific applications to replace conventional time-consuming physical approaches. Although these surrogates can yield satisfactory results with significantly lower computation costs over small training datasets, our benchmarking results show that data-loading overhead becomes the major performance bottleneck when training surrogates with large datasets. In practice, surrogates are usually trained with high-resolution scientific data, which can easily reach the terabyte scale. Several state-of-the-art data loaders have been proposed to improve the loading throughput in general CNN training; however, they are sub-optimal when applied to surrogate training. In this work, we propose SOLAR, a surrogate data loader that can ultimately increase loading throughput during training. It leverages our three key observations during the benchmarking and contains three novel designs. Specifically, SOLAR first generates a pre-determined shuffled index list and accordingly optimizes the global access order and the buffer eviction scheme to maximize the data reuse and the buffer hit rate. It then proposes a tradeoff between lightweight computational imbalance and heavyweight loading workload imbalance to speed up the overall training. It finally optimizes its data access pattern with HDF5 to achieve a better parallel I/O throughput. Our evaluation with three scientific surrogates and 32 GPUs illustrates that SOLAR can achieve up to 24.4X speedup over PyTorch Data Loader and 3.52X speedup over state-of-the-art data loaders.
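The benefit of fixing the shuffle order in advance can be shown with a small simulation. This sketch is not SOLAR's algorithm: it assumes a seeded per-epoch shuffle and compares an ordinary LRU buffer with a lookahead eviction rule (evict the sample whose next use is farthest away, i.e. Belady's policy), which only becomes possible once the full access order is known. All function names are hypothetical.

```python
import random


def shuffled_epochs(num_samples, num_epochs, seed=0):
    """Pre-determine the full access order: one seeded shuffle per epoch."""
    rng = random.Random(seed)
    accesses = []
    for _ in range(num_epochs):
        order = list(range(num_samples))
        rng.shuffle(order)
        accesses.extend(order)
    return accesses


def lru_hits(accesses, capacity):
    """Buffer hits with least-recently-used eviction (no knowledge of the future)."""
    buffer, hits = [], 0                    # most recently used sample at the end
    for sample in accesses:
        if sample in buffer:
            hits += 1
            buffer.remove(sample)
        elif len(buffer) >= capacity:
            buffer.pop(0)
        buffer.append(sample)
    return hits


def lookahead_hits(accesses, capacity):
    """Buffer hits when evicting the sample whose next access is farthest away."""
    next_use = [float("inf")] * len(accesses)
    last_seen = {}
    for i in range(len(accesses) - 1, -1, -1):
        next_use[i] = last_seen.get(accesses[i], float("inf"))
        last_seen[accesses[i]] = i
    buffer, hits = {}, 0                    # sample -> position of its next access
    for i, sample in enumerate(accesses):
        if sample in buffer:
            hits += 1
        elif len(buffer) >= capacity:
            victim = max(buffer, key=buffer.get)
            del buffer[victim]
        buffer[sample] = next_use[i]
    return hits


accesses = shuffled_epochs(num_samples=20, num_epochs=5, seed=1)
baseline = lru_hits(accesses, capacity=5)
informed = lookahead_hits(accesses, capacity=5)
```

Because the lookahead rule is optimal among policies that load a sample on every miss, `informed` is never smaller than `baseline` for the same access list and capacity.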

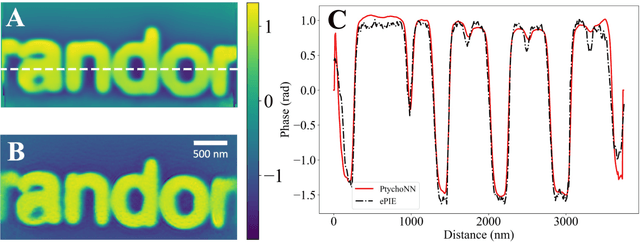

Deep learning at the edge enables real-time streaming ptychographic imaging

Sep 20, 2022

Abstract: Coherent microscopy techniques provide an unparalleled multi-scale view of materials across scientific and technological fields, from structural materials to quantum devices, from integrated circuits to biological cells. Driven by the construction of brighter sources and high-rate detectors, coherent X-ray microscopy methods like ptychography are poised to revolutionize nanoscale materials characterization. However, associated significant increases in data and compute needs mean that conventional approaches no longer suffice for recovering sample images in real-time from high-speed coherent imaging experiments. Here, we demonstrate a workflow that leverages artificial intelligence at the edge and high-performance computing to enable real-time inversion on X-ray ptychography data streamed directly from a detector at up to 2 kHz. The proposed AI-enabled workflow eliminates the sampling constraints imposed by traditional ptychography, allowing low dose imaging using orders of magnitude less data than required by traditional methods.

Scalable and accurate multi-GPU based image reconstruction of large-scale ptychography data

Jun 14, 2021

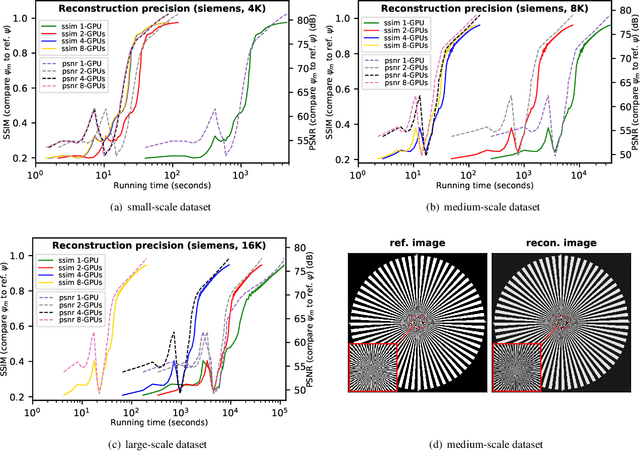

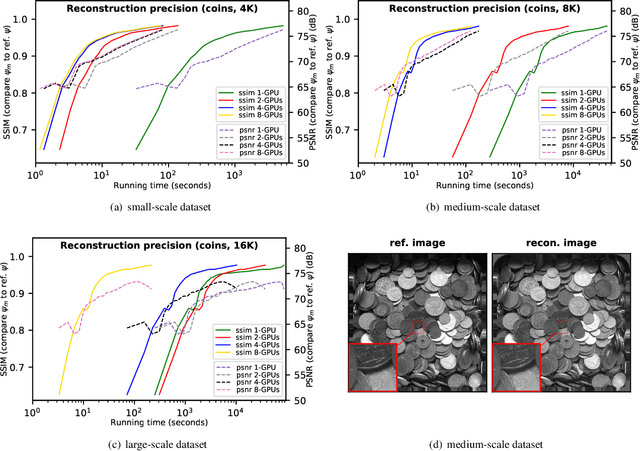

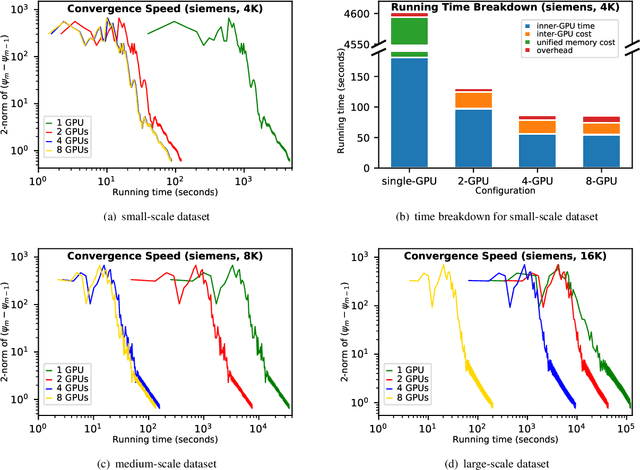

Abstract: While the advances in synchrotron light sources, together with the development of focusing optics and detectors, allow nanoscale ptychographic imaging of materials and biological specimens, the corresponding experiments can yield terabyte-scale volumes of data that can impose a heavy burden on the computing platform. While Graphical Processing Units (GPUs) provide high performance for such large-scale ptychography datasets, a single GPU is typically insufficient for analysis and reconstruction. Several existing works have considered leveraging multiple GPUs to accelerate the ptychographic reconstruction. However, they utilize only Message Passing Interface (MPI) to handle the communications between GPUs. This is inefficient for configurations with multiple GPUs in a single node, especially when processing a single large projection, since MPI alone provides no optimizations to handle the heterogeneous GPU interconnections containing both low-speed links, e.g., PCIe, and high-speed links, e.g., NVLink. In this paper, we provide a multi-GPU implementation that can effectively solve large-scale ptychographic reconstruction problems with optimized performance on intra-node multi-GPU configurations. We focus on the conventional maximum-likelihood reconstruction problem using conjugate-gradient (CG) for the solution and propose a novel hybrid parallelization model to address the performance bottlenecks in the CG solver. Accordingly, we develop a tool called PtyGer (Ptychographic GPU(multiple)-based reconstruction), implementing our hybrid parallelization model design. The comprehensive evaluation verifies that PtyGer can fully preserve the original algorithm's accuracy while achieving outstanding intra-node GPU scalability.

Deep Learning Accelerated Light Source Experiments

Oct 09, 2019

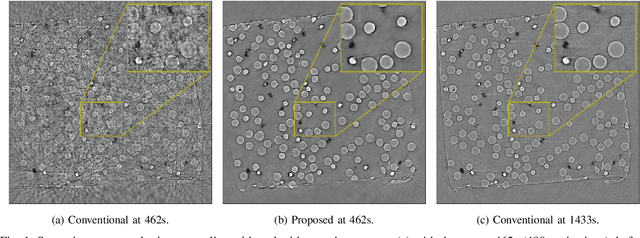

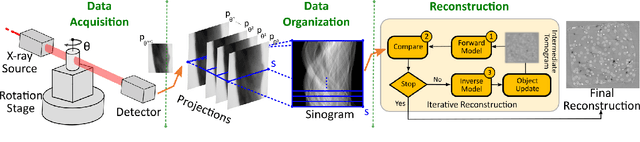

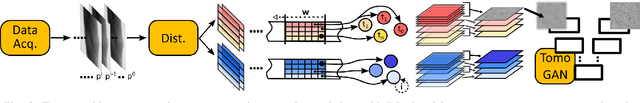

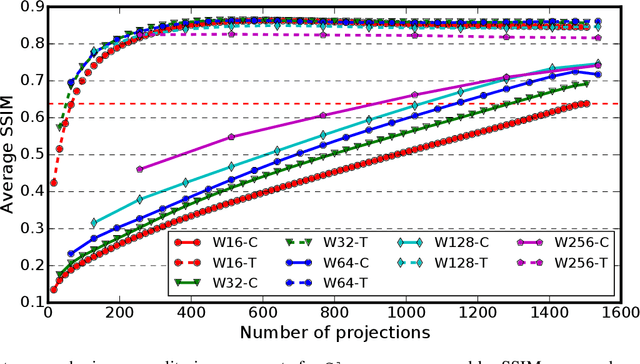

Abstract: Experimental protocols at synchrotron light sources typically process and validate data only after an experiment has completed, which can lead to undetected errors and cannot enable online steering. Real-time data analysis can enable both detection of, and recovery from, errors, and optimization of data acquisition. However, modern scientific instruments, such as detectors at synchrotron light sources, can generate data at GBs/sec rates. Data processing methods such as the widely used computational tomography usually require considerable computational resources, and yield poor quality reconstructions in the early stages of data acquisition when available views are sparse. We describe here how a deep convolutional neural network can be integrated into the real-time streaming tomography pipeline to enable better-quality images in the early stages of data acquisition. Compared with conventional streaming tomography processing, our method can significantly improve tomography image quality, deliver comparable images using only 32% of the data needed for conventional streaming processing, and save 68% experiment time for data acquisition.
