Srutarshi Banerjee

HiSin: Efficient High-Resolution Sinogram Inpainting via Resolution-Guided Progressive Inference

Jun 10, 2025

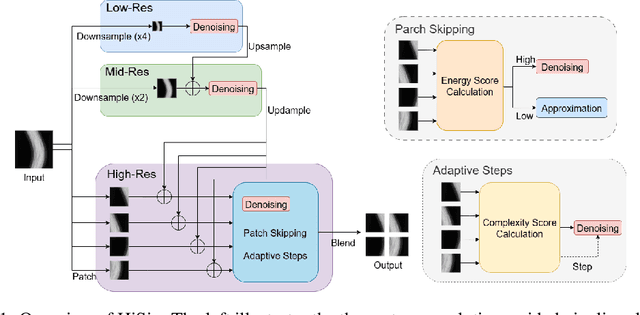

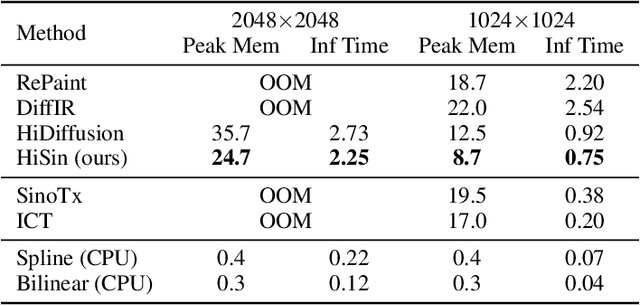

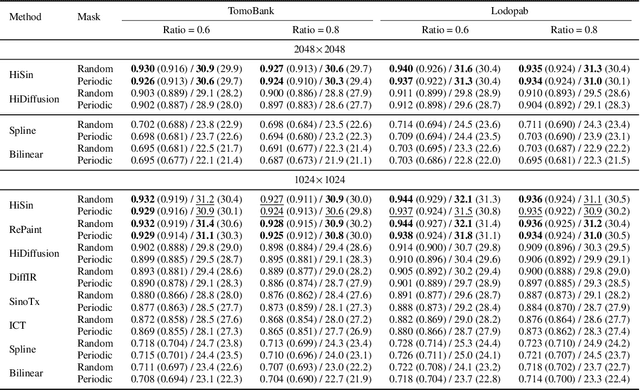

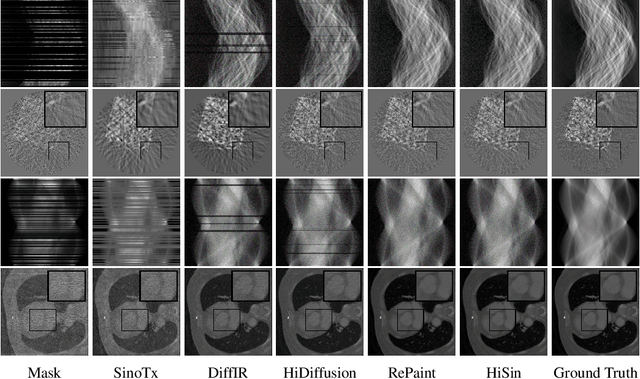

Abstract:High-resolution sinogram inpainting is essential for computed tomography reconstruction, as missing high-frequency projections can lead to visible artifacts and diagnostic errors. Diffusion models are well-suited for this task due to their robustness and detail-preserving capabilities, but their application to high-resolution inputs is limited by excessive memory and computational demands. To address this limitation, we propose HiSin, a novel diffusion-based framework for efficient sinogram inpainting via resolution-guided progressive inference. It progressively extracts global structure at low resolution and defers high-resolution inference to small patches, enabling memory-efficient inpainting. It further incorporates frequency-aware patch skipping and structure-adaptive step allocation to reduce redundant computation. Experimental results show that HiSin reduces peak memory usage by up to 31.25% and inference time by up to 18.15%, and maintains inpainting accuracy across datasets, resolutions, and mask conditions.

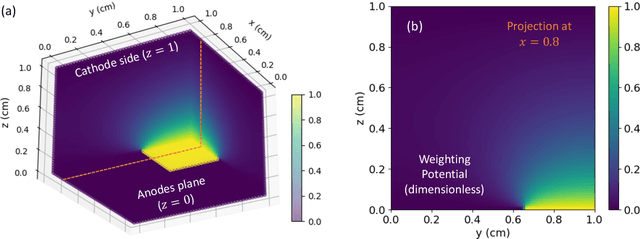

Modeling and Simulation of Charge-Induced Signals in Photon-Counting CZT Detectors for Medical Imaging Applications

May 21, 2024

Abstract:Photon-counting detectors based on CZT are essential in nuclear medical imaging, particularly for SPECT applications. Although CZT detectors are known for their precise energy resolution, defects within the CZT crystals significantly impact their performance. These defects result in inhomogeneous material properties throughout the bulk of the detector. The present work introduces an efficient computational model that simulates the operation of semiconductor detectors, accounting for the spatial variability of the crystal properties. Our simulator reproduces the charge-induced pulse signals generated after the X/gamma-rays interact with the detector. The performance evaluation of the model shows an RMSE in the signal below 0.70%. Our simulator can function as a digital twin to accurately replicate the operation of actual detectors. Thus, it can be used to mitigate and compensate for adverse effects arising from crystal impurities.

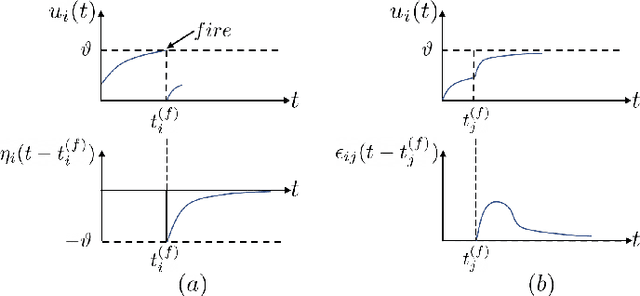

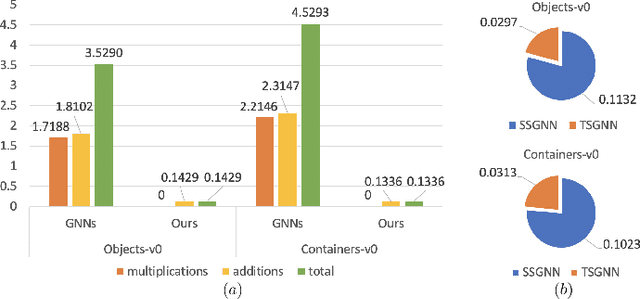

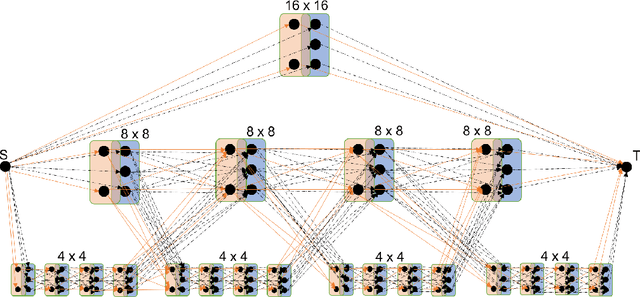

Event-based Shape from Polarization with Spiking Neural Networks

Dec 26, 2023

Abstract:Recent advances in event-based shape determination from polarization offer a transformative approach that tackles the trade-off between speed and accuracy in capturing surface geometries. In this paper, we investigate event-based shape from polarization using Spiking Neural Networks (SNNs), introducing the Single-Timestep and Multi-Timestep Spiking UNets for effective and efficient surface normal estimation. Specifically, the Single-Timestep model processes event-based shape as a non-temporal task, updating the membrane potential of each spiking neuron only once, thereby reducing computational and energy demands. In contrast, the Multi-Timestep model exploits temporal dynamics for enhanced data extraction. Extensive evaluations on synthetic and real-world datasets demonstrate that our models match the performance of state-of-the-art Artificial Neural Networks (ANNs) in estimating surface normals, with the added advantage of superior energy efficiency. Our work not only contributes to the advancement of SNNs in event-based sensing but also sets the stage for future explorations in optimizing SNN architectures, integrating multi-modal data, and scaling for applications on neuromorphic hardware.

A Physics based Machine Learning Model to characterize Room Temperature Semiconductor Detectors in 3D

Nov 04, 2023

Abstract:Room temperature semiconductor radiation detectors (RTSD) for X-ray and gamma-ray detection are vital tools for medical imaging, astrophysics and other applications. CdZnTe (CZT) has been the main RTSD for more than three decades, with desirable detection properties. In a typical pixelated configuration, CZT detectors have electrodes on opposite ends. For advanced event reconstruction algorithms at the sub-pixel level, detailed characterization of the RTSD is required in three-dimensional (3D) space. However, 3D characterization of the material defects and charge transport properties in the sub-pixel regime is a labor-intensive process requiring skilled manpower and novel experimental setups. Presently, state-of-the-art characterization is done over the bulk of the RTSD, assuming homogeneous properties. In this paper, we propose a novel physics based machine learning (PBML) model to characterize the RTSD over an assumed discretized sub-pixelated 3D volume. Our novel approach is the first to characterize a full 3D charge transport model of the RTSD. In this work, we first discretize the RTSD between the pixelated electrodes spatially in 3D (x, y, and z). The resulting discretizations are termed voxels in 3D space. In each voxel, the different physics based charge transport properties such as drift, trapping, detrapping and recombination of charges are modeled as trainable model weights. The drift of the charges considers second-order non-linear motion, which is observed in practice with RTSDs. Based on the electron-hole pair injections as input to the PBML model, and signals at the electrodes, free and trapped charges (electrons and holes) as outputs of the model, the PBML model determines the trainable weights by backpropagating the loss function. The trained weights of the model have a one-to-one relation to the actual physical charge transport properties in a voxelized detector.

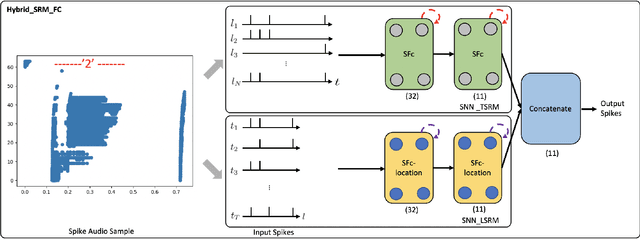

Event-Driven Tactile Learning with Various Location Spiking Neurons

Oct 11, 2022

Abstract:Tactile sensing is essential for a variety of daily tasks. New advances in event-driven tactile sensors and Spiking Neural Networks (SNNs) spur the research in related fields. However, SNN-enabled event-driven tactile learning is still in its infancy due to the limited representation abilities of existing spiking neurons and high spatio-temporal complexity in the data. In this paper, to improve the representation capability of existing spiking neurons, we propose a novel neuron model called "location spiking neuron", which enables us to extract features of event-based data in a novel way. Specifically, based on the classical Time Spike Response Model (TSRM), we develop the Location Spike Response Model (LSRM). In addition, based on the most commonly-used Time Leaky Integrate-and-Fire (TLIF) model, we develop the Location Leaky Integrate-and-Fire (LLIF) model. By exploiting the novel location spiking neurons, we propose several models to capture the complex spatio-temporal dependencies in the event-driven tactile data. Extensive experiments demonstrate the significant improvements of our models over other works on event-driven tactile learning and show the superior energy efficiency of our models and location spiking neurons, which may unlock their potential on neuromorphic hardware.
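For readers unfamiliar with the baseline that the abstract builds on, the following is a minimal sketch of a conventional time-based leaky integrate-and-fire neuron in discrete time. It is not the paper's LSRM or LLIF model; the function name `lif_spikes`, the `decay` and `threshold` parameters, and the hard reset to zero are illustrative assumptions.

```python
def lif_spikes(inputs, decay=0.9, threshold=1.0):
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    Assumed (hypothetical) formulation: the membrane potential leaks by a
    constant factor each step, integrates the input, and is reset to zero
    after it crosses the threshold.
    """
    v = 0.0          # membrane potential
    spikes = []
    for x in inputs:
        v = decay * v + x       # leak, then integrate the input current
        if v >= threshold:
            spikes.append(1)    # emit a spike
            v = 0.0             # hard reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    print(lif_spikes([0.5, 0.5, 0.5, 0.5]))
```

Here the potential evolves over time steps; the abstract's location spiking neurons are described as extracting features of event-based data in a different way, which this sketch does not attempt to reproduce.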

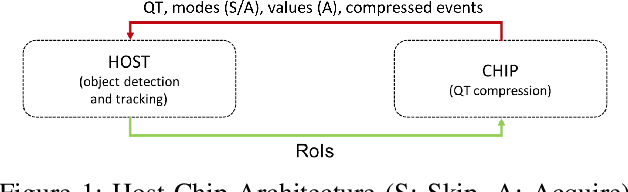

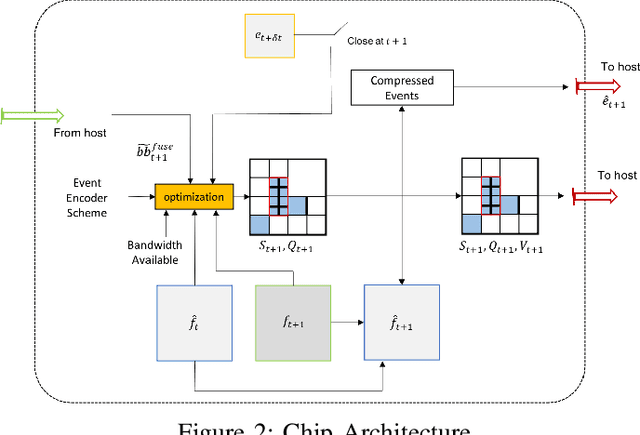

A Joint Intensity-Neuromorphic Event Imaging System for Resource Constrained Devices

May 29, 2021

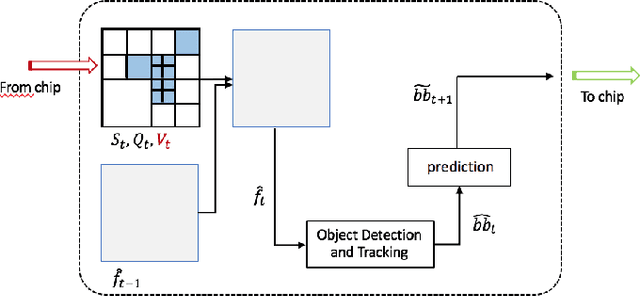

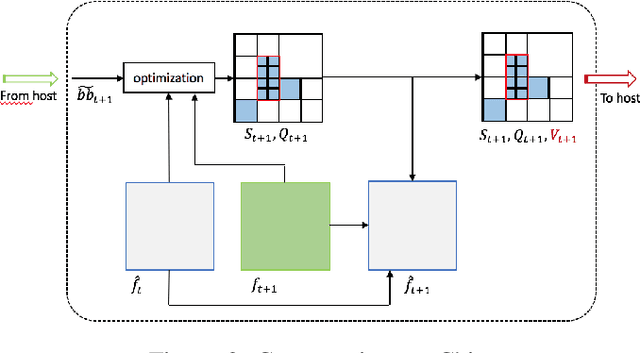

Abstract:We present a novel adaptive multi-modal intensity-event algorithm to optimize an overall objective of object tracking under bit rate constraints for a host-chip architecture. The chip is a computationally resource constrained device acquiring high resolution intensity frames and events, while the host is capable of performing computationally expensive tasks. We develop a joint intensity-neuromorphic event rate-distortion compression framework with a quadtree (QT) based compression of intensity and events scheme. The data acquisition on the chip is driven by the presence of objects of interest in the scene as detected by an object detector. The most informative intensity and event data are communicated to the host under rate constraints, so that the best possible tracking performance is obtained. The detection and tracking of objects in the scene are done on the distorted data at the host. Intensity and events are jointly used in a fusion framework to enhance the quality of the distorted images, so as to improve the object detection and tracking performance. The performance assessment of the overall system is done in terms of the multiple object tracking accuracy (MOTA) score. Compared to using intensity modality only, there is an improvement in MOTA using both these modalities in different scenarios.

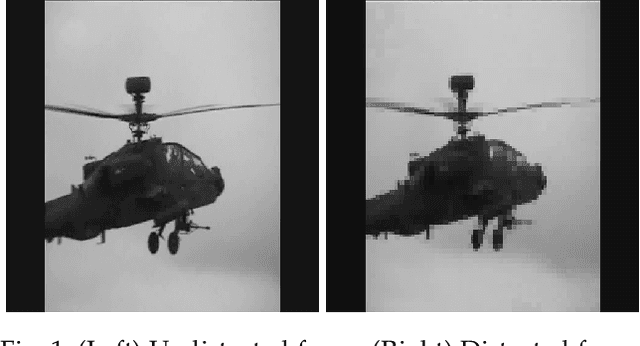

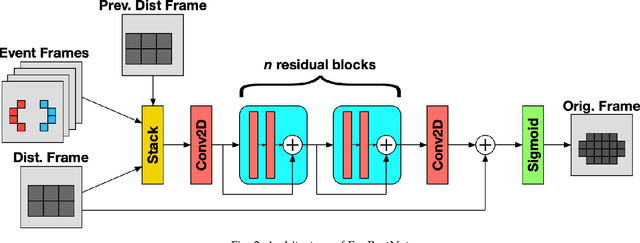

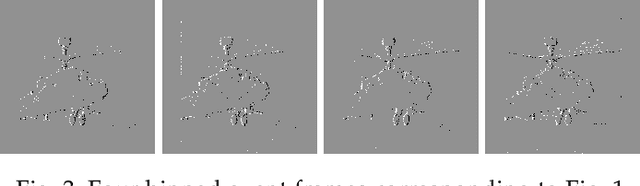

Removing Blocking Artifacts in Video Streams Using Event Cameras

May 12, 2021

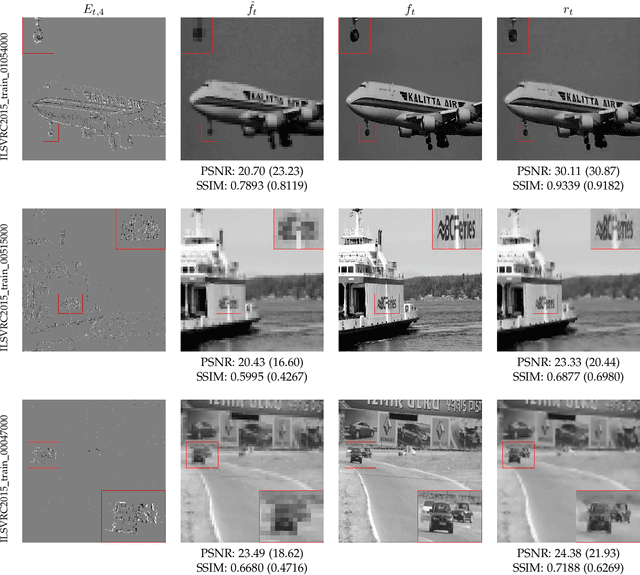

Abstract:In this paper, we propose EveRestNet, a convolutional neural network designed to remove blocking artifacts in video streams using events from neuromorphic sensors. We first degrade the video frame using a quadtree structure to produce blocking artifacts, simulating the transmission of a video under a heavily constrained bandwidth. Events from the neuromorphic sensor are also simulated, but are transmitted in full. Using the distorted frames and the event stream, EveRestNet is able to improve the image quality.
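One way a quadtree can introduce blocking artifacts is sketched below: a block is split into four quadrants until it is nearly flat, and each leaf is then filled with its mean value. This is only an illustration under stated assumptions. The split criterion (intensity range against a `threshold`), the `min_size` stop, and the name `quadtree_degrade` are hypothetical and are not taken from the paper.

```python
import numpy as np


def quadtree_degrade(frame, threshold, min_size=2):
    """Return a copy of `frame` with every quadtree leaf replaced by its mean.

    Assumed split rule: a block is subdivided while its intensity range
    exceeds `threshold` and both sides are larger than `min_size`.
    """
    frame = np.asarray(frame, dtype=float)
    out = np.empty_like(frame)

    def split(r, c, h, w):
        block = frame[r:r + h, c:c + w]
        # Leaf: the block is flat enough or too small to split further.
        if block.max() - block.min() <= threshold or min(h, w) <= min_size:
            out[r:r + h, c:c + w] = block.mean()
            return
        h2, w2 = h // 2, w // 2
        split(r, c, h2, w2)                    # top-left quadrant
        split(r, c + w2, h2, w - w2)           # top-right quadrant
        split(r + h2, c, h - h2, w2)           # bottom-left quadrant
        split(r + h2, c + w2, h - h2, w - w2)  # bottom-right quadrant

    split(0, 0, frame.shape[0], frame.shape[1])
    return out


if __name__ == "__main__":
    ramp = np.tile(np.arange(16.0), (16, 1))  # smooth horizontal gradient
    print(quadtree_degrade(ramp, threshold=4.0))
```

A larger `threshold` yields fewer, larger leaves and therefore coarser blocks, which loosely mimics a tighter bandwidth budget.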

An Adaptive Video Acquisition Scheme for Object Tracking and its Performance Optimization

Feb 24, 2021

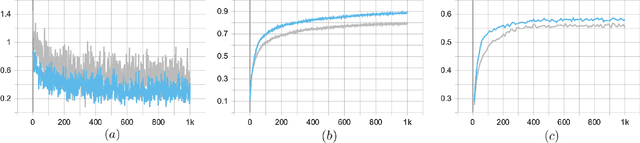

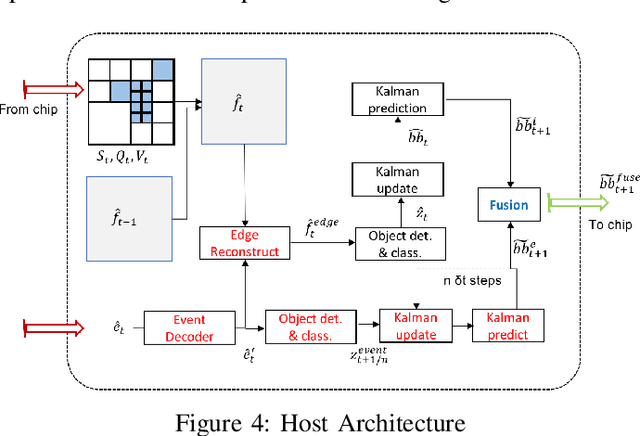

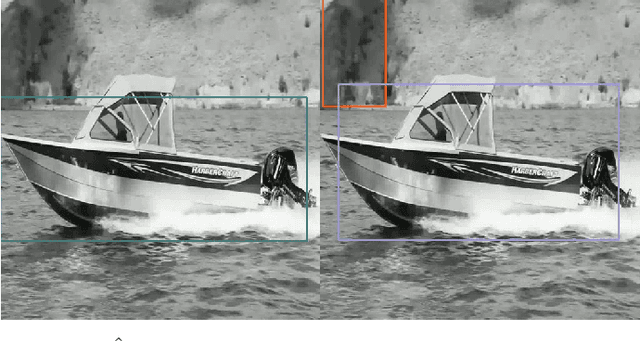

Abstract:We present a novel adaptive host-chip modular architecture for video acquisition to optimize an overall objective task constrained under a given bit rate. The chip is a high-resolution imaging sensor, such as a gigapixel focal plane array (FPA), with low computational power deployed remotely in the field, while the host is a server with high computational power. The communication channel data bandwidth between the chip and host is too constrained to accommodate transfer of all captured data from the chip. The host performs objective-task-specific computations and also intelligently guides the chip to optimize (compress) the data sent to the host. The proposed system is modular and highly versatile in terms of flexibility in re-orienting the objective task. In this work, object tracking is the objective task. While our architecture supports any form of compression/distortion, in this paper we use quadtree (QT)-segmented video frames. We use the Viterbi (Dynamic Programming) algorithm to minimize the area-normalized weighted rate-distortion allocation of resources. The host receives only these degraded frames for analysis. An object detector is used to detect objects, and a Kalman Filter based tracker is used to track those objects. System performance is evaluated in terms of the Multiple Object Tracking Accuracy (MOTA) metric. In the proposed architecture, performance gains in MOTA are obtained by training the object detector twice with different system-generated distortions, as a novel 2-step process. Additionally, the object detector is assisted by the tracker, which upscores the region proposals in the detector to further improve performance.
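As a reminder of how the MOTA metric mentioned above is commonly computed, here is a small sketch that follows the standard CLEAR MOT definition: one minus the ratio of accumulated errors (misses, false positives, identity switches) to the accumulated number of ground-truth objects. The function name `mota` and the per-frame list inputs are assumptions made for illustration; the abstract does not specify the evaluation code used.

```python
def mota(false_negatives, false_positives, id_switches, ground_truth):
    """Multiple Object Tracking Accuracy over a sequence of frames.

    Each argument is a per-frame list of counts. The score is
    1 - (FN + FP + IDSW) / GT, with all terms summed over frames.
    """
    total_gt = sum(ground_truth)
    if total_gt == 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    errors = sum(false_negatives) + sum(false_positives) + sum(id_switches)
    return 1.0 - errors / total_gt


if __name__ == "__main__":
    # Two frames with five ground-truth objects each, one miss and one false alarm.
    print(mota([1, 0], [0, 1], [0, 0], [5, 5]))
```

Because errors are summed without an upper bound, the score can become negative when a tracker makes more errors than there are ground-truth objects.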

Quadtree Driven Lossy Event Compression

May 03, 2020

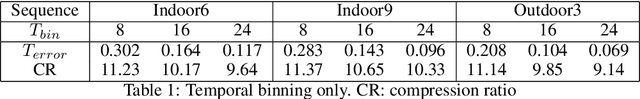

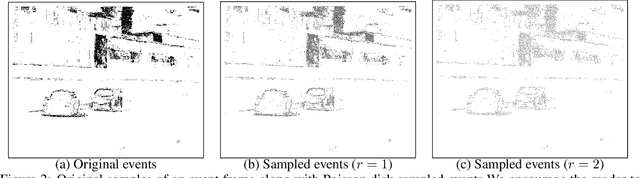

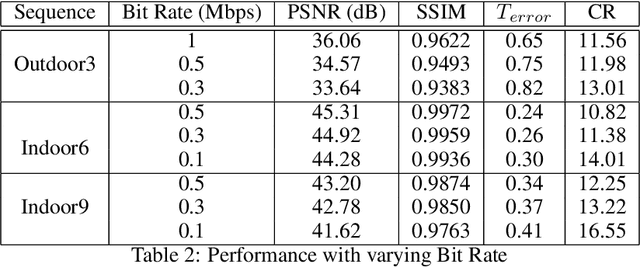

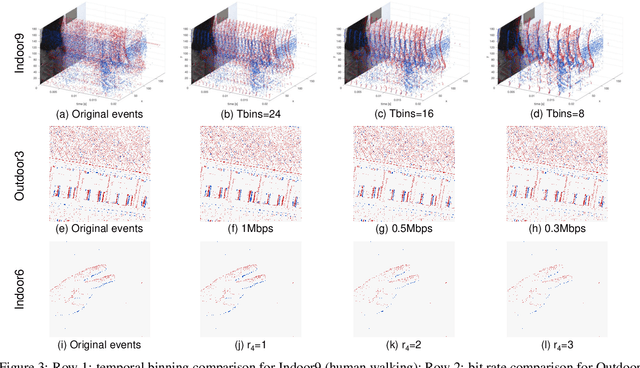

Abstract:Event cameras are emerging bio-inspired sensors that offer salient benefits over traditional cameras. With high speed, high dynamic range, and low power consumption, event cameras have been increasingly employed to solve existing as well as novel visual and robotics tasks. Despite rapid advancement in event-based vision, event data compression is facing growing demand, yet remains elusively challenging and not effectively addressed. The major challenge is the unique data form, i.e., a stream of four-attribute events, encoding the spatial locations and the timestamp of each event, with a polarity representing the brightness increase/decrease. While events encode temporal variations at high speed, they omit rich spatial information, which is critical for image/video compression. In this paper, we perform lossy event compression (LEC) based on a quadtree (QT) segmentation map derived from an adjacent image. The QT structure provides a priority map for the 3D space-time volume, albeit in a 2D manner. LEC is performed by first quantizing the events over time, and then variably compressing the events within each QT block via Poisson Disk Sampling in 2D space for each quantized time. Our QT-LEC has flexibility in accordance with the bit-rate requirement. Experimentally, we show results with state-of-the-art coding performance. We further evaluate the performance in event-based applications such as image reconstruction and corner detection.
