Henry Chopp

Event-based Shape from Polarization with Spiking Neural Networks

Dec 26, 2023

Abstract:Recent advances in event-based shape determination from polarization offer a transformative approach that tackles the trade-off between speed and accuracy in capturing surface geometries. In this paper, we investigate event-based shape from polarization using Spiking Neural Networks (SNNs), introducing the Single-Timestep and Multi-Timestep Spiking UNets for effective and efficient surface normal estimation. Specifically, the Single-Timestep model processes event-based shape as a non-temporal task, updating the membrane potential of each spiking neuron only once, thereby reducing computational and energy demands. In contrast, the Multi-Timestep model exploits temporal dynamics for enhanced data extraction. Extensive evaluations on synthetic and real-world datasets demonstrate that our models match the performance of state-of-the-art Artificial Neural Networks (ANNs) in estimating surface normals, with the added advantage of superior energy efficiency. Our work not only contributes to the advancement of SNNs in event-based sensing but also sets the stage for future explorations in optimizing SNN architectures, integrating multi-modal data, and scaling for applications on neuromorphic hardware.
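To make the single-timestep versus multi-timestep distinction concrete, here is a minimal sketch of a generic leaky integrate-and-fire (LIF) update. It is not the paper's Spiking UNet: the function names, the `decay` and `threshold` values, and the hard reset to zero are all illustrative assumptions.

```python
def lif_step(v, x, decay=0.5, threshold=1.0):
    """One leaky integrate-and-fire update for a single neuron.

    v: membrane potential before the update
    x: input current at this step
    Returns (spike, new_v). The hard reset to 0 after a spike is an
    assumption made for this sketch.
    """
    v = decay * v + x                    # leak, then integrate the input
    spike = 1 if v >= threshold else 0   # fire when the threshold is reached
    if spike:
        v = 0.0                          # hard reset
    return spike, v


def run_single_timestep(inputs, **kw):
    """Each neuron starts at rest and its potential is updated exactly once."""
    return [lif_step(0.0, x, **kw)[0] for x in inputs]


def run_multi_timestep(input_seq, **kw):
    """input_seq is a list over time of per-neuron inputs.

    The membrane potential carries over between steps, so weak inputs
    can accumulate into a spike. Returns per-neuron spike counts.
    """
    n = len(input_seq[0])
    v = [0.0] * n
    counts = [0] * n
    for frame in input_seq:
        for i, x in enumerate(frame):
            s, v[i] = lif_step(v[i], x, **kw)
            counts[i] += s
    return counts


single = run_single_timestep([0.4, 1.2])
multi = run_multi_timestep([[0.6, 0.1], [0.6, 0.1], [0.6, 0.1]])
```

In the single-timestep case only an input that already exceeds the threshold produces a spike, which is why the update is cheap. In the multi-timestep case the first neuron spikes on the third step because its potential builds up over time.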

Event-Driven Tactile Learning with Various Location Spiking Neurons

Oct 11, 2022

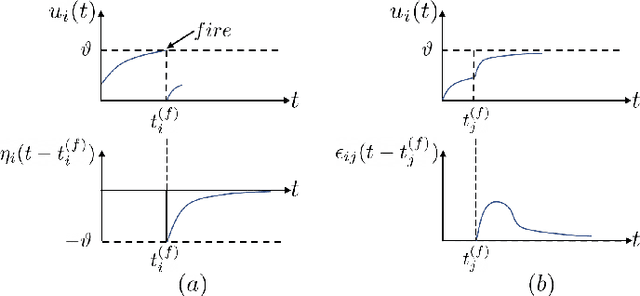

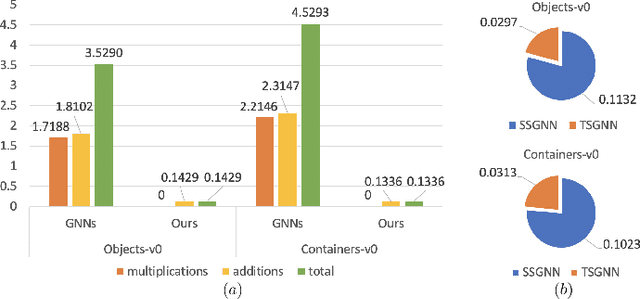

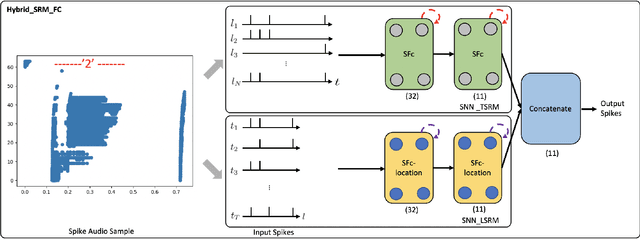

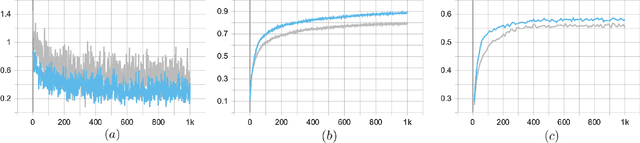

Abstract:Tactile sensing is essential for a variety of daily tasks. New advances in event-driven tactile sensors and Spiking Neural Networks (SNNs) spur the research in related fields. However, SNN-enabled event-driven tactile learning is still in its infancy due to the limited representation abilities of existing spiking neurons and high spatio-temporal complexity in the data. In this paper, to improve the representation capability of existing spiking neurons, we propose a novel neuron model called "location spiking neuron", which enables us to extract features of event-based data in a novel way. Specifically, based on the classical Time Spike Response Model (TSRM), we develop the Location Spike Response Model (LSRM). In addition, based on the most commonly-used Time Leaky Integrate-and-Fire (TLIF) model, we develop the Location Leaky Integrate-and-Fire (LLIF) model. By exploiting the novel location spiking neurons, we propose several models to capture the complex spatio-temporal dependencies in the event-driven tactile data. Extensive experiments demonstrate the significant improvements of our models over other works on event-driven tactile learning and show the superior energy efficiency of our models and location spiking neurons, which may unlock their potential on neuromorphic hardware.

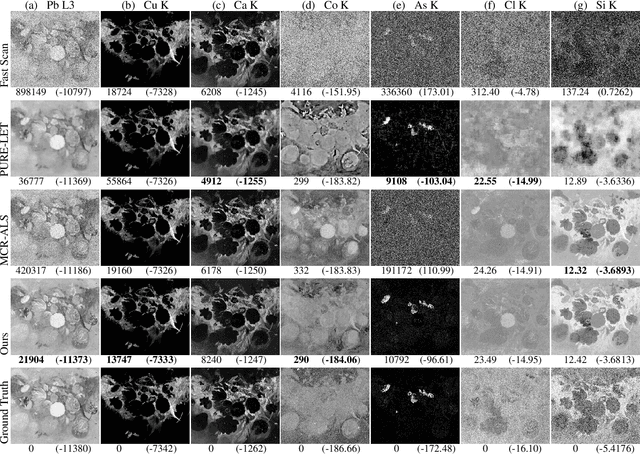

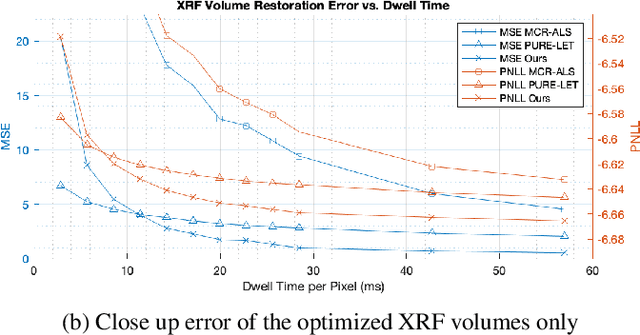

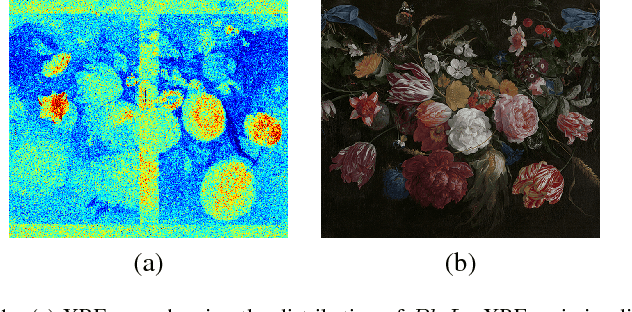

Denoising Fast X-Ray Fluorescence Raster Scans of Paintings

Jun 03, 2022

Abstract:Macro x-ray fluorescence (XRF) imaging of cultural heritage objects is a popular non-invasive technique for providing elemental distribution maps, but acquiring XRF volumes with a high signal-to-noise ratio is slow. A raster scanning probe counts the number of photons at different energies emitted by the object under x-ray illumination, typically dwelling on the order of tenths of a second per pixel. In an effort to reduce the scan times without sacrificing elemental map and XRF volume quality, we propose using dictionary learning with a Poisson noise model as well as a color image-based prior to restore noisy, rapidly acquired XRF data.
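As a rough illustration of what a Poisson noise model means for photon counts, the sketch below scores candidate dictionary coefficients by the Poisson negative log-likelihood of an observed spectrum. The two-atom dictionary, the counts, and the function names are made up for the example. The paper's actual dictionary learning procedure and its color image-based prior are not reproduced here.

```python
import math


def synthesize(atoms, coeffs):
    """Expected photon counts per energy bin: a weighted sum of dictionary atoms."""
    bins = len(atoms[0])
    return [sum(a * atom[b] for a, atom in zip(coeffs, atoms)) for b in range(bins)]


def poisson_nll(counts, rates):
    """Negative log-likelihood of observed counts under Poisson rates.

    Every rate must be strictly positive. lgamma(k + 1) equals log(k!).
    """
    total = 0.0
    for k, lam in zip(counts, rates):
        total += lam - k * math.log(lam) + math.lgamma(k + 1)
    return total


# Hypothetical two-atom dictionary over three energy bins.
atoms = [[4.0, 1.0, 0.5],
         [0.5, 1.0, 4.0]]
observed = [9, 3, 3]  # made-up noisy photon counts for one pixel

nll_a = poisson_nll(observed, synthesize(atoms, (2.0, 0.5)))
nll_b = poisson_nll(observed, synthesize(atoms, (0.5, 2.0)))
better = "a" if nll_a < nll_b else "b"
```

Candidate `a` weights the first atom heavily, which matches the strong first bin in the observed counts, so it gets the lower negative log-likelihood. A restoration method built on this model would search for the coefficients that minimize such a score, usually together with a sparsity or prior term.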

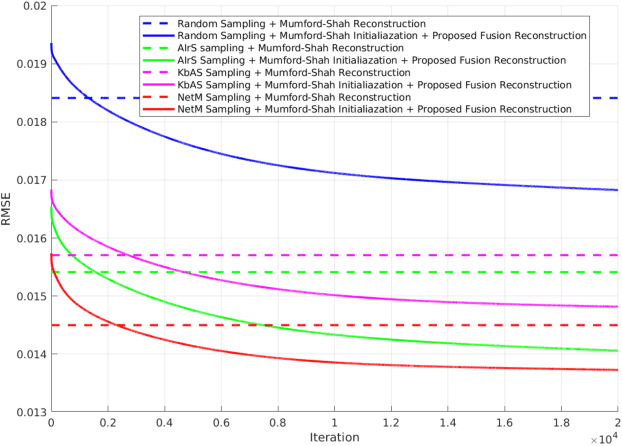

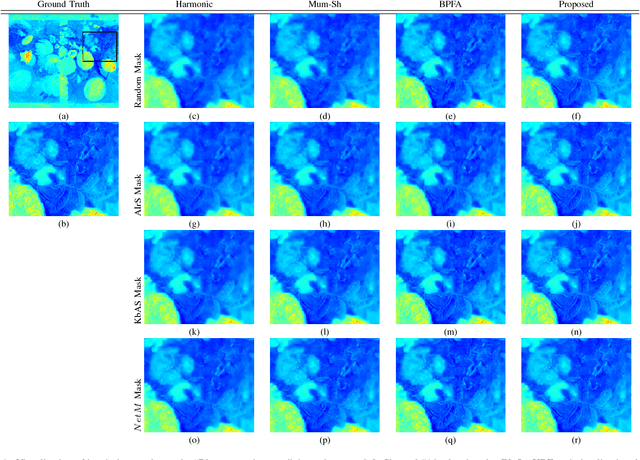

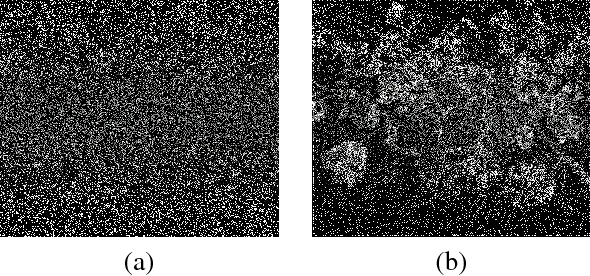

Adaptive Image Sampling using Deep Learning and its Application on X-Ray Fluorescence Image Reconstruction

Jan 04, 2019

Abstract:This paper presents an adaptive image sampling algorithm based on Deep Learning (DL). The adaptive sampling mask generation network is jointly trained with an image inpainting network. The sampling rate is controlled in the mask generation network, and a binarization strategy is investigated to make the sampling mask binary. Besides the image sampling and reconstruction application, we show that the proposed adaptive sampling algorithm is able to speed up raster scan processes such as the X-Ray fluorescence (XRF) image scanning process. Recently, XRF laboratory-based systems have evolved to lightweight and portable instruments thanks to technological advancements in both X-Ray generation and detection. However, the scanning time of an XRF image is usually long due to the long exposures required (e.g., $100\,\mu\text{s}$ to $1\,\text{ms}$ per point). We propose an XRF image inpainting approach to address the issue of long scanning time, thus speeding up the scanning process while still maintaining the possibility to reconstruct a high quality XRF image. The proposed adaptive image sampling algorithm is applied to the RGB image of the scanning target to generate the sampling mask. The XRF scanner is then driven according to the sampling mask to scan a subset of the total image pixels. Finally, we inpaint the scanned XRF image by fusing the RGB image to reconstruct the full scan XRF image. The experiments show that the proposed adaptive sampling algorithm is able to effectively sample the image and achieve a better reconstruction accuracy than that of the existing methods.
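The step from a real-valued mask to a binary sampling mask at a fixed sampling rate can be sketched as follows. The abstract does not describe the binarization strategy the authors investigate, so this example substitutes a simple top-k rule: keep the highest-scoring fraction of pixels. The function name and the score values are hypothetical.

```python
def binarize_top_k(score_mask, rate):
    """Turn a 2D grid of real-valued scores into a binary sampling mask.

    Keeps the round(rate * N) highest-scoring pixels, where N is the
    number of pixels. This top-k rule is a stand-in for illustration,
    not the binarization strategy from the paper.
    """
    flat = [(score, r, c)
            for r, row in enumerate(score_mask)
            for c, score in enumerate(row)]
    k = max(0, min(len(flat), round(rate * len(flat))))
    flat.sort(key=lambda t: -t[0])  # highest scores first; ties keep scan order
    chosen = {(r, c) for _, r, c in flat[:k]}
    return [[1 if (r, c) in chosen else 0 for c in range(len(row))]
            for r, row in enumerate(score_mask)]


# Hypothetical scores, e.g. produced by a mask generation network from the RGB image.
scores = [[0.9, 0.1],
          [0.4, 0.8]]
mask = binarize_top_k(scores, rate=0.5)

# Pixels the scanner would visit, in raster order.
scan_points = [(r, c) for r, row in enumerate(mask) for c, m in enumerate(row) if m]
```

With a rate of 0.5 only two of the four pixels are scanned, and the remaining pixels would be filled in by the inpainting stage.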
