Ross J. Harder

AutoPhaseNN: Unsupervised Physics-aware Deep Learning of 3D Nanoscale Coherent Imaging

Sep 28, 2021

Abstract: The problem of phase retrieval, or the algorithmic recovery of lost phase information from measured intensity alone, underlies various imaging methods from astronomy to nanoscale imaging. Traditional methods of phase retrieval are iterative in nature, and are therefore computationally expensive and time consuming. More recently, deep learning (DL) models have been developed to either provide learned priors to iterative phase retrieval or, in some cases, completely replace phase retrieval with networks that learn to recover the lost phase information from measured intensity alone. However, such models require vast amounts of labeled data, which can only be obtained through simulation or by performing computationally prohibitive phase retrieval on hundreds or even thousands of experimental datasets. Using a 3D nanoscale X-ray imaging modality (Bragg Coherent Diffraction Imaging, or BCDI) as a representative technique, we demonstrate AutoPhaseNN, a DL-based approach that learns to solve the phase problem without labeled data. By incorporating the physics of the imaging technique into the DL model during training, AutoPhaseNN learns to invert 3D BCDI data from reciprocal space to real space in a single shot without ever being shown real-space images. Once trained, AutoPhaseNN is about one hundred times faster than traditional iterative phase retrieval methods while providing comparable image quality.
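The idea of training without real-space labels can be illustrated with a minimal NumPy sketch. It is not the AutoPhaseNN implementation: the function names (`diffraction_magnitude`, `physics_loss`), the normalised absolute-error loss, and the toy cubic object are all assumptions chosen for illustration. The sketch only shows that a predicted real-space amplitude and phase can be scored against measured data by passing them through a Fourier-transform forward model, so no ground-truth image is needed.

```python
import numpy as np

def diffraction_magnitude(amplitude, phase):
    """Hypothetical forward model: far-field magnitude of a complex object."""
    obj = amplitude * np.exp(1j * phase)
    return np.abs(np.fft.fftn(obj, norm="ortho"))

def physics_loss(pred_amplitude, pred_phase, measured_magnitude):
    """Normalised absolute error between simulated and measured magnitudes.

    Only the measured diffraction magnitude is used as the target,
    never a real-space image.
    """
    simulated = diffraction_magnitude(pred_amplitude, pred_phase)
    return np.sum(np.abs(simulated - measured_magnitude)) / np.sum(measured_magnitude)

# Toy 3D object (assumed for illustration): a cube with a random phase inside it.
rng = np.random.default_rng(0)
shape = (16, 16, 16)
true_amp = np.zeros(shape)
true_amp[4:12, 4:12, 4:12] = 1.0
true_phase = 0.3 * rng.standard_normal(shape) * true_amp
measured = diffraction_magnitude(true_amp, true_phase)

# The loss vanishes for the true object and is positive for a wrong phase guess.
loss_true = physics_loss(true_amp, true_phase, measured)
loss_wrong = physics_loss(true_amp, np.zeros(shape), measured)
print(loss_true, loss_wrong)
```

In a real training loop the predicted amplitude and phase would come from a network and the loss would be differentiated with an autodiff framework; NumPy is used here only to keep the forward model easy to read.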

3D coherent x-ray imaging via deep convolutional neural networks

Feb 26, 2021

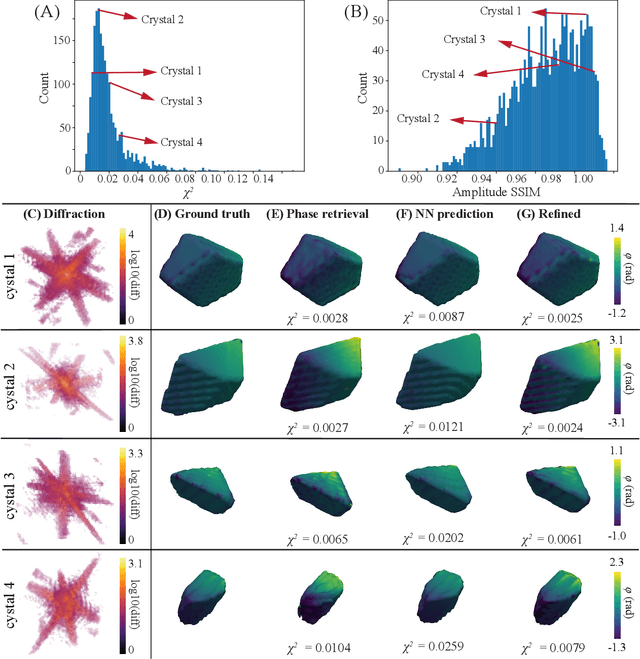

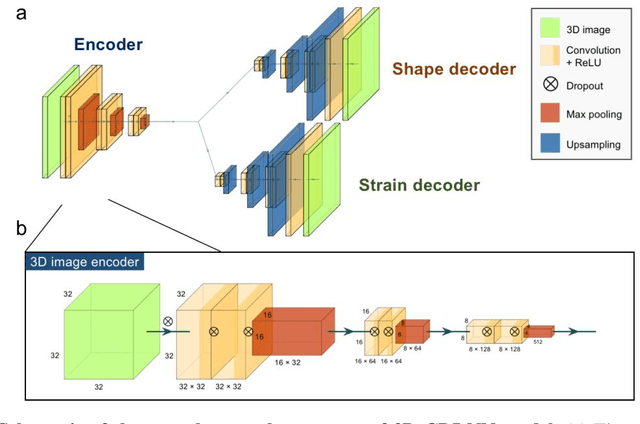

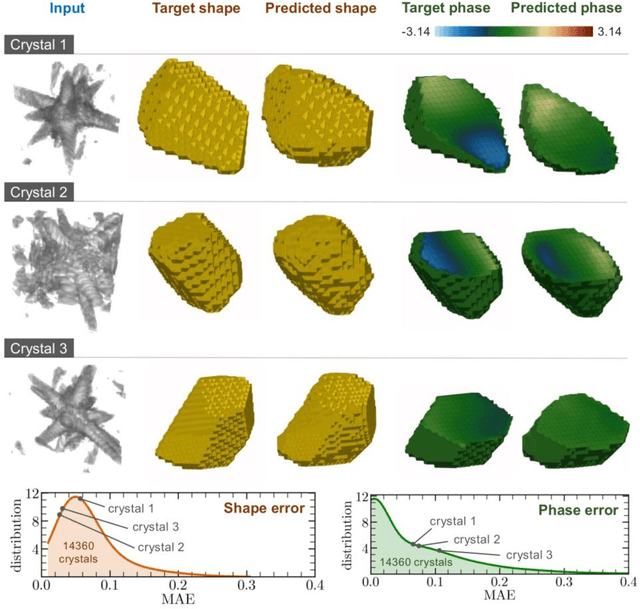

Abstract: As a critical component of coherent X-ray diffraction imaging (CDI), phase retrieval has been extensively applied in X-ray structural science to recover the 3D morphological information inside measured particles. Despite meeting all the oversampling requirements of Sayre and Shannon, current phase retrieval approaches still have trouble achieving a unique inversion of experimental data in the presence of noise. Here, we propose to overcome this limitation by incorporating a 3D machine learning (ML) model that combines (optional) supervised training with unsupervised refinement. The trained ML model can rapidly provide a result with high accuracy, which will benefit real-time experiments. More significantly, the neural network model can be used without any prior training to learn the missing phases of an image based on minimization of an appropriate loss function alone. We demonstrate significantly improved performance with experimental Bragg CDI data over traditional iterative phase retrieval algorithms.

Real-time 3D Nanoscale Coherent Imaging via Physics-aware Deep Learning

Jun 16, 2020

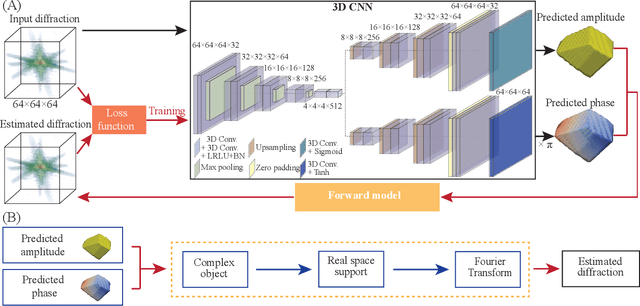

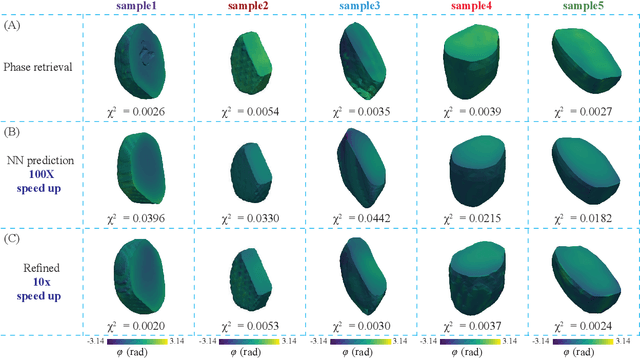

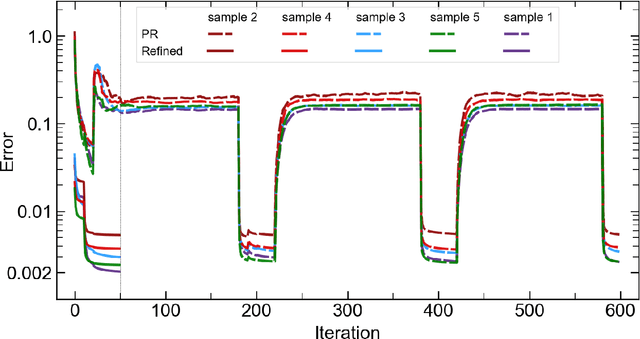

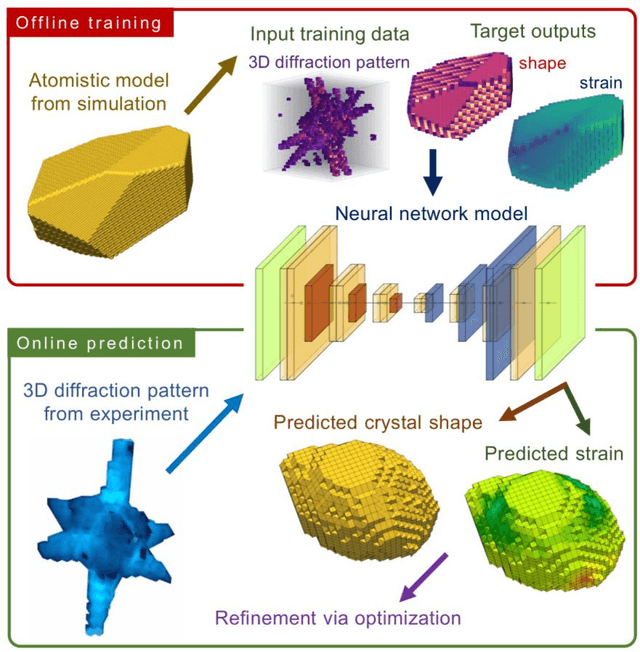

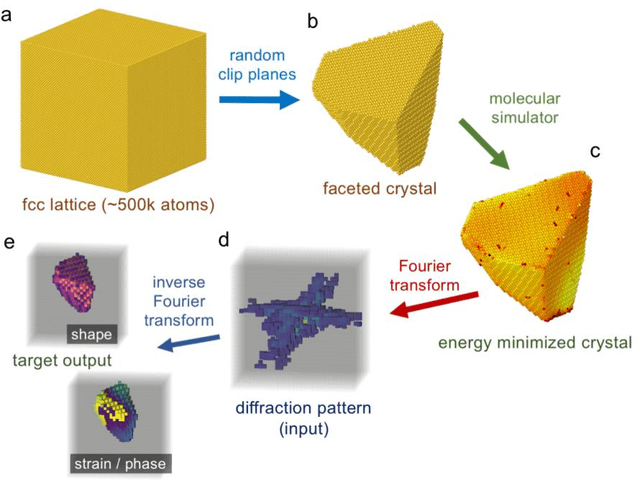

Abstract: Phase retrieval, the problem of recovering lost phase information from measured intensity alone, is an inverse problem that is widely faced in various imaging modalities ranging from astronomy to nanoscale imaging. The current process of phase recovery is iterative in nature. As a result, the image formation is time-consuming and computationally expensive, precluding real-time imaging. Here, we use 3D nanoscale X-ray imaging as a representative example to develop a deep learning model to address this phase retrieval problem. We introduce 3D-CDI-NN, a deep convolutional neural network and differential programming framework trained to predict 3D structure and strain solely from input 3D X-ray coherent scattering data. Our networks are designed to be "physics-aware" in multiple respects: the physics of the X-ray scattering process is explicitly enforced in the training of the network, and the training data are drawn from atomistic simulations that are representative of the physics of the material. We further refine the neural network prediction through a physics-based optimization procedure to enable maximum accuracy at lowest computational cost. 3D-CDI-NN can invert a 3D coherent diffraction pattern to real-space structure and strain hundreds of times faster than traditional iterative phase retrieval methods, with negligible loss in accuracy. Our integrated machine learning and differential programming solution to the phase retrieval problem is broadly applicable across inverse problems in other application areas.

Real-time sparse-sampled Ptychographic imaging through deep neural networks

Apr 15, 2020

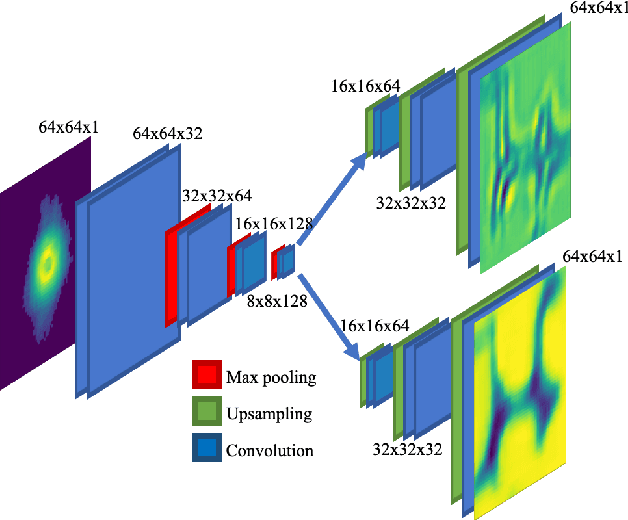

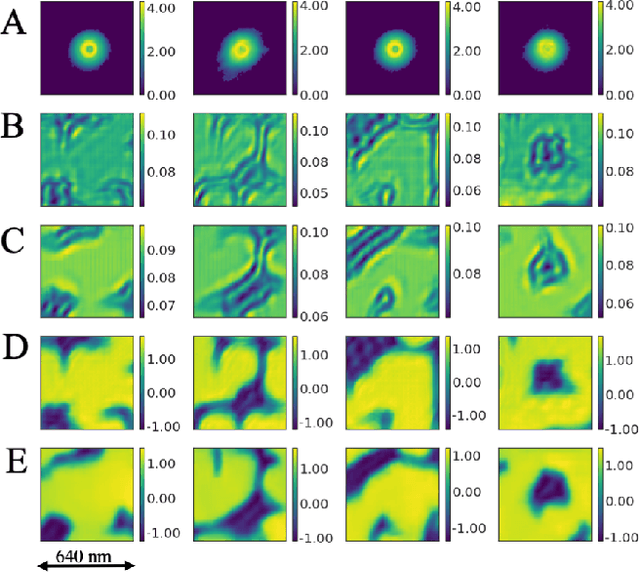

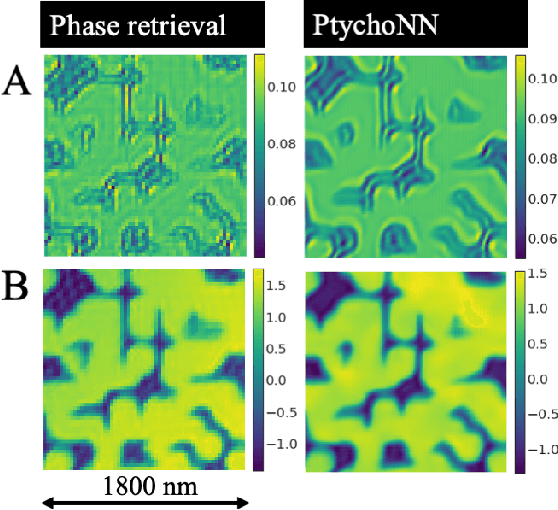

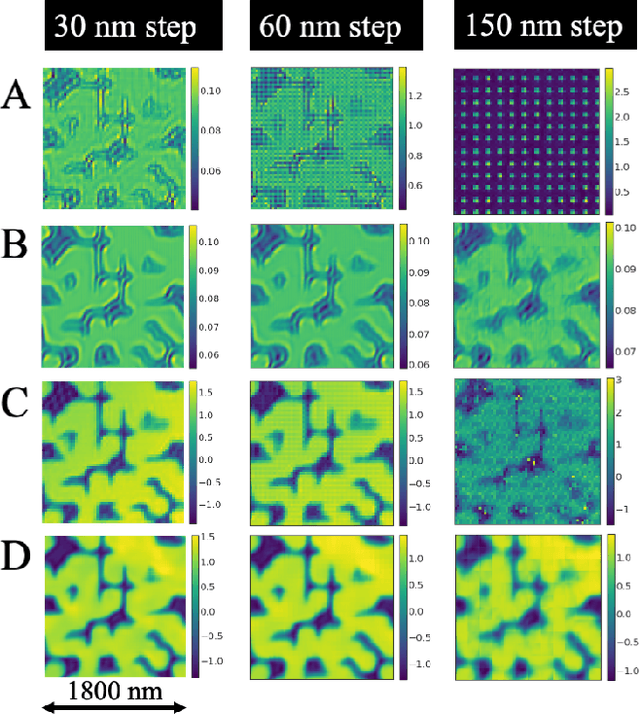

Abstract: Ptychography has rapidly grown in the fields of X-ray and electron imaging for its unprecedented ability to achieve nanoscale or atomic-scale resolution while simultaneously retrieving chemical or magnetic information from a sample. A ptychographic reconstruction is achieved by solving a complex inverse problem that imposes constraints both on the acquisition and on the analysis of the data, which typically precludes real-time imaging due to the computational cost involved in solving this inverse problem. In this work we propose PtychoNN, a novel approach to solve the ptychography reconstruction problem based on deep convolutional neural networks. We demonstrate how the proposed method can be used to predict real-space structure and phase at each scan point solely from the corresponding far-field diffraction data. The presented results demonstrate how PtychoNN can effectively be used on experimental data, generating high-quality reconstructions of a sample up to hundreds of times faster than state-of-the-art ptychography reconstruction solutions once trained. By surpassing the typical constraints of iterative model-based methods, we can significantly relax the data acquisition sampling conditions and produce equally satisfactory reconstructions. Besides drastically accelerating acquisition and analysis, this capability can enable new imaging scenarios that were not possible before, in cases of dose-sensitive, dynamic and extremely voluminous samples.

Real-time coherent diffraction inversion using deep generative networks

Jun 07, 2018

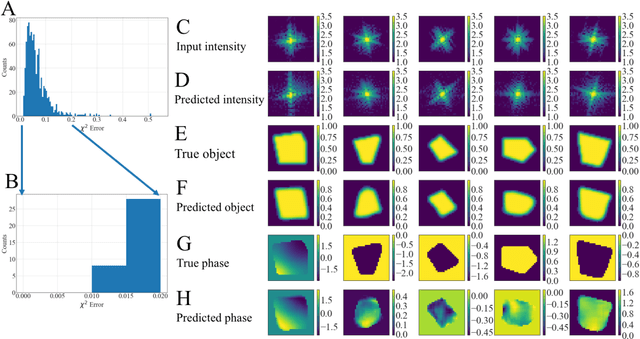

Abstract: Phase retrieval, or the process of recovering phase information in reciprocal space to reconstruct images from measured intensity alone, is the underlying basis of a variety of imaging applications including coherent diffraction imaging (CDI). Typical phase retrieval algorithms are iterative in nature, and hence are time-consuming and computationally expensive, precluding real-time imaging. Furthermore, iterative phase retrieval algorithms struggle to converge to the correct solution, especially in the presence of strong phase structures. In this work, we demonstrate the training and testing of CDI NN, a pair of deep deconvolutional networks trained to predict structure and phase in real space of a 2D object from its corresponding far-field diffraction intensities alone. Once trained, CDI NN can invert a diffraction pattern to an image within a few milliseconds of compute time on a standard desktop machine, opening the door to real-time imaging.
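For context on the iterative baseline the abstract contrasts against, the following is a minimal sketch of one classic scheme, the error-reduction algorithm, on a toy 2D object. It is not taken from the paper: the function name `error_reduction`, the known square support, the iteration count, and the test object are assumptions for illustration. Each iteration needs a forward and an inverse FFT, which is why such methods become expensive when many iterations are required.

```python
import numpy as np

def error_reduction(measured_magnitude, support, n_iter=100, seed=0):
    """Illustrative error-reduction loop alternating two constraints.

    Fourier space: keep the current phase, impose the measured magnitude.
    Real space: zero everything outside the (assumed known) support.
    Returns the final object estimate and the Fourier error per iteration.
    """
    rng = np.random.default_rng(seed)
    phase0 = rng.uniform(-np.pi, np.pi, measured_magnitude.shape)
    obj = np.fft.ifftn(measured_magnitude * np.exp(1j * phase0)) * support
    errors = []
    for _ in range(n_iter):
        spectrum = np.fft.fftn(obj)
        # Relative mismatch between current and measured Fourier magnitudes.
        errors.append(
            np.linalg.norm(np.abs(spectrum) - measured_magnitude)
            / np.linalg.norm(measured_magnitude)
        )
        # Fourier-space constraint: replace magnitude, keep phase.
        spectrum = measured_magnitude * np.exp(1j * np.angle(spectrum))
        # Real-space constraint: enforce the support.
        obj = np.fft.ifftn(spectrum) * support
    return obj, errors

# Toy 2D object (assumed): a complex-valued square inside an oversampled array.
rng = np.random.default_rng(1)
support = np.zeros((32, 32))
support[10:22, 10:22] = 1.0
true_obj = support * np.exp(1j * 0.5 * rng.standard_normal((32, 32)))
measured = np.abs(np.fft.fftn(true_obj))

recon, errors = error_reduction(measured, support)
print(errors[0], errors[-1])
```

The Fourier error does not increase from one iteration to the next, but the loop can stagnate well short of the true object, which is one reason practical pipelines combine several iterative schemes and many restarts.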
