Muhammad Shalihan

Distributed multi-robot potential-field-based exploration with submap-based mapping and noise-augmented strategy

Jul 10, 2024

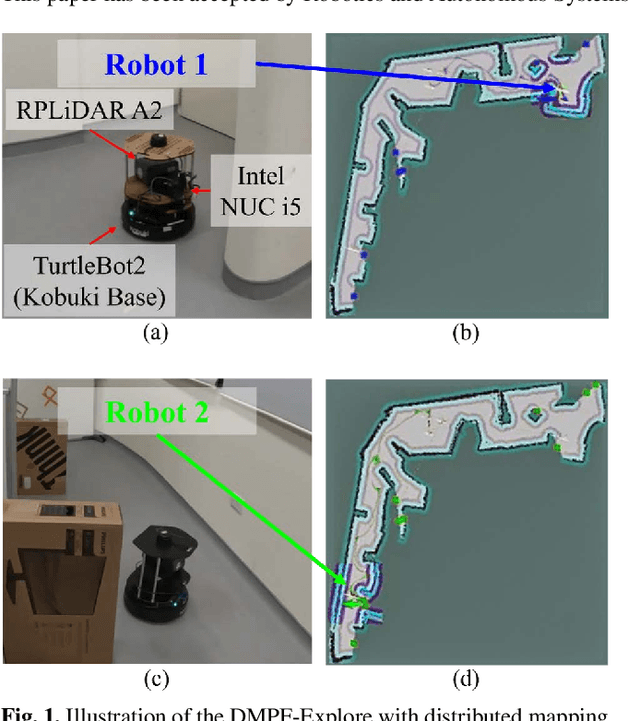

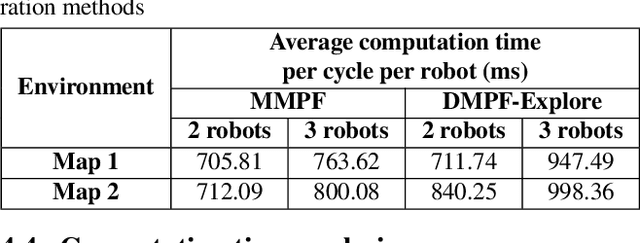

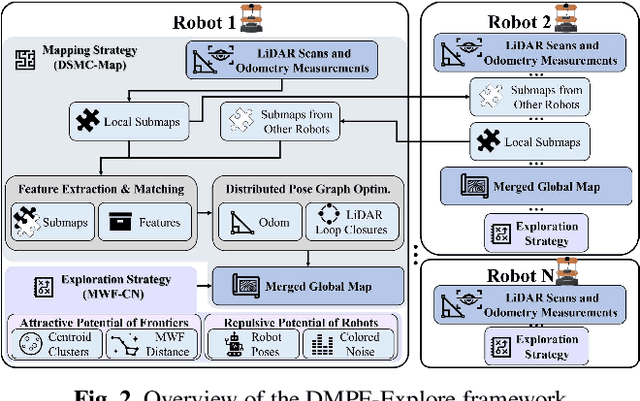

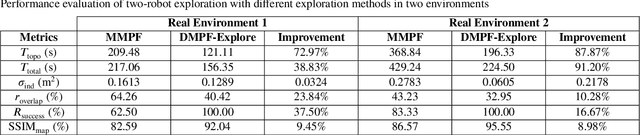

Abstract: Multi-robot collaboration has become an essential component of unknown-environment exploration because a team of robots can handle a variety of challenging situations. Potential-field-based methods are widely used for autonomous exploration because of their high efficiency and low travel cost. However, exploration speed and collaboration ability remain challenging. Therefore, we propose Distributed Multi-Robot Potential-Field-Based Exploration (DMPF-Explore). In particular, we first present a Distributed Submap-Based Multi-Robot Collaborative Mapping Method (DSMC-Map), which efficiently estimates the robot trajectories and constructs the global map by merging the local maps from each robot. Second, we introduce a Potential-Field-Based Exploration Strategy Augmented with Modified Wave-Front Distance and Colored Noises (MWF-CN), in which the accessible frontier neighborhood is extended and colored noise enhances exploration performance. The proposed exploration method is deployed in simulation and real-world scenarios. The results show that our approach outperforms existing ones in exploration speed and collaboration ability.
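As a rough illustration of the wave-front distance mentioned in the abstract, the sketch below computes a plain (unmodified) wave-front distance on a small occupancy grid using breadth-first search. It is not the MWF-CN strategy itself: the grid encoding (`0` free, `1` obstacle), the 4-connected neighborhood, and the function name `wavefront_distance` are assumptions made for this example only.

```python
from collections import deque

def wavefront_distance(grid, start):
    """Plain wave-front (BFS) distance from `start` to every free cell.

    Assumed encoding: grid[r][c] == 0 is free, 1 is an obstacle.
    Unreachable cells and obstacles keep the value None.
    """
    rows, cols = len(grid), len(grid[0])
    dist = [[None] * cols for _ in range(rows)]
    sr, sc = start
    dist[sr][sc] = 0
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        # Expand the wave front to the 4-connected neighbors.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and dist[nr][nc] is None:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist

# Hypothetical 3x4 map with a wall in the middle row.
grid = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
dist = wavefront_distance(grid, (0, 0))
for row in dist:
    print(row)
```

Unlike a straight-line distance, the wave-front distance follows the free space around obstacles, which is why it is a natural cost for ranking frontier cells in a potential field.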

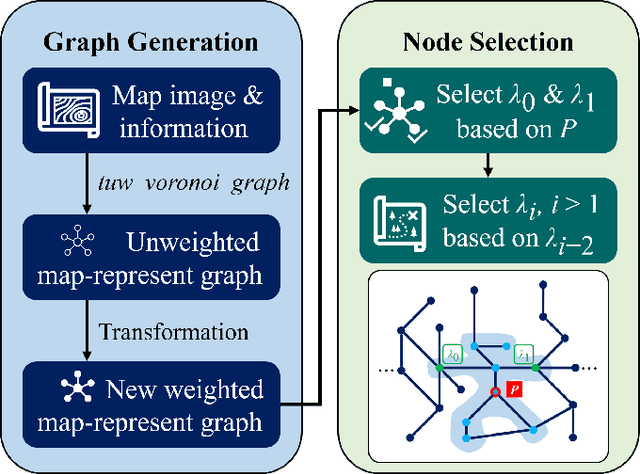

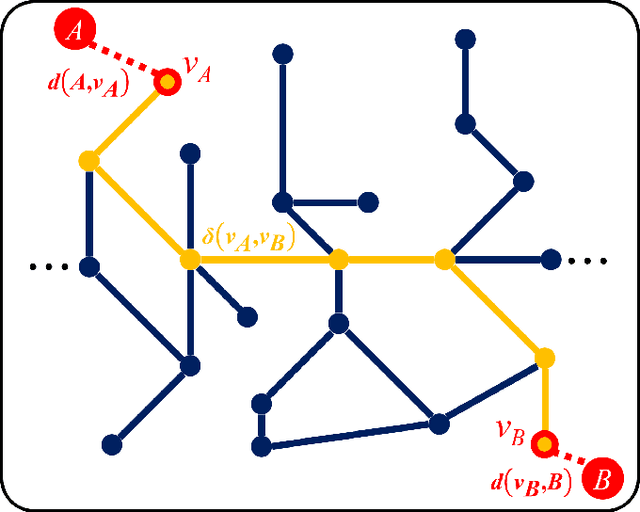

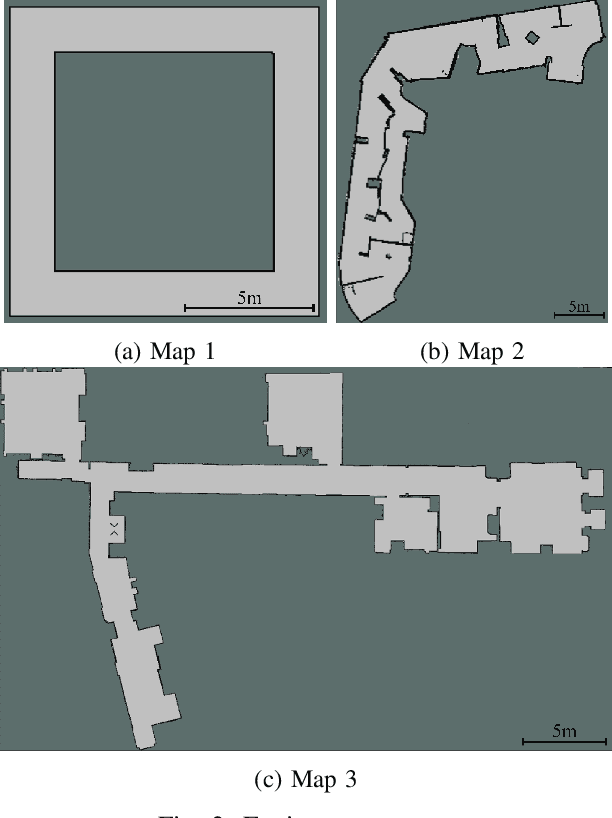

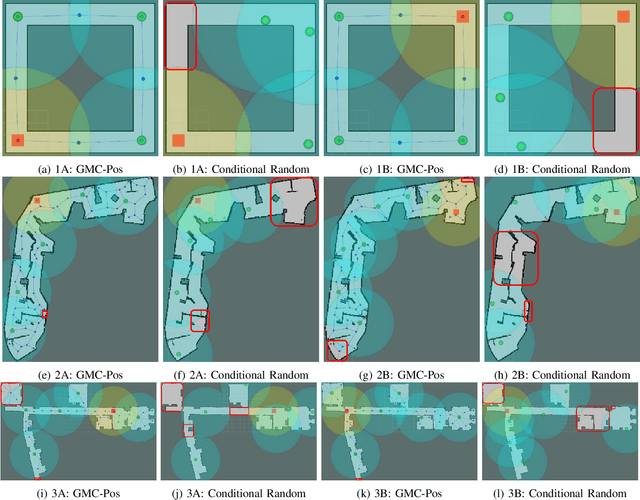

GMC-Pos: Graph-Based Multi-Robot Coverage Positioning Method

Oct 18, 2023

Abstract: Many real-world tasks, such as target search, environmental monitoring, and post-disaster rescue, require adequate environment coverage to maintain communication between multiple robots. In this study, we consider a scenario with a human operator and multiple robots, and we assume that each human or robot covers a certain range of area. The goal is to maximize their collective area of coverage. Therefore, we propose the Graph-Based Multi-Robot Coverage Positioning Method (GMC-Pos) to find strategic positions for robots that maximize the area coverage. Our approach consists of two main modules: graph generation and node selection. First, graph generation represents the environment as a weighted connected graph. Then, we present a novel generalized graph-based distance and use it together with the graph degrees as the conditions for recursive node selection. Our method is deployed in three environments with different settings. The results show that it outperforms the benchmark method by 15.13% to 24.88% in area coverage percentage.

Moving Object Localization based on the Fusion of Ultra-WideBand and LiDAR with a Mobile Robot

Oct 16, 2023

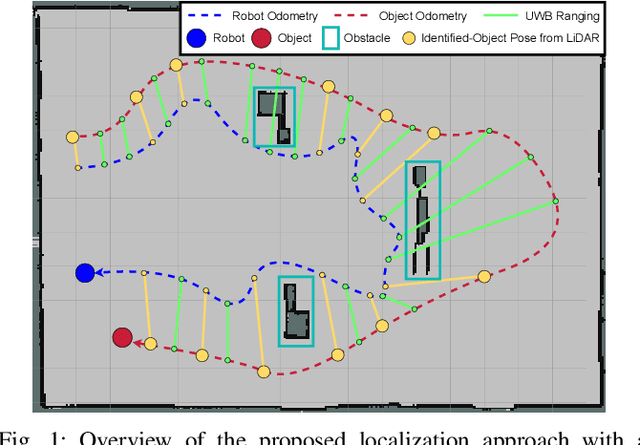

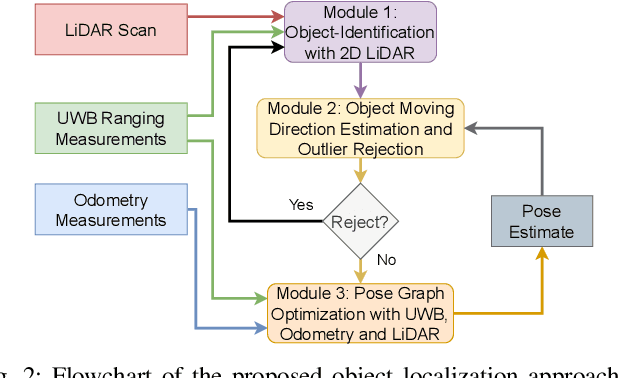

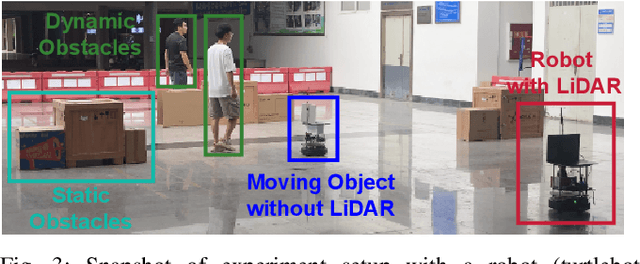

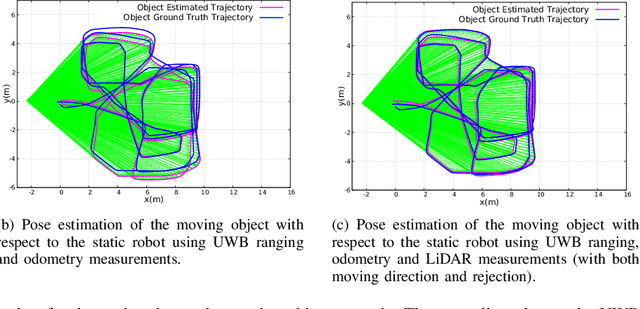

Abstract: Localization of objects is vital for robot-object interaction. Light Detection and Ranging (LiDAR) is an emerging and widely used object localization technique in robotics due to its accurate distance measurement, long range, wide field of view, and robustness in different conditions. However, LiDAR is unable to identify objects when they are obstructed by obstacles, resulting in inaccuracy and noise in localization. To address this issue, we present an approach incorporating LiDAR and Ultra-Wideband (UWB) ranging for object localization. UWB is popular in sensor fusion localization algorithms due to its low weight and low power consumption. In addition, UWB is able to return ranging measurements even when the object is not within line-of-sight. Our approach provides an efficient solution to combine an anonymous optical sensor (LiDAR) with an identity-based radio sensor (UWB) to improve the localization accuracy of the object. Our approach consists of three modules. The first module is an object-identification algorithm that compares successive LiDAR scans to detect a moving object in the environment and returns the position whose range is closest to the UWB ranging. The second module estimates the object's moving direction using the previous and current estimated positions from the object-identification module, and removes suspicious estimates through an outlier rejection criterion. Lastly, we fuse the LiDAR, UWB ranging, and odometry measurements in pose graph optimization (PGO) to recover the entire trajectory of the robot and object. Extensive experiments were performed to evaluate the performance of the proposed approach.

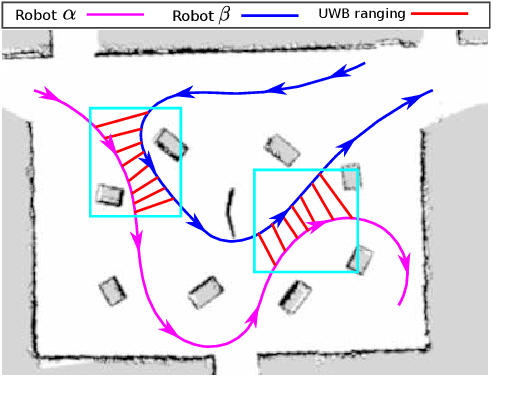

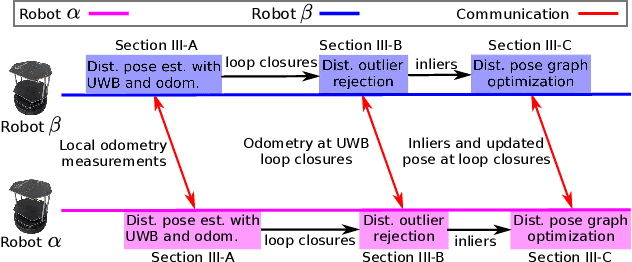

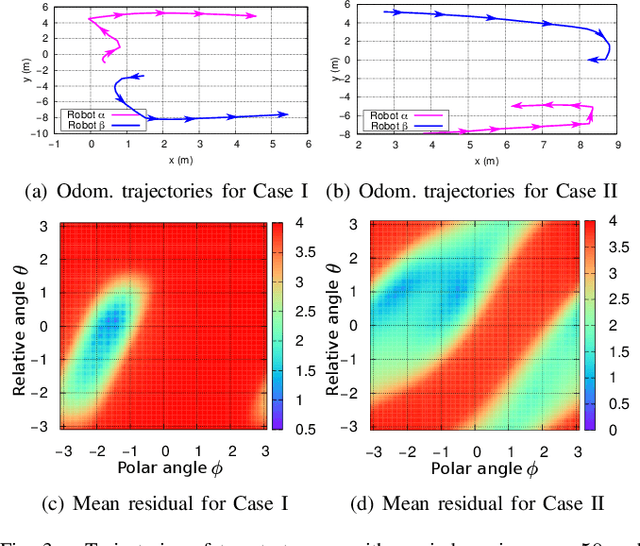

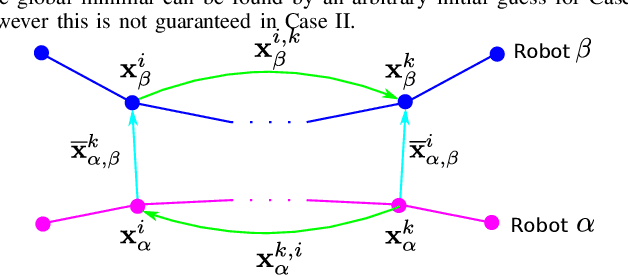

Distributed Ranging SLAM for Multiple Robots with Ultra-WideBand and Odometry Measurements

Jul 08, 2022

Abstract: To accomplish tasks efficiently in a multi-robot system, a problem that has to be addressed is Simultaneous Localization and Mapping (SLAM). LiDAR (Light Detection and Ranging) has been used in many SLAM solutions due to its superb accuracy, but its performance degrades in featureless environments such as tunnels or long corridors. Centralized SLAM solves the problem with a cloud server, which requires a huge amount of computational resources and lacks robustness against central node failure. To address these issues, we present a distributed SLAM solution to estimate the trajectory of a group of robots using Ultra-WideBand (UWB) ranging and odometry measurements. The proposed approach distributes the processing among the robot team and significantly mitigates the computational burden that arises in centralized SLAM. Our solution determines the relative pose (also known as loop closure) between two robots by minimizing the UWB ranging measurements taken at different positions when the robots are in close proximity. UWB provides a good distance measure in line-of-sight conditions, but retrieving a precise pose estimate remains a challenge due to ranging noise and the unpredictable path traveled by the robot. To deal with suspicious loop closures, we use Pairwise Consistency Maximization (PCM) to examine the quality of loop closures and perform outlier rejection. The filtered loop closures are then fused with odometry in a distributed pose graph optimization (DPGO) module to recover the full trajectory of the robot team. Extensive experiments are conducted to validate the effectiveness of the proposed approach.

NLOS Ranging Mitigation with Neural Network Model for UWB Localization

Jun 22, 2022

Abstract: Localization of robots is vital for navigation and path planning, such as in cases where a map of the environment is needed. Ultra-Wideband (UWB) for indoor location systems has been gaining popularity over the years with the introduction of low-cost UWB modules providing centimetre-level accuracy. However, in the presence of obstacles in the environment, Non-Line-Of-Sight (NLOS) measurements from the UWB will produce inaccurate results. As low-cost UWB devices do not provide channel information, we propose an approach that uses a Neural Network (NN) model to decide whether a measurement is within Line-Of-Sight (LOS), based on the signal strength information that low-cost UWB modules do provide. The output of this model is the probability that a ranging measurement is LOS, which is then used for localization through the Weighted-Least-Square (WLS) method. Our approach improves localization accuracy by 16.93% on the lobby testing data and 27.97% on the corridor testing data using the NN model trained with all extracted inputs from the office training data.
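To make the weighting idea concrete, the following is a minimal sketch of range-based weighted least squares in 2D, solved with Gauss-Newton iterations. The abstract does not specify how the LOS probability enters the weights, so using the probability directly as the weight is an assumption here, as are the anchor layout, the 1 m NLOS bias, and the function name `wls_localize`.

```python
import numpy as np

def wls_localize(anchors, ranges, weights, x0, iters=20):
    """Gauss-Newton weighted least squares for 2D range-based localization.

    anchors: (n, 2) known anchor positions
    ranges:  (n,) measured distances to each anchor
    weights: (n,) per-measurement weights (assumed here: LOS probability)
    x0:      initial position guess (must not coincide with an anchor)
    """
    x = np.asarray(x0, dtype=float)
    W = np.diag(weights)
    for _ in range(iters):
        diff = x - anchors                      # (n, 2)
        pred = np.linalg.norm(diff, axis=1)     # predicted ranges
        J = diff / pred[:, None]                # Jacobian of pred w.r.t. x
        r = ranges - pred                       # range residuals
        dx = np.linalg.solve(J.T @ W @ J, J.T @ W @ r)
        x = x + dx
        if np.linalg.norm(dx) < 1e-9:
            break
    return x

# Hypothetical setup: four anchors, one of them NLOS with a +1 m range bias.
anchors = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0], [4.0, 4.0]])
true_pos = np.array([1.0, 2.0])
exact = np.linalg.norm(true_pos - anchors, axis=1)
ranges = exact + np.array([0.0, 0.0, 0.0, 1.0])

p_los = np.array([0.95, 0.95, 0.95, 0.05])      # assumed NN outputs
est_weighted = wls_localize(anchors, ranges, p_los, x0=[2.0, 2.0])
est_uniform = wls_localize(anchors, ranges, np.ones(4), x0=[2.0, 2.0])

print("weighted error:", np.linalg.norm(est_weighted - true_pos))
print("uniform error: ", np.linalg.norm(est_uniform - true_pos))
```

In this toy setup, down-weighting the measurement flagged as likely NLOS pulls the estimate closer to the true position than treating all ranges equally.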

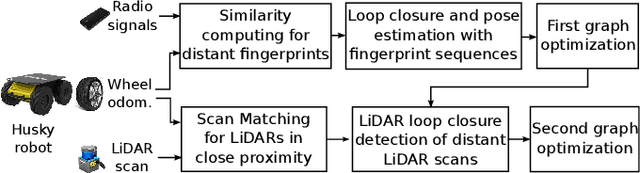

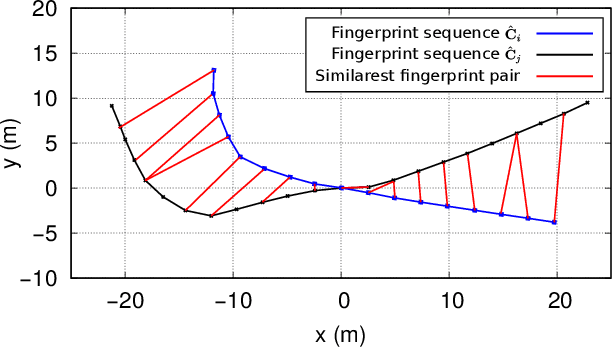

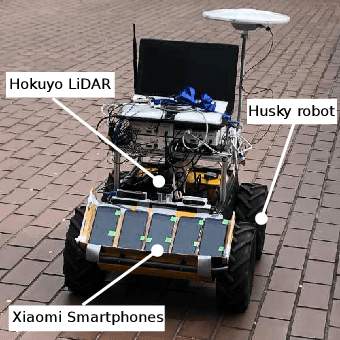

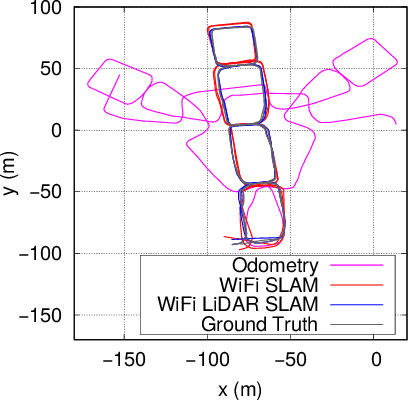

Efficient WiFi LiDAR SLAM for Autonomous Robots in Large Environments

Jun 17, 2022

Abstract: Autonomous robots operating in indoor and GPS-denied environments can use LiDAR for SLAM. However, LiDARs do not perform well in geometrically degraded environments due to the challenge of loop closure detection and the computational load of scan matching. Existing WiFi infrastructure can be exploited for localization and mapping at low hardware and computational cost. Yet accurate pose estimation using WiFi is challenging, as different signal values can be measured at the same location due to the unpredictability of signal propagation. Therefore, we introduce the use of WiFi fingerprint sequences for pose estimation (i.e., loop closure) in SLAM. This approach exploits the spatial coherence of location fingerprints obtained while a mobile robot is moving, which improves its ability to correct odometry drift. The method also incorporates LiDAR scans, thereby improving computational efficiency in large and geometrically degraded environments while maintaining the accuracy of LiDAR SLAM. We conducted experiments in an indoor environment to illustrate the effectiveness of the method. The results are evaluated using Root Mean Square Error (RMSE), and the method achieves an accuracy of 0.88 m in the test environment.
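For readers unfamiliar with the metric, the sketch below shows one common way to compute a position RMSE between an estimated trajectory and ground truth. The abstract does not state exactly how the error is defined, so the per-pose Euclidean error, the 2D poses, and the function name `trajectory_rmse` are assumptions for illustration.

```python
import math

def trajectory_rmse(estimated, ground_truth):
    """Root mean square of per-pose Euclidean position errors (2D).

    Both inputs are equal-length sequences of (x, y) positions that are
    assumed to be time-aligned already.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and equal length")
    squared_errors = [
        (ex - gx) ** 2 + (ey - gy) ** 2
        for (ex, ey), (gx, gy) in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical trajectories: every estimate is offset by (3, 4) metres,
# so each per-pose error is 5 m.
ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
estimated = [(3.0, 4.0), (4.0, 4.0), (5.0, 4.0)]
rmse = trajectory_rmse(estimated, ground_truth)
print(rmse)
```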
