Yutong Liu

LSGQuant: Layer-Sensitivity Guided Quantization for One-Step Diffusion Real-World Video Super-Resolution

Feb 03, 2026

Abstract: One-step diffusion models have demonstrated promising capability and fast inference in real-world video super-resolution (VSR). Nevertheless, the substantial model size and high computational cost of Diffusion Transformers (DiTs) limit downstream applications. While low-bit quantization is a common approach to model compression, the effectiveness of quantized models is challenged by the high dynamic range of the input latent and diverse layer behaviors. To address these challenges, we introduce LSGQuant, a layer-sensitivity guided quantization approach for one-step diffusion-based real-world VSR. Our method incorporates a Dynamic Range Adaptive Quantizer (DRAQ) to fit video token activations. Furthermore, we estimate layer sensitivity and implement a Variance-Oriented Layer Training Strategy (VOLTS) by analyzing layer-wise statistics during calibration. We also introduce Quantization-Aware Optimization (QAO) to jointly refine the quantized branch and a retained high-precision branch. Extensive experiments demonstrate that our method achieves performance close to that of the original full-precision model and significantly exceeds existing quantization techniques. Code is available at: https://github.com/zhengchen1999/LSGQuant.

Context-Aware Dynamic Chunking for Streaming Tibetan Speech Recognition

Nov 12, 2025

Abstract: In this work, we propose a streaming speech recognition framework for Amdo Tibetan, built upon a hybrid CTC/Attention architecture with a context-aware dynamic chunking mechanism. The proposed strategy adaptively adjusts chunk widths based on encoding states, enabling flexible receptive fields, cross-chunk information exchange, and robust adaptation to varying speaking rates, thereby alleviating the context truncation problem of fixed-chunk methods. To further capture the linguistic characteristics of Tibetan, we construct a lexicon grounded in its orthographic principles, providing linguistically motivated modeling units. During decoding, an external language model is integrated to enhance semantic consistency and improve recognition of long sentences. Experimental results show that the proposed framework achieves a word error rate (WER) of 6.23% on the test set, yielding a 48.15% relative improvement over the fixed-chunk baseline, while significantly reducing recognition latency and maintaining performance close to global decoding.

Tibetan Language and AI: A Comprehensive Survey of Resources, Methods and Challenges

Oct 22, 2025

Abstract: Tibetan, one of the major low-resource languages in Asia, presents unique linguistic and sociocultural characteristics that pose both challenges and opportunities for AI research. Despite increasing interest in developing AI systems for underrepresented languages, Tibetan has received limited attention due to a lack of accessible data resources, standardized benchmarks, and dedicated tools. This paper provides a comprehensive survey of the current state of Tibetan AI, covering textual and speech data resources, NLP tasks, machine translation, speech recognition, and recent developments in LLMs. We systematically categorize existing datasets and tools, evaluate methods used across different tasks, and compare performance where possible. We also identify persistent bottlenecks such as data sparsity, orthographic variation, and the lack of unified evaluation metrics. Additionally, we discuss the potential of cross-lingual transfer, multi-modal learning, and community-driven resource creation. This survey aims to serve as a foundational reference for future work on Tibetan AI research and encourages collaborative efforts to build an inclusive and sustainable AI ecosystem for low-resource languages.

Listening, Imagining & Refining: A Heuristic Optimized ASR Correction Framework with LLMs

Sep 18, 2025

Abstract: Automatic Speech Recognition (ASR) systems remain prone to errors that affect downstream applications. In this paper, we propose LIR-ASR, a heuristic optimized iterative correction framework using LLMs, inspired by human auditory perception. LIR-ASR applies a "Listening-Imagining-Refining" strategy, generating phonetic variants and refining them in context. A heuristic optimization with a finite state machine (FSM) is introduced to prevent the correction process from being trapped in local optima, and rule-based constraints help maintain semantic fidelity. Experiments on both English and Chinese ASR outputs show that LIR-ASR achieves average reductions in CER/WER of up to 1.5 percentage points compared to baselines, demonstrating substantial accuracy gains in transcription.
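
For readers unfamiliar with the metrics quoted above, the following is a minimal sketch of how WER and CER are conventionally computed: edit distance divided by reference length. The function names and the whitespace tokenization are illustrative assumptions; the scoring setup actually used in the paper is not described in the abstract.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edits divided by reference word count."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate, ignoring spaces (assumed convention)."""
    ref_chars = list(reference.replace(" ", ""))
    hyp_chars = list(hypothesis.replace(" ", ""))
    return edit_distance(ref_chars, hyp_chars) / len(ref_chars)


# Hypothetical transcripts: one substitution and one deletion in six words.
example_wer = wer("the cat sat on the mat", "the cat sit on mat")
```

A reduction of 1.5 percentage points, as reported above, means the value returned by such a function drops by 0.015 in absolute terms.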

A Comprehensive Evaluation on Quantization Techniques for Large Language Models

Jul 23, 2025

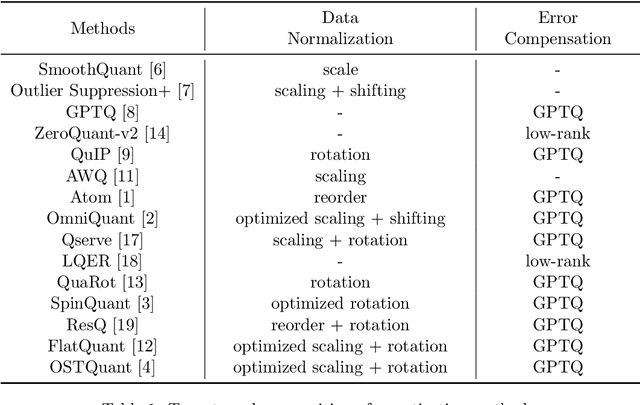

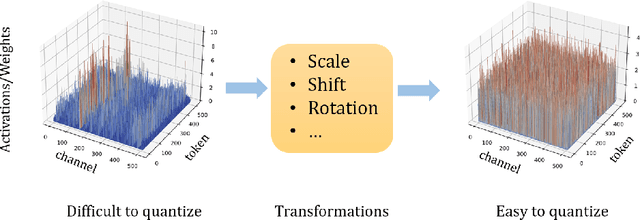

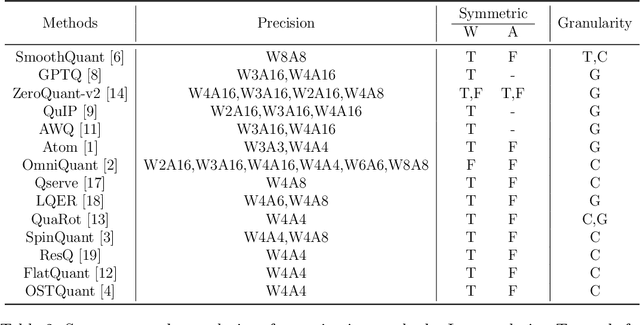

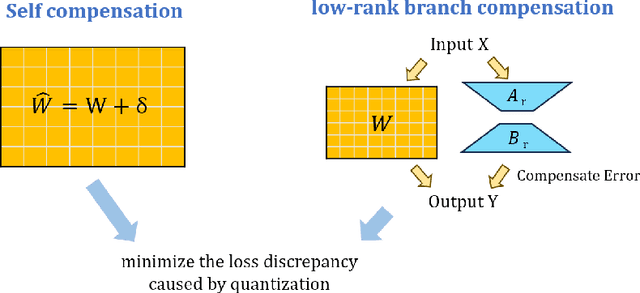

Abstract: For large language models (LLMs), post-training quantization (PTQ) can significantly reduce memory footprint and computational overhead. Model quantization is a rapidly evolving research field. Though many papers have reported breakthrough performance, they may not conduct experiments on the same ground, since one quantization method usually contains multiple components. In addition, analyzing the theoretical connections among existing methods is crucial for in-depth understanding. To bridge these gaps, we conduct an extensive review of state-of-the-art methods and perform comprehensive evaluations on the same ground to ensure fair comparisons. To our knowledge, this fair and extensive investigation remains critically important yet underexplored. To better understand the theoretical connections, we decouple the published quantization methods into two steps: pre-quantization transformation and quantization error mitigation. We define the former as a preprocessing step applied before quantization to reduce the impact of outliers, making the data distribution flatter and more suitable for quantization. Quantization error mitigation involves techniques that offset the errors introduced during quantization, thereby enhancing model performance. We evaluate and analyze the impact of different components of quantization methods. Additionally, we analyze and evaluate the latest MXFP4 data format and its performance. Our experimental results demonstrate that optimized rotation and scaling yield the best performance for pre-quantization transformation, and combining low-rank compensation with GPTQ occasionally outperforms using GPTQ alone for quantization error mitigation. Furthermore, we explore the potential of the latest MXFP4 quantization and reveal that the optimal pre-quantization transformation strategy for INT4 does not generalize well to MXFP4, inspiring further investigation.
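
To illustrate the idea of a pre-quantization transformation described above, here is a minimal pure-Python sketch. It assumes a fixed, normalized Hadamard rotation and symmetric per-tensor INT4 rounding, with made-up activation values; it is not the optimized rotation and scaling evaluated in the paper, and it omits error mitigation such as GPTQ or low-rank compensation.

```python
import math


def quantize_int4(values):
    """Symmetric per-tensor INT4 quantization followed by dequantization."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return list(values)
    scale = max_abs / 7.0  # the largest magnitude maps to integer level 7
    return [max(-8, min(7, round(v / scale))) * scale for v in values]


def hadamard_rotate(values):
    """Orthonormal fast Walsh-Hadamard transform (length must be a power of two)."""
    out = list(values)
    n = len(out)
    h = 1
    while h < n:
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = out[i], out[i + h]
                out[i], out[i + h] = a + b, a - b
        h *= 2
    norm = math.sqrt(n)
    return [v / norm for v in out]


def mse(xs, ys):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy activation vector with a single large outlier (hypothetical numbers).
x = [0.1, -0.2, 0.15, 8.0, -0.05, 0.3, -0.25, 0.2]

# Quantizing directly: the outlier sets the scale, so small values round to zero.
direct_error = mse(x, quantize_int4(x))

# Rotate, quantize, rotate back. The normalized Hadamard matrix is symmetric
# and orthogonal, so applying the same transform again inverts it.
rotated = hadamard_rotate(x)
restored = hadamard_rotate(quantize_int4(rotated))
rotated_error = mse(x, restored)
```

On this toy vector the rotation spreads the outlier's energy across all coordinates, so the quantization step shrinks and `rotated_error` comes out lower than `direct_error`.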

HonestFace: Towards Honest Face Restoration with One-Step Diffusion Model

May 24, 2025

Abstract: Face restoration has achieved remarkable advancements through years of development. However, ensuring that restored facial images exhibit high fidelity, preserve authentic features, and avoid introducing artifacts or biases remains a significant challenge. This highlights the need for models that are more "honest" in their reconstruction from low-quality inputs, accurately reflecting original characteristics. In this work, we propose HonestFace, a novel approach designed to restore faces with a strong emphasis on such honesty, particularly concerning identity consistency and texture realism. To achieve this, HonestFace incorporates several key components. First, we propose an identity embedder to effectively capture and preserve crucial identity features from both the low-quality input and multiple reference faces. Second, a masked face alignment method is presented to enhance fine-grained details and textural authenticity, thereby preventing the generation of patterned or overly synthetic textures and improving overall clarity. Furthermore, we present a new landmark-based evaluation metric. Based on affine transformation principles, this metric improves accuracy compared to conventional L2 distance calculations for facial feature alignment. Leveraging these contributions within a one-step diffusion model framework, HonestFace delivers exceptional restoration results in terms of facial fidelity and realism. Extensive experiments demonstrate that our approach surpasses existing state-of-the-art methods, achieving superior performance in both visual quality and quantitative assessments. The code and pre-trained models will be made publicly available at https://github.com/jkwang28/HonestFace.

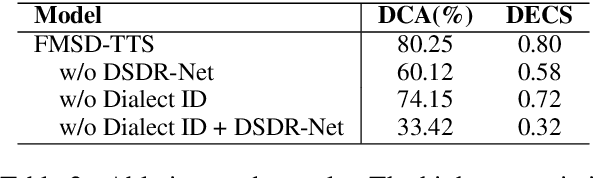

FMSD-TTS: Few-shot Multi-Speaker Multi-Dialect Text-to-Speech Synthesis for Ü-Tsang, Amdo and Kham Speech Dataset Generation

May 20, 2025

Abstract: Tibetan is a low-resource language with minimal parallel speech corpora spanning its three major dialects (Ü-Tsang, Amdo, and Kham), limiting progress in speech modeling. To address this issue, we propose FMSD-TTS, a few-shot, multi-speaker, multi-dialect text-to-speech framework that synthesizes parallel dialectal speech from limited reference audio and explicit dialect labels. Our method features a novel speaker-dialect fusion module and a Dialect-Specialized Dynamic Routing Network (DSDR-Net) to capture fine-grained acoustic and linguistic variations across dialects while preserving speaker identity. Extensive objective and subjective evaluations demonstrate that FMSD-TTS significantly outperforms baselines in both dialectal expressiveness and speaker similarity. We further validate the quality and utility of the synthesized speech through a challenging speech-to-speech dialect conversion task. Our contributions include: (1) a novel few-shot TTS system tailored for Tibetan multi-dialect speech synthesis, (2) the public release of a large-scale synthetic Tibetan speech corpus generated by FMSD-TTS, and (3) an open-source evaluation toolkit for standardized assessment of speaker similarity, dialect consistency, and audio quality.

TiSpell: A Semi-Masked Methodology for Tibetan Spelling Correction covering Multi-Level Error with Data Augmentation

May 14, 2025

Abstract: Multi-level Tibetan spelling correction addresses errors at both the character and syllable levels within a unified model. Existing methods focus mainly on single-level correction and lack effective integration of both levels. Moreover, there are no open-source datasets or augmentation methods tailored for this task in Tibetan. To tackle this, we propose a data augmentation approach using unlabeled text to generate multi-level corruptions, and introduce TiSpell, a semi-masked model capable of correcting both character- and syllable-level errors. Although syllable-level correction is more challenging due to its reliance on global context, our semi-masked strategy simplifies this process. We synthesize nine types of corruptions on clean sentences to create a robust training set. Experiments on both simulated and real-world data demonstrate that TiSpell, trained on our dataset, outperforms baseline models and matches the performance of state-of-the-art approaches, confirming its effectiveness.

Kernel Learning Assisted Synthesis Condition Exploration for Ternary Spinel

Mar 25, 2025

Abstract: Machine learning and high-throughput experimentation have greatly accelerated the discovery of mixed metal oxide catalysts by leveraging their compositional flexibility. However, the lack of established synthesis routes for solid-state materials remains a significant challenge in inorganic chemistry. An interpretable machine learning model is therefore essential, as it provides insights into the key factors governing phase formation. Here, we focus on the formation of single-phase Fe$_2$(ZnCo)O$_4$, synthesized via a high-throughput co-precipitation method. We combined a kernel classification model with a novel application of global SHAP analysis to pinpoint the experimental features most critical to single-phase synthesizability by interpreting the contributions of each feature. Global SHAP analysis reveals that precursor and precipitating agent contributions to single-phase spinel formation align closely with established crystal growth theories. These results not only underscore the importance of interpretable machine learning in refining synthesis protocols but also establish a framework for data-informed experimental design in inorganic synthesis.

Lumina-Video: Efficient and Flexible Video Generation with Multi-scale Next-DiT

Feb 10, 2025

Abstract: Recent advancements have established Diffusion Transformers (DiTs) as a dominant framework in generative modeling. Building on this success, Lumina-Next achieves exceptional performance in the generation of photorealistic images with Next-DiT. However, its potential for video generation remains largely untapped, with significant challenges in modeling the spatiotemporal complexity inherent to video data. To address this, we introduce Lumina-Video, a framework that leverages the strengths of Next-DiT while introducing tailored solutions for video synthesis. Lumina-Video incorporates a Multi-scale Next-DiT architecture, which jointly learns multiple patchifications to enhance both efficiency and flexibility. By incorporating the motion score as an explicit condition, Lumina-Video also enables direct control of generated videos' dynamic degree. Combined with a progressive training scheme with increasingly higher resolution and FPS, and a multi-source training scheme with mixed natural and synthetic data, Lumina-Video achieves remarkable aesthetic quality and motion smoothness at high training and inference efficiency. We additionally propose Lumina-V2A, a video-to-audio model based on Next-DiT, to create synchronized sounds for generated videos. Code is released at https://www.github.com/Alpha-VLLM/Lumina-Video.
