Yiru Wang

FlowDrive: Energy Flow Field for End-to-End Autonomous Driving

Sep 17, 2025

Abstract:Recent advances in end-to-end autonomous driving leverage multi-view images to construct BEV representations for motion planning. In motion planning, autonomous vehicles need to consider both hard constraints imposed by geometrically occupied obstacles (e.g., vehicles, pedestrians) and soft, rule-based semantics with no explicit geometry (e.g., lane boundaries, traffic priors). However, existing end-to-end frameworks typically rely on BEV features learned in an implicit manner, lacking explicit modeling of risk and guidance priors for safe and interpretable planning. To address this, we propose FlowDrive, a novel framework that introduces physically interpretable energy-based flow fields, including risk potential and lane attraction fields, to encode semantic priors and safety cues into the BEV space. These flow-aware features enable adaptive refinement of anchor trajectories and serve as interpretable guidance for trajectory generation. Moreover, FlowDrive decouples motion intent prediction from trajectory denoising via a conditional diffusion planner with feature-level gating, alleviating task interference and enhancing multimodal diversity. Experiments on the NAVSIM v2 benchmark demonstrate that FlowDrive achieves state-of-the-art performance with an EPDMS of 86.3, surpassing prior baselines in both safety and planning quality. The project is available at https://astrixdrive.github.io/FlowDrive.github.io/.
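As a rough illustration of how a risk potential and a lane attraction field might be combined into a single flow field on a BEV grid, the sketch below uses Gaussian repulsion around obstacle points and a quadratic pull toward a straight lane centerline. The functional forms, the grid size, and all names (`risk_potential`, `lane_attraction`, `flow_field`) are assumptions made for illustration; the abstract does not specify FlowDrive's actual formulation.

```python
import numpy as np

def risk_potential(xs, ys, obstacles, sigma=1.0):
    """Sum of Gaussian bumps centred on obstacle positions (assumed form)."""
    u = np.zeros_like(xs, dtype=float)
    for ox, oy in obstacles:
        u += np.exp(-((xs - ox) ** 2 + (ys - oy) ** 2) / (2.0 * sigma ** 2))
    return u

def lane_attraction(ys, lane_y=0.0, weight=0.1):
    """Quadratic well around a straight lane centreline y = lane_y (assumed form)."""
    return weight * (ys - lane_y) ** 2

def flow_field(energy, spacing):
    """Flow is the negative gradient of the energy, so it points toward lower energy."""
    d_dy, d_dx = np.gradient(energy, spacing, spacing)  # axis 0 is y, axis 1 is x
    return -d_dx, -d_dy

# Hypothetical 20 m x 20 m BEV grid at 0.5 m resolution.
spacing = 0.5
coords = np.arange(-10.0, 10.0 + spacing, spacing)
xs, ys = np.meshgrid(coords, coords)  # xs varies along axis 1, ys along axis 0

energy = risk_potential(xs, ys, obstacles=[(2.0, 0.0)]) + lane_attraction(ys)
fx, fy = flow_field(energy, spacing)
```

In this toy setup the flow pushes away from the obstacle at (2, 0) and pulls every cell back toward the lane at y = 0, which is the kind of interpretable guidance the abstract describes.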

ArbiViewGen: Controllable Arbitrary Viewpoint Camera Data Generation for Autonomous Driving via Stable Diffusion Models

Aug 07, 2025

Abstract:Arbitrary viewpoint image generation holds significant potential for autonomous driving, yet remains a challenging task due to the lack of ground-truth data for extrapolated views, which hampers the training of high-fidelity generative models. In this work, we propose Arbiviewgen, a novel diffusion-based framework for the generation of controllable camera images from arbitrary points of view. To address the absence of ground-truth data in unseen views, we introduce two key components: Feature-Aware Adaptive View Stitching (FAVS) and Cross-View Consistency Self-Supervised Learning (CVC-SSL). FAVS employs a hierarchical matching strategy that first establishes coarse geometric correspondences using camera poses, then performs fine-grained alignment through improved feature matching algorithms, and identifies high-confidence matching regions via clustering analysis. Building upon this, CVC-SSL adopts a self-supervised training paradigm where the model reconstructs the original camera views from the synthesized stitched images using a diffusion model, enforcing cross-view consistency without requiring supervision from extrapolated data. Our framework requires only multi-camera images and their associated poses for training, eliminating the need for additional sensors or depth maps. To our knowledge, Arbiviewgen is the first method capable of controllable arbitrary view camera image generation in multiple vehicle configurations.

DiffVLA: Vision-Language Guided Diffusion Planning for Autonomous Driving

May 26, 2025

Abstract:Research interest in end-to-end autonomous driving has surged owing to its fully differentiable design integrating modular tasks, i.e., perception, prediction, and planning, which enables optimization in pursuit of the ultimate goal. Despite the great potential of the end-to-end paradigm, existing methods suffer from several limitations, including expensive BEV (bird's eye view) computation, limited action diversity, and sub-optimal decisions in complex real-world scenarios. To address these challenges, we propose a novel hybrid sparse-dense diffusion policy, empowered by a Vision-Language Model (VLM), called Diff-VLA. We explore the sparse diffusion representation for efficient multi-modal driving behavior. Moreover, we rethink the effectiveness of VLM driving decisions and improve the trajectory generation guidance through deep interaction across agent, map instances, and VLM output. Our method shows superior performance in the Autonomous Grand Challenge 2025, which contains challenging real and reactive synthetic scenarios. Our method achieves 45.0 PDMS.

SparseMeXT: Unlocking the Potential of Sparse Representations for HD Map Construction

May 12, 2025

Abstract:Recent advancements in high-definition (HD) map construction have demonstrated the effectiveness of dense representations, which heavily rely on computationally intensive bird's-eye view (BEV) features. While sparse representations offer a more efficient alternative by avoiding dense BEV processing, existing methods often lag behind due to the lack of tailored designs. These limitations have hindered the competitiveness of sparse representations in online HD map construction. In this work, we systematically revisit and enhance sparse representation techniques, identifying key architectural and algorithmic improvements that bridge the gap with, and ultimately surpass, dense approaches. We introduce a dedicated network architecture optimized for sparse map feature extraction, a sparse-dense segmentation auxiliary task to better leverage geometric and semantic cues, and a denoising module guided by physical priors to refine predictions. Through these enhancements, our method achieves state-of-the-art performance on the nuScenes dataset, significantly advancing HD map construction and centerline detection. Specifically, SparseMeXt-Tiny reaches a mean average precision (mAP) of 55.5% at 32 frames per second (fps), while SparseMeXt-Base attains 65.2% mAP. Scaling the backbone and decoder further, SparseMeXt-Large achieves an mAP of 68.9% at over 20 fps, establishing a new benchmark for sparse representations in HD map construction. These results underscore the untapped potential of sparse methods, challenging the conventional reliance on dense representations and redefining efficiency-performance trade-offs in the field.

AdaMMS: Model Merging for Heterogeneous Multimodal Large Language Models with Unsupervised Coefficient Optimization

Mar 31, 2025

Abstract:Recently, model merging methods have demonstrated powerful strengths in combining abilities on various tasks from multiple Large Language Models (LLMs). While previous model merging methods mainly focus on merging homogeneous models with identical architecture, they meet challenges when dealing with Multimodal Large Language Models (MLLMs), which are inherently heterogeneous, with differences in model architecture and asymmetry in the parameter space. In this work, we propose AdaMMS, a novel model merging method tailored for heterogeneous MLLMs. Our method tackles the challenges in three steps: mapping, merging, and searching. Specifically, we first design a mapping function between models to apply model merging on MLLMs with different architectures. Then we apply linear interpolation on model weights to adapt to the asymmetry in the heterogeneous MLLMs. Finally, in the hyper-parameter searching step, we propose an unsupervised hyper-parameter selection method for model merging. AdaMMS is the first model merging method capable of merging heterogeneous MLLMs without labeled data, and extensive experiments on various model combinations demonstrate that it outperforms previous model merging methods on various vision-language benchmarks.
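The mapping and merging steps can be pictured with a minimal sketch: a name map pairs parameters across two architectures, and paired weights are linearly interpolated with a coefficient `alpha`. The function `merge_weights`, the parameter names, and the use of plain lists in place of tensors are all hypothetical; the unsupervised search for `alpha` is not shown because the abstract does not describe its criterion.

```python
def merge_weights(base, other, name_map, alpha):
    """Linearly interpolate mapped parameters; keep unmapped base parameters.

    base, other: dicts of parameter name -> list of floats (stand-ins for tensors).
    name_map: hypothetical mapping from base names to the matching names in other.
    alpha: interpolation coefficient in [0, 1]; 0 keeps base, 1 takes other.
    """
    merged = {}
    for name, w_base in base.items():
        other_name = name_map.get(name)
        if other_name is None or other_name not in other:
            merged[name] = list(w_base)  # no counterpart: copy unchanged
            continue
        w_other = other[other_name]
        merged[name] = [(1.0 - alpha) * a + alpha * b for a, b in zip(w_base, w_other)]
    return merged

# Toy models whose shared parameter has different names in each architecture.
base = {"attn.q": [1.0, 2.0], "vision.proj": [5.0]}
other = {"layers.0.q_proj": [3.0, 6.0]}
name_map = {"attn.q": "layers.0.q_proj"}
merged = merge_weights(base, other, name_map, alpha=0.5)
```

Parameters without a counterpart (here `vision.proj`) are left untouched, which is one simple way to handle architecture-specific components.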

Beyond Single Frames: Can LMMs Comprehend Temporal and Contextual Narratives in Image Sequences?

Feb 19, 2025

Abstract:Large Multimodal Models (LMMs) have achieved remarkable success across various visual-language tasks. However, existing benchmarks predominantly focus on single-image understanding, leaving the analysis of image sequences largely unexplored. To address this limitation, we introduce StripCipher, a comprehensive benchmark designed to evaluate the capabilities of LMMs to comprehend and reason over sequential images. StripCipher comprises a human-annotated dataset and three challenging subtasks: visual narrative comprehension, contextual frame prediction, and temporal narrative reordering. Our evaluation of 16 state-of-the-art LMMs, including GPT-4o and Qwen2.5VL, reveals a significant performance gap compared to human capabilities, particularly in tasks that require reordering shuffled sequential images. For instance, GPT-4o achieves only 23.93% accuracy in the reordering subtask, which is 56.07 percentage points lower than human performance. Further quantitative analysis discusses several factors, such as the input format of images, that affect the performance of LMMs in sequential understanding, underscoring the fundamental challenges that remain in the development of LMMs.

SG-FSM: A Self-Guiding Zero-Shot Prompting Paradigm for Multi-Hop Question Answering Based on Finite State Machine

Oct 22, 2024

Abstract:Large Language Models with chain-of-thought prompting, such as OpenAI-o1, have shown impressive capabilities in natural language inference tasks. However, Multi-hop Question Answering (MHQA) remains challenging for many existing models due to issues like hallucination, error propagation, and limited context length. To address these challenges and enhance LLMs' performance on MHQA, we propose the Self-Guiding prompting Finite State Machine (SG-FSM), designed to strengthen multi-hop reasoning abilities. Unlike traditional chain-of-thought methods, SG-FSM tackles MHQA by iteratively breaking down complex questions into sub-questions, correcting itself to improve accuracy. It processes one sub-question at a time, dynamically deciding the next step based on the current context and results, functioning much like an automaton. Experiments across various benchmarks demonstrate the effectiveness of our approach, outperforming strong baselines on challenging datasets such as Musique. SG-FSM reduces hallucination, enabling recovery of the correct final answer despite intermediate errors. It also improves adherence to specified output formats, simplifying evaluation significantly.
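The automaton-like control flow described above can be sketched as a small state machine that handles one sub-question at a time and retries a rejected answer before moving on. The states, the transition rules, and the three callables (`decompose`, `answer`, `check`) are assumptions made for illustration; they stand in for LLM prompts and are not the prompts or states used by SG-FSM.

```python
from enum import Enum, auto

class State(Enum):
    DECOMPOSE = auto()
    ANSWER = auto()
    CHECK = auto()
    DONE = auto()

def run_fsm(question, decompose, answer, check, max_steps=20):
    """Drive a question through a small state machine.

    decompose(question, facts) -> next sub-question, or None when nothing is left.
    answer(sub_question, facts) -> candidate answer string.
    check(sub_question, candidate) -> True if the candidate is accepted.
    All three callables are hypothetical stand-ins for LLM prompts.
    """
    state, facts, sub_q, candidate = State.DECOMPOSE, [], None, None
    for _ in range(max_steps):
        if state is State.DECOMPOSE:
            sub_q = decompose(question, facts)
            state = State.DONE if sub_q is None else State.ANSWER
        elif state is State.ANSWER:
            candidate = answer(sub_q, facts)
            state = State.CHECK
        elif state is State.CHECK:
            if check(sub_q, candidate):
                facts.append((sub_q, candidate))
                state = State.DECOMPOSE
            else:
                state = State.ANSWER  # self-correct: retry the same sub-question
        else:
            break
    return facts

# Toy stubs: a two-hop question answered from a lookup table.
plan = ["Who directed Inception?", "Where was that director born?"]
kb = {plan[0]: "Christopher Nolan", plan[1]: "London"}

def toy_decompose(question, facts):
    return plan[len(facts)] if len(facts) < len(plan) else None

def toy_answer(sub_q, facts):
    return kb[sub_q]

def toy_check(sub_q, candidate):
    return bool(candidate)

facts = run_fsm("Where was the director of Inception born?",
                toy_decompose, toy_answer, toy_check)
```

The `max_steps` bound is a design choice in this sketch: it keeps the loop from running forever if the checker keeps rejecting answers.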

FAST-GSC: Fast and Adaptive Semantic Transmission for Generative Semantic Communication

Jul 22, 2024

Abstract:The rapidly evolving field of generative artificial intelligence technology has introduced innovative approaches for developing semantic communication (SemCom) frameworks, leading to the emergence of a new paradigm, generative SemCom (GSC). However, the complex processes involved in semantic extraction and generative inference may result in considerable latency in resource-constrained scenarios. To tackle these issues, we introduce a new GSC framework that involves fast and adaptive semantic transmission (FAST-GSC). This framework incorporates one innovative communication mechanism and two enhancement strategies at the transmitter and receiver, respectively. Aiming to reduce task latency, our communication mechanism enables fast semantic transmission by parallelizing the processes of semantic extraction at the transmitter and inference at the receiver. Preliminary evaluations indicate that while this mechanism effectively reduces task latency, it could potentially compromise task performance. To address this issue, we propose two additional methods for enhancement. First, at the transmitter, we employ reinforcement learning to discern the intrinsic temporal dependencies among the semantic units and design their extraction and transmission sequence accordingly. Second, at the receiver, we design a semantic difference calculation module and propose a sequential conditional denoising approach to alleviate the stringent immediacy requirement for the reception of semantic features. Extensive experiments demonstrate that our proposed architecture achieves a performance score comparable to the conventional GSC architecture while realizing a 52% reduction in residual task latency that extends beyond the fixed inference duration.
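To see why overlapping extraction and inference can shorten task latency, consider a simplified two-stage pipeline model with constant per-unit times. The functions and the example timings below are illustrative assumptions; the model ignores transmission delay and is not the latency analysis used for FAST-GSC, so it does not reproduce the reported 52% figure.

```python
def sequential_latency(n_units, t_extract, t_infer):
    """Receiver starts inference only after all units have been extracted."""
    return n_units * t_extract + n_units * t_infer

def pipelined_latency(n_units, t_extract, t_infer):
    """Two-stage pipeline: inference on unit k overlaps extraction of unit k+1."""
    if n_units == 0:
        return 0.0
    # The first unit must be extracted, the slower stage paces the middle,
    # and the last unit still needs one inference step.
    return t_extract + (n_units - 1) * max(t_extract, t_infer) + t_infer

# Hypothetical timings: 4 semantic units, 1.0 s extraction, 2.0 s inference each.
seq = sequential_latency(4, 1.0, 2.0)
pipe = pipelined_latency(4, 1.0, 2.0)
```

With these made-up numbers the sequential schedule takes 12.0 s and the pipelined one 9.0 s; the gain depends entirely on how balanced the two stages are.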

FSM: A Finite State Machine Based Zero-Shot Prompting Paradigm for Multi-Hop Question Answering

Jul 03, 2024

Abstract:Large Language Models (LLMs) with chain-of-thought (COT) prompting have demonstrated impressive abilities on simple natural language inference tasks. However, they tend to perform poorly on Multi-hop Question Answering (MHQA) tasks due to several challenges, including hallucination, error propagation, and limited context length. We propose a prompting method, Finite State Machine (FSM), to enhance the reasoning capabilities of LLMs for complex tasks while also improving effectiveness and trustworthiness. Unlike COT methods, FSM addresses MHQA by iteratively decomposing a question into multi-turn sub-questions and self-correcting promptly, improving the accuracy of answers in each step. Specifically, FSM addresses one sub-question at a time and decides on the next step based on its current result and state, in an automaton-like format. Experiments on benchmarks show the effectiveness of our method. Although our method performs on par with the baseline on relatively simpler datasets, it excels on challenging datasets like Musique. Moreover, this approach mitigates hallucination: the correct final answer can be recovered despite errors in intermediate reasoning. Furthermore, our method improves LLMs' ability to follow specified output format requirements, significantly reducing the difficulty of answer interpretation and the need for reformatting.

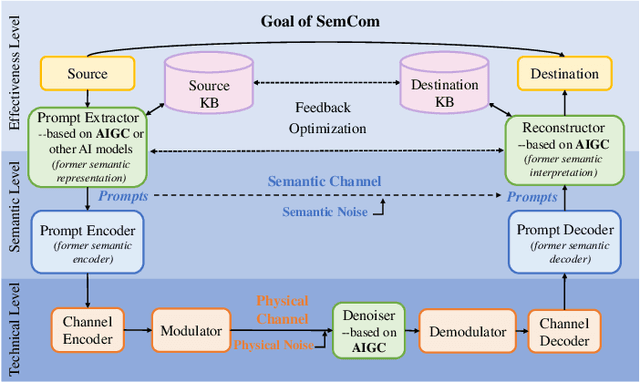

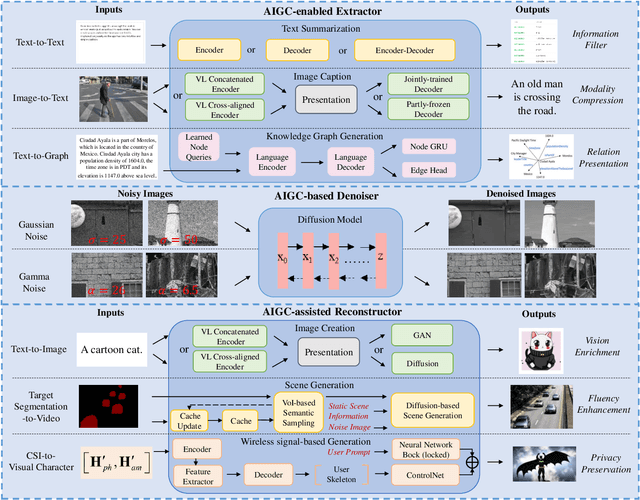

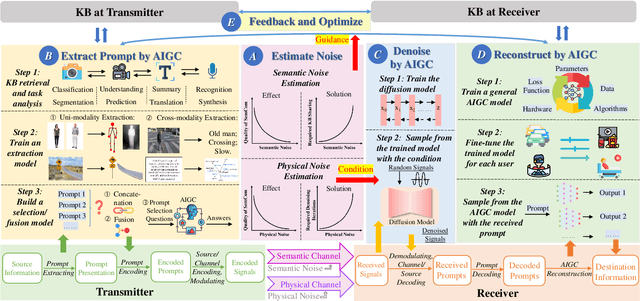

Harnessing the Power of AI-Generated Content for Semantic Communication

Apr 10, 2024

Abstract:Semantic Communication (SemCom) is envisaged as the next-generation paradigm to address challenges stemming from the conflicts between the increasing volume of transmission data and the scarcity of spectrum resources. However, existing SemCom systems face drawbacks, such as low explainability, modality rigidity, and inadequate reconstruction functionality. Recognizing the transformative capabilities of AI-generated content (AIGC) technologies in content generation, this paper explores a pioneering approach by integrating them into SemCom to address the aforementioned challenges. We employ a three-layer model to illustrate the proposed AIGC-assisted SemCom (AIGC-SCM) architecture, emphasizing its clear deviation from existing SemCom. Grounded in this model, we investigate various AIGC technologies with the potential to augment SemCom's performance. In alignment with SemCom's goal of conveying semantic meanings, we also introduce new evaluation methods for our AIGC-SCM system. Subsequently, we explore communication scenarios where our proposed AIGC-SCM can realize its potential. For practical implementation, we construct a detailed integration workflow and conduct a case study in a virtual reality image transmission scenario. The results demonstrate our ability to maintain a high degree of alignment between the reconstructed content and the original source information, while substantially minimizing the data volume required for transmission. These findings pave the way for further enhancements in communication efficiency and the improvement of Quality of Service. Finally, we present future directions for AIGC-SCM studies.
