Yichen Li

Human-Like Coarse Object Representations in Vision Models

Feb 12, 2026

Abstract:Humans appear to represent objects for intuitive physics with coarse, volumetric "bodies" that smooth concavities - trading fine visual details for efficient physical predictions - yet their internal structure is largely unknown. Segmentation models, in contrast, optimize pixel-accurate masks that may misalign with such bodies. We ask whether and when these models nonetheless acquire human-like bodies. Using a time-to-collision (TTC) behavioral paradigm, we introduce a comparison pipeline and alignment metric, then vary model training time, size, and effective capacity via pruning. Across all manipulations, alignment with human behavior follows an inverse U-shaped curve: small/briefly trained/pruned models under-segment into blobs; large/fully trained models over-segment with boundary wiggles; and an intermediate "ideal body granularity" best matches humans. This suggests human-like coarse bodies emerge from resource constraints rather than bespoke biases, and points to simple knobs - early checkpoints, modest architectures, light pruning - for eliciting physics-efficient representations. We situate these results within resource-rational accounts balancing recognition detail against physical affordances.

Nonlinearity as Rank: Generative Low-Rank Adapter with Radial Basis Functions

Feb 05, 2026

Abstract:Low-rank adaptation (LoRA) approximates the update of a pretrained weight matrix using the product of two low-rank matrices. However, standard LoRA follows an explicit-rank paradigm, where increasing model capacity requires adding more rows or columns (i.e., basis vectors) to the low-rank matrices, leading to substantial parameter growth. In this paper, we find that these basis vectors exhibit significant parameter redundancy and can be compactly represented by lightweight nonlinear functions. Therefore, we propose Generative Low-Rank Adapter (GenLoRA), which replaces explicit basis vector storage with nonlinear basis vector generation. Specifically, GenLoRA maintains a latent vector for each low-rank matrix and employs a set of lightweight radial basis functions (RBFs) to synthesize the basis vectors. Each RBF requires far fewer parameters than an explicit basis vector, enabling higher parameter efficiency in GenLoRA. Extensive experiments across multiple datasets and architectures show that GenLoRA attains higher effective LoRA ranks under smaller parameter budgets, resulting in superior fine-tuning performance. The code is available at https://anonymous.4open.science/r/GenLoRA-1519.
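
The explicit-rank paradigm mentioned in the abstract above can be illustrated with a minimal sketch of a standard LoRA update, where the weight change is the product of two low-rank matrices and the parameter count grows linearly with the rank. The function names and dimensions are hypothetical and chosen only for illustration. The sketch shows plain LoRA, not GenLoRA's RBF-based generator, whose details are not given in the abstract.

```python
def lora_param_count(d_out, d_in, rank):
    """Trainable parameters of an explicit-rank LoRA pair.

    B has shape (d_out, rank) and A has shape (rank, d_in),
    so each extra unit of rank adds d_out + d_in parameters.
    """
    return rank * (d_out + d_in)


def lora_delta(B, A):
    """Compute the low-rank update delta_W = B @ A with plain lists."""
    rank = len(A)
    d_in = len(A[0])
    return [
        [sum(row[k] * A[k][j] for k in range(rank)) for j in range(d_in)]
        for row in B
    ]


# Hypothetical toy example: a rank-1 update of a 2x2 weight matrix.
B = [[1.0], [2.0]]   # shape (2, 1)
A = [[3.0, 4.0]]     # shape (1, 2)
delta_W = lora_delta(B, A)

# Doubling the rank doubles the number of trainable parameters.
params_r8 = lora_param_count(4096, 4096, 8)
params_r16 = lora_param_count(4096, 4096, 16)
```

Under these assumptions, the linear growth in `lora_param_count` is the cost that a generator-based approach such as the one described would aim to avoid.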

Incentivizing Tool-augmented Thinking with Images for Medical Image Analysis

Dec 16, 2025

Abstract:Recent reasoning-based medical MLLMs have made progress in generating step-by-step textual reasoning chains. However, they still struggle with complex tasks that necessitate dynamic and iterative focusing on fine-grained visual regions to achieve precise grounding and diagnosis. We introduce Ophiuchus, a versatile, tool-augmented framework that equips an MLLM to (i) decide when additional visual evidence is needed, (ii) determine where to probe and ground within the medical image, and (iii) seamlessly weave the relevant sub-image content back into an interleaved, multimodal chain of thought. In contrast to prior approaches limited by the performance ceiling of specialized tools, Ophiuchus integrates the model's inherent grounding and perception capabilities with external tools, thereby fostering higher-level reasoning. The core of our method is a three-stage training strategy: cold-start training with tool-integrated reasoning data to achieve basic tool selection and adaptation for inspecting key regions; self-reflection fine-tuning to strengthen reflective reasoning and encourage revisiting tool outputs; and Agentic Tool Reinforcement Learning to directly optimize task-specific rewards and emulate expert-like diagnostic behavior. Extensive experiments show that Ophiuchus consistently outperforms both closed-source and open-source SOTA methods across diverse medical benchmarks, including VQA, detection, and reasoning-based segmentation. Our approach illuminates a path toward medical AI agents that can genuinely "think with images" through tool-integrated reasoning. Datasets, code, and trained models will be released publicly.

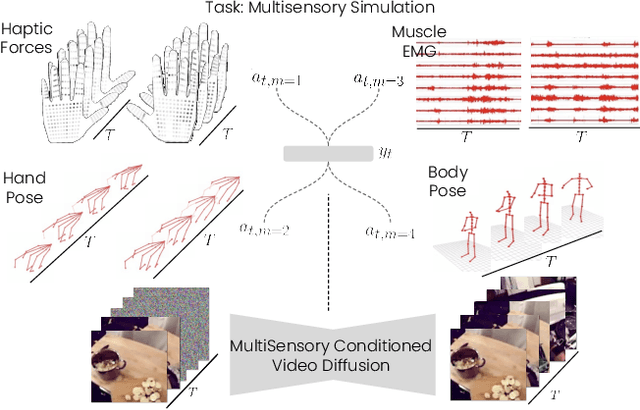

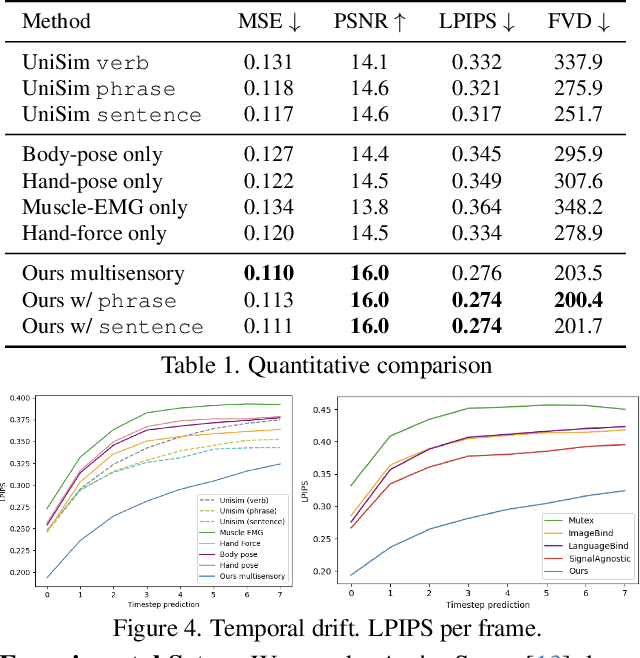

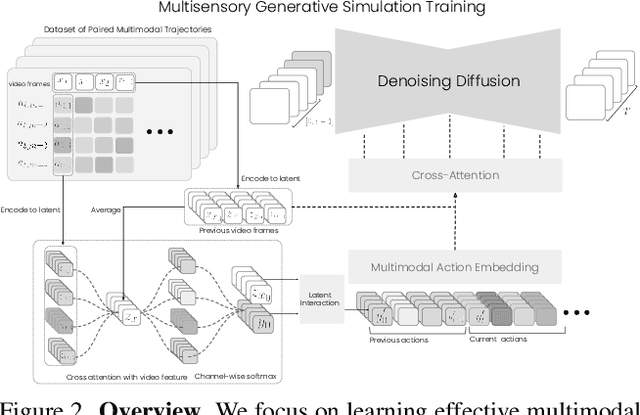

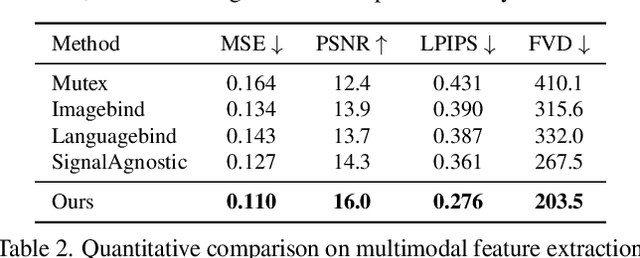

MultiModal Action Conditioned Video Generation

Oct 02, 2025

Abstract:Current video models fail as world models because they lack fine-grained control. General-purpose household robots require real-time fine motor control to handle delicate tasks and urgent situations. In this work, we introduce fine-grained multimodal actions to capture such precise control. We consider the senses of proprioception, kinesthesia, force haptics, and muscle activation. Such multimodal senses naturally enable fine-grained interactions that are difficult to simulate with text-conditioned generative models. To effectively simulate fine-grained multisensory actions, we develop a feature learning paradigm that aligns these modalities while preserving the unique information each modality provides. We further propose a regularization scheme to enhance causality of the action trajectory features in representing intricate interaction dynamics. Experiments show that incorporating multimodal senses improves simulation accuracy and reduces temporal drift. Extensive ablation studies and downstream applications demonstrate the effectiveness and practicality of our work.

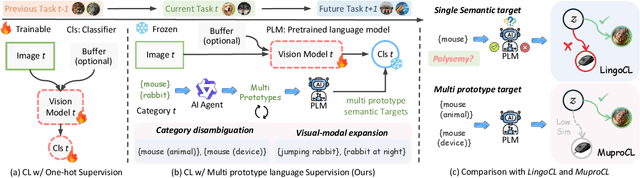

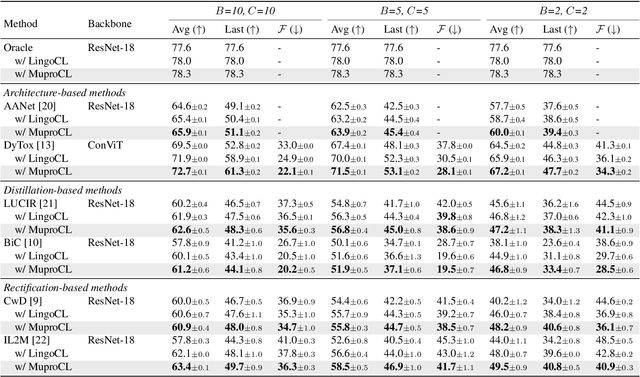

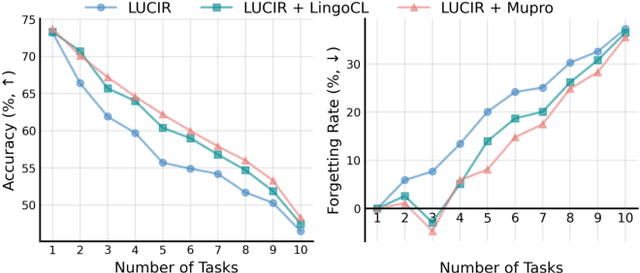

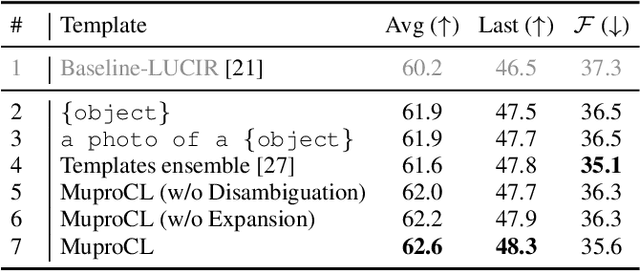

Towards Robust Visual Continual Learning with Multi-Prototype Supervision

Sep 19, 2025

Abstract:Language-guided supervision, which utilizes a frozen semantic target from a Pretrained Language Model (PLM), has emerged as a promising paradigm for visual Continual Learning (CL). However, relying on a single target introduces two critical limitations: 1) semantic ambiguity, where a polysemous category name results in conflicting visual representations, and 2) intra-class visual diversity, where a single prototype fails to capture the rich variety of visual appearances within a class. To this end, we propose MuproCL, a novel framework that replaces the single target with multiple, context-aware prototypes. Specifically, we employ a lightweight LLM agent to perform category disambiguation and visual-modal expansion to generate a robust set of semantic prototypes. A LogSumExp aggregation mechanism allows the vision model to adaptively align with the most relevant prototype for a given image. Extensive experiments across various CL baselines demonstrate that MuproCL consistently enhances performance and robustness, establishing a more effective path for language-guided continual learning.
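
As a rough illustration of the LogSumExp aggregation described above, the sketch below combines the similarities between one image embedding and several class prototypes into a single soft-maximum score. The temperature parameter, the function names, and the use of precomputed similarity values are assumptions made for illustration; the abstract does not specify the exact formulation used in MuproCL.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def aggregate_similarity(similarities, temperature=0.05):
    """Soft maximum over prototype similarities (hypothetical form).

    As temperature shrinks, the score approaches max(similarities),
    so the best-matching prototype dominates the aggregate.
    """
    scaled = [s / temperature for s in similarities]
    return temperature * logsumexp(scaled)


# Hypothetical similarities between one image and three prototypes
# of the same class (for example, three senses of a polysemous name).
sims = [0.2, 0.9, 0.1]
score = aggregate_similarity(sims, temperature=0.05)
```

The aggregate always lies between the largest similarity and that value plus `temperature * log(number of prototypes)`, which is why it behaves as a smooth stand-in for picking the most relevant prototype.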

Exploring Autonomous Agents: A Closer Look at Why They Fail When Completing Tasks

Aug 18, 2025

Abstract:Autonomous agent systems powered by Large Language Models (LLMs) have demonstrated promising capabilities in automating complex tasks. However, current evaluations largely rely on success rates without systematically analyzing the interactions, communication mechanisms, and failure causes within these systems. To bridge this gap, we present a benchmark of 34 representative programmable tasks designed to rigorously assess autonomous agents. Using this benchmark, we evaluate three popular open-source agent frameworks combined with two LLM backbones, observing a task completion rate of approximately 50%. Through in-depth failure analysis, we develop a three-tier taxonomy of failure causes aligned with task phases, highlighting planning errors, task execution issues, and incorrect response generation. Based on these insights, we propose actionable improvements to enhance agent planning and self-diagnosis capabilities. Our failure taxonomy, together with mitigation advice, provides an empirical foundation for developing more robust and effective autonomous agent systems in the future.

Next Edit Prediction: Learning to Predict Code Edits from Context and Interaction History

Aug 13, 2025

Abstract:The rapid advancement of large language models (LLMs) has led to the widespread adoption of AI-powered coding assistants integrated into development environments. On one hand, low-latency code completion offers completion suggestions but is fundamentally constrained to the cursor's current position. On the other hand, chat-based editing can perform complex modifications, yet forces developers to stop their work and describe their intent in natural language, which causes a context switch away from the code. This creates a suboptimal user experience, as neither paradigm proactively predicts the developer's next edit in a sequence of related edits. To bridge this gap and provide seamless code edit suggestions, we introduce Next Edit Prediction, a novel task designed to infer developer intent from recent interaction history to predict both the location and content of the subsequent edit. Specifically, we curate a high-quality supervised fine-tuning dataset and an evaluation benchmark for the Next Edit Prediction task. Then, we conduct supervised fine-tuning on a series of models and perform a comprehensive evaluation of both the fine-tuned models and other baseline models, yielding several novel findings. This work lays the foundation for a new interaction paradigm that proactively collaborates with developers by anticipating their following action, rather than merely reacting to explicit instructions.

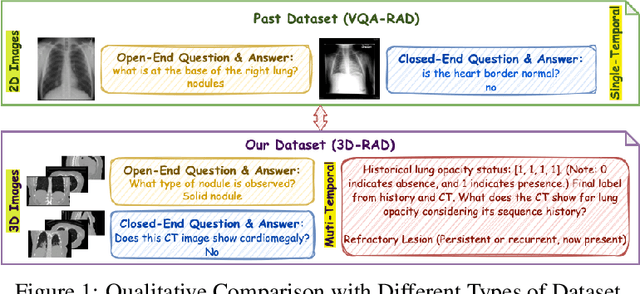

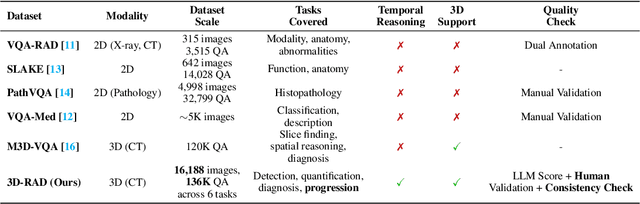

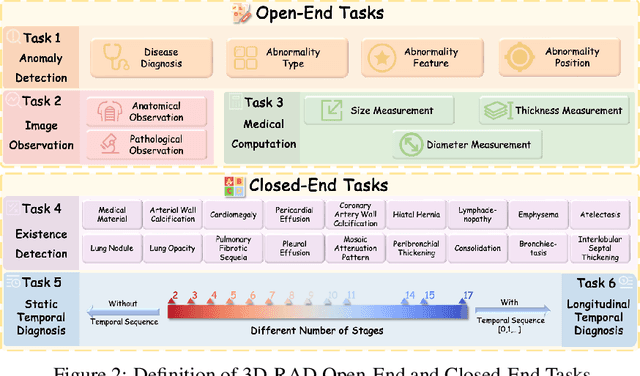

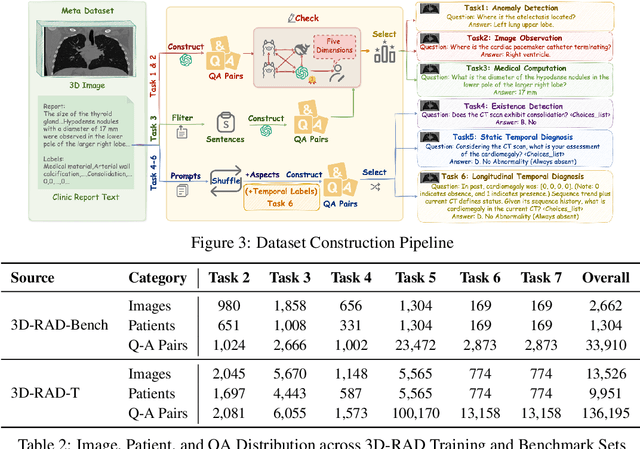

3D-RAD: A Comprehensive 3D Radiology Med-VQA Dataset with Multi-Temporal Analysis and Diverse Diagnostic Tasks

Jun 11, 2025

Abstract:Medical Visual Question Answering (Med-VQA) holds significant potential for clinical decision support, yet existing efforts primarily focus on 2D imaging with limited task diversity. This paper presents 3D-RAD, a large-scale dataset designed to advance 3D Med-VQA using radiology CT scans. The 3D-RAD dataset encompasses six diverse VQA tasks: anomaly detection, image observation, medical computation, existence detection, static temporal diagnosis, and longitudinal temporal diagnosis. It supports both open- and closed-ended questions while introducing complex reasoning challenges, including computational tasks and multi-stage temporal analysis, to enable comprehensive benchmarking. Extensive evaluations demonstrate that existing vision-language models (VLMs), especially medical VLMs, exhibit limited generalization, particularly in multi-temporal tasks, underscoring the challenges of real-world 3D diagnostic reasoning. To drive future advancements, we release a high-quality training set 3D-RAD-T of 136,195 expert-aligned samples, showing that fine-tuning on this dataset could significantly enhance model performance. Our dataset and code, aiming to catalyze multimodal medical AI research and establish a robust foundation for 3D medical visual understanding, are publicly available at https://github.com/Tang-xiaoxiao/M3D-RAD.

MAFE R-CNN: Selecting More Samples to Learn Category-aware Features for Small Object Detection

May 22, 2025

Abstract:Small object detection in intricate environments has consistently represented a major challenge in the field of object detection. In this paper, we identify that this difficulty stems from the detectors' inability to effectively learn discriminative features for objects of small size, compounded by the complexity of selecting high-quality small object samples during training, which motivates the proposal of the Multi-Clue Assignment and Feature Enhancement R-CNN. Specifically, MAFE R-CNN integrates two pivotal components. The first is the Multi-Clue Sample Selection (MCSS) strategy, in which the Intersection over Union (IoU) distance, predicted category confidence, and ground truth region sizes are leveraged as informative clues in the sample selection process. This methodology facilitates the selection of diverse positive samples and ensures a balanced distribution of object sizes during training, thereby promoting effective model learning. The second is the Category-aware Feature Enhancement Mechanism (CFEM), where we propose a simple yet effective category-aware memory module to explore the relationships among object features. Subsequently, we enhance the object feature representation by facilitating the interaction between category-aware features and candidate box features. Comprehensive experiments conducted on the large-scale small object dataset SODA validate the effectiveness of the proposed method. The code will be made publicly available.

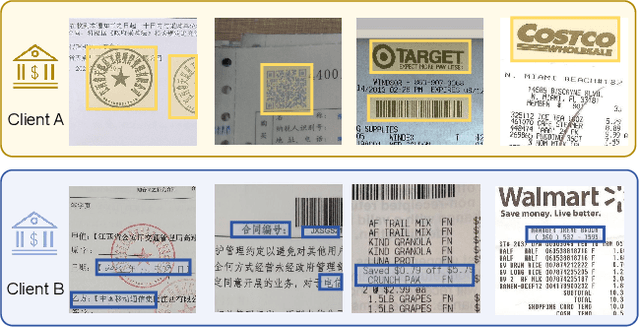

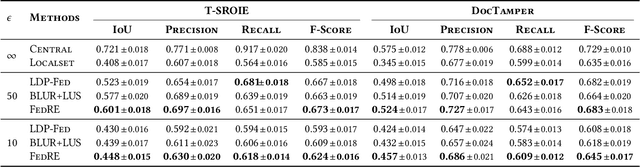

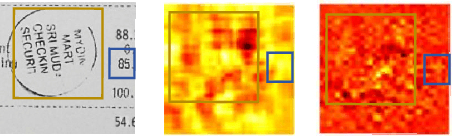

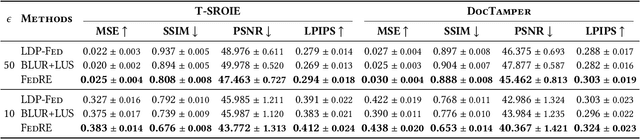

FedRE: Robust and Effective Federated Learning with Privacy Preference

May 08, 2025

Abstract:Although Federated Learning (FL) employs gradient aggregation at the server for distributed training to prevent the privacy leakage of raw data, private information can still be divulged through the analysis of uploaded gradients from clients. Substantial efforts have been made to integrate local differential privacy (LDP) into the system to achieve a strict privacy guarantee. However, existing methods fail to take practical issues into account by merely perturbing each sample with the same mechanism, while each client may have their own privacy preferences on privacy-sensitive information (PSI), which is not uniformly distributed across the raw data. In such a case, excessive privacy protection of privacy-insensitive information can introduce unnecessary noise, which may degrade the model performance. In this work, we study the PSI within data and develop FedRE, which can simultaneously achieve robustness and effectiveness benefits with LDP protection. More specifically, we first define PSI with regard to the privacy preferences of each client. Then, we optimize the LDP by allocating less privacy budget to gradients with higher PSI in a layer-wise manner, thus providing a stricter privacy guarantee for PSI. Furthermore, to mitigate the performance degradation caused by LDP, we design a parameter aggregation mechanism based on the distribution of the perturbed information. We conducted experiments with text tamper detection on T-SROIE and DocTamper datasets, and FedRE achieves competitive performance compared to state-of-the-art methods.
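
The layer-wise idea above, where layers carrying more PSI receive a smaller privacy budget and therefore more noise, can be sketched as follows. Everything here is a hypothetical illustration: the inverse-proportional allocation rule, the per-layer PSI scores, and the choice of the Laplace mechanism are assumptions, since the abstract does not state how FedRE defines or allocates the budget.

```python
import random


def allocate_budgets(psi_scores, total_epsilon, smoothing=1e-6):
    """Split a total privacy budget across layers (assumed rule).

    Each layer's share is inversely proportional to its PSI score,
    so layers with more privacy-sensitive information get less budget.
    """
    weights = [1.0 / (s + smoothing) for s in psi_scores]
    norm = sum(weights)
    return [total_epsilon * w / norm for w in weights]


def laplace_scale(sensitivity, epsilon):
    """Noise scale of the standard Laplace mechanism: b = sensitivity / epsilon."""
    return sensitivity / epsilon


def perturb(gradient, scale, rng):
    """Add Laplace(0, scale) noise to each gradient entry.

    The difference of two independent exponentials with mean `scale`
    is Laplace-distributed with that scale.
    """
    rate = 1.0 / scale
    return [g + rng.expovariate(rate) - rng.expovariate(rate) for g in gradient]


# Hypothetical per-layer PSI scores in (0, 1]; higher means more sensitive.
psi = [0.1, 0.5, 0.9]
budgets = allocate_budgets(psi, total_epsilon=3.0)
scales = [laplace_scale(1.0, eps) for eps in budgets]

rng = random.Random(0)
noisy_layer = perturb([0.2, -0.4, 0.1], scales[2], rng)
```

Under this assumed rule the budgets sum to the total and decrease as the PSI score rises, so the most sensitive layer is perturbed with the largest noise scale.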
