Zijie Meng

EComStage: Stage-wise and Orientation-specific Benchmarking for Large Language Models in E-commerce

Jan 06, 2026

Abstract: Large Language Model (LLM)-based agents are increasingly deployed in e-commerce applications to assist customer service in tasks such as product inquiries, recommendations, and order management. Existing benchmarks primarily evaluate whether these agents successfully complete the final task, overlooking the intermediate reasoning stages that are crucial for effective decision-making. To address this gap, we propose EComStage, a unified benchmark for evaluating agent-capable LLMs across the full stage-wise reasoning process: Perception (understanding user intent), Planning (formulating an action plan), and Action (executing the decision). EComStage evaluates LLMs through seven representative tasks spanning diverse e-commerce scenarios, with all samples human-annotated and quality-checked. Unlike prior benchmarks that focus only on customer-oriented interactions, EComStage also evaluates merchant-oriented scenarios, including promotion management, content review, and operational support relevant to real-world applications. We evaluate more than 30 LLMs, ranging from 1B to over 200B parameters and including both open-source models and closed-source APIs, revealing stage- and orientation-specific strengths and weaknesses. Our results provide fine-grained, actionable insights for designing and optimizing LLM-based agents in real-world e-commerce settings.

RedOne 2.0: Rethinking Domain-specific LLM Post-Training in Social Networking Services

Nov 10, 2025

Abstract: As a key medium for human interaction and information exchange, social networking services (SNS) pose unique challenges for large language models (LLMs): heterogeneous workloads, fast-shifting norms and slang, and multilingual, culturally diverse corpora that induce sharp distribution shift. Supervised fine-tuning (SFT) can specialize models but often triggers a "seesaw" between in-distribution gains and out-of-distribution robustness, especially for smaller models. To address these challenges, we introduce RedOne 2.0, an SNS-oriented LLM trained with a progressive, RL-prioritized post-training paradigm designed for rapid and stable adaptation. The pipeline consists of three stages: (1) Exploratory Learning on curated SNS corpora to establish initial alignment and identify systematic weaknesses; (2) Targeted Fine-Tuning that selectively applies SFT to the diagnosed gaps while mixing in a small fraction of general data to mitigate forgetting; and (3) Refinement Learning that re-applies RL with SNS-centric signals to consolidate improvements and harmonize trade-offs across tasks. Across various tasks spanning three categories, our 4B-scale model delivers an average improvement of about 2.41 over the 7B sub-optimal baseline. Additionally, RedOne 2.0 achieves an average performance lift of about 8.74 over the base model with less than half the data required by the SFT-centric method RedOne, evidencing superior data efficiency and stability at compact scales. Overall, RedOne 2.0 establishes a competitive, cost-effective baseline for domain-specific LLMs in SNS scenarios, advancing capability without sacrificing robustness.

SynPo: Boosting Training-Free Few-Shot Medical Segmentation via High-Quality Negative Prompts

Jun 18, 2025

Abstract: The advent of Large Vision Models (LVMs) offers new opportunities for few-shot medical image segmentation. However, existing training-free methods based on LVMs fail to effectively utilize negative prompts, leading to poor performance on low-contrast medical images. To address this issue, we propose SynPo, a training-free few-shot method based on LVMs (e.g., SAM), built on one core insight: improving the quality of negative prompts. To select point prompts from a more reliable confidence map, we design a novel Confidence Map Synergy Module that combines the strengths of DINOv2 and SAM. Based on the confidence map, we select the top-k pixels as the positive point set and choose the negative point set using a Gaussian distribution, followed by independent K-means clustering for both sets. These selected points are then used as high-quality prompts for SAM to obtain the segmentation results. Extensive experiments demonstrate that SynPo achieves performance comparable to state-of-the-art training-based few-shot methods.
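The point-selection step described in the SynPo abstract can be illustrated with a minimal sketch. It covers only the positive branch (top-k pixels from a confidence map, then K-means to condense them into a few prompt points). The abstract does not specify how the Gaussian-based negative selection works, so that part is left out rather than guessed. The function names `top_k_points` and `kmeans`, the toy confidence map, and all parameter values are hypothetical and are not taken from the paper or its code.

```python
import math
import random


def top_k_points(conf_map, k):
    """Return the (row, col) coordinates of the k highest-confidence pixels."""
    scored = [
        (value, (r, c))
        for r, row in enumerate(conf_map)
        for c, value in enumerate(row)
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [point for _, point in scored[:k]]


def kmeans(points, n_clusters, n_iter=20, seed=0):
    """Plain Lloyd's K-means on 2D points; returns the cluster centers."""
    rng = random.Random(seed)
    centers = [tuple(map(float, p)) for p in rng.sample(points, n_clusters)]
    for _ in range(n_iter):
        # Assign every point to its nearest center.
        groups = [[] for _ in centers]
        for p in points:
            nearest = min(range(n_clusters), key=lambda i: math.dist(p, centers[i]))
            groups[nearest].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
    return centers


# Toy 8x8 confidence map with two small high-confidence regions (illustrative only).
conf_map = [[0.0] * 8 for _ in range(8)]
conf_map[1][1], conf_map[1][2] = 0.90, 0.80
conf_map[6][6], conf_map[6][5] = 0.95, 0.85

positive_points = top_k_points(conf_map, k=4)
prompt_centers = sorted(kmeans(positive_points, n_clusters=2))
print(prompt_centers)
```

In a real pipeline the resulting centers would be handed to the segmentation model as point prompts; how SynPo does that hand-off is not described in the abstract.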

3D-RAD: A Comprehensive 3D Radiology Med-VQA Dataset with Multi-Temporal Analysis and Diverse Diagnostic Tasks

Jun 11, 2025

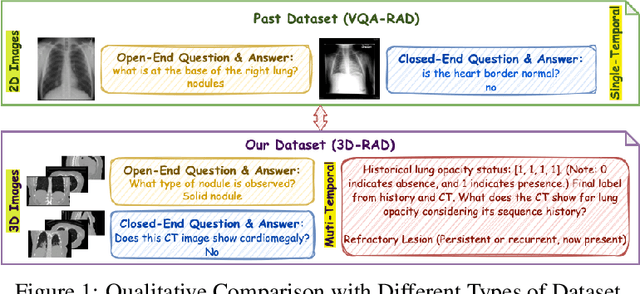

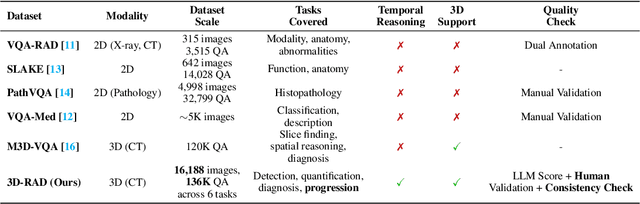

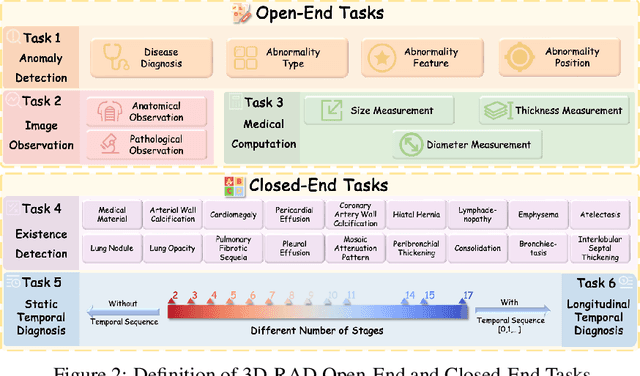

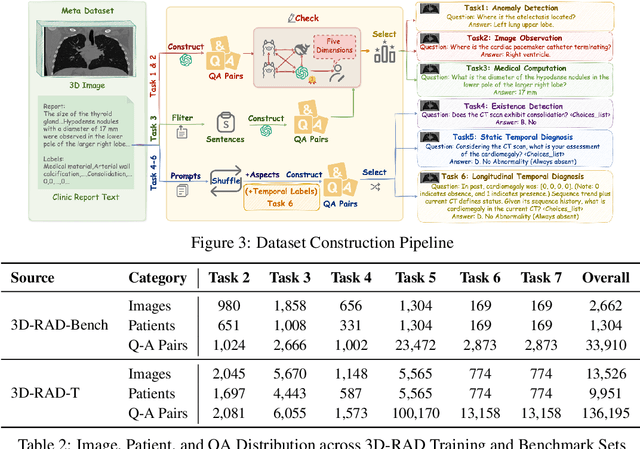

Abstract: Medical Visual Question Answering (Med-VQA) holds significant potential for clinical decision support, yet existing efforts primarily focus on 2D imaging with limited task diversity. This paper presents 3D-RAD, a large-scale dataset designed to advance 3D Med-VQA using radiology CT scans. The 3D-RAD dataset encompasses six diverse VQA tasks: anomaly detection, image observation, medical computation, existence detection, static temporal diagnosis, and longitudinal temporal diagnosis. It supports both open- and closed-ended questions while introducing complex reasoning challenges, including computational tasks and multi-stage temporal analysis, to enable comprehensive benchmarking. Extensive evaluations demonstrate that existing vision-language models (VLMs), especially medical VLMs, exhibit limited generalization, particularly in multi-temporal tasks, underscoring the challenges of real-world 3D diagnostic reasoning. To drive future advancements, we release a high-quality training set, 3D-RAD-T, of 136,195 expert-aligned samples, and show that fine-tuning on this dataset can significantly enhance model performance. Our dataset and code, which aim to catalyze multimodal medical AI research and establish a robust foundation for 3D medical visual understanding, are publicly available at https://github.com/Tang-xiaoxiao/M3D-RAD.

OmniV-Med: Scaling Medical Vision-Language Model for Universal Visual Understanding

Apr 20, 2025

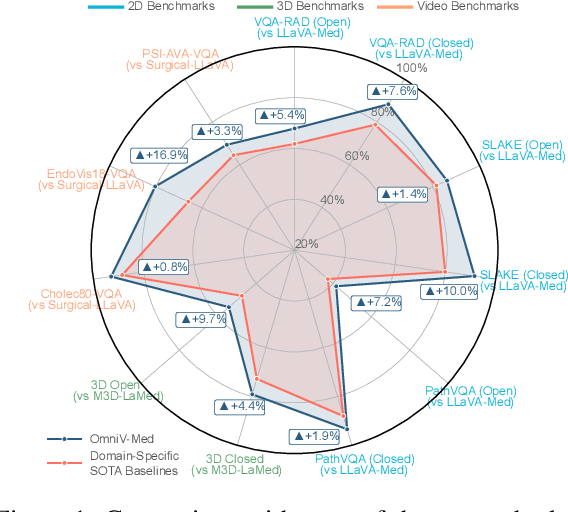

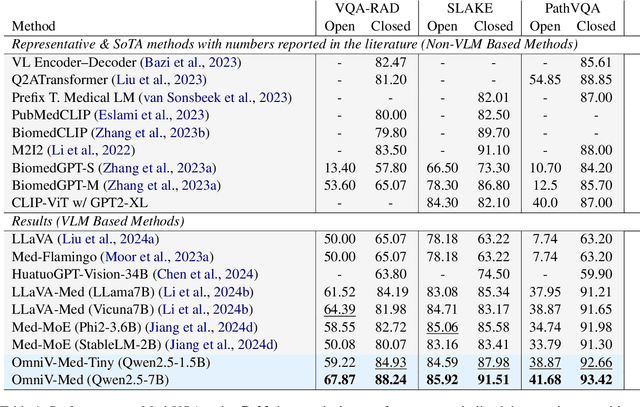

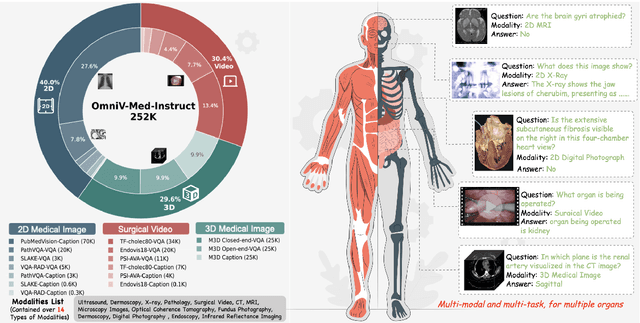

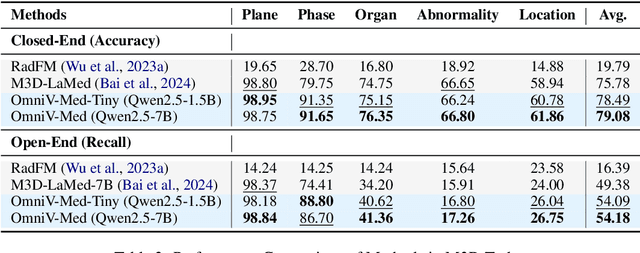

Abstract: The practical deployment of medical vision-language models (Med-VLMs) necessitates seamless integration of textual data with diverse visual modalities, including 2D/3D images and videos, yet existing models typically employ separate encoders for different modalities. To address this limitation, we present OmniV-Med, a unified framework for multimodal medical understanding. Our technical contributions are threefold. First, we construct OmniV-Med-Instruct, a comprehensive multimodal medical dataset containing 252K instructional samples spanning 14 medical image modalities and 11 clinical tasks. Second, we devise a rotary position-adaptive encoder that processes multi-resolution 2D/3D images and videos within a unified architecture, diverging from conventional modality-specific encoders. Third, we introduce a medical-aware token pruning mechanism that exploits spatial-temporal redundancy in volumetric data (e.g., consecutive CT slices) and medical videos, effectively reducing 60% of visual tokens without performance degradation. Empirical evaluations demonstrate that OmniV-Med-7B achieves state-of-the-art performance on 7 benchmarks spanning 2D/3D medical imaging and video understanding tasks. Notably, our lightweight variant (OmniV-Med-1.5B) attains comparable performance while requiring only 8 RTX3090 GPUs for training and supporting efficient long-video inference. Data, code and model will be released.

EC-Guide: A Comprehensive E-Commerce Guide for Instruction Tuning and Quantization

Aug 06, 2024

Abstract: Large language models (LLMs) have attracted considerable attention in various fields for their cost-effective solutions to diverse challenges, especially with advancements in instruction tuning and quantization. E-commerce, with its complex tasks and extensive product-user interactions, presents a promising application area for LLMs. However, the domain-specific concepts and knowledge inherent in e-commerce pose significant challenges for adapting general LLMs. To address this issue, we developed EC-Guide (https://github.com/fzp0424/EC-Guide-KDDUP-2024), a comprehensive e-commerce guide for instruction tuning and quantization of LLMs. We also heuristically integrated Chain-of-Thought (CoT) during inference to enhance arithmetic performance. Our approach achieved 2nd place in Track 2 and 5th place in Track 5 at the Amazon KDD Cup'24 (https://www.aicrowd.com/challenges/amazon-kdd-cup-2024-multi-task-online-shopping-challenge-for-llms). Additionally, our solution is model-agnostic, enabling effective scalability across larger systems.

Ladder: A Model-Agnostic Framework Boosting LLM-based Machine Translation to the Next Level

Jun 22, 2024

Abstract: General-purpose Large Language Models (LLMs) like GPT-4 have achieved remarkable advancements in machine translation (MT) by leveraging extensive web content. Translation-specific LLMs, on the other hand, are built by pre-training on domain-specific monolingual corpora and fine-tuning with human-annotated translation data. Despite their superior performance, these methods demand either an unprecedented scale of computing and data or substantial human editing and annotation effort. In this paper, we develop Ladder, a novel model-agnostic and cost-effective tool to refine the performance of general LLMs for MT. Ladder is trained on pseudo-refinement triplets, which can be easily obtained from existing LLMs without additional human cost. During training, we propose a hierarchical fine-tuning strategy with an easy-to-hard schema, improving Ladder's refining performance progressively. The trained Ladder can be seamlessly integrated with any general-purpose LLM to boost its translation performance. Using Gemma-2B/7B as the backbone, Ladder-2B can elevate raw translations to the level of top-tier open-source models (e.g., refining BigTranslate-13B with +6.91 BLEU and +3.52 COMET for XX-En), and Ladder-7B can further enhance model performance to be on par with the state-of-the-art GPT-4. Extensive ablation and analysis corroborate the effectiveness of Ladder in diverse settings. Our code is available at https://github.com/fzp0424/Ladder

Divide and Conquer for Large Language Models Reasoning

Jan 10, 2024

Abstract: Large language models (LLMs) have shown impressive performance in various reasoning benchmarks with the emergence of Chain-of-Thought (CoT) and its derivative methods, particularly in tasks involving multi-choice questions (MCQs). However, current works all process data uniformly without considering problem-solving difficulty, which means excessive focus on simple questions and insufficient attention to intricate ones. To address this challenge, inspired by how humans use heuristic strategies to categorize tasks and handle them individually, we propose applying Divide and Conquer to LLM reasoning. First, we divide questions into different subsets based on the statistical confidence score ($\mathcal{CS}$), then fix the nearly resolved subsets and conquer the demanding ones that require nuanced processing with elaborately designed methods, including Prior Knowledge based Reasoning (PKR) and Filter Choices based Reasoning (FCR), as well as their integration variants. Our experiments demonstrate that the proposed strategy significantly boosts the models' reasoning abilities across nine datasets involving arithmetic, commonsense, and logic tasks. For instance, compared to the baseline, we achieve improvements on low-confidence subsets of 8.72% for AQuA, 15.07% for ARC Challenge, and 7.71% for RiddleSense. In addition, through extensive analysis of rationale length and number of options, we verify that longer reasoning paths in PKR can prevent models from relying on shortcuts that harm inference, and find that removing irrelevant choices in FCR substantially reduces models' confusion. The code is at https://github.com/AiMijie/Divide-and-Conquer
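The "divide" step in the Divide and Conquer abstract can be sketched as follows. The abstract does not define how the confidence score $\mathcal{CS}$ is computed, so this sketch assumes it is the share of sampled answers that agree with the majority answer (a self-consistency-style vote). That definition, the function names `confidence_score` and `divide_by_confidence`, and the threshold of 0.8 are illustrative assumptions, not the authors' implementation.

```python
from collections import Counter


def confidence_score(sampled_answers):
    """Return (majority answer, fraction of samples that agree with it).

    Assumption: confidence is the majority-vote share; the paper's exact
    definition of CS is not given in the abstract.
    """
    counts = Counter(sampled_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(sampled_answers)


def divide_by_confidence(samples_per_question, threshold=0.8):
    """Split questions into high- and low-confidence subsets."""
    high, low = {}, {}
    for qid, answers in samples_per_question.items():
        answer, score = confidence_score(answers)
        target = high if score >= threshold else low
        target[qid] = (answer, score)
    return high, low


# Hypothetical sampled answers for two multi-choice questions.
samples = {
    "q1": ["B", "B", "B", "B", "B"],  # consistent answers: nearly resolved
    "q2": ["A", "C", "A", "D", "B"],  # scattered answers: needs more work
}

high, low = divide_by_confidence(samples, threshold=0.8)
print("keep as-is:", high)
print("re-process:", low)
```

Under this reading, questions in `high` keep their majority answer, while questions in `low` would be passed to the heavier methods named in the abstract (PKR, FCR), which are not modeled here.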
