Xintong Li

SceneAlign: Aligning Multimodal Reasoning to Scene Graphs in Complex Visual Scenes

Jan 09, 2026

Abstract:Multimodal large language models often struggle with faithful reasoning in complex visual scenes, where intricate entities and relations require precise visual grounding at each step. This reasoning unfaithfulness frequently manifests as hallucinated entities, mis-grounded relations, skipped steps, and over-specified reasoning. Existing preference-based approaches, typically relying on textual perturbations or answer-conditioned rationales, fail to address this challenge as they allow models to exploit language priors to bypass visual grounding. To address this, we propose SceneAlign, a framework that leverages scene graphs as structured visual information to perform controllable structural interventions. By identifying reasoning-critical nodes and perturbing them through four targeted strategies that mimic typical grounding failures, SceneAlign constructs hard negative rationales that remain linguistically plausible but are grounded in inaccurate visual facts. These contrastive pairs are used in Direct Preference Optimization to steer models toward fine-grained, structure-faithful reasoning. Across seven visual reasoning benchmarks, SceneAlign consistently improves answer accuracy and reasoning faithfulness, highlighting the effectiveness of grounding-aware alignment for multimodal reasoning.

EverMemOS: A Self-Organizing Memory Operating System for Structured Long-Horizon Reasoning

Jan 05, 2026

Abstract:Large Language Models (LLMs) are increasingly deployed as long-term interactive agents, yet their limited context windows make it difficult to sustain coherent behavior over extended interactions. Existing memory systems often store isolated records and retrieve fragments, limiting their ability to consolidate evolving user states and resolve conflicts. We introduce EverMemOS, a self-organizing memory operating system that implements an engram-inspired lifecycle for computational memory. Episodic Trace Formation converts dialogue streams into MemCells that capture episodic traces, atomic facts, and time-bounded Foresight signals. Semantic Consolidation organizes MemCells into thematic MemScenes, distilling stable semantic structures and updating user profiles. Reconstructive Recollection performs MemScene-guided agentic retrieval to compose the necessary and sufficient context for downstream reasoning. Experiments on LoCoMo and LongMemEval show that EverMemOS achieves state-of-the-art performance on memory-augmented reasoning tasks. We further report a profile study on PersonaMem v2 and qualitative case studies illustrating chat-oriented capabilities such as user profiling and Foresight. Code is available at https://github.com/EverMind-AI/EverMemOS.

ASRL: A robust loss function with potential for development

Apr 09, 2025

Abstract:In this article, we propose a partition-wise robust loss function that builds on a previous robust loss function. It achieves high robustness and a wide range of applicability through its partition-wise design and adaptive parameter adjustment. We verify the advantages and development potential of this loss function by applying it to regression problems and comparing it with other loss functions on five datasets that differ in dimensionality, sample size, and field. The results of multiple experiments support the advantages of our loss function.

A Survey on Personalized and Pluralistic Preference Alignment in Large Language Models

Apr 09, 2025

Abstract:Personalized preference alignment for large language models (LLMs), the process of tailoring LLMs to individual users' preferences, is an emerging research direction spanning the areas of NLP and personalization. In this survey, we present an analysis of works on personalized alignment and modeling for LLMs. We introduce a taxonomy of preference alignment techniques, including training-time, inference-time, and user-modeling-based methods. We analyze and discuss the strengths and limitations of each group of techniques and then cover evaluation, benchmarks, and open problems in the field.

Toward Multi-Session Personalized Conversation: A Large-Scale Dataset and Hierarchical Tree Framework for Implicit Reasoning

Mar 10, 2025

Abstract:There has been a surge in the use of large language model (LLM) conversational agents to generate responses based on long-term history from multiple sessions. However, existing long-term open-domain dialogue datasets lack complex, real-world personalization and fail to capture implicit reasoning, where relevant information is embedded in subtle, syntactic, or semantically distant connections rather than explicit statements. In such cases, traditional retrieval methods fail to capture relevant context, and long-context modeling also becomes inefficient due to numerous complicated persona-related details. To address this gap, we introduce ImplexConv, a large-scale long-term dataset with 2,500 examples, each containing approximately 100 conversation sessions, designed to study implicit reasoning in personalized dialogues. Additionally, we propose TaciTree, a novel hierarchical tree framework that structures conversation history into multiple levels of summarization. Instead of brute-force searching all data, TaciTree enables an efficient, level-based retrieval process where models refine their search by progressively selecting relevant details. Our experiments demonstrate that TaciTree significantly improves the ability of LLMs to reason over long-term conversations with implicit contextual dependencies.

Active Learning for Direct Preference Optimization

Mar 03, 2025

Abstract:Direct preference optimization (DPO) is a form of reinforcement learning from human feedback (RLHF) where the policy is learned directly from preferential feedback. Although many models of human preferences exist, the critical task of selecting the most informative feedback for training them is under-explored. We propose an active learning framework for DPO, which can be applied to collect human feedback online or to choose the most informative subset of already collected feedback offline. We propose efficient algorithms for both settings. The key idea is to linearize the DPO objective at the last layer of the neural network representation of the optimized policy and then compute the D-optimal design to collect preferential feedback. We prove that the errors in our DPO logit estimates diminish with more feedback. We show the effectiveness of our algorithms empirically in the setting that matches our theory and also on large language models.
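
For readers unfamiliar with the objective this abstract builds on, the following is a minimal sketch of the standard per-pair DPO loss, not the active-learning algorithm proposed in the paper. The function name `dpo_loss`, its argument names, and the default `beta=0.1` are illustrative assumptions; the inputs are assumed to be summed log-probabilities of the chosen and rejected responses under the policy and a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one preference pair (illustrative sketch).

    All arguments are log-probabilities of a full response; `beta` is an
    assumed temperature, not a value taken from the paper.
    """
    # Implicit reward margin: how much more the policy favors the chosen
    # response over the rejected one, relative to the reference model.
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # -log(sigmoid(margin)), written in a numerically stable form.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# The loss is lower when the policy prefers the chosen response.
good = dpo_loss(-1.0, -3.0, -2.0, -2.0)
bad = dpo_loss(-3.0, -1.0, -2.0, -2.0)
print(good < bad)
```

Because the loss depends on the policy only through the margin, selecting which pairs to label amounts to choosing the pairs whose margins are most informative, which is the question the abstract addresses.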

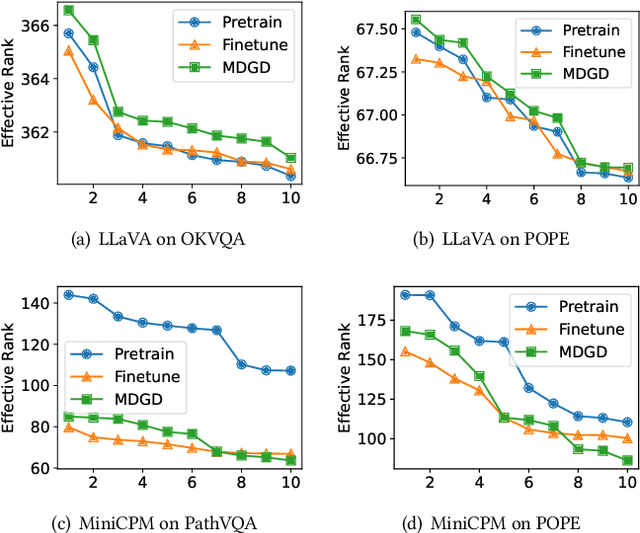

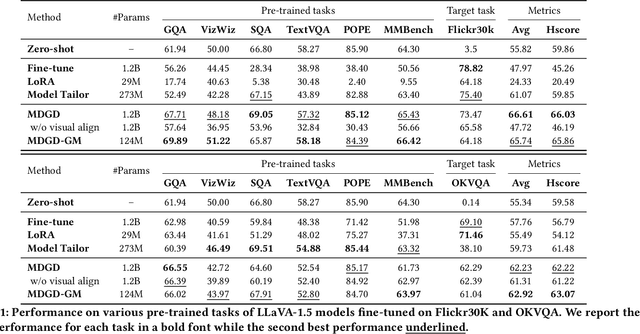

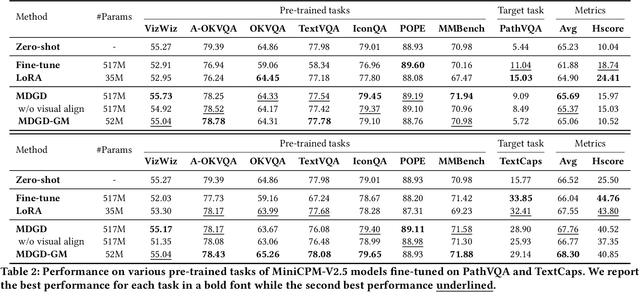

Mitigating Visual Knowledge Forgetting in MLLM Instruction-tuning via Modality-decoupled Gradient Descent

Feb 17, 2025

Abstract:Recent MLLMs have shown emerging visual understanding and reasoning abilities after being pre-trained on large-scale multimodal datasets. Unlike pre-training, where MLLMs receive rich visual-text alignment, instruction-tuning is often text-driven with weaker visual supervision, leading to the degradation of pre-trained visual understanding and causing visual forgetting. Existing approaches, such as direct fine-tuning and continual learning methods, fail to explicitly address this issue, often compressing visual representations and prioritizing task alignment over visual retention, which further worsens visual forgetting. To overcome this limitation, we introduce a novel perspective leveraging effective rank to quantify the degradation of visual representation richness, interpreting this degradation through the information bottleneck principle as excessive compression that leads to the degradation of crucial pre-trained visual knowledge. Building on this view, we propose a modality-decoupled gradient descent (MDGD) method that regulates gradient updates to maintain the effective rank of visual representations while mitigating the over-compression effects described by the information bottleneck. By explicitly disentangling the optimization of visual understanding from task-specific alignment, MDGD preserves pre-trained visual knowledge while enabling efficient task adaptation. To enable lightweight instruction-tuning, we further develop a memory-efficient fine-tuning approach using gradient masking, which selectively updates a subset of model parameters to enable parameter-efficient fine-tuning (PEFT), reducing computational overhead while preserving rich visual representations. Extensive experiments across various downstream tasks and backbone MLLMs demonstrate that MDGD effectively mitigates visual forgetting from pre-trained tasks while enabling strong adaptation to new tasks.
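
The abstract does not say how effective rank is computed. As a rough illustration, the sketch below uses one common definition, the exponential of the Shannon entropy of the normalized singular values; the paper may use a different variant. The function name `effective_rank`, the `eps` threshold, and the assumption that `features` is a 2-D array of token representations are all hypothetical, and NumPy is assumed to be installed.

```python
import numpy as np


def effective_rank(features, eps=1e-12):
    """Effective rank as exp(entropy of normalized singular values).

    `features` is assumed to be a 2-D array (e.g. tokens x hidden size).
    """
    singular_values = np.linalg.svd(features, compute_uv=False)
    # Normalize singular values into a probability distribution.
    p = singular_values / (singular_values.sum() + eps)
    # Drop numerically zero entries so that log(p) is well defined.
    p = p[p > eps]
    entropy = -(p * np.log(p)).sum()
    return float(np.exp(entropy))


# A rank-1 matrix is maximally "compressed"; the identity is not.
collapsed = np.outer(np.ones(4), np.ones(3))
spread = np.eye(4)
print(effective_rank(collapsed) < effective_rank(spread))
```

Under this definition, a drop in effective rank after instruction-tuning would indicate that visual representations occupy fewer directions, which matches the compression the abstract describes.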

From Selection to Generation: A Survey of LLM-based Active Learning

Feb 17, 2025

Abstract:Active Learning (AL) has been a powerful paradigm for improving model efficiency and performance by selecting the most informative data points for labeling and training. In recent active learning frameworks, Large Language Models (LLMs) have been employed not only for selection but also for generating entirely new data instances and providing more cost-effective annotations. Motivated by the increasing importance of high-quality data and efficient model training in the era of LLMs, we present a comprehensive survey on LLM-based Active Learning. We introduce an intuitive taxonomy that categorizes these techniques and discuss the transformative roles LLMs can play in the active learning loop. We further examine the impact of AL on LLM learning paradigms and its applications across various domains. Finally, we identify open challenges and propose future research directions. This survey aims to serve as an up-to-date resource for researchers and practitioners seeking to gain an intuitive understanding of LLM-based AL techniques and deploy them to new applications.

FAP-CD: Fairness-Driven Age-Friendly Community Planning via Conditional Diffusion Generation

Dec 21, 2024

Abstract:As global populations age rapidly, incorporating age-specific considerations into urban planning has become essential to addressing the urgent demand for age-friendly built environments and ensuring sustainable urban development. However, current practices often overlook these considerations, resulting in inadequate and unevenly distributed elderly services in cities. There is a pressing need for equitable and optimized urban renewal strategies to support effective age-friendly planning. To address this challenge, we propose a novel framework, Fairness-driven Age-friendly community Planning via Conditional Diffusion generation (FAP-CD). FAP-CD leverages a conditioned graph denoising diffusion probabilistic model to learn the joint probability distribution of aging facilities and their spatial relationships at a fine-grained regional level. Our framework generates optimized facility distributions by iteratively refining noisy graphs, conditioned on the needs of the elderly during the diffusion process. Key innovations include a demand-fairness pre-training module that integrates community demand features and facility characteristics using an attention mechanism and min-max optimization, ensuring equitable service distribution across regions. Additionally, a discrete graph structure captures walkable accessibility within regional road networks, guiding model sampling. To enhance information integration, we design a graph denoising network with an attribute augmentation module and a hybrid graph message aggregation module, combining local and global node and edge information. Empirical results across multiple metrics demonstrate the effectiveness of FAP-CD in balancing age-friendly needs with regional equity, achieving an average improvement of 41% over competitive baseline models.

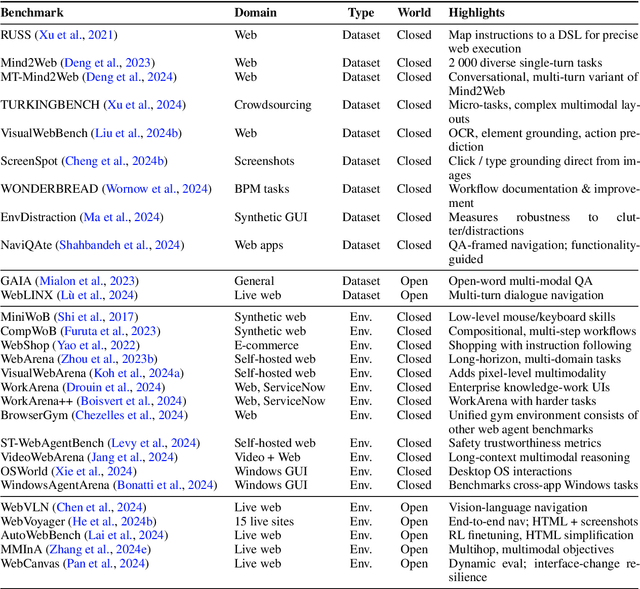

GUI Agents: A Survey

Dec 18, 2024

Abstract:Graphical User Interface (GUI) agents, powered by Large Foundation Models, have emerged as a transformative approach to automating human-computer interaction. These agents autonomously interact with digital systems or software applications via GUIs, emulating human actions such as clicking, typing, and navigating visual elements across diverse platforms. Motivated by the growing interest and fundamental importance of GUI agents, we provide a comprehensive survey that categorizes their benchmarks, evaluation metrics, architectures, and training methods. We propose a unified framework that delineates their perception, reasoning, planning, and acting capabilities. Furthermore, we identify important open challenges and discuss key future directions. Finally, this work serves as a basis for practitioners and researchers to gain an intuitive understanding of current progress, techniques, benchmarks, and critical open problems that remain to be addressed.
