Xin Peng

Flow Perturbation++: Multi-Step Unbiased Jacobian Estimation for High-Dimensional Boltzmann Sampling

Jan 29, 2026

Abstract:The scalability of continuous normalizing flows (CNFs) for unbiased Boltzmann sampling remains limited in high-dimensional systems due to the cost of Jacobian-determinant evaluation, which requires $D$ backpropagation passes through the flow layers. Existing stochastic Jacobian estimators such as the Hutchinson trace estimator reduce computation but introduce bias, while the recently proposed Flow Perturbation method is unbiased yet suffers from high variance. We present Flow Perturbation++, a variance-reduced extension of Flow Perturbation that discretizes the probability-flow ODE and performs unbiased stepwise Jacobian estimation at each integration step. This multi-step construction retains the unbiasedness of Flow Perturbation while achieving substantially lower estimator variance. Integrated into a Sequential Monte Carlo framework, Flow Perturbation++ achieves significantly improved equilibrium sampling on a 1000D Gaussian Mixture Model and the all-atom Chignolin protein compared with Hutchinson-based and single-step Flow Perturbation baselines.
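For readers unfamiliar with the Hutchinson trace estimator named in the abstract, the following is a minimal sketch of the generic technique only. It is not the Flow Perturbation++ method, and how the estimate enters the sampling weights is outside its scope. The function name `hutchinson_trace`, the test matrix `A`, and the probe count are illustrative assumptions; in a CNF setting, `matvec` would be a Jacobian-vector product rather than an explicit matrix multiply.

```python
import numpy as np


def hutchinson_trace(matvec, dim, num_probes, rng):
    """Estimate tr(A) using only products A @ v (hypothetical helper).

    Each Rademacher probe v (entries +1 or -1) gives v^T A v, whose
    expectation over v equals tr(A). Averaging probes reduces variance.
    """
    total = 0.0
    for _ in range(num_probes):
        v = rng.choice([-1.0, 1.0], size=dim)  # random sign vector
        total += v @ matvec(v)
    return total / num_probes


# Illustrative 5x5 matrix: diagonal 1..5 with small off-diagonal entries.
rng = np.random.default_rng(0)
A = np.diag([1.0, 2.0, 3.0, 4.0, 5.0]) + 0.1 * (np.ones((5, 5)) - np.eye(5))

estimate = hutchinson_trace(lambda v: A @ v, dim=5, num_probes=20000, rng=rng)
print("estimate:", estimate, "exact trace:", np.trace(A))
```

The estimator needs one matrix-vector product per probe instead of $D$ passes to build the full Jacobian, which is the computational saving the abstract refers to; the price is the sampling noise visible in the printed estimate.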

Rethinking Refinement: Correcting Generative Bias without Noise Injection

Jan 29, 2026

Abstract:Generative models, including diffusion and flow-based models, often exhibit systematic biases that degrade sample quality, particularly in high-dimensional settings. We revisit refinement methods and show that effective bias correction can be achieved as a post-hoc procedure, without noise injection or multi-step resampling of the sampling process. We propose a flow-matching-based Bi-stage Flow Refinement (BFR) framework with two refinement strategies operating at different stages: latent space alignment for approximately invertible generators and data space refinement trained with lightweight augmentations. Unlike previous refiners that perturb sampling dynamics, BFR preserves the original ODE trajectory and applies deterministic corrections to generated samples. Experiments on MNIST, CIFAR-10, and FFHQ at 256x256 resolution demonstrate consistent improvements in fidelity and coverage; notably, starting from base samples with FID 3.95, latent space refinement achieves a state-of-the-art FID of 1.46 on MNIST using only a single additional function evaluation (1-NFE), while maintaining sample diversity.

Reducing False Positives in Static Bug Detection with LLMs: An Empirical Study in Industry

Jan 26, 2026

Abstract:Static analysis tools (SATs) are widely adopted in both academia and industry for improving software quality, yet their practical use is often hindered by high false positive rates, especially in large-scale enterprise systems. These false alarms demand substantial manual inspection, creating severe inefficiencies in industrial code review. While recent work has demonstrated the potential of large language models (LLMs) for false alarm reduction on open-source benchmarks, their effectiveness in real-world enterprise settings remains unclear. To bridge this gap, we conduct the first comprehensive empirical study of diverse LLM-based false alarm reduction techniques in an industrial context at Tencent, one of the largest IT companies in China. Using data from Tencent's enterprise-customized SAT on its large-scale Advertising and Marketing Services software, we construct a dataset of 433 alarms (328 false positives, 105 true positives) covering three common bug types. Through interviewing developers and analyzing the data, our results highlight the prevalence of false positives, which wastes substantial manual effort (e.g., 10-20 minutes of manual inspection per alarm). Meanwhile, our results show the huge potential of LLMs for reducing false alarms in industrial settings (e.g., hybrid techniques of LLM and static analysis eliminate 94-98% of false positives with high recall). Furthermore, LLM-based techniques are cost-effective, with per-alarm costs as low as 2.1-109.5 seconds and $0.0011-$0.12, representing orders-of-magnitude savings compared to manual review. Finally, our case analysis further identifies key limitations of LLM-based false alarm reduction in industrial settings.
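As a back-of-envelope reading of the figures quoted in this abstract, the short calculation below derives the false-positive share of the dataset, the number of false positives that would remain after 94-98% elimination, and the manual inspection time implied by 10-20 minutes per alarm. Only the input numbers come from the abstract; the derived values and variable names are illustrative and are not results reported by the authors.

```python
# Input figures as stated in the abstract.
total_alarms = 433
false_positives = 328
true_positives = 105
assert false_positives + true_positives == total_alarms

# Share of alarms that are false positives.
fp_share = false_positives / total_alarms

# False positives left if 94% or 98% of them are eliminated.
remaining_fp = [false_positives * (1 - rate) for rate in (0.94, 0.98)]

# Manual inspection time for the whole dataset at 10 and 20 minutes per alarm.
manual_hours = [total_alarms * minutes / 60 for minutes in (10, 20)]

print(f"false-positive share: {fp_share:.1%}")
print(f"remaining false positives: {remaining_fp[0]:.1f} down to {remaining_fp[1]:.1f}")
print(f"manual inspection: {manual_hours[0]:.1f} to {manual_hours[1]:.1f} hours")
```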

CI4A: Semantic Component Interfaces for Agents Empowering Web Automation

Jan 21, 2026

Abstract:While Large Language Models demonstrate remarkable proficiency in high-level semantic planning, they remain limited in handling fine-grained, low-level web component manipulations. To address this limitation, extensive research has focused on enhancing model grounding capabilities through techniques such as Reinforcement Learning. However, rather than compelling agents to adapt to human-centric interfaces, we propose constructing interaction interfaces specifically optimized for agents. This paper introduces Component Interface for Agent (CI4A), a semantic encapsulation mechanism that abstracts the complex interaction logic of UI components into a set of unified tool primitives accessible to agents. We implemented CI4A within Ant Design, an industrial-grade front-end framework, covering 23 categories of commonly used UI components. Furthermore, we developed a hybrid agent featuring an action space that dynamically updates according to the page state, enabling flexible invocation of available CI4A tools. Leveraging the CI4A-integrated Ant Design, we refactored and upgraded the WebArena benchmark to evaluate existing SoTA methods. Experimental results demonstrate that the CI4A-based agent significantly outperforms existing approaches, achieving a new SoTA task success rate of 86.3%, alongside substantial improvements in execution efficiency.

ExpertAD: Enhancing Autonomous Driving Systems with Mixture of Experts

Nov 13, 2025

Abstract:Recent advancements in end-to-end autonomous driving systems (ADSs) underscore their potential for perception and planning capabilities. However, challenges remain. Complex driving scenarios contain rich semantic information, yet ambiguous or noisy semantics can compromise decision reliability, while interference between multiple driving tasks may hinder optimal planning. Furthermore, prolonged inference latency slows decision-making, increasing the risk of unsafe driving behaviors. To address these challenges, we propose ExpertAD, a novel framework that enhances the performance of ADS with Mixture of Experts (MoE) architecture. We introduce a Perception Adapter (PA) to amplify task-critical features, ensuring contextually relevant scene understanding, and a Mixture of Sparse Experts (MoSE) to minimize task interference during prediction, allowing for effective and efficient planning. Our experiments show that ExpertAD reduces average collision rates by up to 20% and inference latency by 25% compared to prior methods. We further evaluate its multi-skill planning capabilities in rare scenarios (e.g., accidents, yielding to emergency vehicles) and demonstrate strong generalization to unseen urban environments. Additionally, we present a case study that illustrates its decision-making process in complex driving scenarios.

Argus: Resilience-Oriented Safety Assurance Framework for End-to-End ADSs

Nov 12, 2025

Abstract:End-to-end autonomous driving systems (ADSs), with their strong capabilities in environmental perception and generalizable driving decisions, are attracting growing attention from both academia and industry. However, once deployed on public roads, ADSs are inevitably exposed to diverse driving hazards that may compromise safety and degrade system performance. This raises a strong demand for resilience of ADSs, particularly the capability to continuously monitor driving hazards and adaptively respond to potential safety violations, which is crucial for maintaining robust driving behaviors in complex driving scenarios. To bridge this gap, we propose a runtime resilience-oriented framework, Argus, to mitigate the driving hazards, thus preventing potential safety violations and improving the driving performance of an ADS. Argus continuously monitors the trajectories generated by the ADS for potential hazards and, whenever the EGO vehicle is deemed unsafe, seamlessly takes control through a hazard mitigator. We integrate Argus with three state-of-the-art end-to-end ADSs, i.e., TCP, UniAD and VAD. Our evaluation has demonstrated that Argus effectively and efficiently enhances the resilience of ADSs, improving the driving score of the ADS by up to 150.30% on average, and preventing up to 64.38% of the violations, with little additional time overhead.

* The paper has been accepted by the 40th IEEE/ACM International Conference on Automated Software Engineering, ASE 2025

Benchmarking and Enhancing LLM Agents in Localizing Linux Kernel Bugs

May 26, 2025

Abstract:The Linux kernel is a critical system, serving as the foundation for numerous systems. Bugs in the Linux kernel can cause serious consequences, affecting billions of users. Fault localization (FL), which aims at identifying the buggy code elements in software, plays an essential role in software quality assurance. While recent LLM agents have achieved promising accuracy in FL on recent benchmarks like SWE-bench, it remains unclear how well these methods perform in the Linux kernel, where FL is much more challenging due to the large-scale code base, limited observability, and diverse impact factors. In this paper, we introduce LinuxFLBench, an FL benchmark constructed from real-world Linux kernel bugs. We conduct an empirical study to assess the performance of state-of-the-art LLM agents on the Linux kernel. Our initial results reveal that existing agents struggle with this task, achieving a best top-1 accuracy of only 41.6% at file level. To address this challenge, we propose LinuxFL$^+$, an enhancement framework designed to improve FL effectiveness of LLM agents for the Linux kernel. LinuxFL$^+$ substantially improves the FL accuracy of all studied agents (e.g., 7.2% - 11.2% accuracy increase) with minimal costs. Data and code are available at https://github.com/FudanSELab/LinuxFLBench.

Code Copycat Conundrum: Demystifying Repetition in LLM-based Code Generation

Apr 17, 2025

Abstract:Despite recent advances in Large Language Models (LLMs) for code generation, the quality of LLM-generated code still faces significant challenges. One significant issue is code repetition, which refers to the model's tendency to generate structurally redundant code, resulting in inefficiencies and reduced readability. To address this, we conduct the first empirical study to investigate the prevalence and nature of repetition across 19 state-of-the-art code LLMs using three widely-used benchmarks. Our study includes both quantitative and qualitative analyses, revealing that repetition is pervasive and manifests at various granularities and extents, including character, statement, and block levels. We further summarize a taxonomy of 20 repetition patterns. Building on our findings, we propose DeRep, a rule-based technique designed to detect and mitigate repetition in generated code. We evaluate DeRep both on open-source benchmarks and in an industrial setting. Our results demonstrate that DeRep significantly outperforms baselines in reducing repetition (with average improvements of 91.3%, 93.5%, and 79.9% in rep-3, rep-line, and sim-line metrics) and enhancing code quality (with a Pass@1 increase of 208.3% over greedy search). Furthermore, integrating DeRep improves the performance of existing repetition mitigation methods, with Pass@1 improvements ranging from 53.7% to 215.7%.

The Composite Visual-Laser Navigation Method Applied in Indoor Poultry Farming Environments

Apr 11, 2025

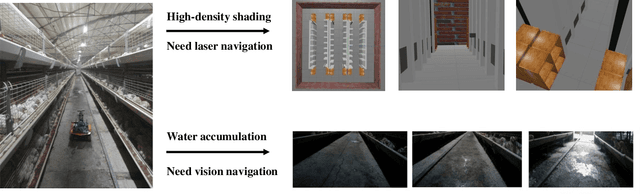

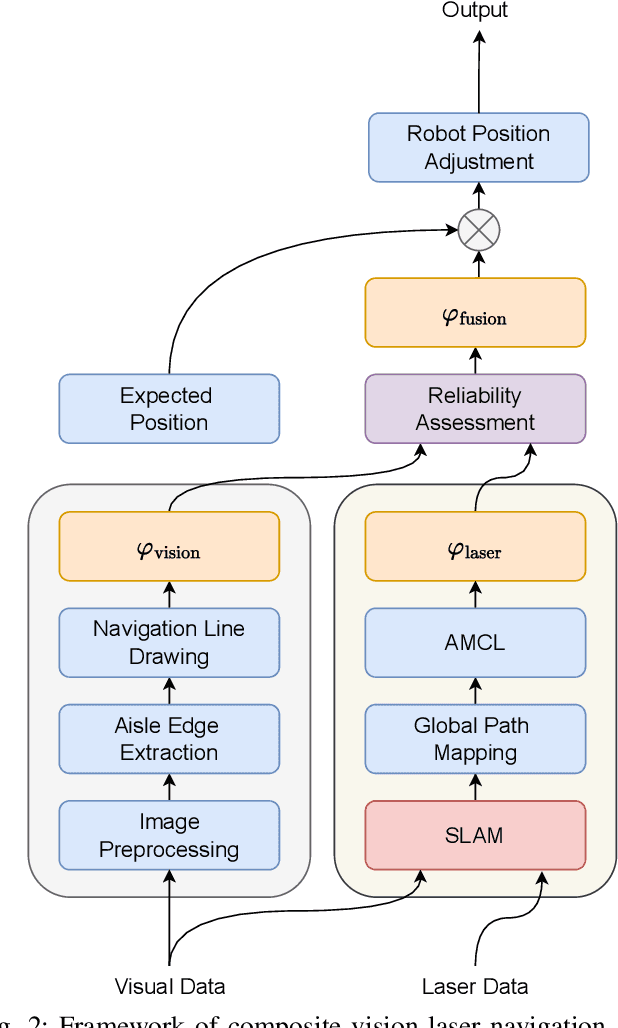

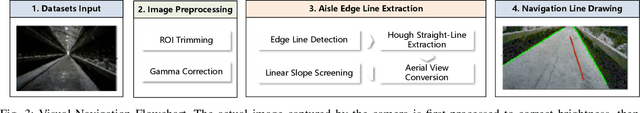

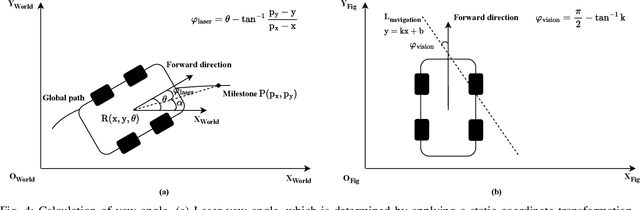

Abstract:Indoor poultry farms require inspection robots to maintain precise environmental control, which is crucial for preventing the rapid spread of disease and large-scale bird mortality. However, the complex conditions within these facilities, characterized by areas of intense illumination and water accumulation, pose significant challenges. Traditional navigation methods that rely on a single sensor often perform poorly in such environments, resulting in issues like laser drift and inaccuracies in visual navigation line extraction. To overcome these limitations, we propose a novel composite navigation method that integrates both laser and vision technologies. This approach dynamically computes a fused yaw angle based on the real-time reliability of each sensor modality, thereby eliminating the need for physical navigation lines. Experimental validation in actual poultry house environments demonstrates that our method not only resolves the inherent drawbacks of single-sensor systems, but also significantly enhances navigation precision and operational efficiency. As such, it presents a promising solution for improving the performance of inspection robots in complex indoor poultry farming settings.
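The abstract does not say how the fused yaw angle is computed, so the following is only a sketch of one common way to combine two heading estimates by reliability: a weighted circular mean. The function name `fuse_yaw`, the weight parameters, and the choice of a circular mean are all assumptions made for illustration and are not taken from the paper.

```python
import math


def fuse_yaw(laser_yaw, vision_yaw, laser_weight, vision_weight):
    """Reliability-weighted circular mean of two yaw angles in radians.

    Hypothetical helper: the weights stand in for per-sensor reliability
    scores (non-negative, not both zero). Averaging on the unit circle
    avoids errors when the two angles straddle the +pi / -pi boundary.
    """
    if laser_weight < 0 or vision_weight < 0:
        raise ValueError("weights must be non-negative")
    if laser_weight == 0 and vision_weight == 0:
        raise ValueError("at least one weight must be positive")
    x = laser_weight * math.cos(laser_yaw) + vision_weight * math.cos(vision_yaw)
    y = laser_weight * math.sin(laser_yaw) + vision_weight * math.sin(vision_yaw)
    return math.atan2(y, x)


# Equal trust in both sensors gives the angle halfway between them.
print(fuse_yaw(0.2, 0.4, 1.0, 1.0))

# If vision is judged unreliable (weight 0), the laser yaw is used as-is.
print(fuse_yaw(0.2, 0.4, 1.0, 0.0))
```

Under this scheme, lowering a sensor's weight when its reading degrades (for example, vision under intense illumination) shifts the fused heading toward the other sensor without a hard switch between them.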

RoadGen: Generating Road Scenarios for Autonomous Vehicle Testing

Nov 29, 2024

Abstract:With the rapid development of autonomous vehicles, there is an increasing demand for scenario-based testing to simulate diverse driving scenarios. However, as the basis of any driving scenario, road scenarios (e.g., road topology and geometry) have received little attention in the literature. Despite several advances, existing approaches either generate basic road components without a complete road network, or generate a complete road network but with simple road components. The resulting road scenarios lack diversity in both topology and geometry. To address this problem, we propose RoadGen to systematically generate diverse road scenarios. The key idea is to connect eight types of parameterized road components to form road scenarios with high diversity in topology and geometry. Our evaluation has demonstrated the effectiveness and usefulness of RoadGen in generating diverse road scenarios for simulation.
