Zixian Zhang

Identity-GRPO: Optimizing Multi-Human Identity-preserving Video Generation via Reinforcement Learning

Oct 16, 2025Abstract:While advanced methods like VACE and Phantom have advanced video generation for specific subjects in diverse scenarios, they struggle with multi-human identity preservation in dynamic interactions, where consistent identities across multiple characters are critical. To address this, we propose Identity-GRPO, a human feedback-driven optimization pipeline for refining multi-human identity-preserving video generation. First, we construct a video reward model trained on a large-scale preference dataset containing human-annotated and synthetic distortion data, with pairwise annotations focused on maintaining human consistency throughout the video. We then employ a GRPO variant tailored for multi-human consistency, which greatly enhances both VACE and Phantom. Through extensive ablation studies, we evaluate the impact of annotation quality and design choices on policy optimization. Experiments show that Identity-GRPO achieves up to 18.9% improvement in human consistency metrics over baseline methods, offering actionable insights for aligning reinforcement learning with personalized video generation.

AST-Enhanced or AST-Overloaded? The Surprising Impact of Hybrid Graph Representations on Code Clone Detection

Jun 17, 2025Abstract:As one of the most detrimental code smells, code clones significantly increase software maintenance costs and heighten vulnerability risks, making their detection a critical challenge in software engineering. Abstract Syntax Trees (ASTs) dominate deep learning-based code clone detection due to their precise syntactic structure representation, but they inherently lack semantic depth. Recent studies address this by enriching AST-based representations with semantic graphs, such as Control Flow Graphs (CFGs) and Data Flow Graphs (DFGs). However, the effectiveness of various enriched AST-based representations and their compatibility with different graph-based machine learning techniques remains an open question, warranting further investigation to unlock their full potential in addressing the complexities of code clone detection. In this paper, we present a comprehensive empirical study to rigorously evaluate the effectiveness of AST-based hybrid graph representations in Graph Neural Network (GNN)-based code clone detection. We systematically compare various hybrid representations ((CFG, DFG, Flow-Augmented ASTs (FA-AST)) across multiple GNN architectures. Our experiments reveal that hybrid representations impact GNNs differently: while AST+CFG+DFG consistently enhances accuracy for convolution- and attention-based models (Graph Convolutional Networks (GCN), Graph Attention Networks (GAT)), FA-AST frequently introduces structural complexity that harms performance. Notably, GMN outperforms others even with standard AST representations, highlighting its superior cross-code similarity detection and reducing the need for enriched structures.

Unifying Segment Anything in Microscopy with Multimodal Large Language Model

May 16, 2025Abstract:Accurate segmentation of regions of interest in biomedical images holds substantial value in image analysis. Although several foundation models for biomedical segmentation have currently achieved excellent performance on certain datasets, they typically demonstrate sub-optimal performance on unseen domain data. We owe the deficiency to lack of vision-language knowledge before segmentation. Multimodal Large Language Models (MLLMs) bring outstanding understanding and reasoning capabilities to multimodal tasks, which inspires us to leverage MLLMs to inject Vision-Language Knowledge (VLK), thereby enabling vision models to demonstrate superior generalization capabilities on cross-domain datasets. In this paper, we propose using MLLMs to guide SAM in learning microscopy crose-domain data, unifying Segment Anything in Microscopy, named uLLSAM. Specifically, we propose the Vision-Language Semantic Alignment (VLSA) module, which injects VLK into Segment Anything Model (SAM). We find that after SAM receives global VLK prompts, its performance improves significantly, but there are deficiencies in boundary contour perception. Therefore, we further propose Semantic Boundary Regularization (SBR) to prompt SAM. Our method achieves performance improvements of 7.71% in Dice and 12.10% in SA across 9 in-domain microscopy datasets, achieving state-of-the-art performance. Our method also demonstrates improvements of 6.79% in Dice and 10.08% in SA across 10 out-ofdomain datasets, exhibiting strong generalization capabilities. Code is available at https://github.com/ieellee/uLLSAM.

The Composite Visual-Laser Navigation Method Applied in Indoor Poultry Farming Environments

Apr 11, 2025

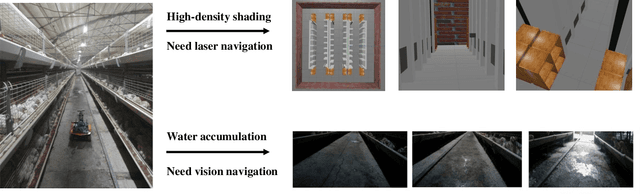

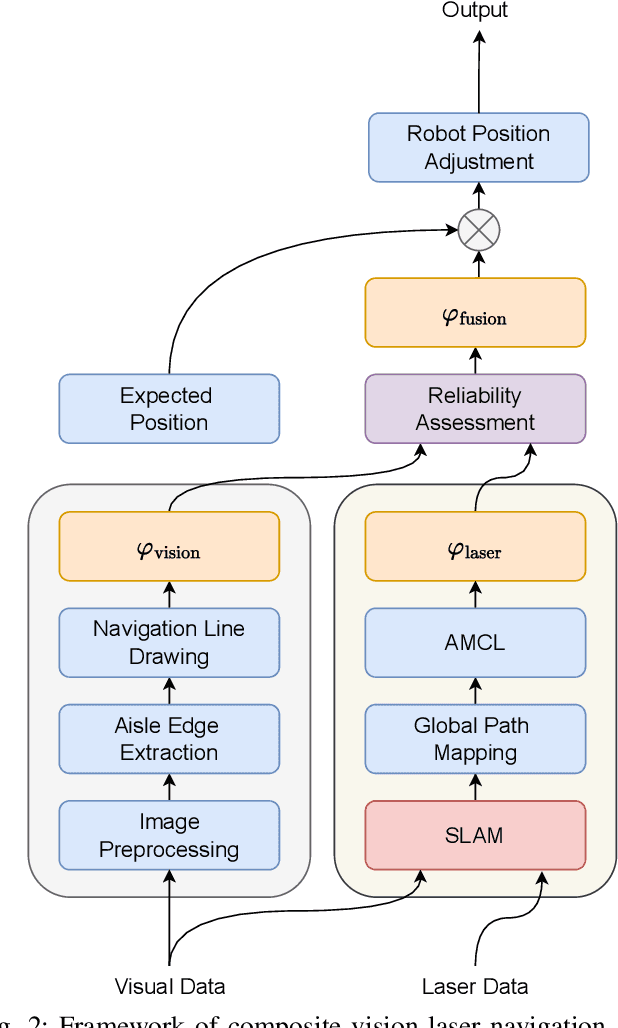

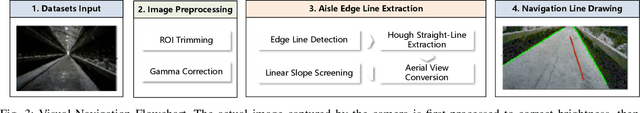

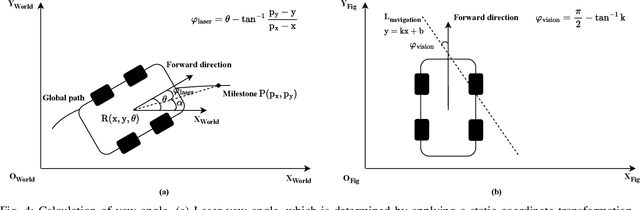

Abstract:Indoor poultry farms require inspection robots to maintain precise environmental control, which is crucial for preventing the rapid spread of disease and large-scale bird mortality. However, the complex conditions within these facilities, characterized by areas of intense illumination and water accumulation, pose significant challenges. Traditional navigation methods that rely on a single sensor often perform poorly in such environments, resulting in issues like laser drift and inaccuracies in visual navigation line extraction. To overcome these limitations, we propose a novel composite navigation method that integrates both laser and vision technologies. This approach dynamically computes a fused yaw angle based on the real-time reliability of each sensor modality, thereby eliminating the need for physical navigation lines. Experimental validation in actual poultry house environments demonstrates that our method not only resolves the inherent drawbacks of single-sensor systems, but also significantly enhances navigation precision and operational efficiency. As such, it presents a promising solution for improving the performance of inspection robots in complex indoor poultry farming settings.

Assessing the Code Clone Detection Capability of Large Language Models

Jul 02, 2024Abstract:This study aims to assess the performance of two advanced Large Language Models (LLMs), GPT-3.5 and GPT-4, in the task of code clone detection. The evaluation involves testing the models on a variety of code pairs of different clone types and levels of similarity, sourced from two datasets: BigCloneBench (human-made) and GPTCloneBench (LLM-generated). Findings from the study indicate that GPT-4 consistently surpasses GPT-3.5 across all clone types. A correlation was observed between the GPTs' accuracy at identifying code clones and code similarity, with both GPT models exhibiting low effectiveness in detecting the most complex Type-4 code clones. Additionally, GPT models demonstrate a higher performance identifying code clones in LLM-generated code compared to humans-generated code. However, they do not reach impressive accuracy. These results emphasize the imperative for ongoing enhancements in LLM capabilities, particularly in the recognition of code clones and in mitigating their predisposition towards self-generated code clones--which is likely to become an issue as software engineers are more numerous to leverage LLM-enabled code generation and code refactoring tools.

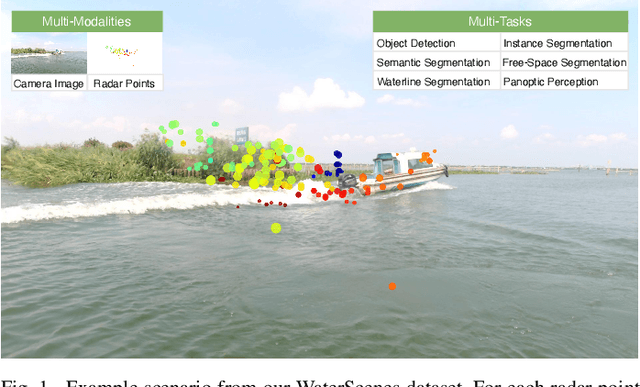

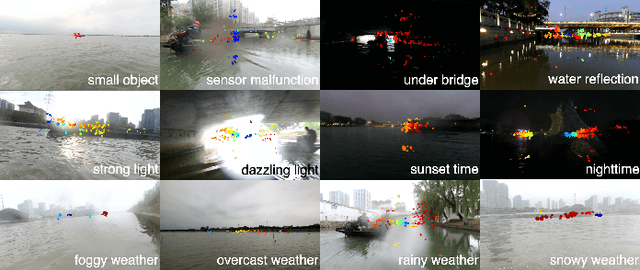

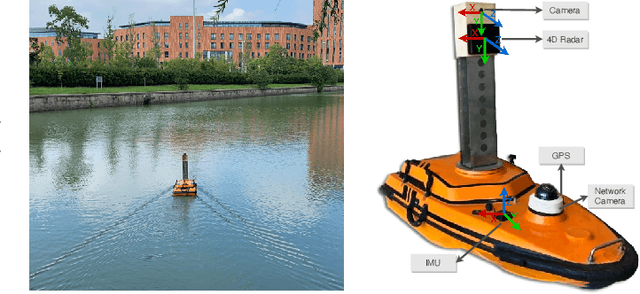

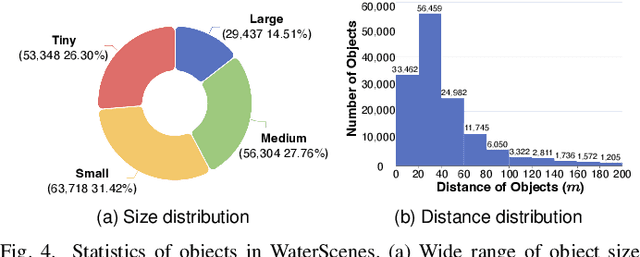

WaterScenes: A Multi-Task 4D Radar-Camera Fusion Dataset and Benchmark for Autonomous Driving on Water Surfaces

Jul 13, 2023

Abstract:Autonomous driving on water surfaces plays an essential role in executing hazardous and time-consuming missions, such as maritime surveillance, survivors rescue, environmental monitoring, hydrography mapping and waste cleaning. This work presents WaterScenes, the first multi-task 4D radar-camera fusion dataset for autonomous driving on water surfaces. Equipped with a 4D radar and a monocular camera, our Unmanned Surface Vehicle (USV) proffers all-weather solutions for discerning object-related information, including color, shape, texture, range, velocity, azimuth, and elevation. Focusing on typical static and dynamic objects on water surfaces, we label the camera images and radar point clouds at pixel-level and point-level, respectively. In addition to basic perception tasks, such as object detection, instance segmentation and semantic segmentation, we also provide annotations for free-space segmentation and waterline segmentation. Leveraging the multi-task and multi-modal data, we conduct numerous experiments on the single modality of radar and camera, as well as the fused modalities. Results demonstrate that 4D radar-camera fusion can considerably enhance the robustness of perception on water surfaces, especially in adverse lighting and weather conditions. WaterScenes dataset is public on https://waterscenes.github.io.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge