Weikang Wang

Symmetry Informative and Agnostic Feature Disentanglement for 3D Shapes

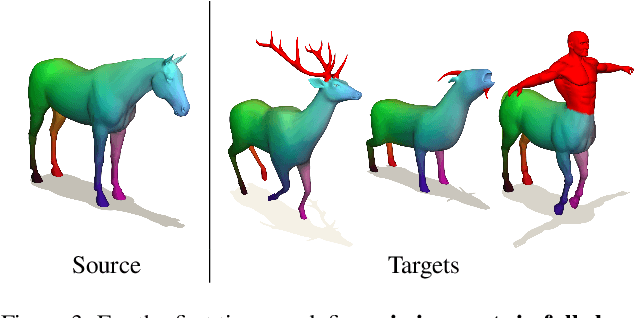

Jan 21, 2026

Abstract:Shape descriptors, i.e., per-vertex features of 3D meshes or point clouds, are fundamental to shape analysis. Historically, various handcrafted geometry-aware descriptors and feature refinement techniques have been proposed. Recently, several studies have initiated a new research direction by leveraging features from image foundation models to create semantics-aware descriptors, demonstrating advantages across tasks like shape matching, editing, and segmentation. Symmetry, another key concept in shape analysis, has also attracted increasing attention. Consequently, constructing symmetry-aware shape descriptors is a natural progression. Although the recent method $χ$ (Wang et al., 2025) successfully extracted symmetry-informative features from semantics-aware descriptors, its features are only one-dimensional, neglecting other valuable semantic information. Furthermore, the extracted symmetry-informative feature is usually noisy and yields small misclassified patches. To address these gaps, we propose a feature disentanglement approach which is simultaneously symmetry informative and symmetry agnostic. Further, we propose a feature refinement technique to improve the robustness of predicted symmetry informative features. Extensive experiments, including intrinsic symmetry detection, left/right classification, and shape matching, demonstrate the effectiveness of our proposed framework compared to various state-of-the-art methods, both qualitatively and quantitatively.

Symmetry Understanding of 3D Shapes via Chirality Disentanglement

Aug 07, 2025

Abstract:Chirality information (i.e. information that allows distinguishing left from right) is ubiquitous for various data modes in computer vision, including images, videos, point clouds, and meshes. While chirality has been extensively studied in the image domain, its exploration in shape analysis (such as point clouds and meshes) remains underdeveloped. Although many shape vertex descriptors have shown appealing properties (e.g. robustness to rigid-body transformations), they are often not able to disambiguate between left and right symmetric parts. Considering the ubiquity of chirality information in different shape analysis problems and the lack of chirality-aware features within current shape descriptors, developing a chirality feature extractor becomes necessary and urgent. Based on the recent Diff3F framework, we propose an unsupervised chirality feature extraction pipeline to decorate shape vertices with chirality-aware information, extracted from 2D foundation models. We evaluated the extracted chirality features through quantitative and qualitative experiments across diverse datasets. Results from downstream tasks including left-right disentanglement, shape matching, and part segmentation demonstrate their effectiveness and practical utility. Project page: https://wei-kang-wang.github.io/chirality/

Hunyuan-TurboS: Advancing Large Language Models through Mamba-Transformer Synergy and Adaptive Chain-of-Thought

May 21, 2025

Abstract:As Large Language Models (LLMs) rapidly advance, we introduce Hunyuan-TurboS, a novel large hybrid Transformer-Mamba Mixture of Experts (MoE) model. It synergistically combines Mamba's long-sequence processing efficiency with Transformer's superior contextual understanding. Hunyuan-TurboS features an adaptive long-short chain-of-thought (CoT) mechanism, dynamically switching between rapid responses for simple queries and deep "thinking" modes for complex problems, optimizing computational resources. Architecturally, this 56B activated (560B total) parameter model employs 128 layers (Mamba2, Attention, FFN) with an innovative AMF/MF block pattern. Faster Mamba2 ensures linear complexity, Grouped-Query Attention minimizes KV cache, and FFNs use an MoE structure. Pre-trained on 16T high-quality tokens, it supports a 256K context length and is the first industry-deployed large-scale Mamba model. Our comprehensive post-training strategy enhances capabilities via Supervised Fine-Tuning (3M instructions), a novel Adaptive Long-short CoT Fusion method, Multi-round Deliberation Learning for iterative improvement, and a two-stage Large-scale Reinforcement Learning process targeting STEM and general instruction-following. Evaluations show strong performance: overall top 7 rank on LMSYS Chatbot Arena with a score of 1356, outperforming leading models like Gemini-2.0-Flash-001 (1352) and o4-mini-2025-04-16 (1345). TurboS also achieves an average of 77.9% across 23 automated benchmarks. Hunyuan-TurboS balances high performance and efficiency, offering substantial capabilities at lower inference costs than many reasoning models, establishing a new paradigm for efficient large-scale pre-trained models.

HUNYUANPROVER: A Scalable Data Synthesis Framework and Guided Tree Search for Automated Theorem Proving

Dec 30, 2024

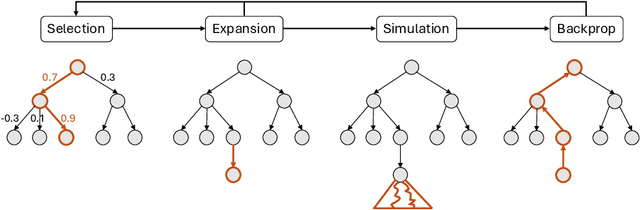

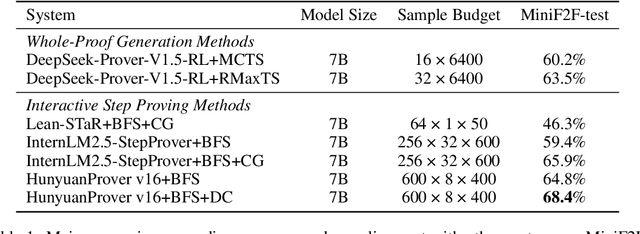

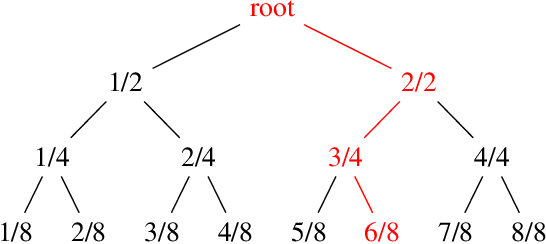

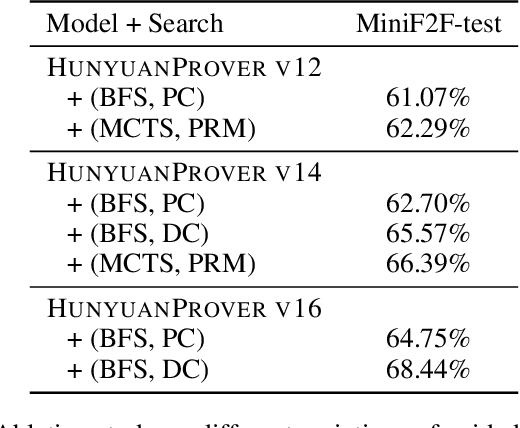

Abstract:We introduce HunyuanProver, a language model finetuned from Hunyuan 7B for interactive automatic theorem proving with LEAN4. To alleviate the data sparsity issue, we design a scalable framework to iteratively synthesize data at low cost. In addition, guided tree search algorithms are designed to enable effective "system 2 thinking" by the prover. HunyuanProver achieves state-of-the-art (SOTA) performance on major benchmarks. Specifically, it achieves a pass rate of 68.4% on miniF2F-test, compared with the current SOTA result of 65.9%. It proves 4 IMO statements (imo_1960_p2, imo_1962_p2, imo_1964_p2 and imo_1983_p6) in miniF2F-test. To benefit the community, we will open-source a dataset of 30k synthesized instances, where each instance contains the original question in natural language, the converted statement by autoformalization, and the proof by HunyuanProver.

Beyond Complete Shapes: A quantitative Evaluation of 3D Shape Matching Algorithms

Nov 05, 2024

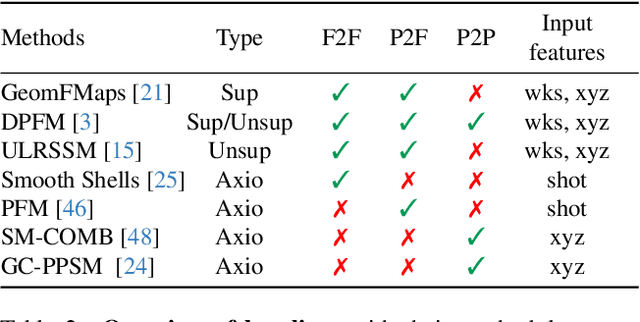

Abstract:Finding correspondences between 3D shapes is an important and long-standing problem in computer vision, graphics and beyond. While approaches based on machine learning dominate modern 3D shape matching, almost all existing (learning-based) methods require that at least one of the involved shapes is complete. In contrast, the most challenging and arguably most practically relevant setting, matching partially observed shapes, is currently underexplored. One important factor is that existing datasets contain only a small number of shapes (typically below 100), too few to serve data-hungry machine learning approaches, particularly in the unsupervised regime. In addition, the type of partiality present in existing datasets is often artificial and far from realistic. To address these limitations and to encourage research on these relevant settings, we provide a generic and flexible framework for the procedural generation of challenging partial shape matching scenarios. Our framework allows for a virtually infinite generation of partial shape matching instances from a finite set of shapes with complete geometry. Further, we manually create cross-dataset correspondences between seven existing (complete geometry) shape matching datasets, leading to a total of 2543 shapes. Based on this, we propose several challenging partial benchmark settings, for which we evaluate respective state-of-the-art methods as baselines.

Taking a Deep Breath: Enhancing Language Modeling of Large Language Models with Sentinel Tokens

Jun 16, 2024

Abstract:Large language models (LLMs) have shown promising efficacy across various tasks, becoming powerful tools in numerous aspects of human life. However, Transformer-based LLMs suffer performance degradation when modeling long-term contexts because they discard some information to reduce computational overhead. In this work, we propose a simple yet effective method to enable LLMs to take a deep breath, encouraging them to summarize information contained within discrete text chunks. Specifically, we segment the text into multiple chunks and insert a special token <SR> at the end of each chunk. We then modify the attention mask to integrate the chunk's information into the corresponding <SR> token. This enables LLMs to interpret information not only from historical individual tokens but also from the <SR> token, which aggregates the chunk's semantic information. Experiments on language modeling and out-of-domain downstream tasks validate the superiority of our approach.
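The chunk-and-sentinel idea described in this abstract can be illustrated with a minimal sketch. The abstract does not specify the exact mask, so the rule used here is an assumption made purely for illustration: ordinary tokens keep a standard causal mask, while each `<SR>` token attends only to the tokens of its own chunk and to itself. The helper names `insert_sentinels` and `build_mask` and the fixed `chunk_size` are hypothetical and not taken from the paper.

```python
from typing import List

SR = "<SR>"  # sentinel token named in the abstract


def insert_sentinels(tokens: List[str], chunk_size: int) -> List[str]:
    """Split tokens into fixed-size chunks and append <SR> after each chunk."""
    out: List[str] = []
    for start in range(0, len(tokens), chunk_size):
        out.extend(tokens[start:start + chunk_size])
        out.append(SR)
    return out


def build_mask(seq: List[str]) -> List[List[bool]]:
    """Return mask[i][j] == True when position i may attend to position j.

    Assumed rule (not from the paper): ordinary tokens are causal;
    each <SR> sees only its own chunk and itself.
    """
    n = len(seq)
    # Start from a plain causal mask.
    mask = [[j <= i for j in range(n)] for i in range(n)]
    chunk_start = 0
    for i, tok in enumerate(seq):
        if tok == SR:
            # Hide everything before the current chunk from this <SR>.
            for j in range(chunk_start):
                mask[i][j] = False
            chunk_start = i + 1
    return mask


seq = insert_sentinels(["a", "b", "c", "d", "e"], chunk_size=2)
mask = build_mask(seq)
```

Here `seq` becomes `a b <SR> c d <SR> e <SR>`, and the second `<SR>` (position 5) can attend only to `c`, `d`, and itself, so its representation summarizes that chunk alone under the assumed rule.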

Quite Good, but Not Enough: Nationality Bias in Large Language Models -- A Case Study of ChatGPT

May 11, 2024

Abstract:While nationality is a pivotal demographic element that enhances the performance of language models, it has received far less scrutiny regarding inherent biases. This study investigates nationality bias in ChatGPT (GPT-3.5), a large language model (LLM) designed for text generation. The research covers 195 countries, 4 temperature settings, and 3 distinct prompt types, generating 4,680 discourses about nationality descriptions in Chinese and English. Automated metrics were used to analyze the nationality bias, and expert annotators alongside ChatGPT itself evaluated the perceived bias. The results show that ChatGPT's generated discourses are predominantly positive, especially compared to its predecessor, GPT-2. However, when prompted with negative inclinations, it occasionally produces negative content. Despite ChatGPT considering its generated text as neutral, it shows consistent self-awareness about nationality bias when subjected to the same pair-wise comparison annotation framework used by human annotators. In conclusion, while ChatGPT's generated texts seem friendly and positive, they reflect the inherent nationality biases in the real world. This bias may vary across different language versions of ChatGPT, indicating diverse cultural perspectives. The study highlights the subtle and pervasive nature of biases within LLMs, emphasizing the need for further scrutiny.
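The reported number of discourses is consistent with a full factorial design, assuming (this is an inference, not stated in the abstract) that each combination of country, temperature, and prompt type was generated once in each of the two languages:

$$195 \times 4 \times 3 \times 2 = 4{,}680$$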

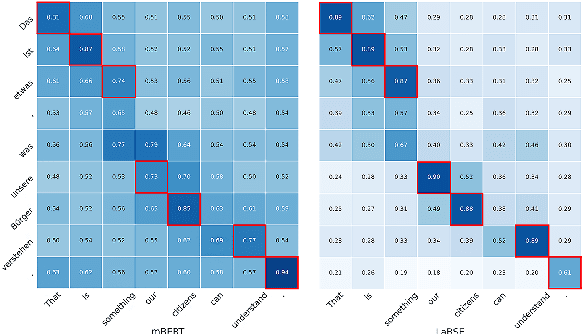

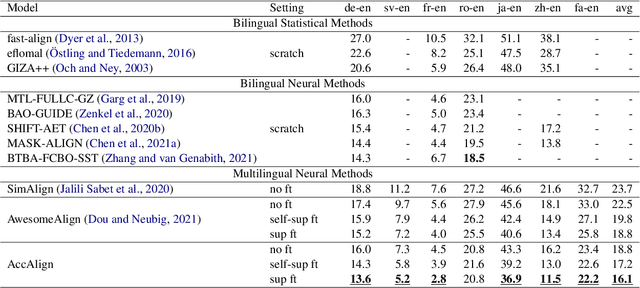

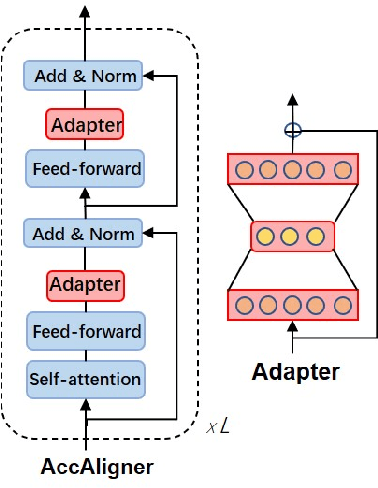

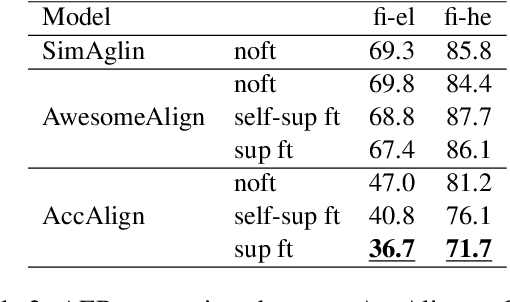

Multilingual Sentence Transformer as A Multilingual Word Aligner

Jan 28, 2023

Abstract:Multilingual pretrained language models (mPLMs) have shown their effectiveness in multilingual word alignment induction. However, these methods usually start from mBERT or XLM-R. In this paper, we investigate whether the multilingual sentence Transformer LaBSE is a strong multilingual word aligner. This idea is non-trivial as LaBSE is trained to learn language-agnostic sentence-level embeddings, while the alignment extraction task requires the more fine-grained word-level embeddings to be language-agnostic. We demonstrate that the vanilla LaBSE outperforms other mPLMs currently used in the alignment task, and then propose to finetune LaBSE on parallel corpora for further improvement. Experimental results on seven language pairs show that our best aligner outperforms previous state-of-the-art models of all varieties. In addition, our aligner supports different language pairs in a single model, and even achieves new state-of-the-art results on zero-shot language pairs that do not appear in the finetuning process.

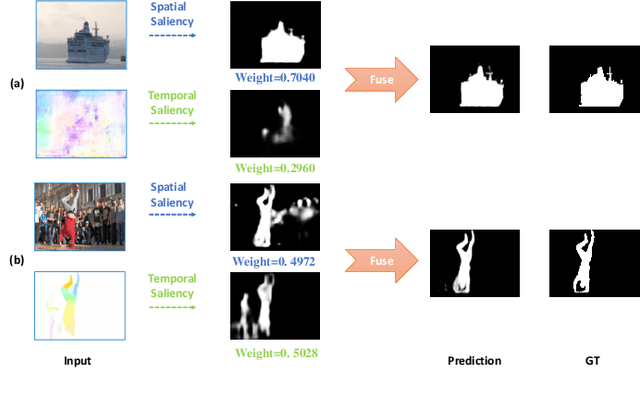

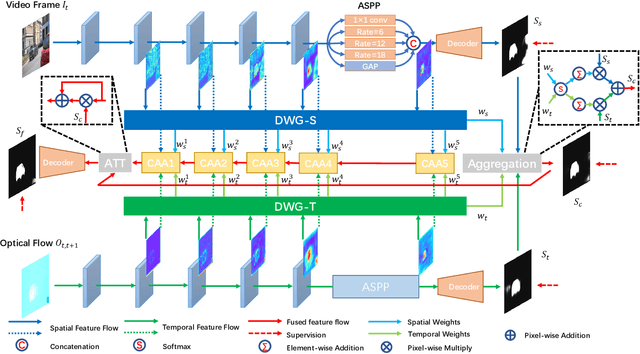

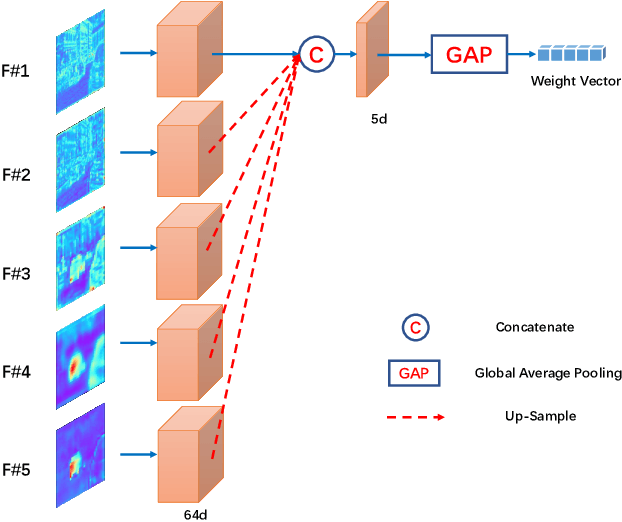

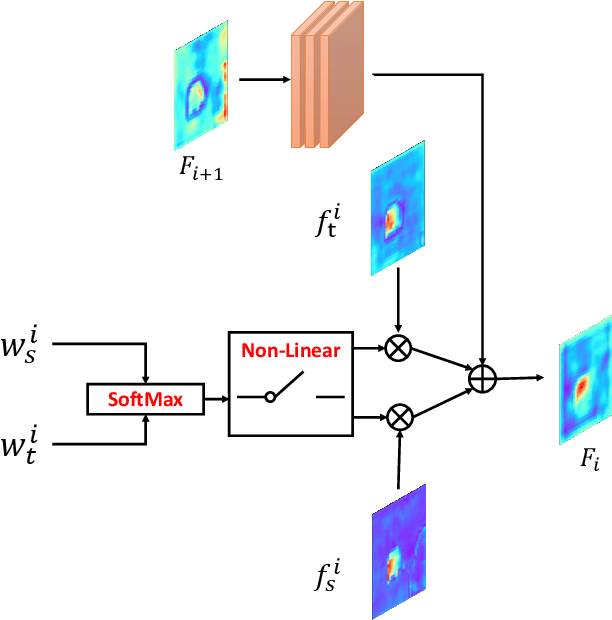

DS-Net: Dynamic Spatiotemporal Network for Video Salient Object Detection

Dec 09, 2020

Abstract:Because moving objects draw more human visual attention, temporal motion information is commonly exploited alongside spatial information to detect salient objects in videos. Although efficient tools such as optical flow have been proposed to extract temporal motion information, they often encounter difficulties when used for saliency detection due to camera movement or the partial movement of salient objects. In this paper, we investigate the complementary roles of spatial and temporal information and propose a novel dynamic spatiotemporal network (DS-Net) for more effective fusion of spatiotemporal information. We construct a symmetric two-bypass network to explicitly extract spatial and temporal features. A dynamic weight generator (DWG) is designed to automatically learn the reliability of the corresponding saliency branch, and a top-down cross attentive aggregation (CAA) procedure is designed to facilitate dynamic complementary aggregation of spatiotemporal features. Finally, the features are modified by spatial attention under the guidance of a coarse saliency map and then pass through the decoder to produce the final saliency map. Experimental results on five benchmarks (VOS, DAVIS, FBMS, SegTrack-v2, and ViSal) demonstrate that the proposed method achieves superior performance to state-of-the-art algorithms. The source code is available at https://github.com/TJUMMG/DS-Net.

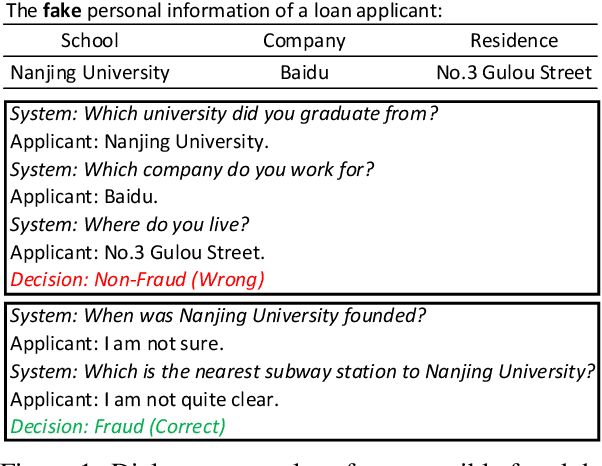

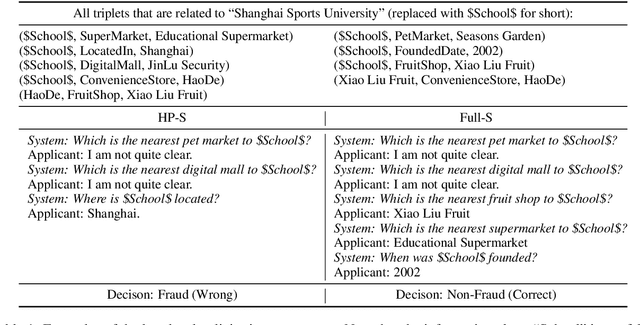

Are You for Real? Detecting Identity Fraud via Dialogue Interactions

Aug 19, 2019

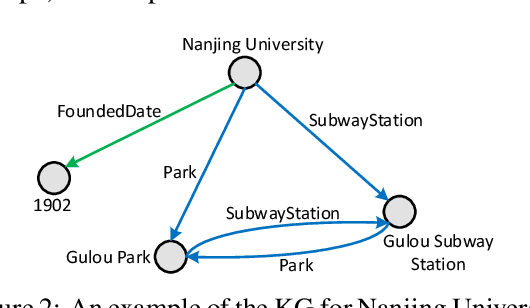

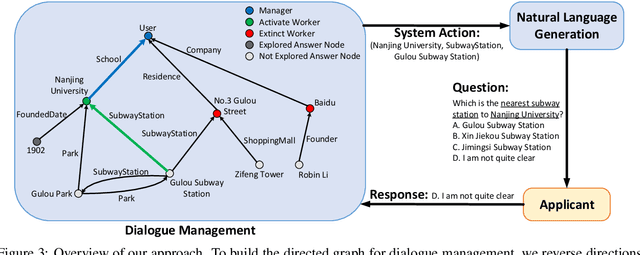

Abstract:Identity fraud detection is of great importance in many real-world scenarios such as the financial industry. However, few studies have addressed this problem. In this paper, we focus on identity fraud detection in loan applications and propose to solve this problem with a novel interactive dialogue system which consists of two modules. One is the knowledge graph (KG) constructor, which organizes the personal information for each loan applicant. The other is structured dialogue management, which dynamically generates a series of questions based on the personal KG to ask the applicants and determine their identity states. We also present a heuristic user simulator based on problem analysis to evaluate our method. Experiments show that the trainable dialogue system can effectively detect fraudsters and achieve higher recognition accuracy than rule-based systems. Furthermore, our learned dialogue strategies are interpretable and flexible, which can help promote real-world applications.
