Shaowei Jiang

Video-rate gigapixel ptychography via space-time neural field representations

Nov 08, 2025

Abstract:Achieving gigapixel space-bandwidth products (SBP) at video rates is a fundamental challenge in imaging science. Here we demonstrate video-rate ptychography that overcomes this barrier by exploiting spatiotemporal correlations through neural field representations. Our approach factorizes the space-time volume into low-rank spatial and temporal features, shifting SBP scaling from sequential measurements to efficient correlation extraction. The architecture employs dual networks to decode the real and imaginary field components, avoiding the phase-wrapping discontinuities that plague amplitude-phase representations. A gradient-domain loss on spatial derivatives ensures robust convergence. We demonstrate video-rate gigapixel imaging with centimeter-scale coverage while resolving 308-nm linewidths. Validations range from monitoring the dynamics of crystals, bacteria, stem cells, and microneedles to characterizing time-varying probes in extreme ultraviolet experiments, demonstrating versatility across wavelengths. By turning temporal variations from a constraint into exploitable correlations, we establish that gigapixel video is tractable with single-sensor measurements, making ptychography a high-throughput, lensless sensing tool for monitoring mesoscale dynamics.
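
A minimal NumPy sketch of the space-time factorization and the gradient-domain loss described above follows. It assumes a plain rank-R (CP-style) decomposition in which every frame is a weighted sum of R spatial feature maps, with separate real and imaginary spatial factors standing in for the two decoder networks; the rank, the linear decoding, and all function names are hypothetical simplifications rather than the authors' architecture.

```python
import numpy as np


def low_rank_field(spatial_re, spatial_im, temporal):
    """Assemble a complex space-time volume from rank-R factors.

    spatial_re, spatial_im: (R, H, W) spatial feature maps for the real and
        imaginary parts (hypothetical stand-ins for the two decoder networks).
    temporal: (T, R) per-frame coefficients shared by both parts.
    Returns a complex array of shape (T, H, W).
    """
    real = np.einsum("tr,rhw->thw", temporal, spatial_re)
    imag = np.einsum("tr,rhw->thw", temporal, spatial_im)
    # Phase is only derived afterwards (np.angle), so the fitted quantities
    # themselves never contain 2*pi wrapping jumps.
    return real + 1j * imag


def gradient_domain_loss(pred, target):
    """Mean squared difference of finite-difference derivatives along y and x."""
    loss = 0.0
    for axis in (-2, -1):
        d_pred = np.diff(pred, axis=axis)
        d_target = np.diff(target, axis=axis)
        loss += np.mean(np.abs(d_pred - d_target) ** 2)
    return loss


# Hypothetical example: 100 frames of 32 x 32 pixels at rank 4.
rng = np.random.default_rng(0)
R, T, H, W = 4, 100, 32, 32
spatial_re = rng.standard_normal((R, H, W))
spatial_im = rng.standard_normal((R, H, W))
temporal = rng.standard_normal((T, R))
volume = low_rank_field(spatial_re, spatial_im, temporal)
phase = np.angle(volume)  # wrapped phase, computed only for display
```

With these sizes the factors hold 2·4·1024 + 100·4 = 8,592 real parameters, versus 204,800 real numbers for the full complex volume. Because the real and imaginary parts are fitted directly, the wrapped phase is only a derived quantity.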

DEFN: Dual-Encoder Fourier Group Harmonics Network for Three-Dimensional Macular Hole Reconstruction with Stochastic Retinal Defect Augmentation and Dynamic Weight Composition

Nov 01, 2023

Abstract:The spatial and quantitative parameters of macular holes are vital for diagnosis, surgical decision-making, and post-operative monitoring, yet the scarcity of such data has impeded the development of deep learning techniques for effective segmentation and real-time 3D reconstruction. To address this challenge, we assembled the world's largest macular hole dataset, Retinal OCT for Macular Hole Enhancement (ROME-3914), and a Comprehensive Archive for Retinal Segmentation (CARS-30k), both expertly annotated. In addition, we developed a 3D segmentation network, the Dual-Encoder FuGH Network (DEFN), which integrates three new modules: Fourier Group Harmonics (FuGH), Simplified 3D Spatial Attention (S3DSA), and the Harmonic Squeeze-and-Excitation Module (HSE). Together, these modules filter noise, reduce computational complexity, emphasize detailed features, and enhance the network's representational ability. We also propose a data augmentation method, Stochastic Retinal Defect Injection (SRDI), and a network optimization strategy, DynamicWeightCompose (DWC), to further improve the performance of DEFN. Compared with 13 baselines, DEFN achieves the best performance. We also provide precise 3D retinal reconstruction and quantitative metrics, offering ophthalmologists new tools for diagnostic and therapeutic decision-making that are expected to reshape the diagnosis and treatment of difficult-to-treat macular degeneration. The source code is publicly available at: https://github.com/IIPL-HangzhouDianUniversity/DEFN-Pytorch.

Digital staining in optical microscopy using deep learning -- a review

Mar 14, 2023

Abstract:Until recently, conventional biochemical staining held undisputed status as the well-established benchmark for most biomedical problems related to clinical diagnostics, fundamental research, and biotechnology. Despite this gold-standard role, staining protocols face several challenges, such as the need for extensive manual processing of samples, substantial time delays, altered tissue homeostasis, a limited choice of contrast agents for a given sample, and 2D imaging instead of 3D tomography, among many others. Label-free optical technologies, on the other hand, do not rely on exogenous, artificial markers; instead, they exploit intrinsic optical contrast mechanisms, whose specificity is typically less obvious to the human observer. Over the past few years, digital staining has emerged as a promising concept that uses modern deep learning to translate optical contrast into the established biochemical contrast of actual stains. In this review article, we provide an in-depth analysis of the current state of the art in this field, suggest good-practice methods, identify pitfalls and challenges, and postulate promising advances toward future implementations and applications.

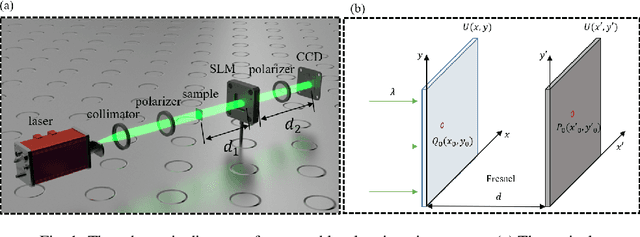

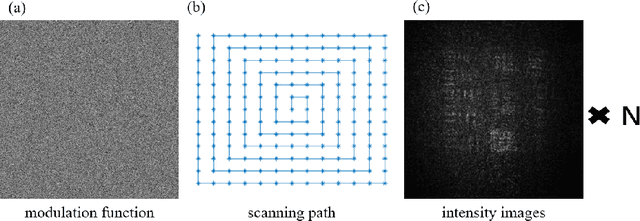

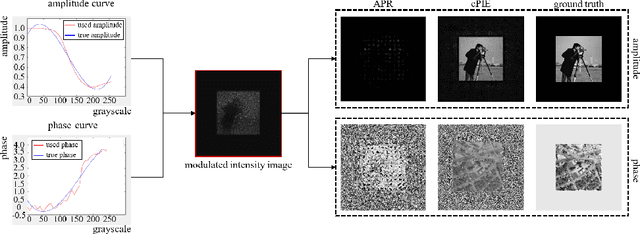

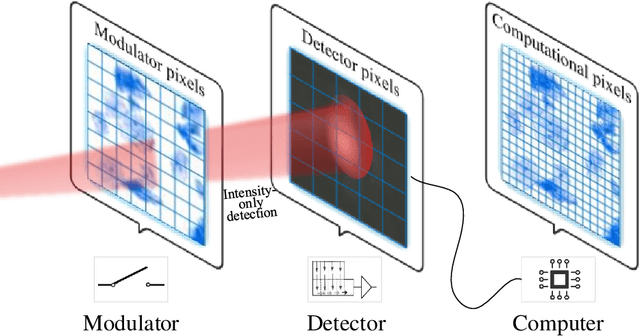

Lensless coherent diffraction imaging based on spatial light modulator with unknown modulation curve

Apr 08, 2022

Abstract:Lensless imaging has become a popular research field in recent years owing to its small size, wide field of view, and low aberration. However, some traditional lensless imaging methods suffer from slow convergence, mechanical errors, and conjugate-solution interference, which limit their further application and development. In this work, we propose a lensless imaging method based on a spatial light modulator (SLM) with an unknown modulation curve. In our imaging system, the SLM modulates the object wavefront, and we introduce a ptychographic scanning algorithm that can recover the complex amplitude even when the SLM modulation curve is inaccurate or unknown. In addition, we design a split-beam interference experiment to calibrate the SLM modulation curve; using the calibrated modulation function as the initial value of the extended ptychographical iterative engine (ePIE) algorithm improves the convergence speed. We further analyze the effects of the modulation function, the algorithm parameters, and the characteristics of the coherent light source on the quality of the reconstructed image. Simulations and experiments show that the proposed method outperforms traditional mechanical scanning methods in reconstruction speed and accuracy, achieving a resolution of up to 14 μm.
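
The ePIE update referred to above can be sketched for a single measurement as follows. This is the generic ePIE step under an assumed far-field (FFT) propagation model, with the SLM-modulated wavefront playing the role of the probe so that a probe update can absorb errors in an inaccurate modulation curve; the propagation model, step sizes, and function name are assumptions, not the authors' exact implementation.

```python
import numpy as np


def epie_update(obj, probe, intensity, alpha=1.0, beta=1.0, eps=1e-12):
    """One ePIE step for a single diffraction pattern.

    obj, probe: complex 2D arrays of equal shape. Here the "probe" is the
        SLM-modulated wavefront, so updating it can compensate for an
        inaccurate modulation curve.
    intensity: measured far-field intensity (FFT propagation is assumed).
    alpha, beta: object and probe step sizes (beta=0 keeps the probe fixed).
    """
    exit_wave = obj * probe
    far_field = np.fft.fft2(exit_wave)
    # Modulus constraint: keep the estimated phase, impose the measured amplitude.
    corrected = np.sqrt(intensity) * np.exp(1j * np.angle(far_field))
    delta = np.fft.ifft2(corrected) - exit_wave
    new_obj = obj + alpha * np.conj(probe) / (np.abs(probe).max() ** 2 + eps) * delta
    new_probe = probe + beta * np.conj(obj) / (np.abs(obj).max() ** 2 + eps) * delta
    return new_obj, new_probe


# Hypothetical example: a phase object and one random phase-only SLM pattern.
rng = np.random.default_rng(1)
n = 16
true_obj = np.exp(1j * rng.uniform(0, 1, (n, n)))
slm = np.exp(2j * np.pi * rng.uniform(0, 1, (n, n)))
measured = np.abs(np.fft.fft2(true_obj * slm)) ** 2
obj_est, slm_est = epie_update(np.ones((n, n), dtype=complex), slm, measured, beta=0.0)
```

Setting `beta=0.0` keeps the modulation fixed, which corresponds to trusting a calibrated curve; a non-zero `beta` lets the algorithm refine the modulation as well. A full reconstruction would loop this update over all SLM patterns for many iterations.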

Ptychographic sensor for large-scale lensless microbial monitoring with high spatiotemporal resolution

Dec 15, 2021

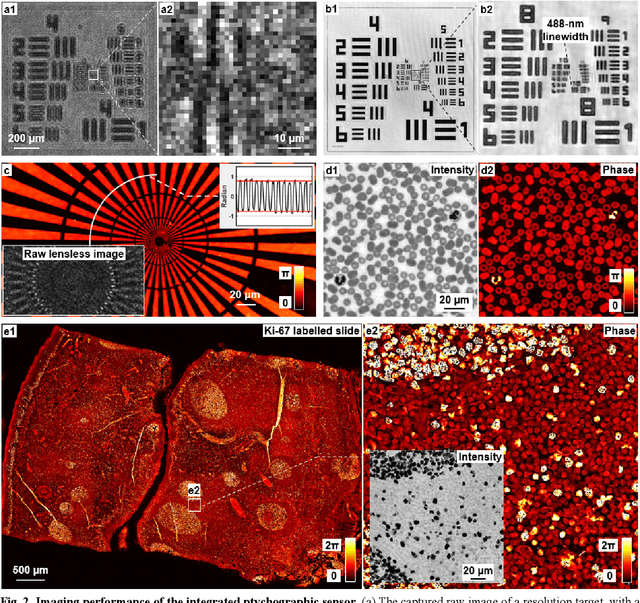

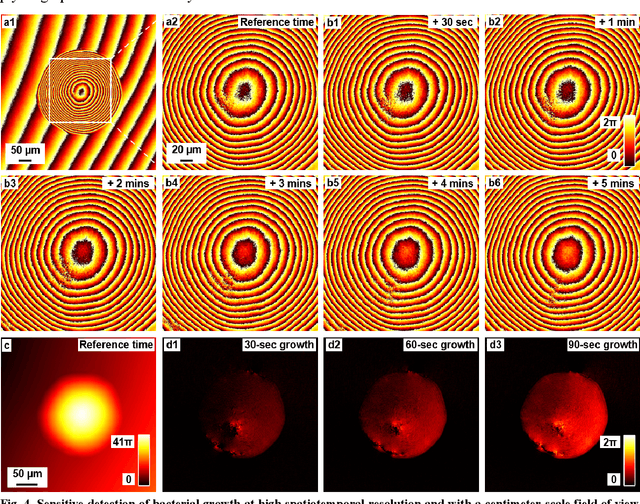

Abstract:Traditional microbial detection methods often rely on the overall properties of microbial cultures and cannot resolve individual growth events at high spatiotemporal resolution. As a result, they require bacteria to grow to confluence before the results can be interpreted. Here, we demonstrate an integrated ptychographic sensor for lensless cytometric analysis of microbial cultures over a large scale and with high spatiotemporal resolution. The reported device can be placed within a regular incubator or used as a standalone incubating unit for long-term microbial monitoring. For longitudinal studies in which massive data are acquired at sequential time points, we report a new temporal-similarity constraint that increases the temporal resolution of ptychographic reconstruction 7-fold. With this strategy, the reported device achieves a centimeter-scale field of view, a half-pitch spatial resolution of 488 nm, and a temporal resolution of 15-second intervals. For the first time, we report the direct observation of bacterial growth in a 15-second interval by tracking the phase wraps of the recovered images, with phase sensitivity comparable to that of interferometric measurements. We also characterize cell growth via longitudinal dry mass measurement and perform rapid bacterial detection at low concentrations. For drug-screening applications, we demonstrate proof-of-concept antibiotic susceptibility testing and perform single-cell analysis of antibiotic-induced filamentation. The combination of high phase sensitivity, high spatiotemporal resolution, and large field of view is unique among existing microscopy techniques. As a quantitative and miniaturized platform, it can improve studies of microorganisms and other biospecimens in resource-limited settings.
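
The dry mass measurement mentioned above is commonly computed from quantitative phase with the relation m = λ/(2πα) · Σφ · ΔA. The sketch below assumes a background-subtracted, unwrapped phase map and a specific refractive increment α of 0.18 μm³/pg, a typical literature value rather than one stated in the abstract; the wavelength and pixel size in the example are hypothetical. It also shows why counting phase wraps is sensitive: each full 2π wrap corresponds to one wavelength of optical path.

```python
import numpy as np


def dry_mass_pg(phase_rad, wavelength_um, pixel_um, alpha_um3_per_pg=0.18):
    """Dry mass in picograms from a background-subtracted, unwrapped phase map.

    m = wavelength / (2*pi*alpha) * sum(phase) * pixel_area
    alpha is the specific refractive increment; 0.18 um^3/pg is an assumed
    typical value, not a number taken from the abstract.
    """
    pixel_area_um2 = pixel_um ** 2
    return wavelength_um / (2 * np.pi * alpha_um3_per_pg) * np.sum(phase_rad) * pixel_area_um2


def optical_path_um(phase_rad, wavelength_um):
    """Optical path difference; one full 2*pi phase wrap equals one wavelength."""
    return phase_rad * wavelength_um / (2 * np.pi)


# Hypothetical example: a 10 x 10-pixel cell with a uniform 1-rad phase delay.
mass = dry_mass_pg(np.ones((10, 10)), wavelength_um=0.5, pixel_um=0.5)  # about 11.05 pg
```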

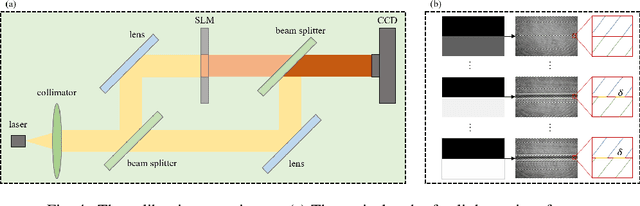

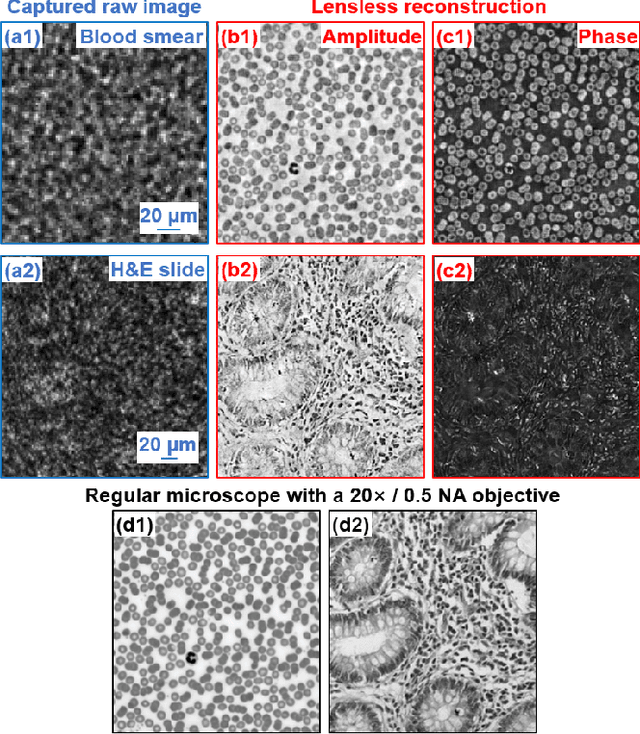

High-throughput lensless whole slide imaging via continuous height-varying modulation of tilted sensor

Sep 28, 2021

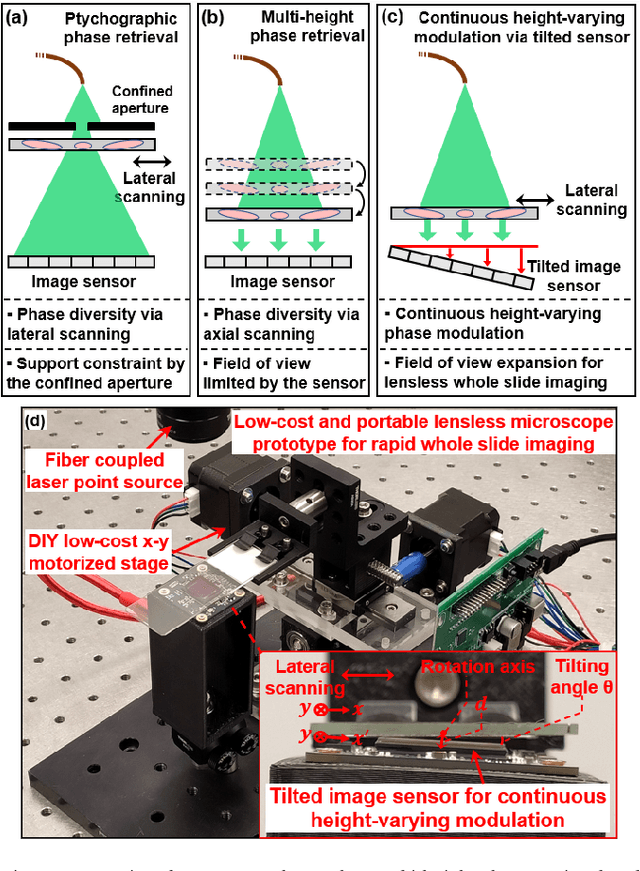

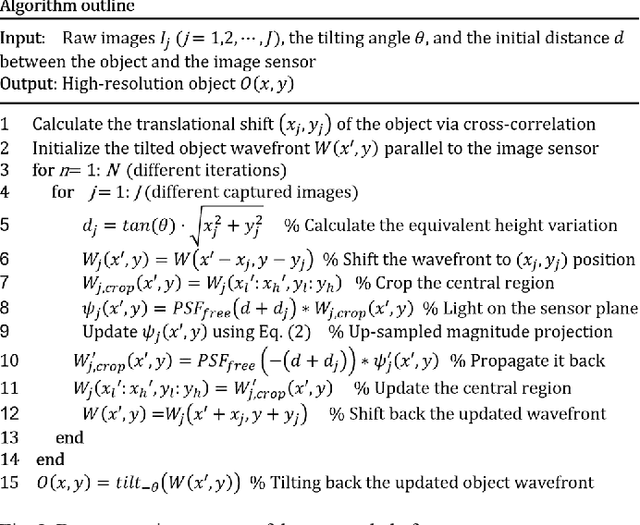

Abstract:We report a new lensless microscopy configuration that integrates the concepts of transverse translational ptychography and defocus multi-height phase retrieval. In this approach, we place a tilted image sensor under the specimen to provide linearly increasing phase modulation along one lateral direction. As in ptychography, we laterally translate the specimen and acquire the diffraction images for reconstruction. Since the axial distance between the specimen and the sensor varies with lateral position, laterally translating the specimen effectively introduces defocus multi-height measurements while eliminating axial scanning. Lateral translation further introduces sub-pixel shifts for pixel super-resolution imaging and naturally expands the field of view for rapid whole slide imaging. We show that the equivalent height variation can be precisely estimated from the lateral shift of the specimen, thereby addressing the challenge of precise axial positioning in conventional multi-height phase retrieval. Using a sensor with a 1.67-μm pixel size, our low-cost, field-portable prototype can resolve a 690-nm linewidth on the resolution target. We show that a whole slide image of a blood smear with a 120-mm² field of view can be acquired in 18 seconds. We also demonstrate accurate automatic white blood cell counting from the recovered image. The reported approach may provide a turnkey solution for point-of-care and telemedicine challenges.
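
The height-from-shift relation described above follows from simple geometry. The sketch below assumes a flat sensor tilted by an angle θ along the translation direction, so that the specimen-to-sensor gap grows linearly as z = z₀ + Δx·tan θ; the tilt angle, starting height, and function names are hypothetical. The 1.67-μm pixel size and the 120 mm² in 18 s figures come from the abstract.

```python
import math

PIXEL_UM = 1.67  # sensor pixel size quoted in the abstract


def equivalent_height_um(z0_um, lateral_shift_um, tilt_deg):
    """Specimen-to-sensor distance after a lateral shift on a tilted sensor.

    Assumes a planar sensor tilted by tilt_deg along the translation axis, so the
    gap grows linearly with lateral position: z = z0 + shift * tan(theta).
    """
    return z0_um + lateral_shift_um * math.tan(math.radians(tilt_deg))


def subpixel_fraction(lateral_shift_um, pixel_um=PIXEL_UM):
    """Fractional-pixel part of a shift, the quantity pixel super-resolution exploits."""
    return (lateral_shift_um / pixel_um) % 1.0


# Throughput implied by the abstract: 120 mm^2 acquired in 18 s.
throughput_mm2_per_s = 120 / 18  # about 6.7 mm^2 per second
```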

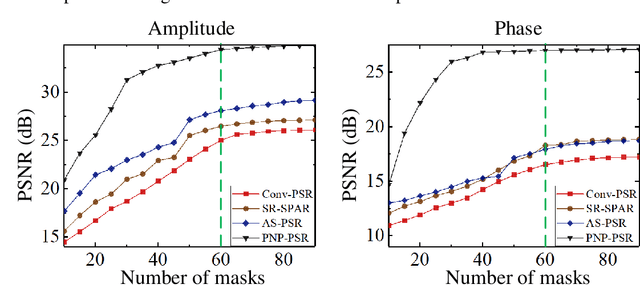

Plug-and-play optimization for pixel super-resolution phase retrieval

May 31, 2021

Abstract:To increase the signal-to-noise ratio of measurements, most imaging detectors sacrifice resolution by enlarging the pixel size within a confined area. Although the pixel super-resolution (PSR) technique enables resolution enhancement in applications such as digital holographic imaging, it suffers from unsatisfactory reconstruction quality. In this work, we report a high-fidelity plug-and-play optimization method for PSR phase retrieval, termed PNP-PSR. It decomposes PSR reconstruction into independent sub-problems based on the generalized alternating projection framework. An alternating projection operator and an enhancing neural network are derived to handle measurement fidelity and statistical prior regularization, respectively. In this way, PNP-PSR combines the advantages of the individual operators, achieving both high efficiency and noise robustness. We compare PNP-PSR with existing PSR phase retrieval algorithms in a series of simulations and experiments, and PNP-PSR outperforms them by as much as 11 dB in PSNR. The enhanced imaging fidelity enables one-order-of-magnitude higher cell counting precision.
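
The plug-and-play structure described above alternates a measurement-fidelity projection with a prior step. The sketch below is a simplified, real-valued intensity version: the forward model is assumed to be k × k pixel averaging, the generalized alternating projection step then reduces to adding back the replicated residual, and a 3 × 3 box filter is a hypothetical stand-in for the enhancing neural network. It omits the phase-retrieval part of PNP-PSR entirely.

```python
import numpy as np


def bin_avg(x, k):
    """Assumed forward model: average each k x k block (large-pixel detector)."""
    h, w = x.shape
    return x.reshape(h // k, k, w // k, k).mean(axis=(1, 3))


def project(v, y, k):
    """GAP projection x = v + A^T (A A^T)^-1 (y - A v).

    For block averaging, A A^T = I / k^2, so this is the residual replicated
    over each k x k block.
    """
    residual = y - bin_avg(v, k)
    return v + np.kron(residual, np.ones((k, k)))


def box_denoise(x):
    """3 x 3 box filter with edge padding (placeholder for the enhancing network)."""
    p = np.pad(x, 1, mode="edge")
    h, w = x.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def pnp_gap(y, k, iters=20):
    """Alternate fidelity projection and denoising, ending measurement-consistent."""
    v = np.kron(y, np.ones((k, k)))  # nearest-neighbour initial guess
    for _ in range(iters):
        x = project(v, y, k)  # measurement-fidelity step
        v = box_denoise(x)    # prior / regularization step
    return project(v, y, k)
```

Ending on a projection guarantees that the returned image reproduces the low-resolution measurements exactly, while the denoiser shapes the sub-pixel detail that the measurements do not constrain.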

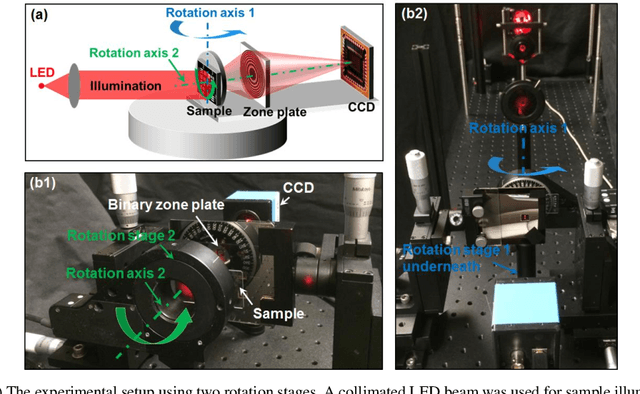

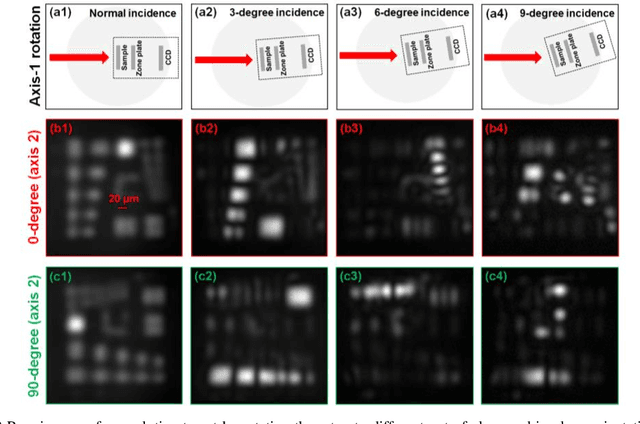

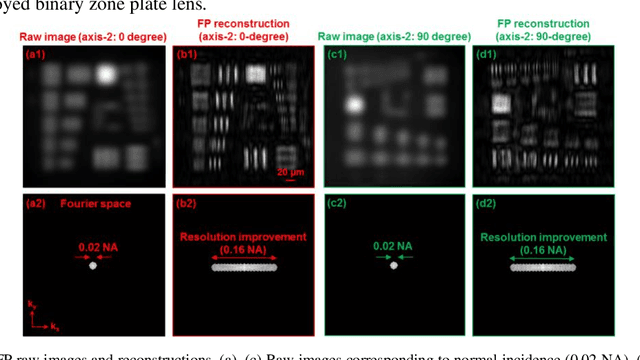

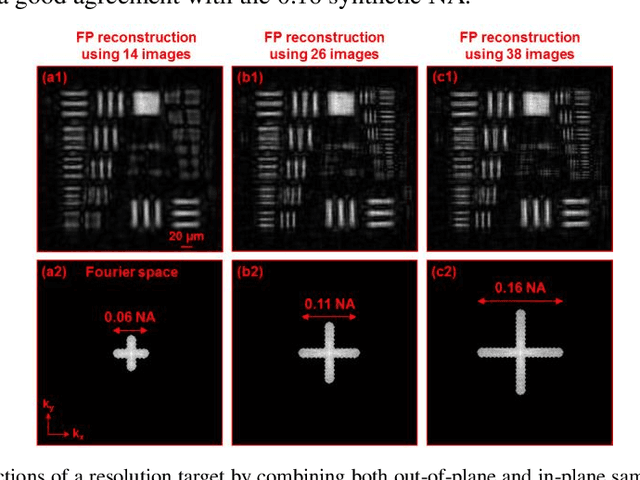

Bypassing the resolution limit of diffractive zone plate optics via rotational Fourier ptychography

Feb 07, 2021

Abstract:Diffractive zone plate optics uses a thin micro-structure pattern to alter the propagation direction of the incoming light wave. It has found important applications in extreme-wavelength imaging, where conventional refractive lenses do not exist. The resolution limit of zone plate optics is determined by the smallest width of the outermost zone. To improve the achievable resolution, significant efforts have been devoted to fabricating very small zone widths with ultrahigh placement accuracy. Here, we report the use of a diffractometer setup for bypassing the resolution limit of zone plate optics. In our prototype, we mounted the sample on two rotation stages and used a low-resolution binary zone plate to relay the sample plane to the detector. We then performed both in-plane and out-of-plane sample rotations and captured the corresponding raw images. The captured images were processed using a Fourier ptychographic procedure for resolution improvement. The final achievable resolution of the reported setup is not determined by the smallest structures of the employed binary zone plate; instead, it is determined by the maximum angle of the out-of-plane rotation. In our experiment, we demonstrated an 8-fold resolution improvement using both a resolution target and a titanium dioxide sample. The reported approach may bypass the fabrication challenge of diffractive elements and open up new avenues for microscopy with extreme wavelengths.
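
Under a paraxial Fourier-ptychography picture, which is an assumption here, tilting a thin sample out of plane by θ shifts its spectrum by roughly sin θ/λ, so the synthetic numerical aperture grows to about NA_zp + sin θ_max, and in-plane rotation sweeps this shift around the pupil. The helpers below encode only that approximation; the zone-plate NA and tilt angle in the example are hypothetical values chosen to give an 8-fold gain, not the experimental parameters.

```python
import math


def synthetic_na(na_zone_plate, max_tilt_deg):
    """Approximate synthetic NA, assuming a tilt theta shifts the spectrum by sin(theta)/lambda."""
    return na_zone_plate + math.sin(math.radians(max_tilt_deg))


def resolution_gain(na_zone_plate, max_tilt_deg):
    """Ratio of synthetic NA to zone-plate NA under the same approximation."""
    return synthetic_na(na_zone_plate, max_tilt_deg) / na_zone_plate


# Hypothetical: a 0.05-NA zone plate needs sin(theta_max) = 0.35 (about 20.5 degrees) for 8x.
tilt_for_8x = math.degrees(math.asin(0.35))
```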

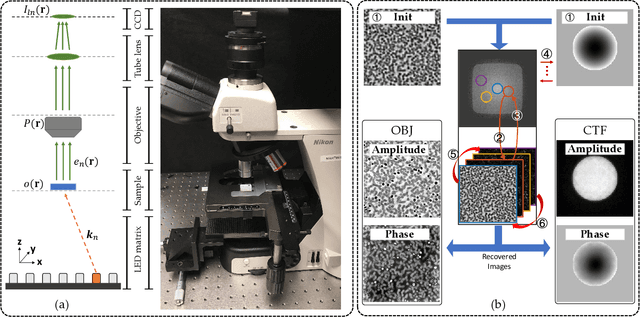

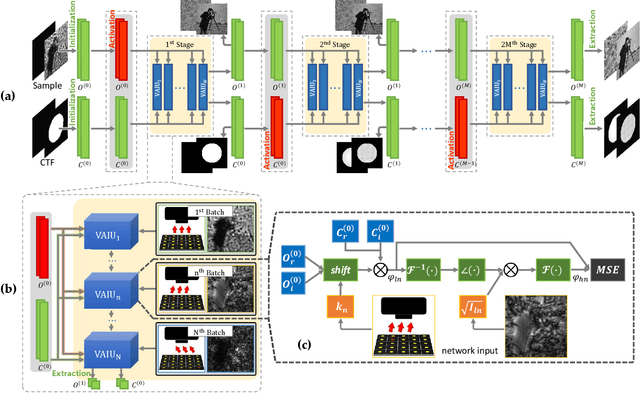

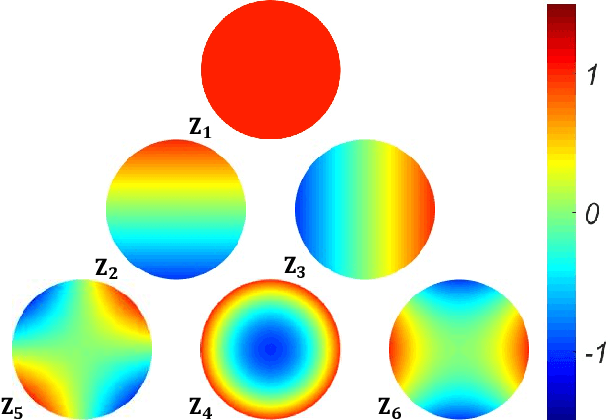

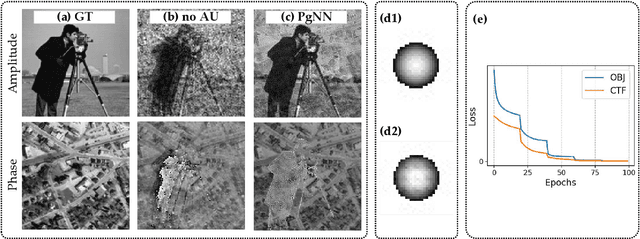

PgNN: Physics-guided Neural Network for Fourier Ptychographic Microscopy

Sep 19, 2019

Abstract:Fourier ptychography (FP) is a recently developed computational imaging approach that achieves both high resolution and a wide field of view by stitching together a series of low-resolution images captured under angle-varied illumination. Many supervised data-driven models have been applied to solve inverse imaging problems. These models need massive amounts of training data and are limited by the characteristics of the dataset. In FP problems, generic datasets are scarce, and the optical aberration varies greatly across acquisition conditions. To address these dilemmas, we model the forward physical imaging process as an interpretable physics-guided neural network (PgNN), in which the reconstructed complex-domain image is treated as the learnable parameters of the network. Because the optimal parameters of the PgNN can be obtained by minimizing the difference between the model-generated images and the real captured angle-varied images of the same scene, PgNN avoids the need for the massive training data required by traditional supervised methods. By applying an alternating update mechanism and total variation regularization, PgNN can flexibly reconstruct images with improved performance. In addition, Zernike modes are incorporated to compensate for optical aberrations and enhance the robustness of FP reconstructions. We show that our method reconstructs smooth images with detailed information on both simulated and experimental datasets. In particular, when validated on an extended high-defocus, high-exposure tissue section dataset, PgNN outperforms traditional FP methods, producing fewer artifacts and more distinguishable structures.
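
The physics-guided forward model described above can be sketched as follows: each low-resolution image is the squared modulus of the inverse FFT of a pupil-filtered sub-region of the object spectrum, and the loss compares predictions with measurements plus a total variation term. Treating the spectrum as the learnable parameter, using the pupil's phase as the place where Zernike aberrations would enter, and ignoring FFT scaling constants are assumptions of this sketch; NumPy provides no gradients, so in practice an automatic-differentiation framework would update the parameters.

```python
import numpy as np


def fp_forward(obj_spectrum, pupil, centers, lr_shape):
    """Low-resolution intensities predicted from a centered high-resolution spectrum.

    pupil: complex array of shape lr_shape; Zernike aberrations would enter as
        a phase factor exp(1j * sum(c_j * Z_j)) (hypothetical parameterization).
    centers: (row, col) spectrum centers, one per illumination angle.
    """
    h, w = lr_shape
    images = []
    for r, c in centers:
        patch = obj_spectrum[r - h // 2:r - h // 2 + h, c - w // 2:c - w // 2 + w]
        field = np.fft.ifft2(np.fft.ifftshift(patch * pupil))
        images.append(np.abs(field) ** 2)
    return np.stack(images)


def tv(x):
    """Anisotropic total variation of a (complex) image."""
    return np.abs(np.diff(x, axis=0)).sum() + np.abs(np.diff(x, axis=1)).sum()


def pgnn_loss(obj_spectrum, pupil, centers, measured, tv_weight=1e-3):
    """Data mismatch plus TV regularization on the reconstructed object."""
    pred = fp_forward(obj_spectrum, pupil, centers, measured.shape[1:])
    obj = np.fft.ifft2(np.fft.ifftshift(obj_spectrum))
    return np.sum((pred - measured) ** 2) + tv_weight * tv(obj)
```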

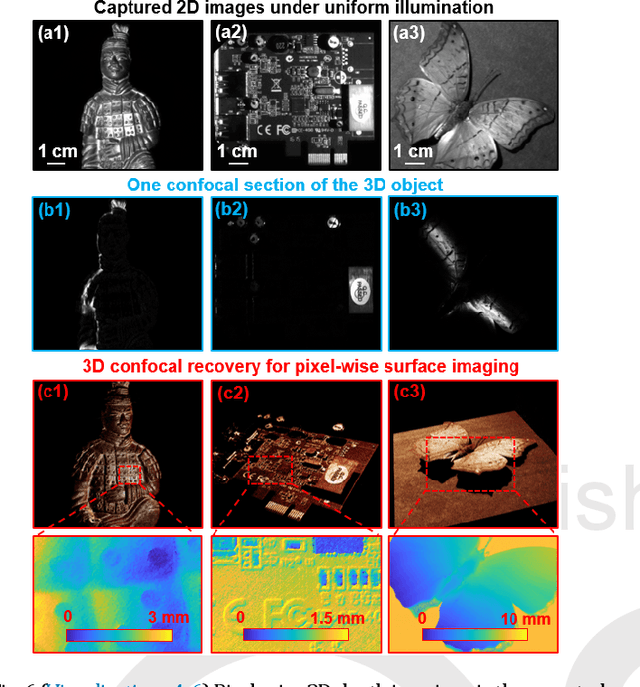

Axially-shifted pattern illumination for macroscale turbidity suppression and virtual volumetric confocal imaging without axial scanning

Dec 14, 2018

Abstract:Structured illumination has been widely used for optical sectioning and 3D surface recovery. In a typical implementation, multiple images under non-uniform pattern illumination are used to recover a single object section. Axial scanning of the sample or the objective lens is needed for acquiring the 3D volumetric data. Here we demonstrate the use of axially-shifted pattern illumination (asPI) for virtual volumetric confocal imaging without axial scanning. In the reported approach, we project illumination patterns at a tilted angle with respect to the detection optics. As such, the illumination patterns shift laterally at different z-sections, and the sample information at different z-sections can be recovered from the captured 2D images. We demonstrate the reported approach for virtual confocal imaging through a diffusing layer and underwater 3D imaging through diluted milk. We show that we can acquire the entire confocal volume in ~1 s with a throughput of 420 megapixels per second. Our approach may provide new insights for developing confocal light ranging and detection systems in degraded visual environments.
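
One simple way to see how a tilted projection encodes depth is a correlation-based demodulation: at depth z the pattern appears shifted by about z·tan θ, so correlating the captured frames with the pattern shifted by that amount recovers the in-focus layer, while mismatched shifts average toward zero. This 1D sketch assumes random binary patterns and a single reflecting layer; the recovery rule and function names are illustrative assumptions, not necessarily the asPI reconstruction procedure.

```python
import numpy as np


def pattern_shift_px(z_um, tilt_deg, pixel_um):
    """Lateral pattern shift (in pixels) at depth z for a projection tilted by tilt_deg."""
    return int(round(z_um * np.tan(np.radians(tilt_deg)) / pixel_um))


def section_at_depth(images, patterns, shift_px):
    """Per-pixel covariance between captured frames and the pattern shifted to one depth.

    images, patterns: arrays of shape (K, N), one row per captured frame.
    A large value means the pixel's signal follows the pattern at this depth.
    """
    shifted = np.roll(patterns, shift_px, axis=1)
    d_img = images - images.mean(axis=0)
    d_pat = shifted - shifted.mean(axis=0)
    return (d_img * d_pat).mean(axis=0)


# Hypothetical example: one layer at a depth where the pattern is shifted by 5 pixels.
rng = np.random.default_rng(4)
patterns = rng.integers(0, 2, size=(400, 64)).astype(float)
reflectance = rng.uniform(0.5, 1.0, size=64)
true_shift = pattern_shift_px(z_um=10.0, tilt_deg=45.0, pixel_um=2.0)  # 5 pixels
images = reflectance * np.roll(patterns, true_shift, axis=1)
in_focus = section_at_depth(images, patterns, true_shift)
out_of_focus = section_at_depth(images, patterns, true_shift + 7)
```

Scanning `shift_px` over the range set by the tilt angle yields one virtual section per depth from the same set of 2D frames, which is the sense in which no axial scanning is needed.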
