Xuyang Chang

Towards Large-scale Single-shot Millimeter-wave Imaging for Low-cost Security Inspection

May 25, 2023

Abstract: Millimeter-wave (MMW) imaging is emerging as a promising technique for safe security inspection. It balances imaging resolution, penetrability, and human safety, offering higher resolution than low-frequency microwave, stronger penetrability than visible light, and greater safety than X-ray. Despite advances in recent decades, the high cost of the requisite large-scale antenna array hinders widespread adoption of MMW imaging in practice. To tackle this challenge, we report a large-scale single-shot MMW imaging framework using a sparse antenna array, achieving low-cost but high-fidelity security inspection under an interpretable learning scheme. We first collected extensive fully sampled MMW echoes to study the statistical ranking of each element in the large-scale array. These elements are then sampled based on the ranking, building the experimentally optimal sparse sampling strategy that reduces the cost of the antenna array by up to one order of magnitude. Additionally, we derived an untrained interpretable learning scheme, which realizes robust and accurate image reconstruction from sparsely sampled echoes. Last, we developed a neural network for automatic object detection, and experimentally demonstrated successful detection of concealed centimeter-sized targets using a 10% sparse array, whereas all the other contemporary approaches failed at the same sampling ratio. The reported technique outperforms existing MMW imaging schemes by more than 50% on various metrics including precision, recall, and mAP50. With such strong detection ability and order-of-magnitude cost reduction, we anticipate that this technique provides a practical route to large-scale single-shot MMW imaging and could promote its further practical application.
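The ranking-based sampling step can be illustrated with a minimal sketch. The abstract does not state how elements are ranked, so the score used here (mean echo magnitude per element) is an assumption chosen purely for illustration, as are the function name `sparse_mask_from_ranking` and the synthetic echo data.

```python
import numpy as np

def sparse_mask_from_ranking(echoes, ratio):
    """Keep the top `ratio` fraction of array elements by a ranking score.

    echoes: complex array of shape (num_scenes, rows, cols) holding
            fully sampled echoes (synthetic here).
    Returns a boolean mask of shape (rows, cols).
    """
    # Assumed ranking score: mean echo magnitude of each element over all scenes.
    score = np.abs(echoes).mean(axis=0)
    k = max(1, int(round(ratio * score.size)))
    # Flat indices of the k highest-scoring elements.
    top = np.argsort(score, axis=None)[-k:]
    mask = np.zeros(score.size, dtype=bool)
    mask[top] = True
    return mask.reshape(score.shape)

# Synthetic stand-in for measured echoes: 50 scenes on a 20 x 20 array.
rng = np.random.default_rng(0)
echoes = rng.standard_normal((50, 20, 20)) + 1j * rng.standard_normal((50, 20, 20))
mask = sparse_mask_from_ranking(echoes, 0.10)
```

With a 10% ratio, the mask selects 40 of the 400 elements; a sparse array would then populate only those positions.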

Large-scale single-photon imaging

Dec 28, 2022

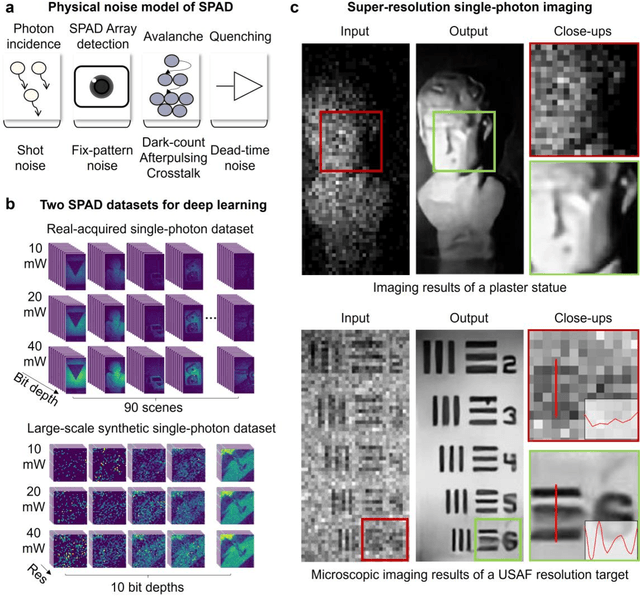

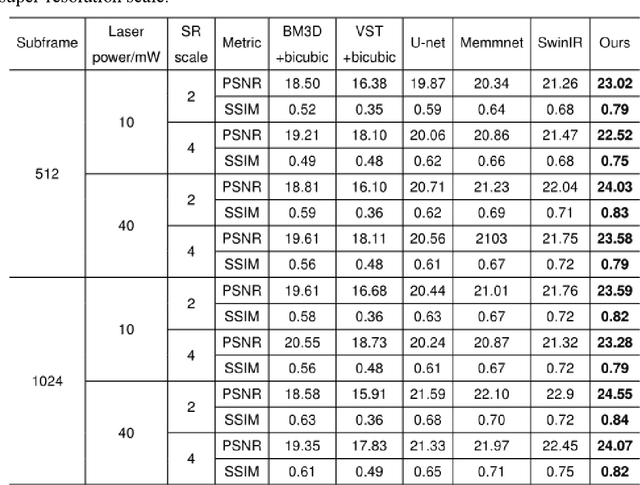

Abstract: Benefiting from its single-photon sensitivity, the single-photon avalanche diode (SPAD) array has been widely applied in fields such as fluorescence lifetime imaging and quantum computing. However, large-scale high-fidelity single-photon imaging remains a major challenge, owing to the complex hardware manufacturing process and heavy noise of SPAD arrays. In this work, we introduce deep learning into SPAD imaging, enabling super-resolution single-photon imaging by more than an order of magnitude, with significant enhancement of bit depth and imaging quality. We first studied the complex photon flow model of SPAD electronics to accurately characterize multiple physical noise sources, and collected a real SPAD image dataset (64 $\times$ 32 pixels, 90 scenes, 10 different bit depths, 3 different illumination fluxes, 2790 images in total) to calibrate the noise model parameters. With this real-world physical noise model, we synthesized, for the first time, a large-scale realistic single-photon image dataset (image pairs of 5 different resolutions with maximum megapixels, 17250 scenes, 10 different bit depths, 3 different illumination fluxes, 2.6 million images in total) for subsequent network training. To tackle the severe super-resolution challenge of SPAD inputs with low bit depth, low resolution, and heavy noise, we further built a deep transformer network with a content-adaptive self-attention mechanism and gated fusion modules, which exploits global contextual features to remove multi-source noise and extract full-frequency details. We applied the technique in a series of experiments including macroscopic and microscopic imaging, microfluidic inspection, and Fourier ptychography. The experiments validate the technique's state-of-the-art super-resolution SPAD imaging performance, with a PSNR improvement of more than 5 dB over existing methods.

Plug-and-play optimization for pixel super-resolution phase retrieval

May 31, 2021

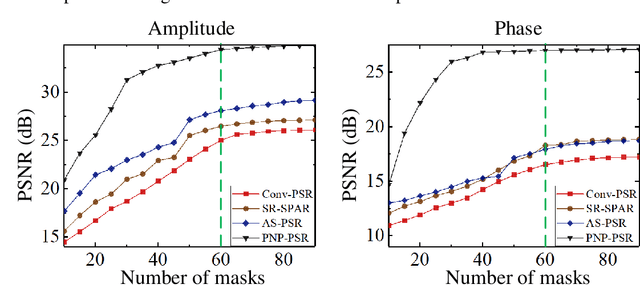

Abstract: To increase the signal-to-noise ratio of measurements, most imaging detectors sacrifice resolution by increasing pixel size within a confined area. Although the pixel super-resolution (PSR) technique enables resolution enhancement in applications such as digital holographic imaging, it suffers from unsatisfactory reconstruction quality. In this work, we report a high-fidelity plug-and-play optimization method for PSR phase retrieval, termed PNP-PSR. It decomposes PSR reconstruction into independent sub-problems based on the generalized alternating projection framework. An alternating projection operator and an enhancing neural network are derived to handle measurement fidelity and statistical prior regularization, respectively. In this way, PNP-PSR combines the advantages of the individual operators, achieving both high efficiency and noise robustness. We compare PNP-PSR with existing PSR phase retrieval algorithms in a series of simulations and experiments, and PNP-PSR outperforms the existing algorithms by as much as 11 dB in PSNR. The enhanced imaging fidelity enables one-order-of-magnitude higher cell counting precision.

Large-scale phase retrieval

Apr 06, 2021

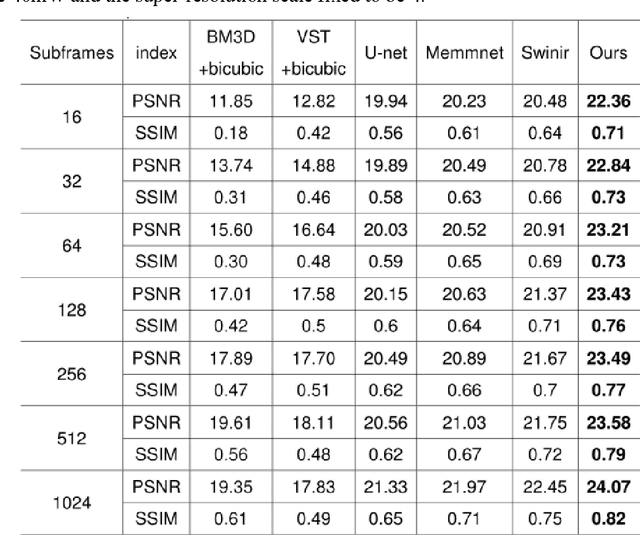

Abstract: High-throughput computational imaging requires efficient processing algorithms to retrieve multi-dimensional and multi-scale information. In computational phase imaging, phase retrieval (PR) is required to reconstruct both amplitude and phase in complex space from intensity-only measurements. Existing PR algorithms suffer from a tradeoff among low computational complexity, robustness to measurement noise, and strong generalization across modalities. In this work, we report an efficient large-scale phase retrieval technique termed LPR. It extends the plug-and-play generalized-alternating-projection framework from real space to nonlinear complex space. An alternating projection solver and an enhancing neural network are derived to handle measurement formation and statistical prior regularization, respectively. This framework compensates for the shortcomings of each operator, realizing high-fidelity phase retrieval with low computational complexity and strong generalization. We applied the technique to a series of computational phase imaging modalities including coherent diffraction imaging, coded diffraction pattern imaging, and Fourier ptychographic microscopy. Extensive simulations and experiments validate that the technique outperforms existing PR algorithms by as much as 17 dB in signal-to-noise ratio, with more than one order of magnitude higher running efficiency. In addition, we demonstrate, for the first time, ultra-large-scale phase retrieval at the 8K level (7680$\times$4320 pixels) in minute-level time.
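The alternation between a measurement projection and a prior step can be sketched as follows. This is not the LPR algorithm itself: it is the classical error-reduction scheme for Fourier-magnitude measurements, and the enhancing neural network is replaced by a simple nonnegative-real projection as a stand-in. All function names and the synthetic test image are assumptions for illustration.

```python
import numpy as np

def project_magnitude(x, b):
    """Measurement step: impose measured Fourier magnitudes b, keep current phase."""
    X = np.fft.fft2(x, norm="ortho")
    return np.fft.ifft2(b * np.exp(1j * np.angle(X)), norm="ortho")

def prior_step(x):
    """Stand-in for the enhancing network: project onto nonnegative real images."""
    return np.maximum(x.real, 0.0)

def residual(x, b):
    """Mismatch between the estimate's Fourier magnitudes and the measurement."""
    return np.linalg.norm(np.abs(np.fft.fft2(x, norm="ortho")) - b)

def alternating_projection(b, iters=50, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.random(b.shape)  # nonnegative real starting guess
    errors = [residual(x, b)]
    for _ in range(iters):
        # Alternate the measurement projection and the prior step.
        x = prior_step(project_magnitude(x, b))
        errors.append(residual(x, b))
    return x, errors

# Synthetic intensity-only measurement of a random nonnegative image.
rng = np.random.default_rng(1)
truth = rng.random((16, 16))
b = np.abs(np.fft.fft2(truth, norm="ortho"))
estimate, errors = alternating_projection(b)
```

Because both steps are nearest-point projections and the starting guess satisfies the prior constraint, the measurement residual in `errors` does not increase from one iteration to the next. A plug-and-play scheme in the spirit of the abstract would swap `prior_step` for a learned denoiser, at which point that guarantee no longer holds in general.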
