Dezhi Zheng

Intelligent Multimodal Multi-Sensor Fusion-Based UAV Identification, Localization, and Countermeasures for Safeguarding Low-Altitude Economy

Oct 27, 2025

Abstract:The development of the low-altitude economy has led to a growing prominence of uncrewed aerial vehicle (UAV) safety management issues. Therefore, accurate identification, real-time localization, and effective countermeasures have become core challenges in airspace security assurance. This paper introduces an integrated UAV management and control system based on deep learning, which integrates multimodal multi-sensor fusion perception, precise positioning, and collaborative countermeasures. By incorporating deep learning methods, the system combines radio frequency (RF) spectral feature analysis, radar detection, electro-optical identification, and other methods at the detection level to achieve the identification and classification of UAVs. At the localization level, the system relies on multi-sensor data fusion and the air-space-ground integrated communication network to conduct real-time tracking and prediction of UAV flight status, providing support for early warning and decision-making. At the countermeasure level, it adopts comprehensive measures that integrate "soft kill" and "hard kill", including technologies such as electromagnetic signal jamming, navigation spoofing, and physical interception, to form a closed-loop management and control process from early warning to final disposal, which significantly enhances the response efficiency and disposal accuracy of low-altitude UAV management.

LabelGS: Label-Aware 3D Gaussian Splatting for 3D Scene Segmentation

Aug 27, 2025

Abstract:3D Gaussian Splatting (3DGS) has emerged as a novel explicit representation for 3D scenes, offering both high-fidelity reconstruction and efficient rendering. However, 3DGS lacks 3D segmentation ability, which limits its applicability in tasks that require scene understanding. Identifying and isolating specific object components is crucial. To address this limitation, we propose Label-aware 3D Gaussian Splatting (LabelGS), a method that augments the Gaussian representation with object labels. LabelGS introduces cross-view consistent semantic masks for 3D Gaussians and employs a novel Occlusion Analysis Model to avoid overfitting occlusion during optimization, a Main Gaussian Labeling model to lift 2D semantic priors to 3D Gaussians, and a Gaussian Projection Filter to avoid Gaussian label conflicts. Our approach achieves effective decoupling of Gaussian representations and refines the 3DGS optimization process through a random region sampling strategy, significantly improving efficiency. Extensive experiments demonstrate that LabelGS outperforms previous state-of-the-art methods, including Feature-3DGS, in the 3D scene segmentation task. Notably, LabelGS achieves a remarkable 22X speedup in training compared to Feature-3DGS, at a resolution of 1440X1080. Our code will be available at https://github.com/garrisonz/LabelGS.

Spatial Frequency Modulation for Semantic Segmentation

Jul 16, 2025

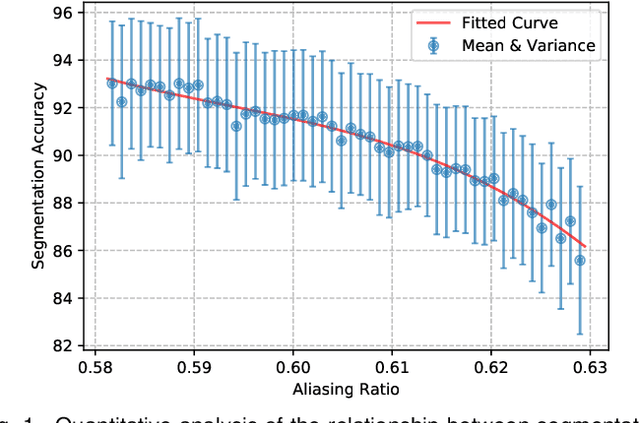

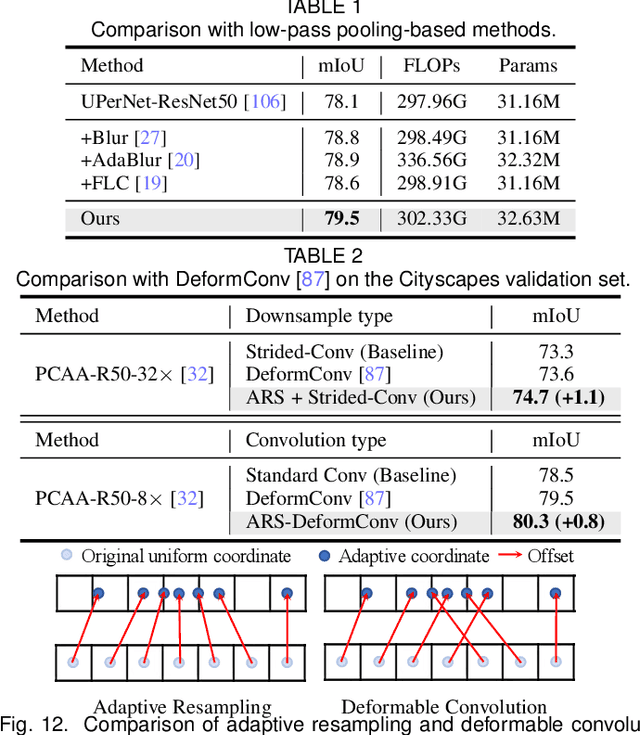

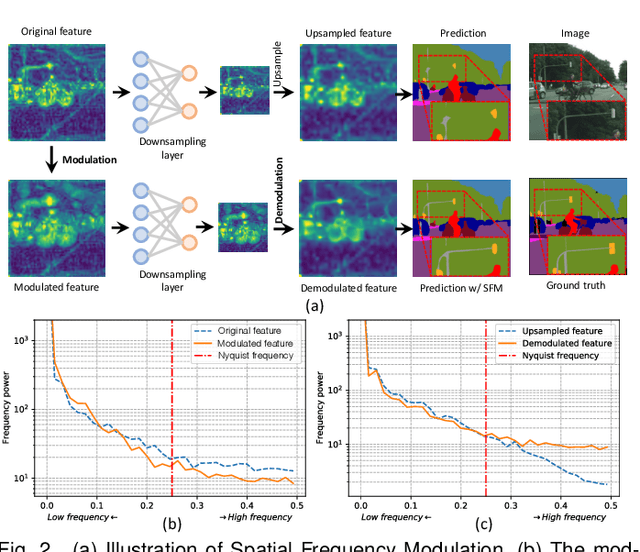

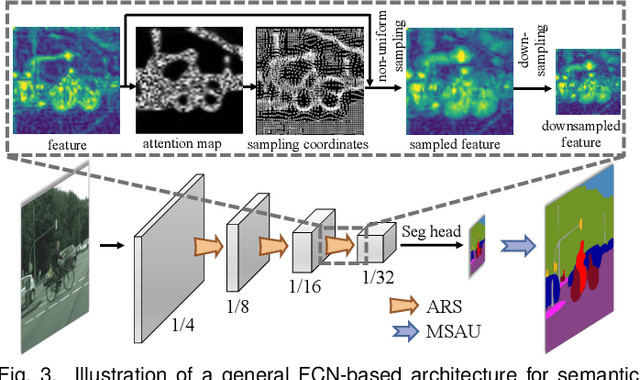

Abstract:High spatial frequency information, including fine details like textures, significantly contributes to the accuracy of semantic segmentation. However, according to the Nyquist-Shannon Sampling Theorem, high-frequency components are vulnerable to aliasing or distortion when propagating through downsampling layers such as strided-convolution. Here, we propose a novel Spatial Frequency Modulation (SFM) that modulates high-frequency features to a lower frequency before downsampling and then demodulates them back during upsampling. Specifically, we implement modulation through adaptive resampling (ARS) and design a lightweight add-on that can densely sample the high-frequency areas to scale up the signal, thereby lowering its frequency in accordance with the Frequency Scaling Property. We also propose Multi-Scale Adaptive Upsampling (MSAU) to demodulate the modulated feature and recover high-frequency information through non-uniform upsampling. This module further improves segmentation by explicitly exploiting information interaction between densely and sparsely resampled areas at multiple scales. Both modules can seamlessly integrate with various architectures, extending from convolutional neural networks to transformers. Feature visualization and analysis confirm that our method effectively alleviates aliasing while successfully retaining details after demodulation. Finally, we validate the broad applicability and effectiveness of SFM by extending it to image classification, adversarial robustness, instance segmentation, and panoptic segmentation tasks. The code is available at https://github.com/Linwei-Chen/SFM.
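The aliasing argument in this abstract can be illustrated with a small 1D sketch. It is not the authors' ARS or MSAU module: it assumes a single cosine, a uniform 2x stretch in place of adaptive resampling, and a plain stride-2 subsampling in place of a strided convolution. The helper names `dominant_frequency` and `sample_cosine` are hypothetical.

```python
import cmath
import math


def dominant_frequency(signal):
    """Return the DFT bin (cycles per signal length) with the largest magnitude."""
    n = len(signal)
    best_k, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        if abs(coeff) > best_mag + 1e-9:
            best_k, best_mag = k, abs(coeff)
    return best_k


def sample_cosine(cycles, num_samples):
    """Sample a cosine that completes `cycles` periods over `num_samples` points."""
    return [math.cos(2 * math.pi * cycles * i / num_samples)
            for i in range(num_samples)]


CYCLES = 24  # assumed test frequency, above the post-stride Nyquist limit

# 64 samples: Nyquist limit is 32 cycles, so 24 cycles is representable.
original = sample_cosine(CYCLES, 64)
original_peak = dominant_frequency(original)

# Stride-2 subsampling leaves 32 samples (Nyquist limit 16), so the
# 24-cycle component folds back to a lower, wrong frequency.
naive = original[::2]
naive_peak = dominant_frequency(naive)

# "Modulation" stand-in: sample the same content twice as densely, which
# halves its per-sample frequency (frequency scaling), then apply stride 2.
stretched = sample_cosine(CYCLES, 128)
modulated = stretched[::2]
modulated_peak = dominant_frequency(modulated)

print(original_peak, naive_peak, modulated_peak)
```

In this toy setting the naively subsampled signal reports a different dominant frequency than the original, while the signal that was stretched before subsampling keeps it. The paper's method applies the dense sampling only where high frequencies occur, which this uniform sketch does not attempt.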

Chirp Delay-Doppler Domain Modulation: A New Paradigm of Integrated Sensing and Communication for Autonomous Vehicles

May 22, 2025

Abstract:Autonomous driving is reshaping the way humans travel, with millimeter wave (mmWave) radar playing a crucial role in this transformation to enable vehicle-to-everything (V2X). Although chirp is widely used in mmWave radar systems for its strong sensing capabilities, the lack of integrated communication functions in existing systems may limit further advancement of autonomous driving. In light of this, we first design "dedicated chirps" tailored for sensing chirp signals in the environment, facilitating the identification of idle time-frequency resources. Based on these dedicated chirps, we propose a chirp-division multiple access (Chirp-DMA) scheme, enabling multiple pairs of mmWave radar transceivers to perform integrated sensing and communication (ISAC) without interference. Subsequently, we propose two chirp-based delay-Doppler domain modulation schemes that enable each pair of mmWave radar transceivers to simultaneously sense and communicate within their respective time-frequency resource blocks. The modulation schemes are based on different multiple-input multiple-output (MIMO) radar schemes: the time division multiplexing (TDM)-based scheme offers higher communication rates, while the Doppler division multiplexing (DDM)-based scheme is suitable for working in a lower signal-to-noise ratio range. We then validate the effectiveness of the proposed DDM-based scheme through simulations. Finally, we present some challenges and issues that need to be addressed to advance ISAC in V2X for better autonomous driving. Simulation codes are provided to reproduce the results in this paper: https://github.com/LiZhuoRan0/2025-IEEE-Network-ChirpDelayDopplerModulationISAC.

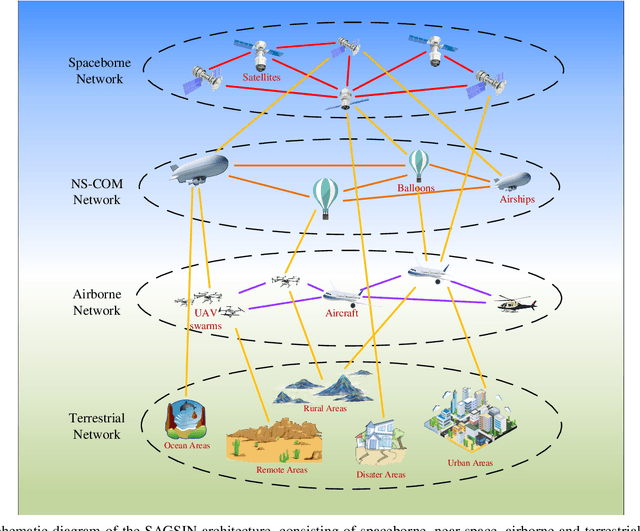

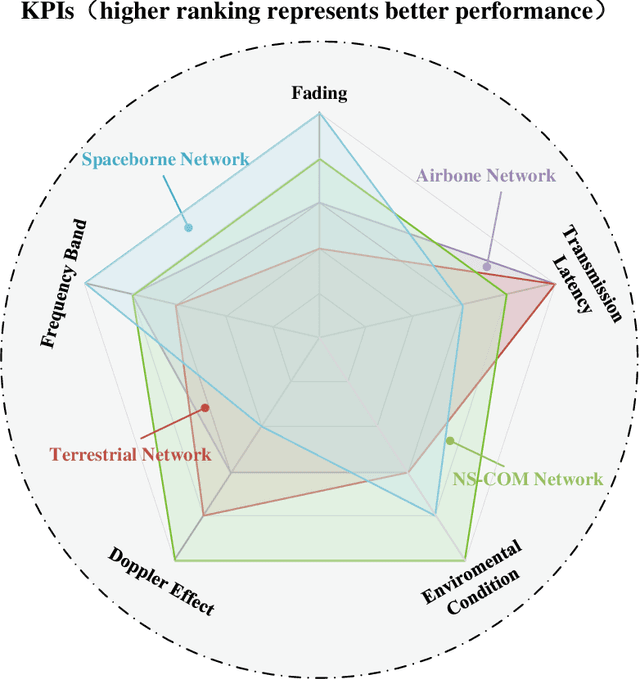

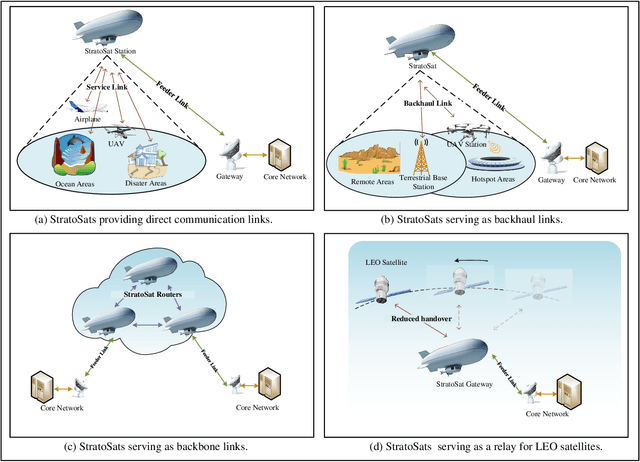

Toward Near-Space Communication Network in the 6G and Beyond Era

May 18, 2025

Abstract:Near-space communication network (NS-ComNet), as an indispensable component of sixth-generation (6G) and beyond mobile communication systems and the space-air-ground-sea integrated network (SAGSIN), demonstrates unique advantages in wide-area coverage, long-endurance high-altitude operation, and highly flexible deployment. This paper presents a comprehensive review of NS-ComNet for the 6G and beyond era. Specifically, by contrasting satellite, low-altitude unmanned-aerial-vehicle (UAV), and terrestrial communications, we first elucidate the background and motivation for integrating NS-ComNet into 6G network architectures. Subsequently, we review the developmental status of near-space platforms, including high-altitude balloons, solar-powered UAVs, and stratospheric airships, and analyze critical challenges faced by NS-ComNet. To address these challenges, the research focuses on key enabling technologies such as topology design, resource and handover management, multi-objective joint optimization, etc., with particular emphasis on artificial intelligence techniques for NS-ComNet. Finally, envisioning future intelligent collaborative networks that integrate NS-ComNet with satellite-UAV-terrestrial systems, we explore promising directions. This paper aims to provide technical insights and research foundations for the systematic construction of NS-ComNet and its deep deployment in the 6G and beyond era.

DEGSTalk: Decomposed Per-Embedding Gaussian Fields for Hair-Preserving Talking Face Synthesis

Dec 28, 2024

Abstract:Accurately synthesizing talking face videos and capturing fine facial features for individuals with long hair presents a significant challenge. To tackle this challenge in existing methods, we propose decomposed per-embedding Gaussian fields (DEGSTalk), a 3D Gaussian Splatting (3DGS)-based talking face synthesis method for generating realistic talking faces with long hair. Our DEGSTalk employs Deformable Pre-Embedding Gaussian Fields, which dynamically adjust pre-embedding Gaussian primitives using implicit expression coefficients. This enables precise capture of dynamic facial regions and subtle expressions. Additionally, we propose a Dynamic Hair-Preserving Portrait Rendering technique to enhance the realism of long hair motions in the synthesized videos. Results show that DEGSTalk achieves improved realism and synthesis quality compared to existing approaches, particularly in handling complex facial dynamics and hair preservation. Our code will be publicly available at https://github.com/CVI-SZU/DEGSTalk.

Reinforcement-Learning-Enabled Beam Alignment for Water-Air Direct Optical Wireless Communications

Sep 05, 2024

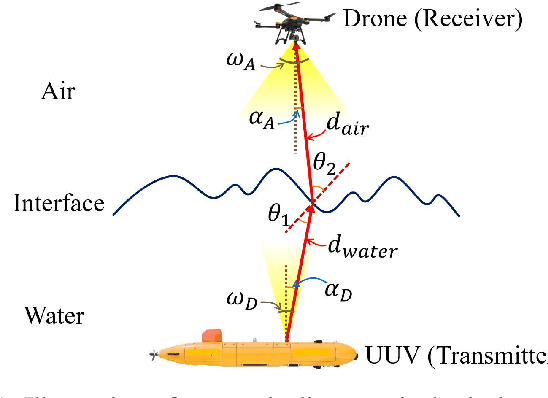

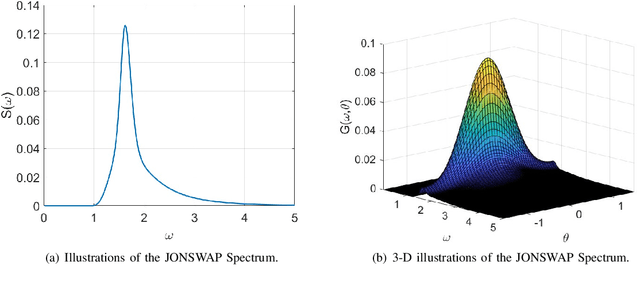

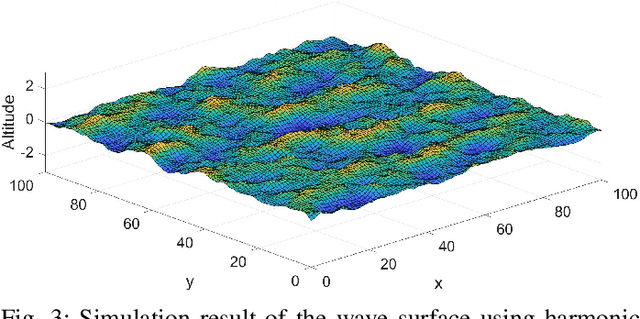

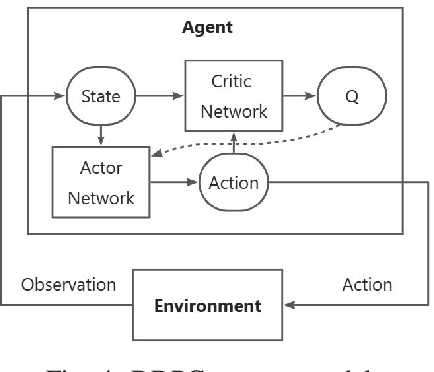

Abstract:The escalating interest in underwater exploration/reconnaissance applications has motivated high-rate data transmission from underwater to airborne relaying platforms, especially under high-sea scenarios. Thanks to its broad bandwidth and superior confidentiality, optical wireless communication has become a promising candidate for water-air transmission. However, the optical signals inevitably suffer from deviations when crossing the highly dynamic water-air interfaces in the absence of relaying ships/buoys. To address the issue, this article proposes a novel beam alignment strategy based on deep reinforcement learning (DRL) for water-air direct optical wireless communications. Specifically, the dynamic water-air interface is mathematically modeled using sea-wave spectrum analysis, followed by characterization of the propagation channel with ray-tracing techniques. Then the deep deterministic policy gradient (DDPG) scheme is introduced for DRL-based transceiving beam alignment. A logarithm-exponential (LE) nonlinear reward function with respect to the received signal strength is designed for high-resolution rewarding between different actions. Simulation results validate the superiority of the proposed DRL-based beam alignment scheme.

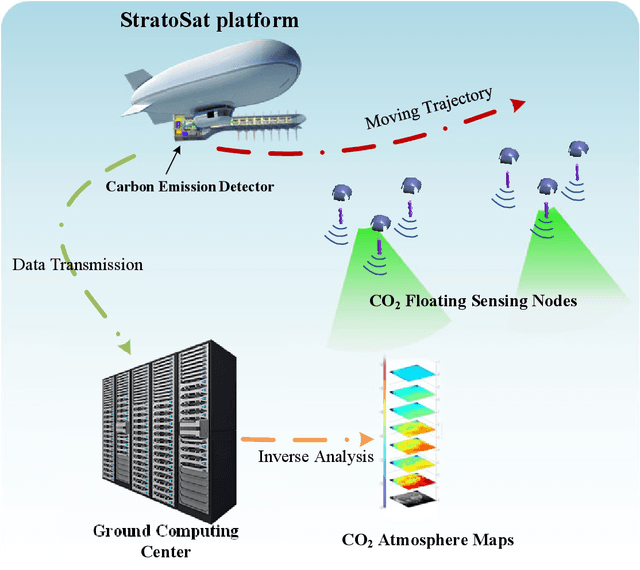

Near-Space Communications: The Last Piece of 6G Space-Air-Ground-Sea Integrated Network Puzzle

Dec 30, 2023

Abstract:This article presents a comprehensive study on the emerging near-space communications (NS-COM) within the context of space-air-ground-sea integrated network (SAGSIN). Specifically, we first explore the recent technical developments of NS-COM, followed by a discussion of the motivations behind integrating NS-COM into SAGSIN. To further demonstrate the necessity of NS-COM, a comparative analysis between the NS-COM network and other counterparts in SAGSIN is conducted, covering aspects of deployment, coverage and channel characteristics. Afterwards, the technical aspects of NS-COM, including channel modeling, random access, channel estimation, array-based beam management and joint network optimization, are examined in detail. Furthermore, we explore the potential applications of NS-COM, such as structural expansion in SAGSIN communications, remote and urgent communications, weather monitoring and carbon neutrality. Finally, some promising research avenues are identified, including near-space-ground direct links, reconfigurable multiple input multiple output (MIMO) array, federated learning assisted NS-COM, maritime communication and free space optical (FSO) communication. Overall, this paper highlights that NS-COM plays an indispensable role in the SAGSIN puzzle, providing substantial performance and coverage enhancement to the traditional SAGSIN architecture.

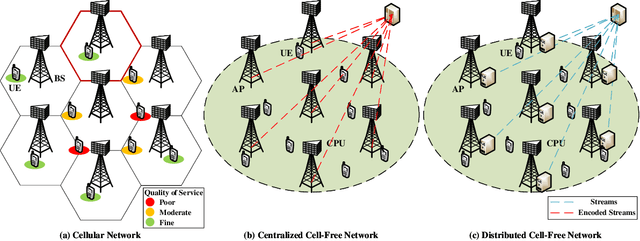

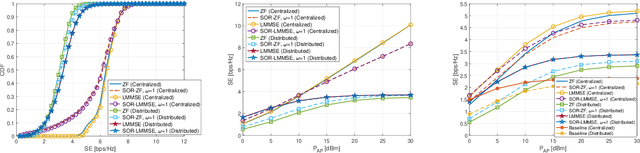

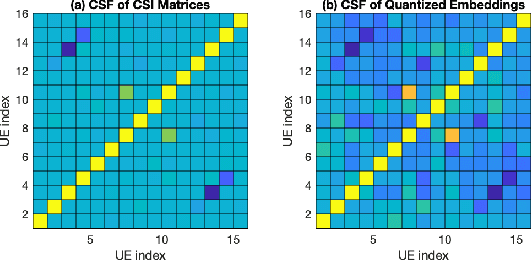

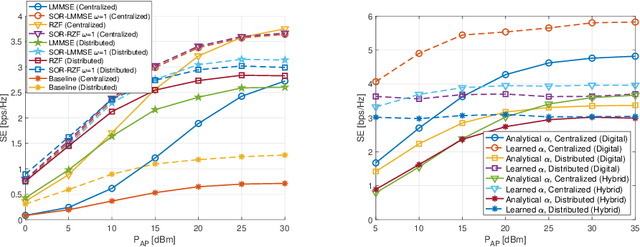

Hybrid Knowledge-Data Driven Channel Semantic Acquisition and Beamforming for Cell-Free Massive MIMO

Jul 21, 2023

Abstract:This paper focuses on advancing outdoor wireless systems to better support ubiquitous extended reality (XR) applications, and close the gap with current indoor wireless transmission capabilities. We propose a hybrid knowledge-data driven method for channel semantic acquisition and multi-user beamforming in cell-free massive multiple-input multiple-output (MIMO) systems. Specifically, we first propose a data-driven multi-layer perceptron (MLP)-Mixer-based auto-encoder for channel semantic acquisition, where the pilot signals, CSI quantizer for channel semantic embedding, and CSI reconstruction for channel semantic extraction are jointly optimized in an end-to-end manner. Moreover, based on the acquired channel semantic, we further propose a knowledge-driven deep-unfolding multi-user beamformer, which is capable of achieving good spectral efficiency with robustness to imperfect CSI in outdoor XR scenarios. By unfolding the conventional successive over-relaxation (SOR)-based linear beamforming scheme with deep learning, the proposed beamforming scheme is capable of adaptively learning the optimal parameters to accelerate convergence and improve the robustness to imperfect CSI. The proposed deep unfolding beamforming scheme can be used for access points (APs) with fully-digital array and APs with hybrid analog-digital array. Simulation results demonstrate the effectiveness of our proposed scheme in improving the accuracy of channel acquisition, as well as reducing complexity in both CSI acquisition and beamformer design. The proposed beamforming method achieves approximately 96% of the converged spectrum efficiency performance after only three iterations in downlink transmission, demonstrating its efficacy and potential to improve outdoor XR applications.
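For readers unfamiliar with successive over-relaxation, the iteration that the abstract describes unfolding can be sketched as below. This is only the textbook SOR update on a small real-valued system, written so that each pass takes its own relaxation factor, loosely mirroring one unfolded layer. The matrix, right-hand side, and fixed factors are made-up illustration values; the paper's learned parameters, complex channel matrices, and network structure are not reproduced here, and `sor_unfolded` is a hypothetical name.

```python
def sor_unfolded(A, b, omegas):
    """Solve A x = b with one SOR sweep per relaxation factor in `omegas`.

    Each sweep stands in for one unfolded layer; in a deep-unfolding design
    the factors would be learned rather than fixed by hand (assumption).
    """
    n = len(b)
    x = [0.0] * n
    for omega in omegas:
        for i in range(n):
            # x is updated in place, so entries j < i already hold the
            # values from the current sweep and entries j > i the previous one.
            sigma = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (1.0 - omega) * x[i] + omega * (b[i] - sigma) / A[i][i]
    return x


# Toy symmetric, strictly diagonally dominant system (illustrative values).
A = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
x_true = [1.0, 2.0, 3.0]
b = [sum(A[i][j] * x_true[j] for j in range(3)) for i in range(3)]

x_est = sor_unfolded(A, b, omegas=[1.1] * 25)
max_error = max(abs(e - t) for e, t in zip(x_est, x_true))
print(x_est, max_error)
```

Passing a short list of factors, for example three of them, gives a truncated solver whose accuracy depends on how well those factors are chosen, which is the quantity a deep-unfolding approach would train.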

Instance Segmentation in the Dark

Apr 27, 2023

Abstract:Existing instance segmentation techniques are primarily tailored for high-visibility inputs, but their performance significantly deteriorates in extremely low-light environments. In this work, we take a deep look at instance segmentation in the dark and introduce several techniques that substantially boost the low-light inference accuracy. The proposed method is motivated by the observation that noise in low-light images introduces high-frequency disturbances to the feature maps of neural networks, thereby significantly degrading performance. To suppress this "feature noise", we propose a novel learning method that relies on an adaptive weighted downsampling layer, a smooth-oriented convolutional block, and disturbance suppression learning. These components effectively reduce feature noise during downsampling and convolution operations, enabling the model to learn disturbance-invariant features. Furthermore, we discover that high-bit-depth RAW images can better preserve richer scene information in low-light conditions compared to typical camera sRGB outputs, thus supporting the use of RAW-input algorithms. Our analysis indicates that high bit-depth can be critical for low-light instance segmentation. To mitigate the scarcity of annotated RAW datasets, we leverage a low-light RAW synthetic pipeline to generate realistic low-light data. In addition, to facilitate further research in this direction, we capture a real-world low-light instance segmentation dataset comprising over two thousand paired low/normal-light images with instance-level pixel-wise annotations. Remarkably, without any image preprocessing, we achieve satisfactory performance on instance segmentation in very low light (4% AP higher than state-of-the-art competitors), meanwhile opening new opportunities for future research.
