Mingyu Zhou

DAM: A Universal Dual Attention Mechanism for Multimodal Timeseries Cryptocurrency Trend Forecasting

May 01, 2024

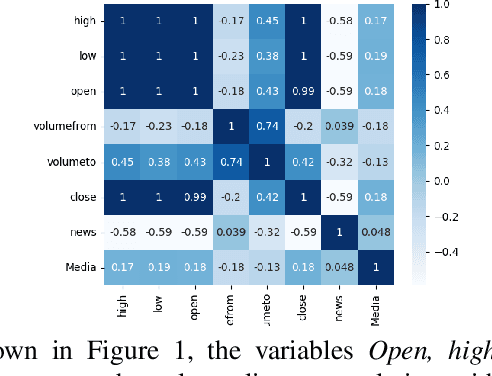

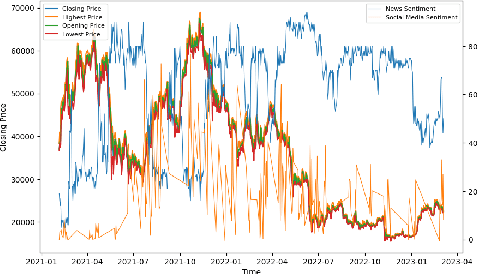

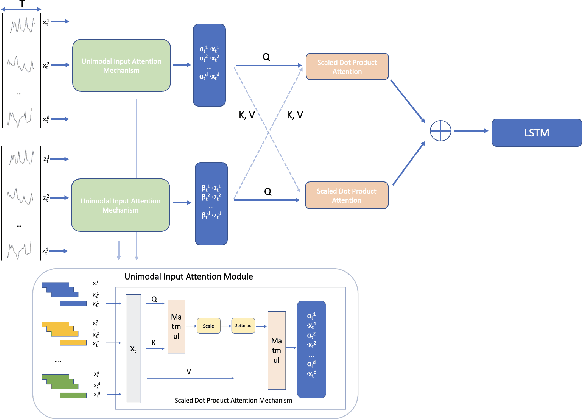

Abstract: In the distributed-systems landscape, blockchain has catalyzed the rise of cryptocurrencies, combining enhanced security and decentralization with significant investment opportunities. Despite this potential, current research on cryptocurrency trend forecasting often falls short by simplistically merging sentiment data without fully considering the nuanced interplay between financial market dynamics and external sentiment influences. This paper presents a novel Dual Attention Mechanism (DAM) for forecasting cryptocurrency trends using multimodal time-series data. Our approach, which integrates critical cryptocurrency metrics with sentiment data from news and social media analyzed through CryptoBERT, addresses the inherent volatility and prediction challenges of cryptocurrency markets. By combining elements of distributed systems, natural language processing, and financial forecasting, our method outperforms conventional models such as LSTM and Transformer by up to 20% in prediction accuracy. This advancement deepens the understanding of distributed systems and has practical implications for financial markets, benefiting stakeholders in cryptocurrency and blockchain technologies. Moreover, our enhanced forecasting approach can significantly support decentralized science (DeSci) by facilitating strategic planning and the efficient adoption of blockchain technologies, improving operational efficiency and financial risk management in the rapidly evolving digital asset domain and thus supporting optimal resource allocation.
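The abstract does not spell out the internals of DAM. As a rough illustration only, the sketch below assumes one plausible reading of "dual attention": a temporal self-attention branch over the market-metric series and a cross-attention branch in which market features query sentiment embeddings (for example, CryptoBERT outputs), with the two outputs concatenated. The function names, shapes, and random projection weights are hypothetical stand-ins, not the authors' architecture.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    # Scaled dot-product attention: softmax(q k^T / sqrt(d)) v.
    d = q.shape[-1]
    weights = softmax(q @ k.T / np.sqrt(d), axis=-1)
    return weights @ v, weights

def dual_attention(market, sentiment, rng):
    """Hypothetical two-branch fusion (not the paper's exact design).

    market:    (T, d) market-metric features per time step
    sentiment: (T, d) sentiment embeddings per time step (e.g. from a text encoder)
    """
    d = market.shape[1]
    # Random projections stand in for learned weight matrices (shared by both branches here).
    wq, wk, wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
    # Branch 1: temporal self-attention over the market series.
    temporal, _ = attention(market @ wq, market @ wk, market @ wv)
    # Branch 2: cross-attention; market queries attend to sentiment keys/values.
    cross, cross_w = attention(market @ wq, sentiment @ wk, sentiment @ wv)
    # Concatenate both views along the feature axis for a downstream trend classifier.
    return np.concatenate([temporal, cross], axis=1), cross_w

rng = np.random.default_rng(0)
T, d = 8, 4
fused, w = dual_attention(rng.standard_normal((T, d)), rng.standard_normal((T, d)), rng)
print(fused.shape)  # (8, 8)
```

Keeping the two branches separate lets the model weigh price history and sentiment independently before fusion, which is the kind of interplay the abstract argues simple concatenation misses.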

Quasi-Synchronous Random Access for Massive MIMO-Based LEO Satellite Constellations

Apr 10, 2023

Abstract: Low Earth orbit (LEO) satellite constellation-enabled communication networks are expected to be an important part of many Internet of Things (IoT) deployments due to their unique advantage of providing seamless global coverage. In this paper, we investigate the random access problem in massive multiple-input multiple-output (MIMO)-based LEO satellite systems, where a multi-satellite cooperative processing mechanism is considered. Specifically, at the edge satellite nodes, we conceive a training-sequence-padded multi-carrier system to overcome the issue of imperfect synchronization, where the training sequence is used to detect the devices' activity and estimate their channels. Considering the inherent sparsity of terrestrial-satellite links and the sporadic traffic of IoT terminals, we use the orthogonal approximate message passing-multiple measurement vector algorithm to estimate the delay coefficients and user terminal activity. To further exploit the structure of the receive array, a two-dimensional estimation of signal parameters via rotational invariance techniques (2D-ESPRIT) method is applied to enhance channel estimation. Finally, at the central server node, we propose a majority voting scheme that enhances activity detection by aggregating backhaul information from multiple satellites. Moreover, multi-satellite cooperative linear data detection and multi-satellite cooperative Bayesian dequantization data detection are proposed to cope with perfect and quantized backhaul, respectively. Simulation results verify the effectiveness of the proposed schemes in terms of channel estimation, activity detection, and data detection for quasi-synchronous random access in satellite systems.
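The majority voting step at the central server can be illustrated with a plain, unweighted vote over per-satellite activity decisions. This is a minimal sketch assuming each satellite forwards a hard 0/1 decision per device over the backhaul; the paper's scheme may use different inputs or weighting, and `majority_vote` is a hypothetical helper.

```python
import numpy as np

def majority_vote(decisions):
    """Fuse per-satellite activity decisions.

    decisions: (S, K) array of 0/1 flags, one row per satellite, one column per device.
    A device is declared active when more than half of the satellites flag it.
    """
    decisions = np.asarray(decisions, dtype=int)
    votes = decisions.sum(axis=0)
    return (2 * votes > decisions.shape[0]).astype(int)

# Three satellites, five devices (0, 2 truly active). The second satellite misses
# device 0 and falsely flags device 3; the third falsely flags device 4.
reports = [
    [1, 0, 1, 0, 0],
    [0, 0, 1, 1, 0],
    [1, 0, 1, 0, 1],
]
print(majority_vote(reports))  # [1 0 1 0 0]
```

Each individual satellite makes an error here, yet the fused decision is correct, which is the motivation for aggregating backhaul information from multiple satellites.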

Next-Generation URLLC with Massive Devices: A Unified Semi-Blind Detection Framework for Sourced and Unsourced Random Access

Mar 20, 2023

Abstract: This paper proposes a unified semi-blind detection framework for sourced and unsourced random access (RA), which enables next-generation ultra-reliable low-latency communications (URLLC) with massive devices. Specifically, the active devices transmit their uplink access signals in a grant-free manner to realize ultra-low access latency. Meanwhile, the base station aims to achieve ultra-reliable data detection under severe inter-device interference without exploiting explicit channel state information (CSI). We first propose an efficient transmitter design, where a small amount of reference information (RI) is embedded in the access signal to resolve the inherent ambiguities incurred by the unknown CSI. At the receiver, we further develop a successive interference cancellation-based semi-blind detection scheme, where a bilinear generalized approximate message passing algorithm is used for joint channel and signal estimation (JCSE), while the embedded RI is exploited for ambiguity elimination. In particular, a rank selection approach and an RI-aided initialization strategy are incorporated to reduce the computational complexity of the algorithm and to enhance the reliability of JCSE, respectively. In addition, four enabling techniques are integrated to satisfy the stringent latency and reliability requirements of massive URLLC. Numerical results demonstrate that the proposed semi-blind detection framework offers a better scalability-latency-reliability tradeoff than state-of-the-art detection schemes dedicated to sourced or unsourced RA.
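Blind joint channel and signal estimation of Y = HX can recover each device's signal only up to an unknown complex scaling (and ordering), since HX = (HD^-1)(DX) for any invertible diagonal D. The sketch below shows, under noiseless assumptions, how one known reference symbol per device removes the scaling ambiguity. The RI position, the QPSK alphabet, and the helper name are illustrative assumptions rather than the paper's RI design, and permutation ambiguity is not handled.

```python
import numpy as np

def remove_scalar_ambiguity(x_hat, ri_pos, ri_sym):
    """Resolve per-device complex scaling using embedded reference symbols.

    x_hat:  (K, N) signal estimates, each row equal to the true row up to an unknown scalar
    ri_pos: index of the reference symbol within each row (assumed shared by all devices)
    ri_sym: (K,) known reference symbols
    """
    alpha = x_hat[:, ri_pos] / ri_sym      # estimated ambiguity factor per device
    return x_hat / alpha[:, None]          # undo the scaling row by row

rng = np.random.default_rng(1)
K, N = 3, 6
qpsk = np.array([1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]) / np.sqrt(2)
x = rng.choice(qpsk, size=(K, N))          # true symbols; column 0 acts as the RI
alpha_true = rng.standard_normal(K) + 1j * rng.standard_normal(K)
x_hat = alpha_true[:, None] * x            # what a blind factorization might return
x_rec = remove_scalar_ambiguity(x_hat, 0, x[:, 0])
print(np.allclose(x_rec, x))  # True
```

With noise, a single reference symbol gives a noisy estimate of the scaling; averaging over several RI symbols per device would reduce that error at the cost of extra overhead.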

Proximal Policy Optimization-based Transmit Beamforming and Phase-shift Design in an IRS-aided ISAC System for the THz Band

Mar 21, 2022

Abstract: In this paper, an IRS-aided integrated sensing and communications (ISAC) system operating in the terahertz (THz) band is proposed to maximize the system capacity. Transmit beamforming and phase-shift design are transformed into a universal optimization problem with ergodic constraints. The joint optimization of transmit beamforming and phase-shift design is then achieved by gradient-based, primal-dual proximal policy optimization (PPO) in the multi-user multiple-input single-output (MISO) scenario. Specifically, the actor part generates continuous transmit beamforming and the critic part takes charge of discrete phase-shift design. Building on the MISO scenario, we further investigate a distributed PPO (DPPO) framework with the concept of multi-threaded learning in the multi-user multiple-input multiple-output (MIMO) scenario. Simulation results demonstrate the effectiveness of the primal-dual PPO algorithm and its multi-threaded version in terms of transmit beamforming and phase-shift design.
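For reference, the standard PPO clipped surrogate objective, together with a generic projected dual step for a Lagrange multiplier attached to an average (ergodic) constraint, can be written as below. This is a textbook-style sketch: the abstract does not give the paper's exact primal-dual formulation, so `dual_update` and its sign convention (a constraint of the form E[g] >= c) are assumptions.

```python
import numpy as np

def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Standard PPO clipped surrogate objective (to be maximized)."""
    ratio = np.exp(logp_new - logp_old)            # pi_new(a|s) / pi_old(a|s)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantages
    return float(np.mean(np.minimum(unclipped, clipped)))

def dual_update(lam, g_avg, c, lr):
    """Projected dual step for a constraint E[g] >= c (hypothetical sign convention).

    The multiplier grows while the constraint is violated (g_avg < c)
    and is kept non-negative.
    """
    return max(0.0, lam - lr * (g_avg - c))

logp_old = np.zeros(2)
logp_new = np.log([1.5, 0.5])                      # probability ratios 1.5 and 0.5
adv = np.array([1.0, -1.0])
print(f"{ppo_clip_objective(logp_new, logp_old, adv):.3f}")  # 0.200
print(dual_update(0.5, g_avg=0.0, c=1.0, lr=0.1))            # 0.6
```

In the example, clipping caps the first ratio at 1.2 and the pessimistic minimum selects 0.8 x (-1) for the second sample, so the objective is the mean of 1.2 and -0.8. In a primal-dual scheme, the policy would maximize the reward plus the multiplier times the constraint slack, while the multiplier is updated as shown.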

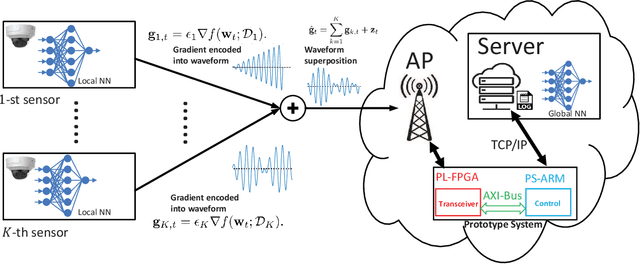

Over-the-Air Aggregation for Federated Learning: Waveform Superposition and Prototype Validation

Oct 27, 2021

Abstract: In this paper, we develop an orthogonal-frequency-division-multiplexing (OFDM)-based over-the-air (OTA) aggregation solution for wireless federated learning (FL). In particular, the local gradients of massive IoT devices are modulated onto an analog waveform and then transmitted over the same wireless resources. The key challenge is therefore achieving perfect waveform superposition, which is difficult due to frame timing offset (TO) and carrier frequency offset (CFO). To address these issues, we propose a two-stage waveform pre-equalization technique with a customized multiple access protocol that can estimate and then mitigate the TO and CFO for OTA aggregation. Based on the proposed solution, we develop a hardware transceiver and application software to train a real-world FL task, which learns a deep neural network to predict the received signal strength from global positioning system information. Experiments verify that the proposed OTA aggregation solution achieves performance comparable to offline learning procedures, with high prediction accuracy.
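The role of CFO pre-equalization in OTA aggregation can be seen in a toy baseband model: each device sends its gradient as real amplitudes, the channel applies a device-specific phase ramp exp(j2πεn/N), and the receiver simply sums what arrives. The sketch assumes the normalized CFOs are known exactly at the transmitters and ignores timing offset, fading, and noise, so it shows only why pre-rotation restores a clean superposition, not the paper's two-stage protocol. All function names are hypothetical.

```python
import numpy as np

def cfo_rotation(n_samples, eps, n_fft):
    # Phase ramp exp(j*2*pi*eps*n/N) that a normalized CFO eps imposes on sample n.
    n = np.arange(n_samples)
    return np.exp(2j * np.pi * eps * n / n_fft)

def ota_aggregate(grads, cfos, n_fft, pre_equalize=True):
    """Superimpose analog-modulated gradients over a shared channel.

    grads: (K, L) local gradients, one row per device, sent as real amplitudes
    cfos:  (K,) normalized CFOs, assumed perfectly known when pre_equalize is True
    """
    received = np.zeros(grads.shape[1], dtype=complex)
    for g, eps in zip(grads, cfos):
        rot = cfo_rotation(g.size, eps, n_fft)
        tx = g / rot if pre_equalize else g   # transmitter pre-rotates by the inverse ramp
        received += tx * rot                  # the channel applies this device's CFO
    return received.real

rng = np.random.default_rng(2)
grads = rng.standard_normal((4, 64))
cfos = rng.uniform(-0.5, 0.5, size=4)
target = grads.sum(axis=0)
print(np.allclose(ota_aggregate(grads, cfos, 64), target))         # True
print(np.allclose(ota_aggregate(grads, cfos, 64, False), target))  # False
```

Without pre-equalization, each device's contribution is scaled by cos(2πεn/N), so the aggregated gradient is distorted sample by sample; this is why the offsets must be estimated and compensated before superposition.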