Haijun Zhang

One-Shot Federated Clustering of Non-Independent Completely Distributed Data

Jan 24, 2026

Abstract: Federated Learning (FL), which extracts data knowledge while protecting the privacy of multiple clients, has achieved remarkable results in distributed privacy-preserving IoT systems, including smart traffic flow monitoring and smart grid load balancing. Since most data collected from edge devices are unlabeled, unsupervised Federated Clustering (FC) is becoming increasingly popular for exploring pattern knowledge from complex distributed data. However, due to the lack of label guidance, the common Non-Independent and Identically Distributed (Non-IID) issue among clients greatly challenges FC by posing the following questions: how to fuse pattern knowledge (i.e., cluster distributions) from Non-IID clients; how the cluster distributions among clients are related; and how this relationship connects with the global knowledge fusion. In this paper, a trickier but overlooked phenomenon in Non-IID data is revealed, which bottlenecks the clustering performance of existing FC approaches: a single cluster can be fragmented across different clients. Accordingly, a more generalized Non-IID concept, Non-ICD (Non-Independent Completely Distributed), is derived. To tackle the above FC challenges, a new framework named GOLD (Global Oriented Local Distribution Learning) is proposed. GOLD first finely explores the potentially incomplete local cluster distributions of clients, then uploads a summary of these distributions to the server for global fusion, and finally performs local cluster enhancement under the guidance of the global distribution. Extensive experiments, including significance tests, ablation studies, scalability evaluations, and qualitative results, have been conducted to show the superiority of GOLD.

Chain-of-Thought Compression Should Not Be Blind: V-Skip for Efficient Multimodal Reasoning via Dual-Path Anchoring

Jan 21, 2026

Abstract: While Chain-of-Thought (CoT) reasoning significantly enhances the performance of Multimodal Large Language Models (MLLMs), its autoregressive nature incurs prohibitive latency. Current efforts to mitigate this via token compression often fail by blindly applying text-centric metrics to multimodal contexts. We identify a critical failure mode termed Visual Amnesia, where tokens that are linguistically redundant but visually salient are erroneously pruned, leading to hallucinations. To address this, we introduce V-Skip, which reformulates token pruning as a Visual-Anchored Information Bottleneck (VA-IB) optimization problem. V-Skip employs a dual-path gating mechanism that weighs token importance through both linguistic surprisal and cross-modal attention flow, effectively rescuing visually salient anchors. Extensive experiments on the Qwen2-VL and Llama-3.2 families demonstrate that V-Skip achieves a $2.9\times$ speedup with negligible accuracy loss. Specifically, it preserves fine-grained visual details, outperforming other baselines by over 30% on DocVQA.

Towards a Theoretical Framework for Robust Node Deployment in Cooperative ISAC Networks

Jan 03, 2026

Abstract: This paper investigates node deployment strategies for robust multi-node cooperative localization in integrated sensing and communication (ISAC) networks. We first analyze how steering vector correlation across different positions affects localization performance and introduce a novel distance-weighted correlation metric to characterize this effect. Building upon this insight, we propose a deployment optimization framework that minimizes the maximum weighted steering vector correlation by jointly optimizing node positions and array orientations, thereby enhancing worst-case network robustness. A genetic algorithm (GA) is then developed to solve this min-max optimization problem. Extensive simulations using both multiple signal classification (MUSIC) and neural-network (NN)-based localization validate the effectiveness of the proposed methods, demonstrating significant improvements in robust localization performance.

No Vision, No Wearables: 5G-based 2D Human Pose Recognition with Integrated Sensing and Communications

Dec 31, 2025

Abstract: With the increasing maturity of contactless human pose recognition (HPR) technology, indoor interactive applications have raised higher demands for natural, controller-free interaction methods. However, current mainstream HPR solutions relying on vision or radio-frequency (RF) sensing (including WiFi and radar) still face various challenges in practical deployment, such as privacy concerns, susceptibility to occlusion, the need for dedicated equipment and functions, and limited sensing resolution and range. 5G-based integrated sensing and communication (ISAC) technology, by merging communication and sensing functions, offers a new approach to address these challenges in contactless HPR. We propose a practical 5G-based ISAC system capable of performing 2D HPR from uplink sounding reference signals (SRS). Specifically, we extract rich features from multiple domains and employ an encoder to achieve unified alignment and representation in a latent space. Subsequently, the low-dimensional features are fused to output the human pose state. Experimental results demonstrate that in typical indoor environments, our proposed 5G-based ISAC HPR system significantly outperforms current mainstream baseline solutions in HPR performance, providing a solid technical foundation for universal human-computer interaction.

Empower Low-Altitude Economy: A Reliability-Aware Dynamic Weighting Allocation for Multi-modal UAV Beam Prediction

Dec 30, 2025

Abstract: The low-altitude economy (LAE) is rapidly expanding, driven by urban air mobility, logistics drones, and aerial sensing, and fast, accurate beam prediction in uncrewed aerial vehicle (UAV) communications is crucial for achieving reliable connectivity. Current research is shifting from single-signal to multi-modal collaborative approaches. However, existing multi-modal methods mostly employ fixed or empirical weights, assuming equal reliability across modalities at any given moment. In fact, the importance of different modalities fluctuates dramatically with UAV motion scenarios, and static weighting amplifies the negative impact of degraded modalities. Furthermore, modal mismatch and weak alignment undermine cross-scenario generalization. To this end, we propose a reliability-aware dynamic weighting scheme applied to a semantic-aware multi-modal beam prediction framework, named SaM2B. Specifically, SaM2B leverages lightweight cues such as environmental visual, flight posture, and geospatial data to adaptively allocate contributions across modalities at different time points through reliability-aware dynamic weight updates. Moreover, by utilizing cross-modal contrastive learning, we align the "multi-source representation beam semantics" associated with specific beam information to a shared semantic space, thereby enhancing discriminative power and robustness under modal noise and distribution shifts. Experiments on real-world low-altitude UAV datasets show that SaM2B achieves better results than baseline methods.
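The contrast between static and reliability-aware weighting described in this abstract can be illustrated with a minimal sketch. It is not the SaM2B implementation: the function names, the per-modality reliability scores, and the softmax rule used to turn scores into weights are all assumptions made here for illustration.

```python
import math


def reliability_weights(scores, temperature=1.0):
    """Turn per-modality reliability scores into weights that sum to 1.

    A softmax is assumed here; the abstract does not state the update rule.
    """
    top = max(scores)
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(features, scores, temperature=1.0):
    """Weighted sum of equally sized modality feature vectors."""
    weights = reliability_weights(scores, temperature)
    dim = len(features[0])
    fused = [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]
    return weights, fused


# Hypothetical features for three modalities (visual, posture, geospatial).
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Hypothetical reliability scores: the visual modality is degraded here.
scores = [-2.0, 1.0, 1.0]
weights, fused = fuse(features, scores)
```

With equal scores this reduces to a plain average, which is the static weighting the abstract argues against; lowering one score shrinks that modality's contribution at that time step.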

Diversity Recommendation via Causal Deconfounding of Co-purchase Relations and Counterfactual Exposure

Dec 19, 2025

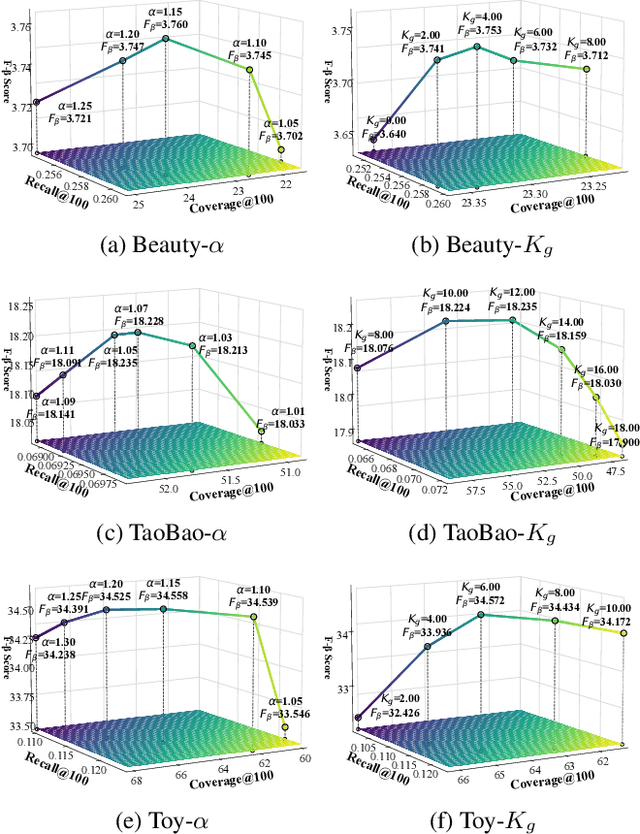

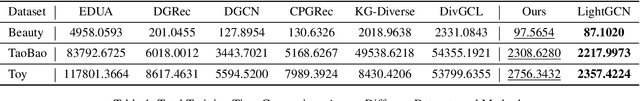

Abstract: Beyond user-item modeling, item-to-item relationships are increasingly used to enhance recommendation. However, common methods largely rely on co-occurrence, making them prone to confounding by item popularity and user attributes, which degrades embedding quality and performance. Meanwhile, although diversity is acknowledged as a key aspect of recommendation quality, existing research pays limited attention to it, with a notable lack of causal perspectives and theoretical grounding. To address these challenges, we propose Cadence: Diversity Recommendation via Causal Deconfounding of Co-purchase Relations and Counterfactual Exposure - a plug-and-play framework built upon LightGCN as the backbone, primarily designed to enhance recommendation diversity while preserving accuracy. First, we compute the Unbiased Asymmetric Co-purchase Relationship (UACR) between items - excluding item popularity and user attributes - to construct a deconfounded directed item graph, with an aggregation mechanism to refine embeddings. Second, we leverage UACR to identify diverse categories of items that exhibit strong causal relevance to a user's interacted items but have not yet been engaged with. We then simulate their behavior under high-exposure scenarios, thereby significantly enhancing recommendation diversity while preserving relevance. Extensive experiments on real-world datasets demonstrate that our method consistently outperforms state-of-the-art diversity models in both diversity and accuracy, and further validate its effectiveness, transferability, and efficiency over baselines.

Jamming Identification with Differential Transformer for Low-Altitude Wireless Networks

Aug 17, 2025

Abstract: Wireless jamming identification, which detects and classifies electromagnetic jamming from non-cooperative devices, is crucial for emerging low-altitude wireless networks consisting of many drone terminals that are highly susceptible to electromagnetic jamming. However, jamming identification schemes adopting deep learning (DL) are vulnerable to attacks involving carefully crafted adversarial samples, resulting in inevitable robustness degradation. To address this issue, we propose a differential transformer framework for wireless jamming identification. First, we introduce a differential transformer network to distinguish jamming signals, which suppresses attention noise relative to its traditional counterpart by performing self-attention operations in a differential manner. Second, we propose a randomized masking training strategy to improve network robustness, which leverages the patch partitioning mechanism inherent to transformer architectures to create parallel feature extraction branches. Each branch operates on a distinct, randomly masked subset of patches, which fundamentally constrains the propagation of adversarial perturbations across the network. Additionally, the ensemble effect generated by fusing predictions from these diverse branches demonstrates superior resilience against adversarial attacks. Finally, we introduce a novel consistent training framework that significantly enhances adversarial robustness through dual-branch regularization. Simulation results demonstrate that our proposed methodology is superior to existing methods in boosting robustness to adversarial samples.
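To make "self-attention in a differential manner" concrete, the sketch below computes two softmax attention maps and subtracts one from the other before applying the result to the values, which is the general idea behind differential attention. It is an assumption-laden illustration rather than the network from this paper: the function names, the fixed scalar `lam`, and the single-head, plain-list formulation are chosen here for brevity.

```python
import math


def softmax(row):
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_map(q, k):
    """Row-wise softmax of scaled dot products between queries and keys."""
    scale = 1.0 / math.sqrt(len(q[0]))
    return [
        softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        for qi in q
    ]


def differential_attention(q1, k1, q2, k2, v, lam=0.5):
    """Subtract a second attention map (scaled by lam) from the first.

    Components common to both maps cancel, which is the intended
    noise-suppression effect. lam is fixed here; treating it as a
    constant is an assumption of this sketch.
    """
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    diff = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    out = [
        [sum(w * vj[c] for w, vj in zip(row, v)) for c in range(len(v[0]))]
        for row in diff
    ]
    return diff, out


# Toy inputs: two tokens, two-dimensional queries, keys, and values.
q1 = [[1.0, 0.0], [0.0, 1.0]]
k1 = [[1.0, 0.0], [0.0, 1.0]]
q2 = [[0.5, 0.5], [0.5, 0.5]]
k2 = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
diff_map, output = differential_attention(q1, k1, q2, k2, v)
```

Each row of the differential map sums to `1 - lam`, since both softmax maps have rows summing to 1.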

Efficient Edge LLMs Deployment via HessianAware Quantization and CPU GPU Collaborative

Aug 10, 2025

Abstract: With the breakthrough progress of large language models (LLMs) in natural language processing and multimodal tasks, efficiently deploying them on resource-constrained edge devices has become a critical challenge. The Mixture of Experts (MoE) architecture enhances model capacity through sparse activation, but faces two major difficulties in practical deployment: (1) the presence of numerous outliers in activation distributions leads to severe degradation in quantization accuracy for both activations and weights, significantly impairing inference performance; (2) under limited memory, efficient offloading and collaborative inference of expert modules struggle to balance latency and throughput. To address these issues, this paper proposes an efficient MoE edge deployment scheme based on Hessian-Aware Quantization (HAQ) and CPU-GPU collaborative inference. First, by introducing smoothed Hessian matrix quantization, we achieve joint 8-bit quantization of activations and weights, which significantly alleviates the accuracy loss caused by outliers while ensuring efficient implementation on mainstream hardware. Second, we design an expert-level collaborative offloading and inference mechanism, which, combined with expert activation path statistics, enables efficient deployment and scheduling of expert modules between CPU and GPU, greatly reducing memory footprint and inference latency. Extensive experiments validate the effectiveness of our method on mainstream large models such as the OPT series and Mixtral 8x7B: on datasets like Wikitext2 and C4, the inference accuracy of the low-bit quantized model approaches that of the full-precision model, while GPU memory usage is reduced by about 60% and inference latency is significantly improved.
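Why activation outliers hurt 8-bit quantization, and how per-channel smoothing can help, is easier to see in a small sketch. The code below is not the HAQ method and uses no Hessian information; it shows generic symmetric int8 quantization plus a per-channel rescaling that moves magnitude from activations to weights. The function names and the `alpha` balancing exponent are assumptions introduced for illustration.

```python
def quantize_int8(values):
    """Symmetric quantization to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    return [c * scale for c in codes]


def smooth_scales(act_absmax, weight_absmax, alpha=0.5):
    """Per-channel factors s_j = a_j**alpha / w_j**(1 - alpha).

    Dividing activations by s_j and multiplying weights by s_j leaves each
    product x_j * w_j unchanged while shrinking activation outliers.
    Inputs are assumed to be strictly positive.
    """
    return [(a ** alpha) / (w ** (1.0 - alpha)) for a, w in zip(act_absmax, weight_absmax)]


# One outlier activation channel (50.0) forces a coarse shared scale.
acts = [0.3, 50.0]
weights = [1.0, 0.5]
s = smooth_scales([abs(a) for a in acts], [abs(w) for w in weights])
smoothed_acts = [a / sj for a, sj in zip(acts, s)]
smoothed_weights = [w * sj for w, sj in zip(weights, s)]

codes, scale = quantize_int8(smoothed_acts)
recovered = dequantize(codes, scale)
```

In this toy case the outlier channel shrinks from 50.0 to about 5.0, so the quantization step for the activations becomes roughly ten times finer, at the cost of larger weights in that channel.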

Model Splitting Enhanced Communication-Efficient Federated Learning for CSI Feedback

Jun 04, 2025

Abstract: Recent advancements have introduced federated machine learning-based channel state information (CSI) compression before the user equipments (UEs) upload the downlink CSI to the base transceiver station (BTS). However, most existing algorithms impose a high communication overhead due to frequent parameter exchanges between UEs and BTS. In this work, we propose a model splitting approach with a shared model at the BTS and multiple local models at the UEs to reduce communication overhead. Moreover, we embed a pipeline module at the BTS to reduce training time. By limiting exchanges to boundary parameters during forward and backward passes, our algorithm significantly reduces the number of exchanged parameters relative to the benchmarks during federated CSI feedback training.

COutfitGAN: Learning to Synthesize Compatible Outfits Supervised by Silhouette Masks and Fashion Styles

Feb 12, 2025

Abstract: How to recommend outfits has gained considerable attention in both academia and industry in recent years. Many studies have been carried out regarding fashion compatibility learning, to determine whether the fashion items in an outfit are compatible or not. These methods mainly focus on evaluating the compatibility of existing outfits and rarely consider applying such knowledge to 'design' new fashion items. We propose the new task of generating complementary and compatible fashion items based on an arbitrary number of given fashion items. In particular, given some fashion items that can make up an outfit, the aim of this paper is to synthesize photo-realistic images of other, complementary, fashion items that are compatible with the given ones. To achieve this, we propose an outfit generation framework, referred to as COutfitGAN, which includes a pyramid style extractor, an outfit generator, a UNet-based real/fake discriminator, and a collocation discriminator. To train and evaluate this framework, we collected a large-scale fashion outfit dataset with over 200K outfits and 800K fashion items from the Internet. Extensive experiments show that COutfitGAN outperforms other baselines in terms of similarity, authenticity, and compatibility measurements.
